Building a Cloud-Native Storage Environment with Rook in KubeSphere

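Introduction to Rook

Rook is an open-source cloud-native storage orchestrator. It provides the platform, framework and support for a variety of storage solutions so that they integrate natively with cloud-native environments.

Rook turns distributed storage systems into self-managing, self-scaling, self-healing storage services. It automates storage administrator tasks such as deployment, bootstrapping, configuration, provisioning, scaling, upgrading, migration, disaster recovery, monitoring and resource management.

In short, Rook is a set of Kubernetes Operators that take full control of the deployment, management and automatic recovery of several data storage solutions (for example Ceph, EdgeFS, Minio and Cassandra).

So far, the most stable storage backend supported by Rook is still Ceph. This article shows how to use Rook to create and maintain a Ceph cluster and use it as persistent storage for Kubernetes.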

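Preparing the environment

The K8s environment can be deployed by installing KubeSphere; I used the high-availability setup.

To install KubeSphere on a public cloud, see the documentation: Multi-node installation [1]

Note: the nodes kube-node5, kube-node6 and kube-node7 each have two data disks.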

```
kube-master1   Ready    master   118d   v1.17.9
kube-master2   Ready    master   118d   v1.17.9
kube-master3   Ready    master   118d   v1.17.9
kube-node1     Ready    worker   118d   v1.17.9
kube-node2     Ready    worker   118d   v1.17.9
kube-node3     Ready    worker   111d   v1.17.9
kube-node4     Ready    worker   111d   v1.17.9
kube-node5     Ready    worker   11d    v1.17.9
kube-node6     Ready    worker   11d    v1.17.9
kube-node7     Ready    worker   11d    v1.17.9
```

Before installing, make sure lvm2 is installed on all worker nodes; otherwise the deployment will fail with errors.
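
A minimal sketch for checking this on a node, assuming the distribution can be identified from the `ID` field of `/etc/os-release` and uses `yum` or `apt-get`. The helper names `lvm2_install_cmd` and `check_lvm2` are hypothetical, not part of Rook:

```bash
#!/usr/bin/env bash
# Sketch: make sure lvm2 is present on a node before deploying Rook/Ceph.
# Helper names are illustrative only.

# Print the lvm2 install command for a given /etc/os-release ID (assumed mapping).
lvm2_install_cmd() {
  case "$1" in
    centos|rhel|fedora|rocky|almalinux) echo "yum install -y lvm2" ;;
    ubuntu|debian) echo "apt-get install -y lvm2" ;;
    *) return 1 ;;  # unknown distro: let the caller decide
  esac
}

# Return 0 if the lvm binary is available; otherwise print a hint and return 1.
check_lvm2() {
  if command -v lvm >/dev/null 2>&1; then
    echo "lvm2 is installed"
    return 0
  fi
  local id cmd
  # /etc/os-release is a shell-compatible KEY=value file; read ID in a subshell.
  id="$(. /etc/os-release 2>/dev/null && echo "$ID")"
  if cmd="$(lvm2_install_cmd "$id")"; then
    echo "lvm2 missing, install it with: $cmd"
  else
    echo "lvm2 missing, install the lvm2 package for distro '${id:-unknown}'"
  fi
  return 1
}
```

Run `check_lvm2` on each node (for example over ssh); it only reports and never installs anything itself.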

Deploying Rook and the Ceph cluster

1. Clone the Rook repository

```bash
$ git clone -b release-1.4 https://github.com/rook/rook.git
```

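2. Change into the Ceph example directory (in my case the repository was cloned under `/root/ceph`)

```bash
$ cd /root/ceph/rook/cluster/examples/kubernetes/ceph
```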

3. Deploy Rook and create the CRD resources

```bash
$ kubectl create -f common.yaml -f operator.yaml
# Notes:
# 1. common.yaml mainly contains the RBAC permissions and the CRD definitions
# 2. operator.yaml is the Deployment for rook-ceph-operator
```
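
Before creating the cluster it helps to wait until the operator is up. A sketch, assuming the stock namespace `rook-ceph` and Deployment name `rook-ceph-operator` from `operator.yaml`; the function names are illustrative:

```bash
#!/usr/bin/env bash
# Sketch: wait for the Rook operator and confirm a CRD from common.yaml exists.
# Namespace and names follow the stock example manifests (assumption).
NAMESPACE="${NAMESPACE:-rook-ceph}"

wait_for_operator() {
  # Blocks until the Deployment rollout completes or 5 minutes pass.
  kubectl -n "$NAMESPACE" rollout status deployment/rook-ceph-operator --timeout=300s
}

check_crds() {
  # The CephCluster CRD must be registered before cluster.yaml can be applied.
  kubectl get crd cephclusters.ceph.rook.io
}
```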

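4. Create the Ceph cluster

```bash
$ kubectl create -f cluster.yaml
# Important:
# This demo uses no customization. By default the Ceph cluster dynamically discovers
# unformatted, empty disks on the nodes and automatically initializes them as OSDs
# (at least 3 nodes are required, each with at least one empty disk).
```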
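
Whether a disk counts as "empty" can be checked beforehand. The sketch below approximates it with `lsblk`: a disk with no child devices (partitions, LVs) and no filesystem signature. This is only an approximation of Rook's own device filtering, which applies further checks, and device names such as `/dev/vdb` are placeholders:

```bash
#!/usr/bin/env bash
# Sketch: report whether a disk looks usable as a Rook OSD, i.e. it has no
# partitions/child devices and no filesystem signature (approximation only).

is_blank_disk() {
  local dev="$1" children fstype
  # -n: no header; lists the device itself plus any partitions/LVs below it
  children="$(lsblk -n -o NAME "$dev" | wc -l)"
  # -d: the device only; an empty FSTYPE means no filesystem signature found
  fstype="$(lsblk -n -d -o FSTYPE "$dev" | tr -d '[:space:]')"
  [ "$children" -eq 1 ] && [ -z "$fstype" ]
}

# Check several candidate disks, e.g. the data disks on kube-node5/6/7.
check_disks() {
  local dev
  for dev in "$@"; do
    if is_blank_disk "$dev"; then
      echo "$dev: OK for OSD"
    else
      echo "$dev: has partitions or a filesystem"
    fi
  done
}
```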

5. Check the pod status

```
$ kubectl get pod -n rook-ceph -o wide
NAME                                                   READY   STATUS      RESTARTS   AGE   IP               NODE         NOMINATED NODE   READINESS GATES
csi-cephfsplugin-5fw92                                 3/3     Running     6          12d   192.168.0.31     kube-node7   <none>           <none>
csi-cephfsplugin-78plf                                 3/3     Running     0          12d   192.168.0.134    kube-node1   <none>           <none>
csi-cephfsplugin-bkdl8                                 3/3     Running     3          12d   192.168.0.195    kube-node5   <none>           <none>
csi-cephfsplugin-provisioner-77f457bcb9-6w4cv          6/6     Running     0          12d   10.233.77.95     kube-node4   <none>           <none>
csi-cephfsplugin-provisioner-77f457bcb9-q7vxh          6/6     Running     0          12d   10.233.76.156    kube-node3   <none>           <none>
csi-cephfsplugin-rqb4d                                 3/3     Running     0          12d   192.168.0.183    kube-node4   <none>           <none>
csi-cephfsplugin-vmrfj                                 3/3     Running     0          12d   192.168.0.91     kube-node3   <none>           <none>
csi-cephfsplugin-wglsw                                 3/3     Running     3          12d   192.168.0.116    kube-node6   <none>           <none>
csi-rbdplugin-4m8hv                                    3/3     Running     0          12d   192.168.0.91     kube-node3   <none>           <none>
csi-rbdplugin-7wt45                                    3/3     Running     3          12d   192.168.0.195    kube-node5   <none>           <none>
csi-rbdplugin-bn5pn                                    3/3     Running     3          12d   192.168.0.116    kube-node6   <none>           <none>
csi-rbdplugin-hwl4b                                    3/3     Running     6          12d   192.168.0.31     kube-node7   <none>           <none>
csi-rbdplugin-provisioner-7897f5855-7m95p              6/6     Running     0          12d   10.233.77.94     kube-node4   <none>           <none>
csi-rbdplugin-provisioner-7897f5855-btwt5              6/6     Running     0          12d   10.233.76.155    kube-node3   <none>           <none>
csi-rbdplugin-qvksp                                    3/3     Running     0          12d   192.168.0.183    kube-node4   <none>           <none>
csi-rbdplugin-rr296                                    3/3     Running     0          12d   192.168.0.134    kube-node1   <none>           <none>
rook-ceph-crashcollector-kube-node1-64cf6f49fb-bx8lz   1/1     Running     0          12d   10.233.101.46    kube-node1   <none>           <none>
rook-ceph-crashcollector-kube-node3-575b75dc64-gxwtp   1/1     Running     0          12d   10.233.76.149    kube-node3   <none>           <none>
rook-ceph-crashcollector-kube-node4-78549d6d7f-9zz5q   1/1     Running     0          8d    10.233.77.226    kube-node4   <none>           <none>
rook-ceph-crashcollector-kube-node5-5db8557476-b8zp6   1/1     Running     1          11d   10.233.81.239    kube-node5   <none>           <none>
rook-ceph-crashcollector-kube-node6-78b7946769-8qh45   1/1     Running     0          8d    10.233.66.252    kube-node6   <none>           <none>
rook-ceph-crashcollector-kube-node7-78c97898fd-k85l4   1/1     Running     1          8d    10.233.111.33    kube-node7   <none>           <none>
rook-ceph-mds-myfs-a-86bdb684b6-4pbj7                  1/1     Running     0          8d    10.233.77.225    kube-node4   <none>           <none>
rook-ceph-mds-myfs-b-6697d66b7d-jgnkw                  1/1     Running     0          8d    10.233.66.250    kube-node6   <none>           <none>
rook-ceph-mgr-a-658db99d5b-jbrzh                       1/1     Running     0          12d   10.233.76.162    kube-node3   <none>           <none>
rook-ceph-mon-a-5cbf5947d8-vvfgf                       1/1     Running     1          12d   10.233.101.44    kube-node1   <none>           <none>
rook-ceph-mon-b-6495c96d9d-b82st                       1/1     Running     0          12d   10.233.76.144    kube-node3   <none>           <none>
rook-ceph-mon-d-dc4c6f4f9-rdfpg                        1/1     Running     1          12d   10.233.66.219    kube-node6   <none>           <none>
rook-ceph-operator-56fc54bb77-9rswg                    1/1     Running     0          12d   10.233.76.138    kube-node3   <none>           <none>
rook-ceph-osd-0-777979f6b4-jxtg9                       1/1     Running     1          11d   10.233.81.237    kube-node5   <none>           <none>
rook-ceph-osd-10-589487764d-8bmpd                      1/1     Running     0          8d    10.233.111.59    kube-node7   <none>           <none>
rook-ceph-osd-11-5b7dd4c7bc-m4nqz                      1/1     Running     0          8d    10.233.111.60    kube-node7   <none>           <none>
rook-ceph-osd-2-54cbf4d9d8-qn4z7                       1/1     Running     1          10d   10.233.66.222    kube-node6   <none>           <none>
rook-ceph-osd-6-c94cd566-ndgzd                         1/1     Running     1          10d   10.233.81.238    kube-node5   <none>           <none>
rook-ceph-osd-7-d8cdc94fd-v2lm8                        1/1     Running     0          9d    10.233.66.223    kube-node6   <none>           <none>
rook-ceph-osd-prepare-kube-node1-4bdch                 0/1     Completed   0          66m   10.233.101.91    kube-node1   <none>           <none>
rook-ceph-osd-prepare-kube-node3-bg4wk                 0/1     Completed   0          66m   10.233.76.252    kube-node3   <none>           <none>
rook-ceph-osd-prepare-kube-node4-r9dk4                 0/1     Completed   0          66m   10.233.77.107    kube-node4   <none>           <none>
rook-ceph-osd-prepare-kube-node5-rbvcn                 0/1     Completed   0          66m   10.233.81.73     kube-node5   <none>           <none>
rook-ceph-osd-prepare-kube-node5-rcngg                 0/1     Completed   5          10d   10.233.81.98     kube-node5   <none>           <none>
rook-ceph-osd-prepare-kube-node6-jc8cm                 0/1     Completed   0          66m   10.233.66.109    kube-node6   <none>           <none>
rook-ceph-osd-prepare-kube-node6-qsxrp                 0/1     Completed   0          11d   10.233.66.109    kube-node6   <none>           <none>
rook-ceph-osd-prepare-kube-node7-5c52p                 0/1     Completed   5          8d    10.233.111.58    kube-node7   <none>           <none>
rook-ceph-osd-prepare-kube-node7-h5d6c                 0/1     Completed   0          66m   10.233.111.110   kube-node7   <none>           <none>
rook-ceph-osd-prepare-kube-node7-tzvp5                 0/1     Completed   0          11d   10.233.111.102   kube-node7   <none>           <none>
rook-ceph-osd-prepare-kube-node7-wd6dt                 0/1     Completed   7          8d    10.233.111.56    kube-node7   <none>           <none>
rook-ceph-tools-64fc489556-5clvj                       1/1     Running     0          12d   10.233.77.118    kube-node4   <none>           <none>
rook-discover-6kbvg                                    1/1     Running     0          12d   10.233.101.42    kube-node1   <none>           <none>
rook-discover-7dr44                                    1/1     Running     2          12d   10.233.66.220    kube-node6   <none>           <none>
rook-discover-dqr82                                    1/1     Running     0          12d   10.233.77.74     kube-node4   <none>           <none>
rook-discover-gqppp                                    1/1     Running     0          12d   10.233.76.139    kube-node3   <none>           <none>
rook-discover-hdkxf                                    1/1     Running     1          12d   10.233.81.236    kube-node5   <none>           <none>
rook-discover-pzhsw                                    1/1     Running     3          12d   10.233.111.36    kube-node7   <none>           <none>
```

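The output above shows the state once all component pods are up. The pods whose names start with rook-ceph-osd-prepare are short-lived jobs (hence `Completed`) that prepare disks as OSDs; together with the rook-discover pods, which detect the disks on each node, they make OSD creation automatic: as soon as a new disk is attached to a node in the cluster, an OSD is created for it.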
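
With this many pods, spotting the unhealthy ones by eye is tedious. A small filter, assuming the default column order of `kubectl get pod` (NAME, READY, STATUS first, which also holds for `-o wide`); `unhealthy_pods` is a hypothetical helper:

```bash
#!/usr/bin/env bash
# Sketch: list pods from `kubectl get pod` output (stdin) that are not healthy.
# Completed pods (e.g. the rook-ceph-osd-prepare jobs) are treated as fine;
# Running pods must have all containers ready (READY x/x).
unhealthy_pods() {
  awk 'NR > 1 {
    split($2, ready, "/")
    if ($3 == "Completed") next
    if ($3 != "Running" || ready[1] != ready[2]) print $1, $2, $3
  }'
}
```

Usage: `kubectl get pod -n rook-ceph | unhealthy_pods` prints nothing when every pod is either Running with all containers ready or Completed.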

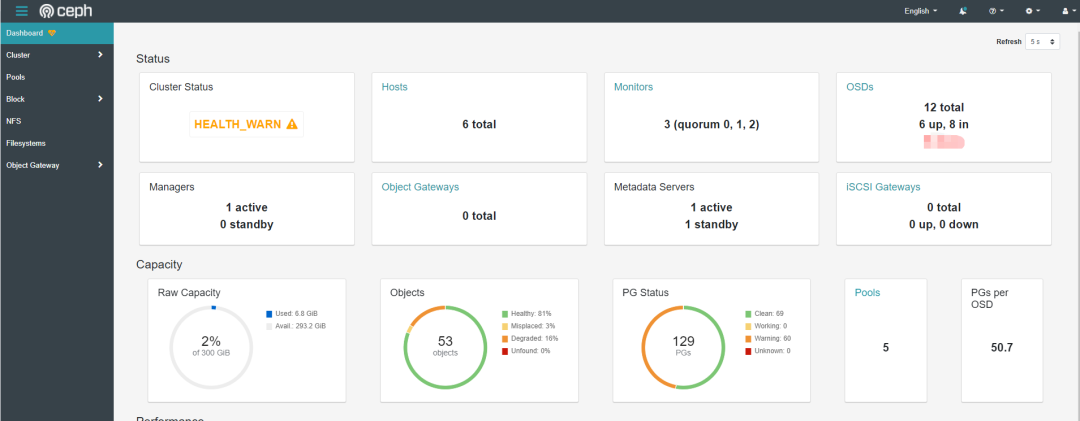

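6. Configure the Ceph Dashboard

The Ceph Dashboard is a built-in, web-based management and monitoring application that is part of the open-source Ceph distribution. It shows the basic status information of the Ceph cluster.

The default Ceph deployment already includes the dashboard, but its Service uses a ClusterIP address and cannot be reached from outside the cluster, so an additional Service has to be created: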

```bash
$ kubectl apply -f dashboard-external-http.yaml
```

```yaml
apiVersion: v1
kind: Service
metadata:
  name: rook-ceph-mgr-dashboard-external-https
  namespace: rook-ceph # namespace:cluster
  labels:
    app: rook-ceph-mgr
    rook_cluster: rook-ceph # namespace:cluster
spec:
  ports:
    - name: dashboard
      port: 7000
      protocol: TCP
      targetPort: 7000
  selector:
    app: rook-ceph-mgr
    rook_cluster: rook-ceph
  sessionAffinity: None
  type: NodePort
```

Note: 8443 is the HTTPS port and requires a certificate, so this tutorial only demonstrates HTTP access and configures only port 7000 under `ports`.
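
Rook serves the dashboard over HTTPS on 8443 by default; the mgr listens on 7000 only when SSL is disabled in the CephCluster spec (`dashboard.ssl: false` in `cluster.yaml`), which the 7000/TCP on the built-in Service in step 7 suggests was the case here. A sketch for checking which port the built-in Service exposes before pointing the NodePort at it, assuming the default Service name `rook-ceph-mgr-dashboard`:

```bash
#!/usr/bin/env bash
# Sketch: print scheme and port of Rook's built-in dashboard Service.
dashboard_scheme() {
  local port
  port="$(kubectl -n rook-ceph get svc rook-ceph-mgr-dashboard \
    -o jsonpath='{.spec.ports[0].port}')"
  case "$port" in
    7000) echo "http $port" ;;   # SSL disabled in the CephCluster spec
    8443) echo "https $port" ;;  # Rook's default (dashboard.ssl: true)
    *) echo "unknown ${port:-none}"; return 1 ;;
  esac
}
```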

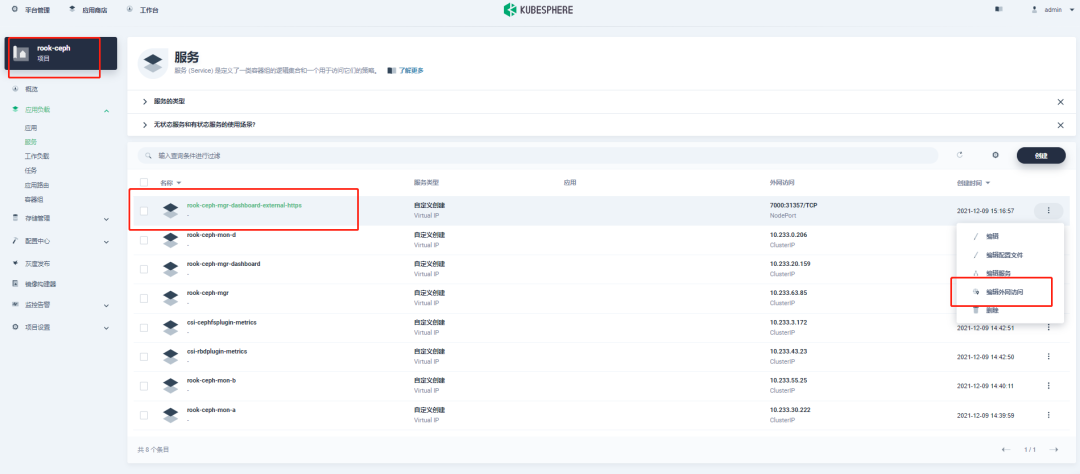

7. Check the Service status

```
$ kubectl get svc -n rook-ceph
NAME                                     TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)             AGE
csi-cephfsplugin-metrics                 ClusterIP   10.233.3.172    <none>        8080/TCP,8081/TCP   12d
csi-rbdplugin-metrics                    ClusterIP   10.233.43.23    <none>        8080/TCP,8081/TCP   12d
rook-ceph-mgr                            ClusterIP   10.233.63.85    <none>        9283/TCP            12d
rook-ceph-mgr-dashboard                  ClusterIP   10.233.20.159   <none>        7000/TCP            12d
rook-ceph-mgr-dashboard-external-https   NodePort    10.233.56.73    <none>        7000:31357/TCP      12d
rook-ceph-mon-a                          ClusterIP   10.233.30.222   <none>        6789/TCP,3300/TCP   12d
rook-ceph-mon-b                          ClusterIP   10.233.55.25    <none>        6789/TCP,3300/TCP   12d
rook-ceph-mon-d                          ClusterIP   10.233.0.206    <none>        6789/TCP,3300/TCP   12d
```

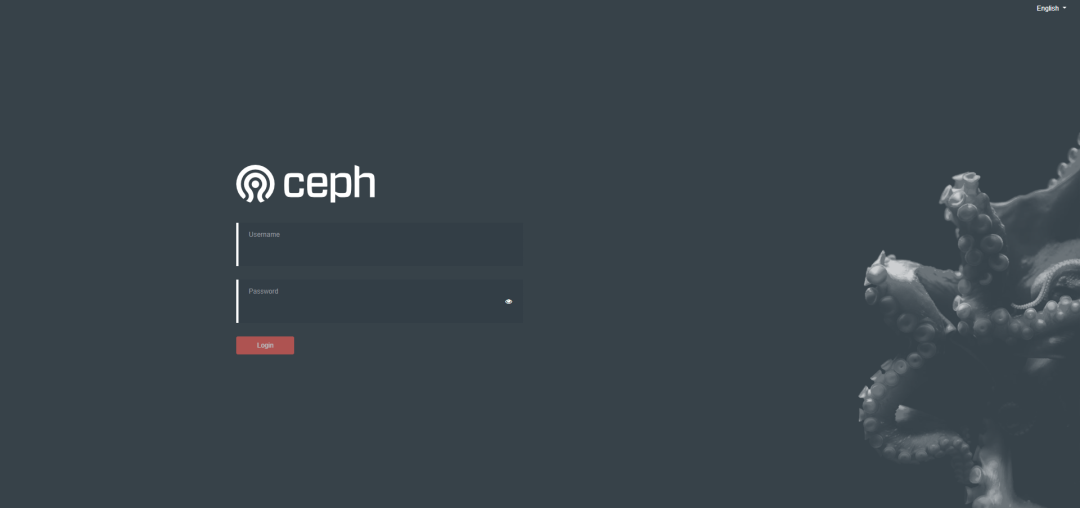

8.驗(yàn)證訪問 dashboard

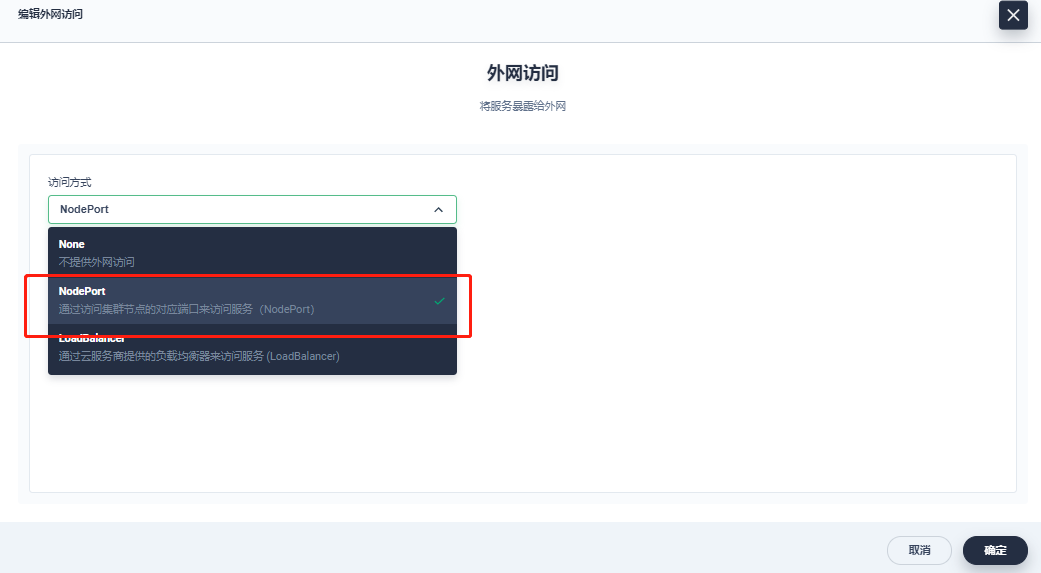

打開 KubeSphere 平臺開啟外網(wǎng)服務(wù)

訪問方式:

http://{master1-ip:31357}
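
The NodePort comes from the `PORT(S)` column in step 7 (`7000:31357/TCP`). A sketch that turns that column into the URL, plus an alternative that reads `.spec.ports[0].nodePort` straight from the API; the function names and any node IP you pass in are placeholders:

```bash
#!/usr/bin/env bash
# Sketch: derive the dashboard URL from a node IP and the PORT(S) column.
nodeport_of() {
  local ports="${1#*:}"   # "7000:31357/TCP" -> "31357/TCP"
  echo "${ports%%/*}"     # "31357/TCP"      -> "31357"
}

dashboard_url() {
  local node_ip="$1" ports="$2"
  echo "http://${node_ip}:$(nodeport_of "$ports")"
}

# Alternative without text parsing: ask the API server for the NodePort.
dashboard_nodeport() {
  kubectl -n rook-ceph get svc rook-ceph-mgr-dashboard-external-https \
    -o jsonpath='{.spec.ports[0].nodePort}'
}
```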

To get the login password (the username is `admin`):

```bash
$ kubectl -n rook-ceph get secret rook-ceph-dashboard-password -o jsonpath="{['data']['password']}" | base64 --decode && echo
```

Note: if the dashboard shows a HEALTH_WARN warning, check the logs to find the specific cause; it is usually OSDs being down, too few PGs, and the like.

9. Deploy the Rook toolbox

The Rook toolbox is a container with common tools for debugging and testing Rook.

```bash
$ kubectl apply -f toolbox.yaml
```

Enter the toolbox and check the Ceph cluster status:

```
$ kubectl -n rook-ceph exec -it $(kubectl -n rook-ceph get pod -l "app=rook-ceph-tools" -o jsonpath='{.items[0].metadata.name}') -- bash
$ ceph -s
  cluster:
    id:     1457045a-4926-411f-8be8-c7a958351a38
    health: HEALTH_WARN
            mon a is low on available space
            2 osds down
            Degraded data redundancy: 25/159 objects degraded (15.723%), 16 pgs degraded, 51 pgs undersized
            3 daemons have recently crashed

  services:
    mon: 3 daemons, quorum a,b,d (age 9d)
    mgr: a(active, since 4h)
    mds: myfs:1 {0=myfs-b=up:active} 1 up:standby-replay
    osd: 12 osds: 6 up (since 8d), 8 in (since 8d); 9 remapped pgs

  data:
    pools:   5 pools, 129 pgs
    objects: 53 objects, 37 MiB
    usage:   6.8 GiB used, 293 GiB / 300 GiB avail
    pgs:     25/159 objects degraded (15.723%)
             5/159 objects misplaced (3.145%)
             69 active+clean
             35 active+undersized
             16 active+undersized+degraded
             9  active+clean+remapped
```
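
To watch this from a script, the health line and the warnings beneath it can be pulled out of the `ceph -s` text. A sketch that assumes the plain-text layout shown above, which is not a stable interface (`ceph status --format json` is the machine-readable option); `ceph_health_summary` is an illustrative name:

```bash
#!/usr/bin/env bash
# Sketch: read `ceph -s` output on stdin, print the health status and
# each warning line listed under it.
ceph_health_summary() {
  awk '
    /^ *health:/ { print $2; in_health = 1; next }
    in_health && /^ *[a-z]+:/ { in_health = 0 }   # next "key:" line ends the block
    in_health && NF > 0 { sub(/^ +/, ""); print "  - " $0 }
  '
}
```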

Common query commands inside the toolbox:

```bash
ceph status
ceph osd status
ceph df
rados df
```
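
These commands can also be run from outside the toolbox without an interactive shell, for example `ceph health detail` to see what lies behind a HEALTH_WARN. A sketch reusing the `app=rook-ceph-tools` label from the exec command above; `ceph_tool` and `cluster_report` are illustrative names:

```bash
#!/usr/bin/env bash
# Sketch: run Ceph CLI commands in the toolbox pod from a normal shell.
toolbox_pod() {
  kubectl -n rook-ceph get pod -l app=rook-ceph-tools \
    -o jsonpath='{.items[0].metadata.name}'
}

ceph_tool() {
  # Non-interactive exec (no -it), so it also works from scripts and cron.
  kubectl -n rook-ceph exec "$(toolbox_pod)" -- "$@"
}

cluster_report() {
  local cmd
  for cmd in "ceph status" "ceph osd status" "ceph df" "rados df"; do
    echo "### $cmd"
    # Word-splitting of $cmd is intentional: each entry is a simple command.
    ceph_tool $cmd
  done
}
```

Usage: `ceph_tool ceph health detail` or `cluster_report > report.txt`.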

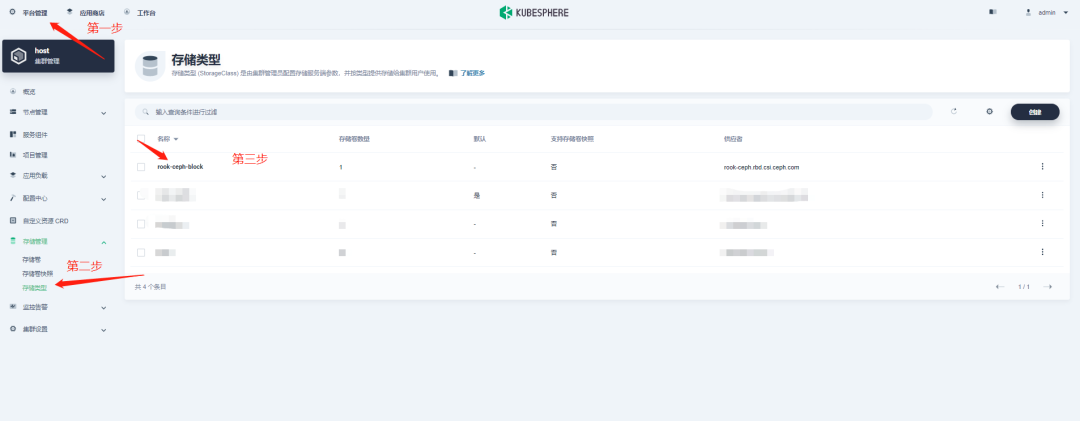

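Deploying a StorageClass

1. Introduction to RBD block storage

Ceph can provide object storage (RADOSGW), block storage (RBD) and file system storage (CephFS) at the same time. RBD is short for RADOS Block Device, and RBD block storage is the most stable and most commonly used storage type. An RBD block device can be mounted like a disk. RBD block devices support snapshots, multiple replicas, cloning and consistency, and their data is striped across multiple OSDs in the Ceph cluster.

2. Create the StorageClass

```bash
[root@kube-master1 rbd]# kubectl apply -f storageclass.yaml
```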
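
The `storageclass.yaml` applied above (from the `csi/rbd` example directory) is not shown. Roughly, it defines a replicated `CephBlockPool` and the `rook-ceph-block` StorageClass that the PVC below refers to. The sketch applies an abridged version through a heredoc; the values are assumed to match Rook's release-1.4 example, so compare them with the file in your checkout before relying on them:

```bash
#!/usr/bin/env bash
# Sketch (abridged, values assumed from Rook's csi/rbd example):
# a replicated CephBlockPool plus a StorageClass backed by the RBD CSI driver.
apply_rbd_storageclass() {
  kubectl apply -f - <<'EOF'
apiVersion: ceph.rook.io/v1
kind: CephBlockPool
metadata:
  name: replicapool
  namespace: rook-ceph
spec:
  failureDomain: host      # each replica on a different node
  replicated:
    size: 3
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: rook-ceph-block
provisioner: rook-ceph.rbd.csi.ceph.com   # prefix = operator namespace
parameters:
  clusterID: rook-ceph
  pool: replicapool
  imageFormat: "2"
  imageFeatures: layering
  csi.storage.k8s.io/provisioner-secret-name: rook-csi-rbd-provisioner
  csi.storage.k8s.io/provisioner-secret-namespace: rook-ceph
  csi.storage.k8s.io/controller-expand-secret-name: rook-csi-rbd-provisioner
  csi.storage.k8s.io/controller-expand-secret-namespace: rook-ceph
  csi.storage.k8s.io/node-stage-secret-name: rook-csi-rbd-node
  csi.storage.k8s.io/node-stage-secret-namespace: rook-ceph
  csi.storage.k8s.io/fstype: ext4
allowVolumeExpansion: true
reclaimPolicy: Delete
EOF
}
```

With `size: 3` and `failureDomain: host`, each RBD image needs OSDs on three different nodes, which kube-node5, 6 and 7 provide here.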

3. Check the StorageClass deployment status

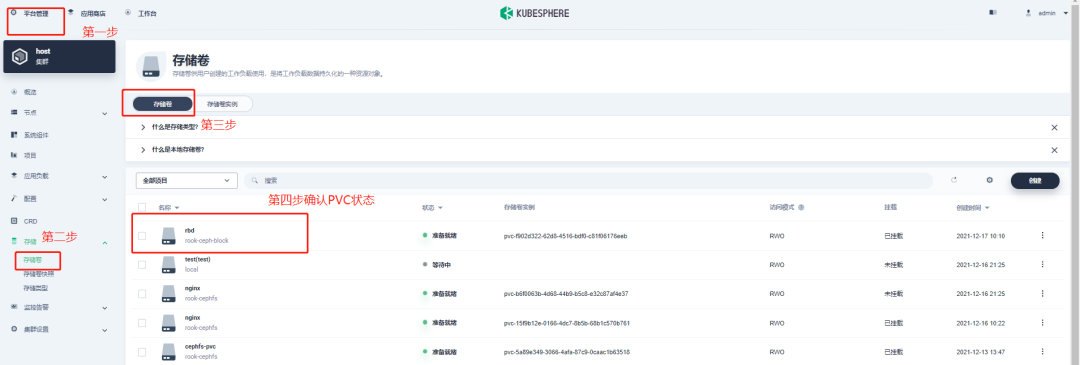

4. Create a PVC

```bash
$ kubectl apply -f pvc.yaml
```

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: rbd-pvc
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 2Gi
  storageClassName: rook-ceph-block
```
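
Provisioning is asynchronous, so the PVC may stay `Pending` for a short while. A polling sketch, assuming the PVC lives in the current namespace; the default retry count and 2-second interval are arbitrary choices:

```bash
#!/usr/bin/env bash
# Sketch: poll a PVC until the RBD provisioner has bound it to a PV.
wait_pvc_bound() {
  local pvc="${1:-rbd-pvc}" tries="${2:-30}" phase="" i=0
  while [ "$i" -lt "$tries" ]; do
    phase="$(kubectl get pvc "$pvc" -o jsonpath='{.status.phase}')"
    if [ "$phase" = "Bound" ]; then
      echo "$pvc is Bound"
      return 0
    fi
    sleep 2
    i=$((i + 1))
  done
  echo "$pvc still ${phase:-unknown} after $((tries * 2))s" >&2
  return 1
}
```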

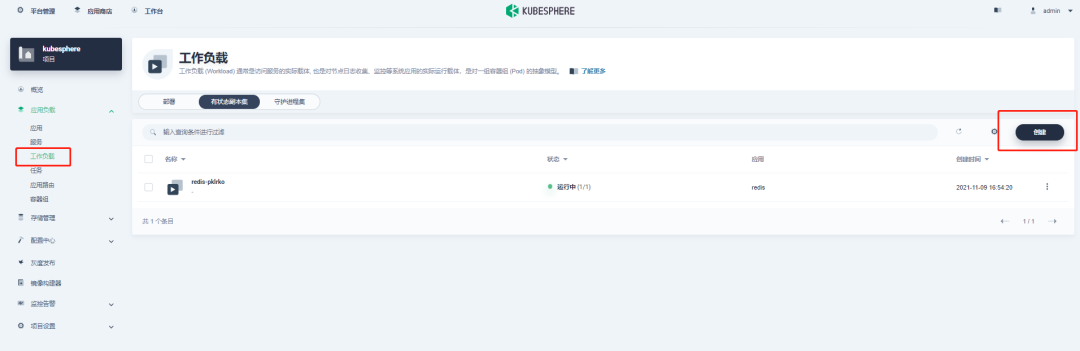

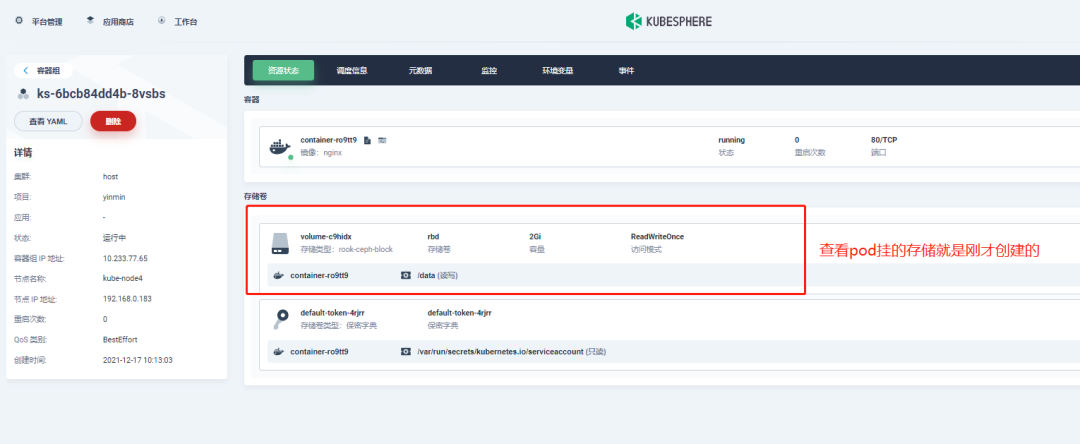

5. Create a pod that uses the PVC

```bash
$ kubectl apply -f pod.yaml
```

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: csirbd-demo-pod
spec:
  containers:
    - name: web-server
      image: nginx
      volumeMounts:
        - name: mypvc
          mountPath: /var/lib/www/html
  volumes:
    - name: mypvc
      persistentVolumeClaim:
        claimName: rbd-pvc
        readOnly: false
```

6. Check the status of the pod, PVC and PV
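
The commands for this step are not listed. A sketch using the names from `pod.yaml` and `pvc.yaml`; the file `test.txt` is a hypothetical name used only to confirm that the RBD volume is writable:

```bash
#!/usr/bin/env bash
# Sketch: show the demo objects and write/read a file on the RBD volume.
show_status() {
  kubectl get pod csirbd-demo-pod -o wide
  kubectl get pvc rbd-pvc
  kubectl get pv
}

write_test() {
  # The nginx image ships /bin/sh; the mount path comes from pod.yaml.
  kubectl exec csirbd-demo-pod -- sh -c \
    'echo hello-rook > /var/lib/www/html/test.txt && cat /var/lib/www/html/test.txt'
}
```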

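Summary

For anyone trying a Rook + Ceph deployment for the first time there is a lot to learn, and you will likely hit quite a few pitfalls. I hope this record of the deployment process helps.

1. The Ceph cluster keeps reporting that no disks are available for OSDs

A: I ran into this in a few situations. Check whether the attached data disk was used before: even if it was formatted, old RAID metadata may still be on it. Wipe the disk with the script below and do not create a filesystem on it afterwards, because Rook only uses disks without a filesystem (see step 4); leave it attached so that Rook can detect it.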

```bash
#!/usr/bin/env bash
# Wipes partition tables and Ceph metadata. DESTROYS ALL DATA ON $DISK.

DISK="/dev/vdc"  # change to your own device name

# Zap the disk to a fresh, usable state (zap-all is important, b/c MBR has to be clean)
# You will have to run this step for all disks.
sgdisk --zap-all "$DISK"

# Clean HDDs with dd
dd if=/dev/zero of="$DISK" bs=1M count=100 oflag=direct,dsync

# Clean disks such as SSDs with blkdiscard instead of dd
# (on disks without discard support this only prints an error)
blkdiscard "$DISK"

# These steps only have to be run once on each node
# If rook sets up osds using ceph-volume, teardown leaves some devices mapped that lock the disks.
ls /dev/mapper/ceph-* 2>/dev/null | xargs -I% -- dmsetup remove %

# ceph-volume setup can leave ceph-<UUID> directories in /dev and /dev/mapper (unnecessary clutter)
rm -rf /dev/ceph-*
rm -rf /dev/mapper/ceph--*

# Inform the OS of partition table changes
partprobe "$DISK"
```
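
Because the script destroys everything on `$DISK`, a guard against wiping a mounted disk is cheap insurance. A sketch that reads `/proc/mounts`; it does not see devices used only through device-mapper (such as existing Ceph LVs), and `disk_in_use`/`safe_to_zap` are illustrative names:

```bash
#!/usr/bin/env bash
# Sketch: refuse to wipe a disk that is (or has a partition that is) mounted.
# The optional second argument is a mounts file in /proc/mounts format.
disk_in_use() {
  local disk="$1" mounts="${2:-/proc/mounts}"
  # Field 1 is the source device; /dev/vdc also matches /dev/vdc1, /dev/vdc2, ...
  awk -v d="$disk" 'index($1, d) == 1 { found = 1 } END { exit !found }' "$mounts"
}

safe_to_zap() {
  if disk_in_use "$1" "${2:-/proc/mounts}"; then
    echo "refusing: $1 or one of its partitions is mounted" >&2
    return 1
  fi
  echo "$1 is not mounted"
}
```

Usage: `safe_to_zap /dev/vdc && bash zap.sh`, where `zap.sh` stands for the cleanup script above saved to a file.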

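2. Which storage types does Ceph support?

A: RBD block storage, CephFS file storage, S3-compatible object storage, and so on.

3. How should I troubleshoot the various pitfalls during deployment?

A: I strongly recommend consulting the official Rook and Ceph documentation:

https://rook.github.io/docs/rook/

https://docs.ceph.com/en/pacific/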

4. Cannot access the dashboard

A: If KubeSphere or K8s runs on a public cloud, allow the NodePort in the security group.
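
To tell a blocked port from a dashboard problem, probe the NodePort from a machine outside the cluster. A sketch using `curl`, which reports HTTP status `000` when no connection could be made; the URL argument is whatever address you use, e.g. the `http://{master1-ip}:31357` form from step 8:

```bash
#!/usr/bin/env bash
# Sketch: check whether the dashboard NodePort answers from outside.
dashboard_reachable() {
  local url="$1" code
  # -s silent, -o discard body, -w print only the HTTP status, 5s timeout
  code="$(curl -s -o /dev/null -w '%{http_code}' --max-time 5 "$url")"
  case "$code" in
    2??|3??) echo "reachable (HTTP $code)" ;;
    000) echo "no response: check the security group / firewall"; return 1 ;;
    *) echo "answered with HTTP $code"; return 1 ;;
  esac
}
```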

References

[1] Multi-node installation: https://v3-1.docs.kubesphere.io/zh/docs/installing-on-linux/public-cloud/install-kubesphere-on-huaweicloud-ecs/

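About KubeSphere

KubeSphere (https://kubesphere.io) is an open-source container hybrid cloud built on top of Kubernetes. It provides full-stack IT automation and operations capabilities and simplifies enterprise DevOps workflows.

KubeSphere has been adopted by thousands of enterprises in China and abroad, including Aqara Smart Home, Ericsson, Benlai Life, Neusoft, Huayun, Sina, SANY Heavy Industry, Huaxia Bank, Sichuan Airlines, Sinopharm, WeBank, Hangzhou Shupao Technology, Zijin Insurance, Qunar, ZTO Express, the People's Bank of China, Bank of China, PICC Life, China Taiping Insurance, China Mobile, China Telecom, Tianyi Cloud, China Mobile Fintech, Radore and ZaloPay. KubeSphere offers a developer-friendly, wizard-style UI and rich enterprise features, including Kubernetes multi-cloud and multi-cluster management, DevOps (CI/CD), application lifecycle management, edge computing, microservice governance (Service Mesh), multi-tenancy, observability, storage and network management, and GPU support, helping enterprises quickly build a powerful, feature-rich container cloud platform.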
