What on earth? Why did a perfectly healthy container restart?

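In day-to-day development, anyone who uses Kubernetes will occasionally receive alerts about container restarts. Restarts caused by application-level problems are relatively easy to diagnose: the container's memory monitoring shows whether usage exceeded the configured limit, and the logs show whether the application hit a panic that was never recovered.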

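On an otherwise normal working day we suddenly received a string of container restart alerts, and they came from different applications. Following the usual routine, I checked monitoring first: memory looked normal, with usage staying below the limit the whole time. The logs showed no panic or any other error either. On closer inspection, all of the alerting applications ran in the same cluster, so the cluster looked like a likely culprit. However, many of our other applications in that cluster had not restarted, so the cluster itself was probably not at fault. Could it be the machines?

I collected the node IPs of the restarted instances and found that the affected applications were concentrated on a handful of machines. On those nodes I checked the kubelet process and found that kubelet had restarted during the window in which the alerts fired. That identified the direct cause of the container restarts: kubelet had restarted. But we had not updated any instances, so why would a kubelet restart cause our containers to restart? The root cause, covered in the rest of this post, lies in how kubelet computes a container's hash.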
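The step of narrowing the restarts down to specific machines is essentially a group-by on node IP. Here is a minimal Go sketch of that idea. The `restartEvent` type, its fields, and the sample values are hypothetical and only stand in for whatever your alerting system exports.

```go
package main

import (
	"fmt"
	"sort"
)

// restartEvent is a hypothetical record of one container restart alert.
type restartEvent struct {
	App    string
	NodeIP string
}

// countByNode returns how many restart events were seen on each node.
func countByNode(events []restartEvent) map[string]int {
	counts := make(map[string]int)
	for _, e := range events {
		counts[e.NodeIP]++
	}
	return counts
}

func main() {
	// Made-up sample data: three apps, two nodes.
	events := []restartEvent{
		{App: "orders", NodeIP: "10.0.0.11"},
		{App: "billing", NodeIP: "10.0.0.11"},
		{App: "search", NodeIP: "10.0.0.12"},
	}
	counts := countByNode(events)

	// Sort node IPs so the report is stable between runs.
	nodes := make([]string, 0, len(counts))
	for ip := range counts {
		nodes = append(nodes, ip)
	}
	sort.Strings(nodes)
	for _, ip := range nodes {
		fmt.Printf("%s: %d restarts\n", ip, counts[ip])
	}
}
```

If restarts from unrelated applications pile up on a few node IPs, the node (and whatever runs on it, such as kubelet) becomes the next thing to inspect.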

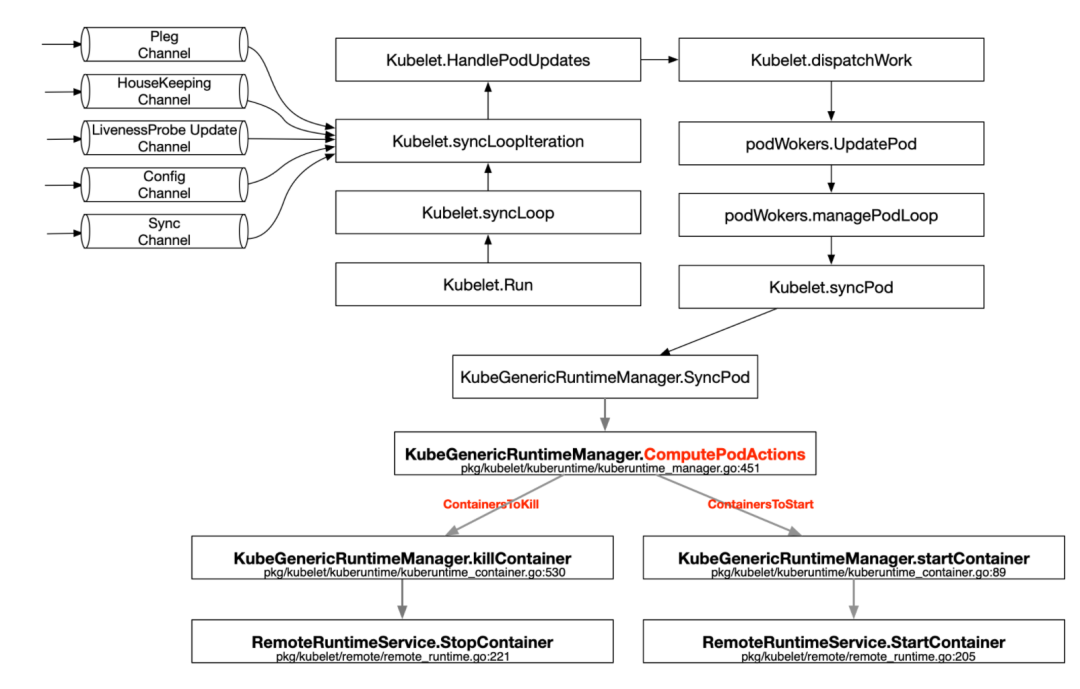

As we know, every Kubernetes node runs a kubelet process, which manages the lifecycle of all Pods on that node. Let's look at the source code to see how kubelet implements container restarts.

SyncPod

We start with the SyncPod method in https://github.com/kubernetes/kubernetes/blob/master/pkg/kubelet/kuberuntime/kuberuntime_manager.go. This method keeps a running Pod consistent with its desired configuration at all times. It does so in the following steps:

1. Compute the actions to perform from the Pod Spec obtained from the API Server and the current Pod Status.
2. Kill the Pod's current sandbox if necessary.
3. Kill containers in the Pod as needed (for example, for a restart).
4. Create the Pod's sandbox as needed.
5. Start the next init container.
6. Start the containers in the Pod.
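Before reading the real method, it may help to see the ordering of these steps in isolation. The Go sketch below is a simplified illustration, not the kubelet implementation: the `podActions` struct here is a reduced, hypothetical version whose field names only loosely follow the source, and `plan` is a helper invented for this example.

```go
package main

import "fmt"

// podActions is a hypothetical, reduced version of the change set computed
// in step 1. Containers are identified by name to keep the sketch short.
type podActions struct {
	KillPod           bool
	CreateSandbox     bool
	ContainersToKill  []string
	NextInitContainer string // empty when no init container is pending
	ContainersToStart []string
}

// plan lists, in order, the operations a sync would perform for the actions.
func plan(a podActions) []string {
	var steps []string
	if a.KillPod {
		// Killing the whole pod replaces killing individual containers.
		steps = append(steps, "kill pod")
	} else {
		for _, name := range a.ContainersToKill {
			steps = append(steps, "kill container "+name)
		}
	}
	if a.CreateSandbox {
		steps = append(steps, "create sandbox")
	}
	if a.NextInitContainer != "" {
		steps = append(steps, "start init container "+a.NextInitContainer)
	}
	for _, name := range a.ContainersToStart {
		steps = append(steps, "start container "+name)
	}
	return steps
}

func main() {
	// A running container that must be replaced: kill it, then start it again.
	a := podActions{
		ContainersToKill:  []string{"app"},
		ContainersToStart: []string{"app"},
	}
	for i, s := range plan(a) {
		fmt.Printf("%d. %s\n", i+1, s)
	}
}
```

The point to notice is that a container restart is not a single operation: the same container appears once in the kill set and once in the start set.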

func (m *kubeGenericRuntimeManager) SyncPod(pod *v1.Pod, _ v1.PodStatus, podStatus *kubecontainer.PodStatus, pullSecrets []v1.Secret, backOff *flowcontrol.Backoff) (result kubecontainer.PodSyncResult) {

// Step 1: Compute sandbox and container changes.

// Compute the pod's sandbox and container changes

podContainerChanges := m.computePodActions(pod, podStatus)

glog.V(3).Infof("computePodActions got %+v for pod %q", podContainerChanges, format.Pod(pod))

if podContainerChanges.CreateSandbox {

ref, err := ref.GetReference(legacyscheme.Scheme, pod)

if err != nil {

glog.Errorf("Couldn't make a ref to pod %q: '%v'", format.Pod(pod), err)

}

if podContainerChanges.SandboxID != "" {

m.recorder.Eventf(ref, v1.EventTypeNormal, events.SandboxChanged, "Pod sandbox changed, it will be killed and re-created.")

} else {

glog.V(4).Infof("SyncPod received new pod %q, will create a sandbox for it", format.Pod(pod))

}

}

// Step 2: Kill the pod if the sandbox has changed.

// The sandbox has changed, so the pod must be killed

if podContainerChanges.KillPod {

...

killResult := m.killPodWithSyncResult(pod, kubecontainer.ConvertPodStatusToRunningPod(m.runtimeName, podStatus), nil)

result.AddPodSyncResult(killResult)

if killResult.Error() != nil {

glog.Errorf("killPodWithSyncResult failed: %v", killResult.Error())

return

}

if podContainerChanges.CreateSandbox {

m.purgeInitContainers(pod, podStatus)

}

} else {

// Step 3: kill any running containers in this pod which are not to keep.

// Kill the containers in the pod that should not be kept

for containerID, containerInfo := range podContainerChanges.ContainersToKill {

glog.V(3).Infof("Killing unwanted container %q(id=%q) for pod %q", containerInfo.name, containerID, format.Pod(pod))

killContainerResult := kubecontainer.NewSyncResult(kubecontainer.KillContainer, containerInfo.name)

result.AddSyncResult(killContainerResult)

if err := m.killContainer(pod, containerID, containerInfo.name, containerInfo.message, nil); err != nil {

killContainerResult.Fail(kubecontainer.ErrKillContainer, err.Error())

glog.Errorf("killContainer %q(id=%q) for pod %q failed: %v", containerInfo.name, containerID, format.Pod(pod), err)

return

}

}

}

...

// Step 4: Create a sandbox for the pod if necessary.

// Create a sandbox if needed

podSandboxID := podContainerChanges.SandboxID

if podContainerChanges.CreateSandbox {

var msg string

var err error

glog.V(4).Infof("Creating sandbox for pod %q", format.Pod(pod))

createSandboxResult := kubecontainer.NewSyncResult(kubecontainer.CreatePodSandbox, format.Pod(pod))

result.AddSyncResult(createSandboxResult)

podSandboxID, msg, err = m.createPodSandbox(pod, podContainerChanges.Attempt)

...

}

...

}

...

// Step 5: start the init container.

// Start the init container

if container := podContainerChanges.NextInitContainerToStart; container != nil {

// Start the next init container.

startContainerResult := kubecontainer.NewSyncResult(kubecontainer.StartContainer, container.Name)

result.AddSyncResult(startContainerResult)

...

if msg, err := m.startContainer(podSandboxID, podSandboxConfig, container, pod, podStatus, pullSecrets, podIP, kubecontainer.ContainerTypeInit); err != nil {

startContainerResult.Fail(err, msg)

utilruntime.HandleError(fmt.Errorf("init container start failed: %v: %s", err, msg))

return

}

// Successfully started the container; clear the entry in the failure

glog.V(4).Infof("Completed init container %q for pod %q", container.Name, format.Pod(pod))

}

// Step 6: start containers in podContainerChanges.ContainersToStart.

// Start containers based on the result of step 1

for _, idx := range podContainerChanges.ContainersToStart {

container := &pod.Spec.Containers[idx]

startContainerResult := kubecontainer.NewSyncResult(kubecontainer.StartContainer, container.Name)

result.AddSyncResult(startContainerResult)

...

glog.V(4).Infof("Creating container %+v in pod %v", container, format.Pod(pod))

if msg, err := m.startContainer(podSandboxID, podSandboxConfig, container, pod, podStatus, pullSecrets, podIP, kubecontainer.ContainerTypeRegular); err != nil {

...

}

}

return

}

computePodActions

In the SyncPod method above, the computePodActions call in step 1 is what decides whether a container needs to be restarted. Let's look at its logic:

// computePodActions checks whether the pod spec has changed and returns the changes if true.

func (m *kubeGenericRuntimeManager) computePodActions(pod *v1.Pod, podStatus *kubecontainer.PodStatus) podActions {

glog.V(5).Infof("Syncing Pod %q: %+v", format.Pod(pod), pod)

createPodSandbox, attempt, sandboxID := m.podSandboxChanged(pod, podStatus)

changes := podActions{

KillPod: createPodSandbox,

CreateSandbox: createPodSandbox,

SandboxID: sandboxID,

Attempt: attempt,

ContainersToStart: []int{},

ContainersToKill: make(map[kubecontainer.ContainerID]containerToKillInfo),

}

// Other parts are omitted here; we go straight to the core logic that decides whether a container needs to be restarted

// Number of running containers to keep.

keepCount := 0

// check the status of containers.

for idx, container := range pod.Spec.Containers {

containerStatus := podStatus.FindContainerStatusByName(container.Name)

// Call internal container post-stop lifecycle hook for any non-running container so that any

// allocated cpus are released immediately. If the container is restarted, cpus will be re-allocated

// to it.

if containerStatus != nil && containerStatus.State != kubecontainer.ContainerStateRunning {

if err := m.internalLifecycle.PostStopContainer(containerStatus.ID.ID); err != nil {

glog.Errorf("internal container post-stop lifecycle hook failed for container %v in pod %v with error %v",

container.Name, pod.Name, err)

}

}

// If container does not exist, or is not running, check whether we

// need to restart it.

if containerStatus == nil || containerStatus.State != kubecontainer.ContainerStateRunning {

if kubecontainer.ShouldContainerBeRestarted(&container, pod, podStatus) {

message := fmt.Sprintf("Container %+v is dead, but RestartPolicy says that we should restart it.", container)

glog.V(3).Infof(message)

changes.ContainersToStart = append(changes.ContainersToStart, idx)

}

continue

}

// The container is running, but kill the container if any of the following condition is met.

reason := ""

restart := shouldRestartOnFailure(pod)

// Compute the container's expected hash and its current hash to decide whether the container needs to be restarted

if expectedHash, actualHash, changed := containerChanged(&container, containerStatus); changed {

reason = fmt.Sprintf("Container spec hash changed (%d vs %d).", actualHash, expectedHash)

// Restart regardless of the restart policy because the container

// spec changed.

restart = true

} else if liveness, found := m.livenessManager.Get(containerStatus.ID); found && liveness == proberesults.Failure {

// If the container failed the liveness probe, we should kill it.

reason = "Container failed liveness probe."

} else {

// Keep the container.

keepCount += 1

continue

}

// We need to kill the container, but if we also want to restart the

// container afterwards, make the intent clear in the message. Also do

// not kill the entire pod since we expect container to be running eventually.

message := reason

// If the container needs a restart, its index is appended to the slice of containers to start

if restart {

message = fmt.Sprintf("%s. Container will be killed and recreated.", message)

changes.ContainersToStart = append(changes.ContainersToStart, idx)

}

// Record the container in the map of containers to kill

changes.ContainersToKill[containerStatus.ID] = containerToKillInfo{

name: containerStatus.Name,

container: &pod.Spec.Containers[idx],

message: message,

}

glog.V(2).Infof("Container %q (%q) of pod %s: %s", container.Name, containerStatus.ID, format.Pod(pod), message)

}

if keepCount == 0 && len(changes.ContainersToStart) == 0 {

changes.KillPod = true

}

return changes

}
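The branch order for a container that is already running can be condensed into a small decision function. The following Go sketch is an illustration of that order only. The `decision` type and the `decide` function are hypothetical names made up for this example, and the three booleans stand in for the results of `containerChanged`, the liveness manager lookup, and `shouldRestartOnFailure`.

```go
package main

import "fmt"

// decision describes what happens to one running container in this sketch.
type decision struct {
	Kill    bool
	Restart bool
	Reason  string
}

// decide follows the same branch order as the loop above: a changed spec hash
// is checked first and forces a restart regardless of the restart policy; a
// failed liveness probe kills the container and restarts it only if the
// policy allows; otherwise the container is kept.
func decide(hashChanged, livenessFailed, restartOnFailure bool) decision {
	switch {
	case hashChanged:
		return decision{Kill: true, Restart: true, Reason: "Container spec hash changed"}
	case livenessFailed:
		return decision{Kill: true, Restart: restartOnFailure, Reason: "Container failed liveness probe"}
	default:
		return decision{} // keep the container as it is
	}
}

func main() {
	// The case from this post: a healthy container whose computed hash no
	// longer matches the stored one.
	fmt.Printf("%+v\n", decide(true, false, false))
}
```

This makes the surprising part explicit: a hash mismatch alone is enough to kill and recreate a container that is otherwise perfectly healthy.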

containerChanged

In the previous method, the call to containerChanged determines whether a running container is restarted. Next, let's see how the container hash is computed:

func containerChanged(container *v1.Container, containerStatus *kubecontainer.ContainerStatus) (uint64, uint64, bool) {

expectedHash := kubecontainer.HashContainer(container)

return expectedHash, containerStatus.Hash, containerStatus.Hash != expectedHash

}

The file `kubernetes/pkg/kubelet/container/helpers.go` provides the function that computes the hash:

// HashContainer returns the hash of the container. It is used to compare

// the running container with its desired spec.

func HashContainer(container *v1.Container) uint64 {

hash := fnv.New32a()

hashutil.DeepHashObject(hash, *container)

return uint64(hash.Sum32())

}

The code above makes it clear that a change to any field of the v1.Container struct changes the container's expected hash.
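To make this sensitivity concrete, here is a small self-contained Go sketch. It is not the kubelet code: `containerV1` and `containerV2` are hypothetical, heavily reduced stand-ins for v1.Container, and `hashObject` uses `fmt`'s `%#v` verb as a stand-in for the printing that `hashutil.DeepHashObject` performs. The second struct illustrates an assumption that the source does not state: if the struct definition itself gains a field between kubelet versions, the printed form changes even when nobody edits the Pod.

```go
package main

import (
	"fmt"
	"hash/fnv"
)

// containerV1 is a hypothetical, reduced stand-in for v1.Container.
type containerV1 struct {
	Name  string
	Image string
}

// containerV2 is the same stand-in after a newer release adds one field
// (an assumption made for illustration).
type containerV2 struct {
	Name     string
	Image    string
	NewField string
}

// hashObject writes a printed form of obj into an FNV-1a 32-bit hash and
// returns the result widened to uint64, mirroring HashContainer's shape.
func hashObject(obj interface{}) uint64 {
	h := fnv.New32a()
	// %#v prints the type name and every field name and value.
	fmt.Fprintf(h, "%#v", obj)
	return uint64(h.Sum32())
}

func main() {
	a := containerV1{Name: "app", Image: "nginx:1.24"}
	b := containerV1{Name: "app", Image: "nginx:1.25"}
	c := containerV2{Name: "app", Image: "nginx:1.24"}

	fmt.Println("identical spec, equal hash:", hashObject(a) == hashObject(a))
	fmt.Println("image changed, equal hash:", hashObject(a) == hashObject(b))
	fmt.Println("field added to struct, equal hash:", hashObject(a) == hashObject(c))
}
```

Because the stored hash was computed when the container was created and the expected hash is recomputed on every sync, any difference in the input to the hash function shows up as "Container spec hash changed" the next time kubelet syncs the Pod.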

The diagram below summarizes how kubelet restarts a container. Read alongside the code above, it should give a good picture of the container restart process in Kubernetes.

Original article: https://lxkaka.wang/kubelet-hash/
