[Beginner's PyTorch Tutorial] Part 6: Building an Image Classification Model from Scratch with PyTorch on the CIFAR-10 Dataset

@Author: Runsen

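Image recognition is a computer vision technique. It gives computers "eyes" so that they can "see" and understand the world through images and video.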

Before reading this article, you should know what a tensor is, what torch.autograd does, and how to build a neural network model in PyTorch.

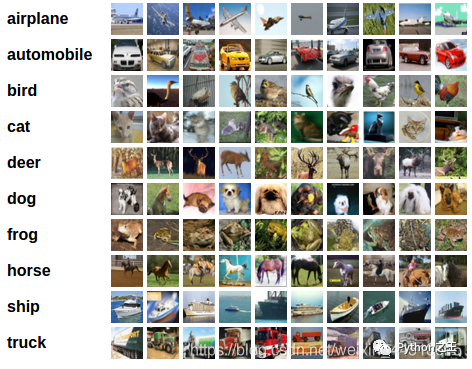

CIFAR-10 Dataset

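This tutorial uses the CIFAR-10 dataset, which has 10 classes: 'airplane', 'automobile', 'bird', 'cat', 'deer', 'dog', 'frog', 'horse', 'ship', and 'truck'. It contains 60,000 32x32 RGB images: 50,000 for training and 10,000 for testing.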

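Five steps to build an image classification model

1. Load and normalize the training and test data
2. Define a convolutional neural network (CNN)
3. Define a loss function and an optimizer
4. Train the model on the training data
5. Test the model on the test data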

First, we import the libraries we need. matplotlib handles plotting and numpy handles data transformation.

```python
import matplotlib.pyplot as plt  # for plotting
import numpy as np  # for transformation
import torch  # PyTorch package
import torchvision  # load datasets
import torchvision.transforms as transforms  # transform data
import torch.nn as nn  # basic building blocks for neural networks
import torch.nn.functional as F  # functional forms of ops such as relu
import torch.optim as optim  # optimizers
```

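- torchvision: loads popular datasets
- torchvision.transforms: applies transformations to image data
- torch.nn: defines neural networks
- torch.nn.functional: provides functional forms of operations such as relu
- torch.optim: implements optimization algorithms such as stochastic gradient descent (SGD)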
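The optimizer used later, `optim.SGD(net.parameters(), lr=0.001, momentum=0.9)`, does more than subtract the gradient. It also keeps a momentum buffer for each parameter. Below is a minimal, framework-free sketch of that update, following the rule the `torch.optim.SGD` documentation gives for `dampening=0`, `nesterov=False` and no weight decay. The function `sgd_momentum_step` and the toy objective (w - 3)^2 are hypothetical illustrations, not PyTorch's implementation.

```python
# Framework-free sketch of SGD with momentum (dampening=0, nesterov=False):
#   v <- momentum * v + grad   (on the first step v is simply grad)
#   p <- p - lr * v
def sgd_momentum_step(params, grads, velocity, lr=0.001, momentum=0.9):
    """Return updated params and velocity buffers (None marks 'no buffer yet')."""
    for i, (p, g) in enumerate(zip(params, grads)):
        if velocity[i] is None:
            velocity[i] = g
        else:
            velocity[i] = momentum * velocity[i] + g
        params[i] = p - lr * velocity[i]
    return params, velocity


# Hypothetical toy problem: minimise f(w) = (w - 3)^2, whose gradient is 2 * (w - 3).
w = [0.0]
v = [None]
for _ in range(2000):
    grad = [2 * (w[0] - 3.0)]
    w, v = sgd_momentum_step(w, grad, v, lr=0.01, momentum=0.9)
print(round(w[0], 4))
```

The momentum buffer carries a decaying sum of past gradients. Consecutive steps that point the same way therefore build up speed, which usually speeds up training compared with plain SGD at the same learning rate.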

Before loading the data, we first define a transform that is applied to the image data in the CIFAR-10 dataset.

```python
# Compose several transforms together
transform = transforms.Compose(
    [transforms.ToTensor(),  # to tensor object
     # mean = 0.5, std = 0.5 for each of the 3 channels
     transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))])

# Set batch_size
batch_size = 4

# Set num_workers (number of subprocesses used to load data)
num_workers = 2

# Load the training data
trainset = torchvision.datasets.CIFAR10(root='./data', train=True,
                                        download=True, transform=transform)
trainloader = torch.utils.data.DataLoader(trainset, batch_size=batch_size,
                                          shuffle=True, num_workers=num_workers)

# Load the test data
testset = torchvision.datasets.CIFAR10(root='./data', train=False,
                                       download=True, transform=transform)
testloader = torch.utils.data.DataLoader(testset, batch_size=batch_size,
                                         shuffle=False, num_workers=num_workers)

# The 10 labels
classes = ('plane', 'car', 'bird', 'cat',
           'deer', 'dog', 'frog', 'horse', 'ship', 'truck')
```

In the code above, we put the transforms we want into a list `[]` and pass it to `transforms.Compose()`. The list contains two transforms:

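ToTensor()

Converts each PIL (Python Imaging Library) image in the CIFAR-10 dataset into a float tensor of shape (C, H, W). Pixel values in [0, 255] are scaled to [0, 1].

Normalize(mean, std)

The number of values passed as mean and std equals the number of image channels. The images are RGB, so they have three channels (red, green, and blue), and after ToTensor() each channel lies in [0, 1]. We set mean = 0.5 and std = 0.5 for every channel. The normalization formula (x - mean) / std then maps each channel to the range [-1, 1].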
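The arithmetic of these two transforms can be checked by hand. The pure-Python sketch below uses hypothetical helper names. It follows a single channel value through ToTensor's scaling and then through Normalize. It also shows that the `img / 2 + 0.5` line used in `imshow` below is the inverse of this normalization.

```python
# Per-channel arithmetic of ToTensor() followed by Normalize(mean=0.5, std=0.5).
MEAN, STD = 0.5, 0.5

def to_tensor_scale(byte_value):
    """ToTensor maps an 8-bit pixel value in [0, 255] to a float in [0, 1]."""
    return byte_value / 255.0

def normalize(x, mean=MEAN, std=STD):
    """Normalize computes (x - mean) / std for each channel."""
    return (x - mean) / std

def unnormalize(y, mean=MEAN, std=STD):
    """Inverse of normalize; with mean = std = 0.5 this equals y / 2 + 0.5."""
    return y * std + mean

for pixel in (0, 128, 255):
    y = normalize(to_tensor_scale(pixel))
    print(pixel, round(y, 4), round(unnormalize(y), 4))
```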

Next, let's visualize some training images.

```python
def imshow(img):
    img = img / 2 + 0.5  # unnormalize
    npimg = img.numpy()  # convert to a NumPy array
    plt.imshow(np.transpose(npimg, (1, 2, 0)))  # (C, H, W) -> (H, W, C)
    plt.show()

# Use iter() to get a batch of random training images
dataiter = iter(trainloader)
images, labels = next(dataiter)

imshow(torchvision.utils.make_grid(images))

# print the class of each image
print(' '.join('%s' % classes[labels[j]] for j in range(batch_size)))
```
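ToTensor produces channel-first arrays of shape (C, H, W). matplotlib's `plt.imshow` expects channel-last (H, W, C), which is why `imshow` calls `np.transpose(npimg, (1, 2, 0))`. The small NumPy sketch below uses a synthetic array, not a real CIFAR-10 image, to show what that axis permutation does.

```python
import numpy as np

# Synthetic CIFAR-10-sized image in PyTorch's channel-first (C, H, W) layout.
chw = np.arange(3 * 32 * 32, dtype=np.float32).reshape(3, 32, 32)

# New axis order (1, 2, 0): height first, then width, then channels last.
hwc = np.transpose(chw, (1, 2, 0))
print(chw.shape, "->", hwc.shape)

# The element at [c, i, j] in the original lands at [i, j, c].
assert hwc[5, 7, 2] == chw[2, 5, 7]
```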

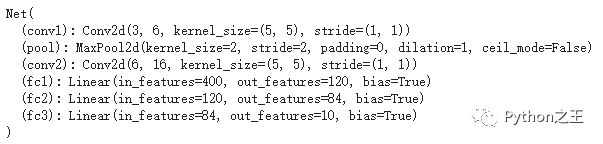

Defining the CNN model

```python
class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        # 3 input image channels, 6 output channels,
        # 5x5 square convolution kernel
        # in_channels = 3 because our images are RGB
        self.conv1 = nn.Conv2d(3, 6, 5)
        # Max pooling over a (2, 2) window
        self.pool = nn.MaxPool2d(2, 2)
        self.conv2 = nn.Conv2d(6, 16, 5)
        self.fc1 = nn.Linear(16 * 5 * 5, 120)  # 5x5 is the spatial size after conv2 + pooling
        self.fc2 = nn.Linear(120, 84)
        self.fc3 = nn.Linear(84, 10)

    def forward(self, x):
        x = self.pool(F.relu(self.conv1(x)))
        x = self.pool(F.relu(self.conv2(x)))
        # Flatten the conv output (every dim except the batch dim) and feed it to the fully connected layers
        x = x.flatten(1)
        # x = x.view(-1, 16 * 5 * 5)
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        x = self.fc3(x)
        return x

net = Net()
print(net)
```
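The `16 * 5 * 5` input size of `fc1` follows from the standard output-size formula for convolution and pooling layers: floor((n + 2p - k) / s) + 1. The sketch below uses hypothetical helper names. It traces a 32x32 input through the layers of `Net` and also counts the parameters. The total should match `sum(p.numel() for p in net.parameters())` for the model above.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output side length of a Conv2d/MaxPool2d layer: floor((n + 2p - k) / s) + 1."""
    return (size + 2 * padding - kernel) // stride + 1

side = 32                                  # CIFAR-10 images are 32x32
side = conv_out(side, kernel=5)            # conv1 (5x5, stride 1): 32 -> 28
side = conv_out(side, kernel=2, stride=2)  # pool: 28 -> 14
side = conv_out(side, kernel=5)            # conv2: 14 -> 10
side = conv_out(side, kernel=2, stride=2)  # pool: 10 -> 5
flat_features = 16 * side * side           # conv2 has 16 output channels
print(side, flat_features)

def conv_params(c_in, c_out, k):
    return c_in * c_out * k * k + c_out    # weights + biases

def linear_params(n_in, n_out):
    return n_in * n_out + n_out            # weights + biases

total = (conv_params(3, 6, 5) + conv_params(6, 16, 5)
         + linear_params(flat_features, 120) + linear_params(120, 84)
         + linear_params(84, 10))
print(total)
```

Most of the parameters sit in `fc1`. This is typical of small CNNs whose flattened feature map feeds straight into a dense layer.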

Defining a loss function and an optimizer

```python
criterion = nn.CrossEntropyLoss()
optimizer = optim.SGD(net.parameters(), lr=0.001, momentum=0.9)
```
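`nn.CrossEntropyLoss` expects raw, unnormalized scores (logits), which is why `fc3` is not followed by a softmax. Internally it combines log-softmax with negative log-likelihood. The pure-Python sketch below shows the per-sample formula and the default `reduction='mean'` over a batch. The logits are made up for illustration and are not model outputs.

```python
import math

def cross_entropy(logits, target):
    """Loss for one sample: -log(softmax(logits)[target]), computed stably."""
    m = max(logits)  # subtract the max before exp() to avoid overflow
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]

def batch_cross_entropy(batch_logits, targets):
    """Mean over the batch, like CrossEntropyLoss's default reduction='mean'."""
    losses = [cross_entropy(z, t) for z, t in zip(batch_logits, targets)]
    return sum(losses) / len(losses)

# Hypothetical 10-class logits for a batch of 2 samples.
logits = [[2.0, 0.5, 0.1] + [0.0] * 7,
          [0.0] * 10]
targets = [0, 3]
print(batch_cross_entropy(logits, targets))
```

With all-equal logits the loss is log(10), about 2.30. An untrained 10-class model should therefore start near that value.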

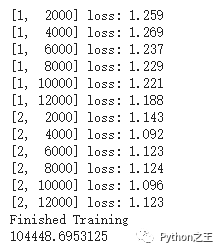

Training the model

```python
import time

# Wall-clock timing that works with or without a GPU.
# torch.cuda.Event would fail on CPU-only machines, and the model here runs on the CPU.
start = time.perf_counter()

for epoch in range(2):  # loop over the dataset multiple times
    running_loss = 0.0
    for i, data in enumerate(trainloader, 0):
        # get the inputs; data is a list of [inputs, labels]
        inputs, labels = data

        # zero the parameter gradients
        optimizer.zero_grad()

        # forward + backward + optimize
        outputs = net(inputs)
        loss = criterion(outputs, labels)
        loss.backward()
        optimizer.step()

        # print statistics
        running_loss += loss.item()
        if i % 2000 == 1999:  # print every 2000 mini-batches
            print('[%d, %5d] loss: %.3f' %
                  (epoch + 1, i + 1, running_loss / 2000))
            running_loss = 0.0

elapsed = time.perf_counter() - start
print('Finished Training')
print('%.0f ms' % (elapsed * 1000))  # milliseconds
```
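With `batch_size = 4`, one epoch involves many optimizer steps. The arithmetic below shows how many, and how often the loop prints `running_loss / 2000`, the average loss over the most recent 2000 mini-batches. It assumes the standard 50,000-image CIFAR-10 training split.

```python
import math

train_images = 50_000  # size of the CIFAR-10 training split
batch_size = 4         # value set earlier in the tutorial
print_every = 2000     # the loop prints when i % 2000 == 1999

batches_per_epoch = math.ceil(train_images / batch_size)
prints_per_epoch = sum(1 for i in range(batches_per_epoch)
                       if i % print_every == print_every - 1)
print(batches_per_epoch, prints_per_epoch)
```

Because 50,000 divides evenly by 4, the DataLoader produces no partial final batch here. Each of the two epochs should print six loss lines.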

Saving the network

```python
# save
PATH = './cifar_net.pth'
torch.save(net.state_dict(), PATH)

# reload
net = Net()
net.load_state_dict(torch.load(PATH))
```

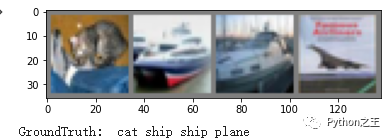

Testing the model on the test data

```python
dataiter = iter(testloader)
images, labels = next(dataiter)

# print images
imshow(torchvision.utils.make_grid(images))
print('GroundTruth: ', ' '.join('%s' % classes[labels[j]] for j in range(4)))
```

Next, we test the model on all 10,000 test images.

```python
correct = 0
total = 0
# Gradients are not needed for evaluation
with torch.no_grad():
    for data in testloader:
        images, labels = data
        outputs = net(images)
        # the class with the highest score is the prediction
        _, predicted = torch.max(outputs, 1)
        total += labels.size(0)
        correct += (predicted == labels).sum().item()

print('Accuracy of the network on the 10000 test images: %d %%' % (
    100 * correct / total))
```
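`torch.max(outputs, 1)` returns a (values, indices) pair along the class dimension, and the indices are the predicted class ids. The pure-Python sketch below mirrors that step and the correct/total bookkeeping on a tiny batch of made-up logits. These are not results from the model. For reference, the CIFAR-10 test set has 1,000 images per class, so random guessing scores about 10%.

```python
def argmax(row):
    """Index of the largest value; for a row of logits this is the predicted class."""
    return max(range(len(row)), key=lambda k: row[k])

# Hypothetical logits for a batch of 4 images over 3 classes (illustration only).
outputs = [[0.1, 2.0, -1.0],
           [1.5, 0.2, 0.3],
           [0.0, 0.1, 0.9],
           [0.4, 0.3, 0.2]]
labels = [1, 0, 1, 0]

predicted = [argmax(row) for row in outputs]  # like torch.max(outputs, 1)[1]
correct = sum(p == t for p, t in zip(predicted, labels))
total = len(labels)
print(predicted, f"accuracy = {100 * correct / total:.0f} %")
```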

Final thoughts

Our model's accuracy is low. How can we improve it?

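- Tune the hyperparameters
- Use a different optimizer
- Augment the image data
- Try more complex architectures, such as those developed for ImageNet (e.g. VGG or ResNet)
- Deal with overfitting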