Monitoring GPU Resources in a Kubernetes Cluster


Summary

Last week at the CNCF: 1) Microsoft proposed donating the Dapr project to the CNCF; 2) the Flux project entered the incubation stage.

1. Background

For SRE teams, monitoring large-scale GPU resources on AI and high-performance computing platforms is essential. GPU metrics show how workloads actually perform, which lets SRE teams optimize resource allocation, raise utilization, diagnose anomalies, and improve the overall efficiency of data center resources. Beyond SRE and infrastructure teams, researchers working on GPU acceleration and data center architects can use the same metrics to understand GPU utilization and saturation for capacity planning and task scheduling.

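As AI/ML workloads are containerized and scheduling platforms adopt Kubernetes for its dynamic scaling, monitoring these workloads becomes increasingly urgent. This article introduces NVIDIA Data Center GPU Manager (DCGM) and shows how to integrate it with open-source tools such as Prometheus and Grafana to build a complete GPU monitoring solution for Kubernetes.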

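NVIDIA DCGM is an all-in-one tool for managing and monitoring large clusters of NVIDIA GPUs on Linux systems. It is a low-overhead tool that provides a range of capabilities, including active health monitoring, diagnostics, system validation, policies, power and clock management, configuration management, and auditing.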

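DCGM provides APIs for collecting GPU telemetry. Of particular interest are GPU utilization metrics, memory metrics, and traffic metrics. DCGM ships clients for several languages, such as C and Python. For integration with the container ecosystem, Go bindings built on the DCGM APIs are available.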

1.3 NVIDIA exporter

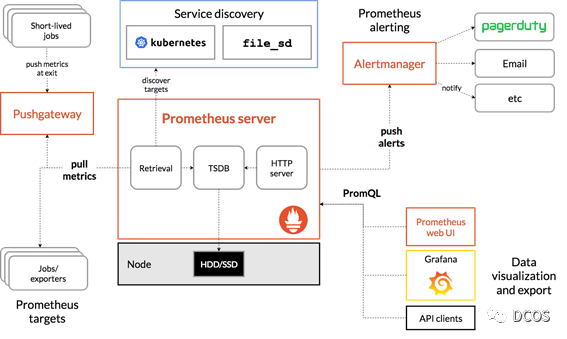

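A monitoring system usually consists of a metrics collector, a time-series database for storing the metrics, and a visualization component. One example is Prometheus, a CNCF graduated project, which is combined with Grafana to form an integrated monitoring solution. Prometheus also includes Alertmanager for creating and managing alerts. Prometheus is deployed together with kube-state-metrics and node_exporter to obtain cluster-level metrics for Kubernetes API objects and node-level metrics such as CPU utilization. The accompanying figure shows an example Prometheus architecture.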

Building on the Go API described earlier, DCGM can expose GPU metrics to Prometheus. NVIDIA built the dcgm-exporter project for this purpose.

dcgm-exporter uses the Go bindings to collect GPU telemetry from DCGM and then exposes the metrics to Prometheus through an HTTP endpoint (/metrics).
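To make the /metrics endpoint more concrete, here is a minimal sketch of how a scraper might read the Prometheus text exposition format. The sample text, including the label names and values, is made up for illustration and was not captured from a real dcgm-exporter; DCGM_FI_DEV_GPU_UTIL is used only as a representative metric name.

```python
import re

# Hypothetical sample of the text an exporter's /metrics endpoint might return.
SAMPLE = '''\
# HELP DCGM_FI_DEV_GPU_UTIL GPU utilization (in %).
# TYPE DCGM_FI_DEV_GPU_UTIL gauge
DCGM_FI_DEV_GPU_UTIL{gpu="0",UUID="GPU-example-0"} 98
DCGM_FI_DEV_GPU_UTIL{gpu="1",UUID="GPU-example-1"} 12
'''

# metric_name{label="value",...} value [optional timestamp]
LINE_RE = re.compile(
    r'^(?P<name>[A-Za-z_:][A-Za-z0-9_:]*)'
    r'(?:\{(?P<labels>.*)\})?'
    r'\s+(?P<value>\S+)(?:\s+-?\d+)?$'
)
LABEL_RE = re.compile(r'(\w+)="((?:[^"\\]|\\.)*)"')


def parse_metrics(text):
    """Return a list of (metric_name, labels_dict, float_value) tuples."""
    samples = []
    for line in text.splitlines():
        line = line.strip()
        # Skip blank lines and the "# HELP" / "# TYPE" comment lines.
        if not line or line.startswith('#'):
            continue
        match = LINE_RE.match(line)
        if match is None:
            continue  # ignore anything this simple sketch does not understand
        labels = dict(LABEL_RE.findall(match.group('labels') or ''))
        samples.append((match.group('name'), labels, float(match.group('value'))))
    return samples


for name, labels, value in parse_metrics(SAMPLE):
    print(name, labels.get('gpu'), value)
```

In practice Prometheus does this parsing itself; the sketch is only meant to show the shape of the data that the exporter publishes.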

The set of GPU metrics that dcgm-exporter collects from DCGM can be customized through a configuration file in CSV format.
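As a rough sketch of what such a CSV file can look like and how it could be read, the example below assumes each row holds a DCGM field name, a Prometheus metric type, and a help message, with lines starting with # treated as comments. The two sample rows and the helper name load_fields are illustrative assumptions, not the exporter's shipped defaults or its actual loader.

```python
import csv
import io

# Hypothetical collector configuration: field, metric type, help message.
SAMPLE_CSV = """\
# DCGM field, Prometheus metric type, help message
DCGM_FI_DEV_GPU_UTIL, gauge, GPU utilization (in %).
DCGM_FI_DEV_FB_USED, gauge, Framebuffer memory used (in MiB).
"""


def load_fields(text):
    """Parse the CSV text into (field, metric_type, help_text) tuples."""
    # Drop blank lines and comment lines before handing rows to the CSV reader.
    lines = (
        line for line in io.StringIO(text)
        if line.strip() and not line.lstrip().startswith("#")
    )
    rows = []
    for record in csv.reader(lines, skipinitialspace=True):
        if len(record) < 3:
            raise ValueError(f"expected 3 columns, got {record!r}")
        field = record[0].strip()
        metric_type = record[1].strip()
        # A help message may itself contain commas, so rejoin the remainder.
        help_text = ", ".join(part.strip() for part in record[2:])
        rows.append((field, metric_type, help_text))
    return rows


for field, metric_type, help_text in load_fields(SAMPLE_CSV):
    print(f"{field:24} {metric_type:8} {help_text}")
```

Trimming this list to the fields a dashboard actually uses is one way to keep the number of time series small.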

1.4 Kubelet device monitoring

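dcgm-exporter collects metrics for all available GPUs on a node. In Kubernetes, however, when a pod requests GPU resources, you cannot necessarily tell in advance which GPUs will be assigned to it. Starting with v1.13, kubelet added a device monitoring feature that reports the devices assigned to each pod, including the pod name, pod namespace, and device ID, through a pod-resources socket.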

The HTTP server in dcgm-exporter connects to the kubelet pod-resources server (/var/lib/kubelet/pod-resources) to identify the GPU devices running on a pod, and adds the pod information for those GPU devices to the collected metrics.
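The enrichment step described above can be sketched as a simple join between per-GPU samples and a device-to-pod mapping. In this illustration the mapping is hard-coded; in a real exporter it would come from the kubelet pod-resources service. The function name, label names, and all sample values are assumptions made for the example.

```python
def attach_pod_labels(samples, allocations):
    """Add pod and namespace labels to samples whose GPU is assigned to a pod.

    samples:     list of (metric_name, labels_dict, value)
    allocations: dict mapping a device ID to a (namespace, pod_name) pair
    """
    enriched = []
    for name, labels, value in samples:
        merged = dict(labels)  # copy so the caller's dicts are not mutated
        owner = allocations.get(labels.get("UUID"))
        if owner is not None:
            merged["namespace"], merged["pod"] = owner
        enriched.append((name, merged, value))
    return enriched


# Hypothetical inputs: two GPUs, only one of which is assigned to a pod.
samples = [
    ("DCGM_FI_DEV_GPU_UTIL", {"gpu": "0", "UUID": "GPU-example-0"}, 98.0),
    ("DCGM_FI_DEV_GPU_UTIL", {"gpu": "1", "UUID": "GPU-example-1"}, 0.0),
]
allocations = {"GPU-example-0": ("default", "dcgmproftester")}

for name, labels, value in attach_pod_labels(samples, allocations):
    print(name, labels, value)
```

GPUs without an owning pod keep their original labels, so idle devices still show up in node-level views.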

2. GPU Monitoring

2.1 Deployment

The following are some examples of setting up dcgm-exporter. If you use the NVIDIA GPU Operator, dcgm-exporter is also one of the components it deploys.

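The documentation includes the steps for setting up a Kubernetes cluster. For brevity, this article assumes that a Kubernetes cluster already exists and is running the NVIDIA software components, such as the driver, the container runtime, and the Kubernetes device plugin. When Prometheus is deployed with the Prometheus Operator, Grafana can conveniently be deployed along with it. For simplicity, this article uses a single-node Kubernetes cluster.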

When setting up the community-provided Helm chart for the Prometheus Operator, you must expose Grafana for external access, and prometheusSpec.serviceMonitorSelectorNilUsesHelmValues must be set to false.

In short, setting up monitoring consists of running the following commands.

    $ helm repo add prometheus-community \
        https://prometheus-community.github.io/helm-charts
    $ helm repo update
    $ helm inspect values prometheus-community/kube-prometheus-stack > /tmp/kube-prometheus-stack.values
    # Edit /tmp/kube-prometheus-stack.values in your favorite editor
    # according to the documentation
    # This exposes the service via NodePort so that Prometheus/Grafana
    # are accessible outside the cluster with a browser
    $ helm install prometheus-community/kube-prometheus-stack \
        --create-namespace --namespace prometheus \
        --generate-name \
        --set prometheus.service.type=NodePort \
        --set prometheus.prometheusSpec.serviceMonitorSelectorNilUsesHelmValues=false

At this point, the cluster looks as follows, with all Prometheus pods and services running and healthy.

    $ kubectl get pods -A
    NAMESPACE    NAME                                                             READY   STATUS    RESTARTS   AGE
    kube-system  calico-kube-controllers-8f59968d4-zrsdt                          1/1     Running   0          18m
    kube-system  calico-node-c257f                                                1/1     Running   0          18m
    kube-system  coredns-f9fd979d6-c52hz                                          1/1     Running   0          19m
    kube-system  coredns-f9fd979d6-ncbdp                                          1/1     Running   0          19m
    kube-system  etcd-ip-172-31-27-93                                             1/1     Running   1          19m
    kube-system  kube-apiserver-ip-172-31-27-93                                   1/1     Running   1          19m
    kube-system  kube-controller-manager-ip-172-31-27-93                          1/1     Running   1          19m
    kube-system  kube-proxy-b9szp                                                 1/1     Running   1          19m
    kube-system  kube-scheduler-ip-172-31-27-93                                   1/1     Running   1          19m
    kube-system  nvidia-device-plugin-1602308324-jg842                            1/1     Running   0          17m
    prometheus   alertmanager-kube-prometheus-stack-1602-alertmanager-0           2/2     Running   0          92s
    prometheus   kube-prometheus-stack-1602-operator-c4bc5c4d5-f5vzc              2/2     Running   0          98s
    prometheus   kube-prometheus-stack-1602309230-grafana-6b4fc97f8f-66kdv        2/2     Running   0          98s
    prometheus   kube-prometheus-stack-1602309230-kube-state-metrics-76887bqzv2b  1/1     Running   0          98s
    prometheus   kube-prometheus-stack-1602309230-prometheus-node-exporter-rrk9l  1/1     Running   0          98s
    prometheus   prometheus-kube-prometheus-stack-1602-prometheus-0               3/3     Running   1          92s

    $ kubectl get svc -A
    NAMESPACE    NAME                                                       TYPE       CLUSTER-IP      EXTERNAL-IP  PORT(S)                       AGE
    default      kubernetes                                                 ClusterIP  10.96.0.1       <none>       443/TCP                       20m
    kube-system  kube-dns                                                   ClusterIP  10.96.0.10      <none>       53/UDP,53/TCP,9153/TCP        20m
    kube-system  kube-prometheus-stack-1602-coredns                         ClusterIP  None            <none>       9153/TCP                      2m18s
    kube-system  kube-prometheus-stack-1602-kube-controller-manager         ClusterIP  None            <none>       10252/TCP                     2m18s
    kube-system  kube-prometheus-stack-1602-kube-etcd                       ClusterIP  None            <none>       2379/TCP                      2m18s
    kube-system  kube-prometheus-stack-1602-kube-proxy                      ClusterIP  None            <none>       10249/TCP                     2m18s
    kube-system  kube-prometheus-stack-1602-kube-scheduler                  ClusterIP  None            <none>       10251/TCP                     2m18s
    kube-system  kube-prometheus-stack-1602-kubelet                         ClusterIP  None            <none>       10250/TCP,10255/TCP,4194/TCP  2m12s
    prometheus   alertmanager-operated                                      ClusterIP  None            <none>       9093/TCP,9094/TCP,9094/UDP    2m12s
    prometheus   kube-prometheus-stack-1602-alertmanager                    ClusterIP  10.104.106.174  <none>       9093/TCP                      2m18s
    prometheus   kube-prometheus-stack-1602-operator                        ClusterIP  10.98.165.148   <none>       8080/TCP,443/TCP              2m18s
    prometheus   kube-prometheus-stack-1602-prometheus                      NodePort   10.105.3.19     <none>       9090:30090/TCP                2m18s
    prometheus   kube-prometheus-stack-1602309230-grafana                   ClusterIP  10.100.178.41   <none>       80/TCP                        2m18s
    prometheus   kube-prometheus-stack-1602309230-kube-state-metrics        ClusterIP  10.100.119.13   <none>       8080/TCP                      2m18s
    prometheus   kube-prometheus-stack-1602309230-prometheus-node-exporter  ClusterIP  10.100.56.74    <none>       9100/TCP                      2m18s
    prometheus   prometheus-operated                                        ClusterIP  None            <none>       9090/TCP                      2m12s

b. Deploy dcgm-exporter

    $ helm repo add gpu-helm-charts \
        https://nvidia.github.io/gpu-monitoring-tools/helm-charts
    $ helm repo update

Install the chart with Helm:

    $ helm install \
        --generate-name \
        gpu-helm-charts/dcgm-exporter

    $ helm ls
    NAME                             NAMESPACE  REVISION  CHART                       APP VERSION
    dcgm-exporter-1-1601677302       default    1         dcgm-exporter-1.1.0         2.0.10
    nvidia-device-plugin-1601662841  default    1         nvidia-device-plugin-0.7.0  0.7.0

The Prometheus and Grafana services are exposed as follows:

    $ kubectl get svc -A
    NAMESPACE    NAME                                                       TYPE       CLUSTER-IP      EXTERNAL-IP  PORT(S)                       AGE
    default      dcgm-exporter                                              ClusterIP  10.99.34.128    <none>       9400/TCP                      43d
    default      kubernetes                                                 ClusterIP  10.96.0.1       <none>       443/TCP                       20m
    kube-system  kube-dns                                                   ClusterIP  10.96.0.10      <none>       53/UDP,53/TCP,9153/TCP        20m
    kube-system  kube-prometheus-stack-1602-coredns                         ClusterIP  None            <none>       9153/TCP                      2m18s
    kube-system  kube-prometheus-stack-1602-kube-controller-manager         ClusterIP  None            <none>       10252/TCP                     2m18s
    kube-system  kube-prometheus-stack-1602-kube-etcd                       ClusterIP  None            <none>       2379/TCP                      2m18s
    kube-system  kube-prometheus-stack-1602-kube-proxy                      ClusterIP  None            <none>       10249/TCP                     2m18s
    kube-system  kube-prometheus-stack-1602-kube-scheduler                  ClusterIP  None            <none>       10251/TCP                     2m18s
    kube-system  kube-prometheus-stack-1602-kubelet                         ClusterIP  None            <none>       10250/TCP,10255/TCP,4194/TCP  2m12s
    prometheus   alertmanager-operated                                      ClusterIP  None            <none>       9093/TCP,9094/TCP,9094/UDP    2m12s
    prometheus   kube-prometheus-stack-1602-alertmanager                    ClusterIP  10.104.106.174  <none>       9093/TCP                      2m18s
    prometheus   kube-prometheus-stack-1602-operator                        ClusterIP  10.98.165.148   <none>       8080/TCP,443/TCP              2m18s
    prometheus   kube-prometheus-stack-1602-prometheus                      NodePort   10.105.3.19     <none>       9090:30090/TCP                2m18s
    prometheus   kube-prometheus-stack-1602309230-grafana                   ClusterIP  10.100.178.41   <none>       80:32032/TCP                  2m18s
    prometheus   kube-prometheus-stack-1602309230-kube-state-metrics        ClusterIP  10.100.119.13   <none>       8080/TCP                      2m18s
    prometheus   kube-prometheus-stack-1602309230-prometheus-node-exporter  ClusterIP  10.100.56.74    <none>       9100/TCP                      2m18s
    prometheus   prometheus-operated                                        ClusterIP  None            <none>       9090/TCP                      2m12s

Open the Grafana home page through the Grafana service exposed on port 32032. Log in to the dashboard with the credentials set in the Prometheus chart: the adminPassword field in prometheus.values.

To bring up a Grafana dashboard for GPU metrics, import the NVIDIA dashboard from Grafana Dashboards (https://grafana.com/grafana/dashboards/12239).

Now run some GPU workloads. For this purpose, the DCGM community provides a CUDA load generator called dcgmproftester, which generates deterministic CUDA workloads for reading and validating GPU metrics.

To create a Pod, you must first download DCGM and build it into an image. The following script creates a container that can be used to run dcgmproftester. This container is also available in the NVIDIA DockerHub repository.

    #!/usr/bin/env bash
    set -exo pipefail

    mkdir -p /tmp/dcgm-docker
    pushd /tmp/dcgm-docker

    # Write the Dockerfile; \${...} is escaped so Docker, not the shell, expands it
    cat > Dockerfile <<EOF
    ARG BASE_DIST
    ARG CUDA_VER
    FROM nvidia/cuda:\${CUDA_VER}-base-\${BASE_DIST}
    LABEL io.k8s.display-name="NVIDIA dcgmproftester"

    ARG DCGM_VERSION

    WORKDIR /dcgm
    RUN apt-get update && apt-get install -y --no-install-recommends \
        libgomp1 \
        wget && \
        rm -rf /var/lib/apt/lists/* && \
        wget --no-check-certificate https://developer.download.nvidia.com/compute/redist/dcgm/\${DCGM_VERSION}/DEBS/datacenter-gpu-manager_\${DCGM_VERSION}_amd64.deb && \
        dpkg -i datacenter-gpu-manager_*.deb && \
        rm -f datacenter-gpu-manager_*.deb

    ENTRYPOINT ["/usr/bin/dcgmproftester11"]
    EOF

    DIR=.
    DCGM_REL_VERSION=2.0.10
    BASE_DIST=ubuntu18.04
    CUDA_VER=11.0
    IMAGE_NAME=nvidia/samples:dcgmproftester-$DCGM_REL_VERSION-cuda$CUDA_VER-$BASE_DIST

    # Build the image, passing the versions above as build arguments
    docker build --pull \
        -t "$IMAGE_NAME" \
        --build-arg DCGM_VERSION=$DCGM_REL_VERSION \
        --build-arg BASE_DIST=$BASE_DIST \
        --build-arg CUDA_VER=$CUDA_VER \
        --file Dockerfile \
        "$DIR"

    popd

Before deploying the container on the Kubernetes cluster, try running it directly with Docker. In this example, -t 1004 triggers FP16 matrix multiplication on the Tensor Cores, and -d 45 runs the test for 45 seconds. You can try other workloads by changing the -t parameter.

    Skipping CreateDcgmGroups() since DCGM validation is disabled
    CU_DEVICE_ATTRIBUTE_MAX_THREADS_PER_MULTIPROCESSOR: 1024
    CU_DEVICE_ATTRIBUTE_MULTIPROCESSOR_COUNT: 40
    CU_DEVICE_ATTRIBUTE_MAX_SHARED_MEMORY_PER_MULTIPROCESSOR: 65536
    CU_DEVICE_ATTRIBUTE_COMPUTE_CAPABILITY_MAJOR: 7
    CU_DEVICE_ATTRIBUTE_COMPUTE_CAPABILITY_MINOR: 5
    CU_DEVICE_ATTRIBUTE_GLOBAL_MEMORY_BUS_WIDTH: 256
    CU_DEVICE_ATTRIBUTE_MEMORY_CLOCK_RATE: 5001000
    Max Memory bandwidth: 320064000000 bytes (320.06 GiB)
    CudaInit completed successfully.

    Skipping WatchFields() since DCGM validation is disabled
    TensorEngineActive: generated ???, dcgm 0.000 (27605.2 gflops)
    TensorEngineActive: generated ???, dcgm 0.000 (28697.6 gflops)

Next, deploy it to the Kubernetes cluster so that the corresponding metrics can be observed in the Grafana dashboard. The following example creates the Pod:

    cat << EOF | kubectl create -f -
    apiVersion: v1
    kind: Pod
    metadata:
      name: dcgmproftester
    spec:
      restartPolicy: OnFailure
      containers:
      - name: dcgmproftester11
        image: nvidia/samples:dcgmproftester-2.0.10-cuda11.0-ubuntu18.04
        args: ["--no-dcgm-validation", "-t 1004", "-d 120"]
        resources:
          limits:
            nvidia.com/gpu: 1
        securityContext:
          capabilities:
            add: ["SYS_ADMIN"]
    EOF

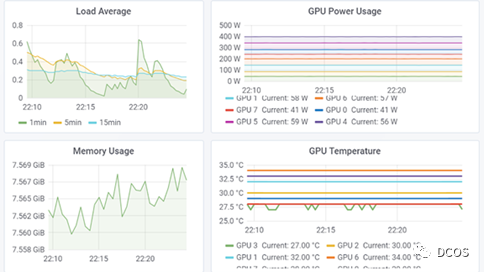

You can see the dcgmproftester pod running and healthy, followed by the metrics appearing in the Grafana dashboard. GPU utilization (GrActive) peaks at 98%, and you may find other interesting metrics as well, such as power or GPU memory.

    $ kubectl get pods -A
    NAMESPACE    NAME            READY   STATUS    RESTARTS   AGE
    ...
    default      dcgmproftester  1/1     Running   0          6s

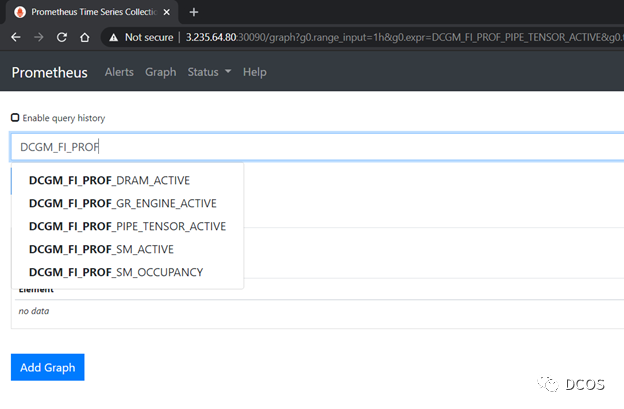

The figure shows the monitoring metrics that Prometheus retrieves from dcgm-exporter.
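The same metrics can also be read programmatically through the Prometheus HTTP API. The sketch below only builds an instant-query URL for the /api/v1/query endpoint and decodes a response of the documented vector shape; it does not contact a server. The host name node.example, the PromQL expression, and the sample response are assumptions for illustration, while port 30090 is the NodePort shown in the service listing earlier.

```python
import json
from urllib.parse import urlencode


def instant_query_url(base_url, promql):
    """Build a URL for the Prometheus instant-query endpoint."""
    return f"{base_url.rstrip('/')}/api/v1/query?{urlencode({'query': promql})}"


def extract_values(payload):
    """Map each series' 'gpu' label to its value in an instant-vector response."""
    if payload.get("status") != "success":
        raise ValueError(f"query failed: {payload!r}")
    # Each result carries value = [unix_timestamp, "value as a string"].
    return {
        series["metric"].get("gpu", ""): float(series["value"][1])
        for series in payload["data"]["result"]
    }


# Hypothetical Prometheus address; replace with a real node IP.
PROMQL = "max by (gpu) (DCGM_FI_DEV_GPU_UTIL)"
url = instant_query_url("http://node.example:30090/", PROMQL)
print(url)

# Made-up response body in the shape the API returns for an instant vector.
SAMPLE_RESPONSE = json.loads(
    '{"status": "success", "data": {"resultType": "vector", "result": ['
    '{"metric": {"gpu": "0"}, "value": [1602309230.0, "98"]}]}}'
)
print(extract_values(SAMPLE_RESPONSE))
```

Fetching the URL with any HTTP client and passing the decoded JSON to extract_values would complete the round trip.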

The dashboard also includes Tensor Core utilization. After restarting the dcgmproftester container, you can see that the Tensor Cores on the T4 reach about 87% utilization.

3. References

1. https://developer.nvidia.com/blog/monitoring-gpus-in-kubernetes-with-dcgm/