[NLP] A quick look at the PyTorch code for the transformer in NLP

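A study of the classic transformer. This article is reposted from the WeChat public account 【機器學習煉丹術】. Author: 陳亦新 (reposted with permission). Contact: WeChat cyx645016617. Discussion is welcome, so that we can improve together.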

Code walkthrough

transformer

Embedding

Encoder_MultipleLayers

Encoder

Full code

Code walkthrough

transformer

class transformer(nn.Sequential):
    def __init__(self, encoding, **config):
        super(transformer, self).__init__()
        if encoding == 'drug':
            # The third argument (50) is the maximum sequence length for drugs.
            self.emb = Embeddings(config['input_dim_drug'], config['transformer_emb_size_drug'], 50, config['transformer_dropout_rate'])
            self.encoder = Encoder_MultipleLayers(config['transformer_n_layer_drug'],
                                                  config['transformer_emb_size_drug'],
                                                  config['transformer_intermediate_size_drug'],
                                                  config['transformer_num_attention_heads_drug'],
                                                  config['transformer_attention_probs_dropout'],
                                                  config['transformer_hidden_dropout_rate'])
        elif encoding == 'protein':
            # The third argument (545) is the maximum sequence length for proteins.
            self.emb = Embeddings(config['input_dim_protein'], config['transformer_emb_size_target'], 545, config['transformer_dropout_rate'])
            self.encoder = Encoder_MultipleLayers(config['transformer_n_layer_target'],
                                                  config['transformer_emb_size_target'],
                                                  config['transformer_intermediate_size_target'],
                                                  config['transformer_num_attention_heads_target'],
                                                  config['transformer_attention_probs_dropout'],
                                                  config['transformer_hidden_dropout_rate'])

    ### parameter v (tuple of length 2) is from utils.drug2emb_encoder
    def forward(self, v):
        # `device` is expected to be a module-level torch.device.
        e = v[0].long().to(device)
        e_mask = v[1].long().to(device)
        print(e.shape,e_mask.shape)
        # [batch, seq_len] -> [batch, 1, 1, seq_len], so it broadcasts over heads and query positions.
        ex_e_mask = e_mask.unsqueeze(1).unsqueeze(2)
        # 0 at valid positions, -10000 at padded positions; added to the attention scores before softmax.
        ex_e_mask = (1.0 - ex_e_mask) * -10000.0
        emb = self.emb(e)
        encoded_layers = self.encoder(emb.float(), ex_e_mask.float())
        # Keep only the hidden state of the first position.
        return encoded_layers[:,0]

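There are two main components: an Embedding layer and an Encoder_MultipleLayers module. The input v of forward is a tuple with two elements: the first is the data, and the second is the mask, which marks the positions that hold valid data.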
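To make the mask handling concrete, here is a minimal sketch of the two mask lines from forward applied to a made-up batch. The tensor values and the all-zero attention scores are assumptions chosen for illustration; only the unsqueeze and `(1.0 - mask) * -10000.0` steps come from the code above.

```python
import torch

# Hypothetical batch: 2 sequences of length 5; 1 marks a real token, 0 marks padding.
e_mask = torch.tensor([[1, 1, 1, 0, 0],
                       [1, 1, 1, 1, 1]])

# Same two steps as in forward(): add broadcast dimensions, then map 1 -> 0 and 0 -> -10000.
ex_e_mask = e_mask.unsqueeze(1).unsqueeze(2)   # shape [batch, 1, 1, seq_len]
ex_e_mask = (1.0 - ex_e_mask) * -10000.0

# Made-up attention scores with shape [batch, heads, seq_len, seq_len].
scores = torch.zeros(2, 1, 5, 5)
probs = torch.softmax(scores + ex_e_mask, dim=-1)

print(ex_e_mask.shape)   # torch.Size([2, 1, 1, 5])
print(probs[0, 0, 0])    # padded key positions receive (numerically) zero weight
```

Because the mask is added before the softmax, padded positions are not removed from the tensor; they simply end up with negligible attention weight.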

Embedding

class Embeddings(nn.Module):
    """Construct the embeddings from protein/target, position embeddings.
    """
    def __init__(self, vocab_size, hidden_size, max_position_size, dropout_rate):
        super(Embeddings, self).__init__()
        self.word_embeddings = nn.Embedding(vocab_size, hidden_size)
        self.position_embeddings = nn.Embedding(max_position_size, hidden_size)
        self.LayerNorm = nn.LayerNorm(hidden_size)
        self.dropout = nn.Dropout(dropout_rate)

    def forward(self, input_ids):
        seq_length = input_ids.size(1)
        # Positions 0..seq_length-1, repeated for every sequence in the batch.
        position_ids = torch.arange(seq_length, dtype=torch.long, device=input_ids.device)
        position_ids = position_ids.unsqueeze(0).expand_as(input_ids)
        words_embeddings = self.word_embeddings(input_ids)
        position_embeddings = self.position_embeddings(position_ids)
        embeddings = words_embeddings + position_embeddings
        embeddings = self.LayerNorm(embeddings)
        embeddings = self.dropout(embeddings)
        return embeddings

It contains three kinds of components: Embedding (used twice, once for the tokens and once for the positions), LayerNorm, and Dropout.
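As a small illustration of how the position ids are built and combined with the token embeddings, the sketch below replays the first lines of Embeddings.forward on a made-up batch. The vocabulary size, hidden size and token ids are assumptions picked to keep the shapes easy to read.

```python
import torch
import torch.nn as nn

# Hypothetical token ids: batch of 2 sequences, each of length 4.
input_ids = torch.tensor([[5, 9, 2, 0],
                          [7, 1, 0, 0]])

seq_length = input_ids.size(1)
position_ids = torch.arange(seq_length, dtype=torch.long, device=input_ids.device)
position_ids = position_ids.unsqueeze(0).expand_as(input_ids)
print(position_ids)   # every row is [0, 1, 2, 3]

# Assumed sizes: vocabulary of 10 symbols, at most 4 positions, hidden size 8.
word_embeddings = nn.Embedding(10, 8)
position_embeddings = nn.Embedding(4, 8)

embeddings = word_embeddings(input_ids) + position_embeddings(position_ids)
print(embeddings.shape)   # torch.Size([2, 4, 8])
```

The position table must have at least as many rows as the longest sequence, which is why the model above passes 50 for drugs and 545 for proteins as max_position_size.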

torch.nn.Embedding(num_embeddings, embedding_dim, padding_idx=None,
                   max_norm=None, norm_type=2.0, scale_grad_by_freq=False,
                   sparse=False, _weight=None)

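It is a simple lookup table that stores the embedding vectors of a fixed-size dictionary. In other words, given an index, the embedding layer returns the embedding vector for that index; after training, the embedding vectors reflect the semantic relationships among the symbols that the indices stand for.

The input is a list of indices, and the output is the list of corresponding embedding vectors.

num_embeddings (int): the size of the dictionary. For example, if 5000 distinct words appear in total, pass 5000; the valid indices are then 0 to 4999.

embedding_dim (int): the dimension of each embedding vector, that is, how many dimensions are used to represent one symbol.

padding_idx (int, optional): the padding id. For example, if the input length is 100 but sentences have different lengths, the shorter ones are filled up with one fixed number, and this argument specifies that number. The embedding vector at padding_idx is initialized to all zeros and receives no gradient, so it is not updated during training. Keeping padded positions out of the attention computation is the job of the mask, not of padding_idx.

max_norm (float, optional): the maximum norm. If the norm of an embedding vector exceeds this bound, the vector is renormalized.

norm_type (float, optional): which p-norm to compute when comparing against max_norm. The default is the 2-norm.

scale_grad_by_freq (bool, optional): scale the gradients by the inverse of the frequency of the words in the mini-batch. The default is False.

sparse (bool, optional): if True, the gradient with respect to the weight matrix becomes a sparse tensor.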

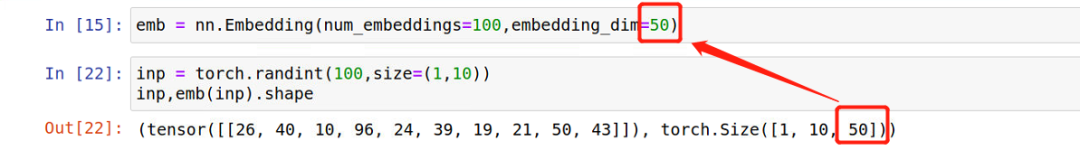

An example:

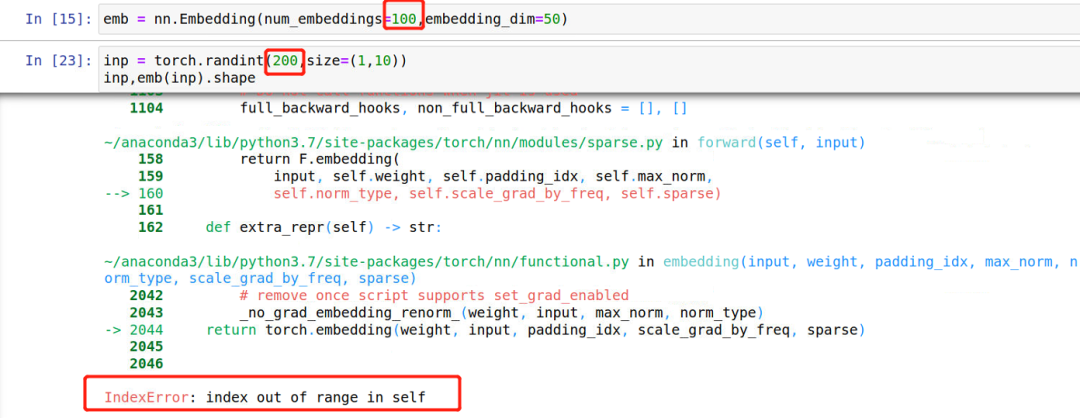

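If the largest integer exceeds the configured dictionary capacity (that is, an index is not smaller than num_embeddings), the lookup fails with an error.

Embedding has learnable parameters: a weight matrix of shape num_embeddings * embedding_dim.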
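The points above about lookup, the learnable weight matrix, and out-of-range indices can be checked with a short sketch. The sizes (10 symbols, 3 dimensions) and the index values are assumptions for illustration. The exact exception type for an out-of-range index can differ across PyTorch versions and devices, so the sketch catches both IndexError and RuntimeError.

```python
import torch
import torch.nn as nn

# Assumed sizes: a dictionary of 10 symbols, each mapped to a 3-dim vector; index 0 is padding.
emb = nn.Embedding(num_embeddings=10, embedding_dim=3, padding_idx=0)

ids = torch.tensor([[1, 2, 0],
                    [4, 9, 0]])
out = emb(ids)
print(out.shape)          # torch.Size([2, 3, 3]): one 3-dim vector per index
print(emb.weight.shape)   # torch.Size([10, 3]): the learnable lookup table
print(emb.weight[0])      # the padding row starts as all zeros

# Index 10 is outside the valid range 0..9, so the lookup fails.
try:
    emb(torch.tensor([10]))
    raised = False
except (IndexError, RuntimeError) as err:
    raised = True
    print("lookup failed:", type(err).__name__)
```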

Encoder_MultipleLayers

class Encoder_MultipleLayers(nn.Module):
    def __init__(self, n_layer, hidden_size, intermediate_size, num_attention_heads, attention_probs_dropout_prob, hidden_dropout_prob):
        super(Encoder_MultipleLayers, self).__init__()
        layer = Encoder(hidden_size, intermediate_size, num_attention_heads, attention_probs_dropout_prob, hidden_dropout_prob)
        # n_layer independent copies of the same Encoder block.
        self.layer = nn.ModuleList([copy.deepcopy(layer) for _ in range(n_layer)])

    def forward(self, hidden_states, attention_mask, output_all_encoded_layers=True):
        all_encoder_layers = []
        for layer_module in self.layer:
            hidden_states = layer_module(hidden_states, attention_mask)
        return hidden_states

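The embedding in the transformer converts the data into the corresponding vectors. Encoder_MultipleLayers is the key part that extracts features. Its structure is simple: n_layer Encoder blocks stacked one after another.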
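The stacking pattern can be sketched on its own. In the snippet below, nn.Linear is only a stand-in for the Encoder block (an assumption to keep the example self-contained); the `nn.ModuleList` plus `copy.deepcopy` construction is the same as in Encoder_MultipleLayers.

```python
import copy
import torch
import torch.nn as nn

# nn.Linear stands in for the Encoder block here; the stacking pattern is what matters.
layer = nn.Linear(4, 4)
layers = nn.ModuleList([copy.deepcopy(layer) for _ in range(3)])

# The copies start from identical values but own separate parameter storage,
# so they diverge once training updates them.
same_init = torch.equal(layers[0].weight, layers[1].weight)
shared_storage = layers[0].weight.data_ptr() == layers[1].weight.data_ptr()
print(same_init, shared_storage)   # True False

# Each layer's output is the next layer's input.
x = torch.randn(2, 4)
for layer_module in layers:
    x = layer_module(x)

n_params = sum(p.numel() for p in layers.parameters())
print(x.shape, n_params)   # torch.Size([2, 4]) 60
```

Using deepcopy rather than reusing one module instance means the parameter count grows linearly with n_layer; reusing the same instance would instead share weights across layers.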

Encoder

class Encoder(nn.Module):
    def __init__(self, hidden_size, intermediate_size, num_attention_heads, attention_probs_dropout_prob, hidden_dropout_prob):
        super(Encoder, self).__init__()
        self.attention = Attention(hidden_size, num_attention_heads, attention_probs_dropout_prob, hidden_dropout_prob)
        self.intermediate = Intermediate(hidden_size, intermediate_size)
        self.output = Output(intermediate_size, hidden_size, hidden_dropout_prob)

    def forward(self, hidden_states, attention_mask):
        attention_output = self.attention(hidden_states, attention_mask)
        intermediate_output = self.intermediate(attention_output)
        # Output adds attention_output back as a residual connection.
        layer_output = self.output(intermediate_output, attention_output)
        return layer_output

It contains an Attention part, an Intermediate part, and an Output part.

class Attention(nn.Module):
    def __init__(self, hidden_size, num_attention_heads, attention_probs_dropout_prob, hidden_dropout_prob):
        super(Attention, self).__init__()
        self.self = SelfAttention(hidden_size, num_attention_heads, attention_probs_dropout_prob)
        self.output = SelfOutput(hidden_size, hidden_dropout_prob)

    def forward(self, input_tensor, attention_mask):
        self_output = self.self(input_tensor, attention_mask)
        attention_output = self.output(self_output, input_tensor)
        return attention_output

class SelfAttention(nn.Module):
    def __init__(self, hidden_size, num_attention_heads, attention_probs_dropout_prob):
        super(SelfAttention, self).__init__()
        if hidden_size % num_attention_heads != 0:
            raise ValueError(
                "The hidden size (%d) is not a multiple of the number of attention "
                "heads (%d)" % (hidden_size, num_attention_heads))
        self.num_attention_heads = num_attention_heads
        self.attention_head_size = int(hidden_size / num_attention_heads)
        self.all_head_size = self.num_attention_heads * self.attention_head_size

        self.query = nn.Linear(hidden_size, self.all_head_size)
        self.key = nn.Linear(hidden_size, self.all_head_size)
        self.value = nn.Linear(hidden_size, self.all_head_size)

        self.dropout = nn.Dropout(attention_probs_dropout_prob)

    def transpose_for_scores(self, x):
        # num_attention_heads = 8, attention_head_size = 128 / 8 = 16
        new_x_shape = x.size()[:-1] + (self.num_attention_heads, self.attention_head_size)
        x = x.view(*new_x_shape)
        return x.permute(0, 2, 1, 3)

    def forward(self, hidden_states, attention_mask):
        # hidden_states.shape = [batch,50,128]
        mixed_query_layer = self.query(hidden_states)
        mixed_key_layer = self.key(hidden_states)
        mixed_value_layer = self.value(hidden_states)

        query_layer = self.transpose_for_scores(mixed_query_layer)
        key_layer = self.transpose_for_scores(mixed_key_layer)
        value_layer = self.transpose_for_scores(mixed_value_layer)
        # query_layer.shape = [batch,8,50,16]

        # Take the dot product between "query" and "key" to get the raw attention scores.
        attention_scores = torch.matmul(query_layer, key_layer.transpose(-1, -2))
        # attention_scores.shape = [batch,8,50,50]
        attention_scores = attention_scores / math.sqrt(self.attention_head_size)

        attention_scores = attention_scores + attention_mask

        # Normalize the attention scores to probabilities.
        attention_probs = nn.Softmax(dim=-1)(attention_scores)

        # This is actually dropping out entire tokens to attend to, which might
        # seem a bit unusual, but is taken from the original Transformer paper.
        attention_probs = self.dropout(attention_probs)

        context_layer = torch.matmul(attention_probs, value_layer)
        context_layer = context_layer.permute(0, 2, 1, 3).contiguous()
        new_context_layer_shape = context_layer.size()[:-2] + (self.all_head_size,)
        context_layer = context_layer.view(*new_context_layer_shape)
        return context_layer

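This part is similar to the usual ViT pipeline. The transformer went from NLP to CV, but looking back at the NLP transformer from the CV side of ViT has its own charm. The point to pay attention to is the concept of multi-head. Suppose hidden-size is 128 and the number of heads is set to 8; this is somewhat like the number of channels in a convolution. Each 128-dimensional token vector is split into 8 pieces of 16 dimensions, and self-attention is computed separately on each piece. Comparing multi-head to convolution seems reasonable, and comparing it to grouped convolution seems OK too:

As convolution: if the size of each head is fixed at 16, the heads are like channels, so adding heads feels like adding channels to the convolution kernels.

As grouped convolution: if hidden-size is fixed at 128, the number of heads is the number of groups, so adding heads is like using more groups in a grouped convolution, which lowers the computation. This analogy is loose: in this code the query, key and value projections are full nn.Linear(hidden_size, all_head_size) layers, so their parameter count does not shrink as heads are added.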
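The head split described above can be traced with shapes alone. The following is a minimal sketch using the sizes from the code comments (hidden size 128, 8 heads, sequence length 50); the batch size, the random input and the helper name `split_heads` are assumptions, and the query tensor is reused as the key purely to show the score shape.

```python
import torch
import torch.nn as nn

batch, seq_len, hidden_size, num_heads = 2, 50, 128, 8
head_size = hidden_size // num_heads   # 16

def split_heads(x, num_heads):
    """[batch, seq_len, hidden] -> [batch, heads, seq_len, head_size] (hypothetical helper)."""
    new_shape = x.size()[:-1] + (num_heads, x.size(-1) // num_heads)
    return x.view(*new_shape).permute(0, 2, 1, 3)

x = torch.randn(batch, seq_len, hidden_size)
q = split_heads(x, num_heads)
print(q.shape)        # torch.Size([2, 8, 50, 16])

# Each head computes its own seq_len x seq_len score matrix.
scores = torch.matmul(q, q.transpose(-1, -2)) / head_size ** 0.5
print(scores.shape)   # torch.Size([2, 8, 50, 50])

# Merging the heads back is the inverse permute + view and recovers the input exactly.
merged = q.permute(0, 2, 1, 3).contiguous().view(batch, seq_len, hidden_size)
print(torch.equal(merged, x))   # True

# The projection is a full Linear layer, so its size does not depend on num_heads.
query = nn.Linear(hidden_size, hidden_size)
n_params = sum(p.numel() for p in query.parameters())
print(n_params)       # 16512 = 128 * 128 + 128
```

The split is only a reshape of the last dimension, which is why hidden_size must be divisible by the number of heads.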

The rest of the code is just FC + LayerNorm + Dropout, so it is not discussed further.

Full code

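import torch
import torch.nn as nn
import torch.nn.functional as F
import copy, math

# Assumption: the listing uses a module-level `device` without defining it;
# this is one common way to define it.
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')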

class Embeddings(nn.Module):
    """Construct the embeddings from protein/target, position embeddings.
    """
    def __init__(self, vocab_size, hidden_size, max_position_size, dropout_rate):
        super(Embeddings, self).__init__()
        self.word_embeddings = nn.Embedding(vocab_size, hidden_size)
        self.position_embeddings = nn.Embedding(max_position_size, hidden_size)
        self.LayerNorm = nn.LayerNorm(hidden_size)
        self.dropout = nn.Dropout(dropout_rate)

    def forward(self, input_ids):
        seq_length = input_ids.size(1)
        position_ids = torch.arange(seq_length, dtype=torch.long, device=input_ids.device)
        position_ids = position_ids.unsqueeze(0).expand_as(input_ids)
        words_embeddings = self.word_embeddings(input_ids)
        position_embeddings = self.position_embeddings(position_ids)
        embeddings = words_embeddings + position_embeddings
        embeddings = self.LayerNorm(embeddings)
        embeddings = self.dropout(embeddings)
        return embeddings

class Encoder_MultipleLayers(nn.Module):
    def __init__(self, n_layer, hidden_size, intermediate_size, num_attention_heads, attention_probs_dropout_prob, hidden_dropout_prob):
        super(Encoder_MultipleLayers, self).__init__()
        layer = Encoder(hidden_size, intermediate_size, num_attention_heads, attention_probs_dropout_prob, hidden_dropout_prob)
        self.layer = nn.ModuleList([copy.deepcopy(layer) for _ in range(n_layer)])

    def forward(self, hidden_states, attention_mask, output_all_encoded_layers=True):
        all_encoder_layers = []
        for layer_module in self.layer:
            hidden_states = layer_module(hidden_states, attention_mask)
            #if output_all_encoded_layers:
            #    all_encoder_layers.append(hidden_states)
        #if not output_all_encoded_layers:
        #    all_encoder_layers.append(hidden_states)
        return hidden_states

class Encoder(nn.Module):
    def __init__(self, hidden_size, intermediate_size, num_attention_heads, attention_probs_dropout_prob, hidden_dropout_prob):
        super(Encoder, self).__init__()
        self.attention = Attention(hidden_size, num_attention_heads, attention_probs_dropout_prob, hidden_dropout_prob)
        self.intermediate = Intermediate(hidden_size, intermediate_size)
        self.output = Output(intermediate_size, hidden_size, hidden_dropout_prob)

    def forward(self, hidden_states, attention_mask):
        attention_output = self.attention(hidden_states, attention_mask)
        intermediate_output = self.intermediate(attention_output)
        layer_output = self.output(intermediate_output, attention_output)
        return layer_output

class Attention(nn.Module):
    def __init__(self, hidden_size, num_attention_heads, attention_probs_dropout_prob, hidden_dropout_prob):
        super(Attention, self).__init__()
        self.self = SelfAttention(hidden_size, num_attention_heads, attention_probs_dropout_prob)
        self.output = SelfOutput(hidden_size, hidden_dropout_prob)

    def forward(self, input_tensor, attention_mask):
        self_output = self.self(input_tensor, attention_mask)
        attention_output = self.output(self_output, input_tensor)
        return attention_output

class SelfAttention(nn.Module):
    def __init__(self, hidden_size, num_attention_heads, attention_probs_dropout_prob):
        super(SelfAttention, self).__init__()
        if hidden_size % num_attention_heads != 0:
            raise ValueError(
                "The hidden size (%d) is not a multiple of the number of attention "
                "heads (%d)" % (hidden_size, num_attention_heads))
        self.num_attention_heads = num_attention_heads
        self.attention_head_size = int(hidden_size / num_attention_heads)
        self.all_head_size = self.num_attention_heads * self.attention_head_size

        self.query = nn.Linear(hidden_size, self.all_head_size)
        self.key = nn.Linear(hidden_size, self.all_head_size)
        self.value = nn.Linear(hidden_size, self.all_head_size)

        self.dropout = nn.Dropout(attention_probs_dropout_prob)

    def transpose_for_scores(self, x):
        new_x_shape = x.size()[:-1] + (self.num_attention_heads, self.attention_head_size)
        x = x.view(*new_x_shape)
        return x.permute(0, 2, 1, 3)

    def forward(self, hidden_states, attention_mask):
        mixed_query_layer = self.query(hidden_states)
        mixed_key_layer = self.key(hidden_states)
        mixed_value_layer = self.value(hidden_states)

        query_layer = self.transpose_for_scores(mixed_query_layer)
        key_layer = self.transpose_for_scores(mixed_key_layer)
        value_layer = self.transpose_for_scores(mixed_value_layer)

        # Take the dot product between "query" and "key" to get the raw attention scores.
        attention_scores = torch.matmul(query_layer, key_layer.transpose(-1, -2))
        attention_scores = attention_scores / math.sqrt(self.attention_head_size)

        attention_scores = attention_scores + attention_mask

        # Normalize the attention scores to probabilities.
        attention_probs = nn.Softmax(dim=-1)(attention_scores)

        # This is actually dropping out entire tokens to attend to, which might
        # seem a bit unusual, but is taken from the original Transformer paper.
        attention_probs = self.dropout(attention_probs)

        context_layer = torch.matmul(attention_probs, value_layer)
        context_layer = context_layer.permute(0, 2, 1, 3).contiguous()
        new_context_layer_shape = context_layer.size()[:-2] + (self.all_head_size,)
        context_layer = context_layer.view(*new_context_layer_shape)
        return context_layer

class SelfOutput(nn.Module):
    def __init__(self, hidden_size, hidden_dropout_prob):
        super(SelfOutput, self).__init__()
        self.dense = nn.Linear(hidden_size, hidden_size)
        self.LayerNorm = nn.LayerNorm(hidden_size)
        self.dropout = nn.Dropout(hidden_dropout_prob)

    def forward(self, hidden_states, input_tensor):
        hidden_states = self.dense(hidden_states)
        hidden_states = self.dropout(hidden_states)
        # Residual connection followed by LayerNorm.
        hidden_states = self.LayerNorm(hidden_states + input_tensor)
        return hidden_states

class Intermediate(nn.Module):
    def __init__(self, hidden_size, intermediate_size):
        super(Intermediate, self).__init__()
        self.dense = nn.Linear(hidden_size, intermediate_size)

    def forward(self, hidden_states):
        hidden_states = self.dense(hidden_states)
        hidden_states = F.relu(hidden_states)
        return hidden_states

class Output(nn.Module):
    def __init__(self, intermediate_size, hidden_size, hidden_dropout_prob):
        super(Output, self).__init__()
        self.dense = nn.Linear(intermediate_size, hidden_size)
        self.LayerNorm = nn.LayerNorm(hidden_size)
        self.dropout = nn.Dropout(hidden_dropout_prob)

    def forward(self, hidden_states, input_tensor):
        hidden_states = self.dense(hidden_states)
        hidden_states = self.dropout(hidden_states)
        # Residual connection followed by LayerNorm.
        hidden_states = self.LayerNorm(hidden_states + input_tensor)
        return hidden_states

class transformer(nn.Sequential):
    def __init__(self, encoding, **config):
        super(transformer, self).__init__()
        if encoding == 'drug':
            self.emb = Embeddings(config['input_dim_drug'], config['transformer_emb_size_drug'], 50, config['transformer_dropout_rate'])
            self.encoder = Encoder_MultipleLayers(config['transformer_n_layer_drug'],
                                                  config['transformer_emb_size_drug'],
                                                  config['transformer_intermediate_size_drug'],
                                                  config['transformer_num_attention_heads_drug'],
                                                  config['transformer_attention_probs_dropout'],
                                                  config['transformer_hidden_dropout_rate'])
        elif encoding == 'protein':
            self.emb = Embeddings(config['input_dim_protein'], config['transformer_emb_size_target'], 545, config['transformer_dropout_rate'])
            self.encoder = Encoder_MultipleLayers(config['transformer_n_layer_target'],
                                                  config['transformer_emb_size_target'],
                                                  config['transformer_intermediate_size_target'],
                                                  config['transformer_num_attention_heads_target'],
                                                  config['transformer_attention_probs_dropout'],
                                                  config['transformer_hidden_dropout_rate'])

    ### parameter v (tuple of length 2) is from utils.drug2emb_encoder
    def forward(self, v):
        # `device` is expected to be a module-level torch.device.
        e = v[0].long().to(device)
        e_mask = v[1].long().to(device)
        print(e.shape,e_mask.shape)
        ex_e_mask = e_mask.unsqueeze(1).unsqueeze(2)
        ex_e_mask = (1.0 - ex_e_mask) * -10000.0

        emb = self.emb(e)
        encoded_layers = self.encoder(emb.float(), ex_e_mask.float())
        return encoded_layers[:,0]
