Scrapy Source Code Analysis: The RetryMiddleware Module


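After more than a month, the Scrapy series finally gets a major update. Today's topic is the RetryMiddleware downloader middleware. If you spot any mistakes in the article, corrections are very welcome.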

I. Questions to Consider

II. Consulting the Documentation

III. Source Code Analysis

IV. Rewriting the Source

V. Summary

A Short Story

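Nana is a web-scraping engineer, and she recently ran into a problem while collecting data. Because of a task backlog, her proxies timed out, and from then on none of her requests could be downloaded. Nana was frustrated and didn't know how to deal with this kind of problem. Then she read an article by TheWeiJun, and the problem was solved right away. Let's see how they did it.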

I. Questions to Consider

Question

① When using the Scrapy framework, how can we make sure that a failed request still eventually succeeds?

Question

② How does Scrapy's retry mechanism work? How many times does it retry by default, and under what conditions is it triggered?

Question

③ Which status codes trigger a retry, and can we define them ourselves?

Question

④ During retries, how can Scrapy switch to a new proxy on the fly, and how can dead proxies be removed?

With these questions in mind, let's dig into the Scrapy source code. I hope you find it useful!

II. Consulting the Documentation

1. Open the official documentation and search for the RetryMiddleware module. The search results are shown below:

Note: The search results show that the official documentation describes RetryMiddleware. Let's click through and read the official explanation.

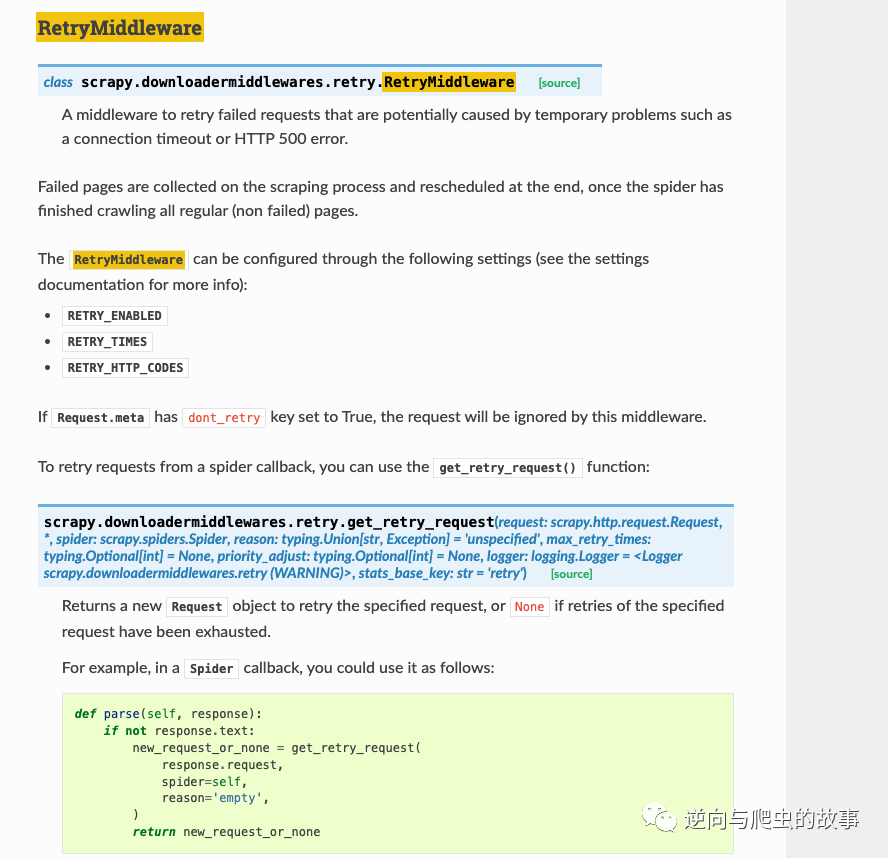

2. Click the search result to open the official description of the module. A screenshot follows:

Note: From the screenshot, you probably already have partial answers to the questions above. The picture is still not complete, so let's move on to the source code analysis.

III. Source Code Analysis

The source code of the RetryMiddleware module is as follows:

```python
from logging import getLogger, Logger
from typing import Optional, Union

from twisted.internet import defer
from twisted.internet.error import (
    ConnectError,
    ConnectionDone,
    ConnectionLost,
    ConnectionRefusedError,
    DNSLookupError,
    TCPTimedOutError,
    TimeoutError,
)
from twisted.web.client import ResponseFailed

from scrapy.core.downloader.handlers.http11 import TunnelError
from scrapy.exceptions import NotConfigured
from scrapy.http.request import Request
from scrapy.spiders import Spider
from scrapy.utils.python import global_object_name
from scrapy.utils.response import response_status_message

retry_logger = getLogger(__name__)


def get_retry_request(
    request: Request,
    *,
    spider: Spider,
    reason: Union[str, Exception] = 'unspecified',
    max_retry_times: Optional[int] = None,
    priority_adjust: Optional[int] = None,
    logger: Logger = retry_logger,
    stats_base_key: str = 'retry',
):
    settings = spider.crawler.settings
    stats = spider.crawler.stats
    retry_times = request.meta.get('retry_times', 0) + 1
    if max_retry_times is None:
        max_retry_times = request.meta.get('max_retry_times')
        if max_retry_times is None:
            max_retry_times = settings.getint('RETRY_TIMES')
    if retry_times <= max_retry_times:
        logger.debug(
            "Retrying %(request)s (failed %(retry_times)d times): %(reason)s",
            {'request': request, 'retry_times': retry_times, 'reason': reason},
            extra={'spider': spider}
        )
        new_request: Request = request.copy()
        new_request.meta['retry_times'] = retry_times
        new_request.dont_filter = True
        if priority_adjust is None:
            priority_adjust = settings.getint('RETRY_PRIORITY_ADJUST')
        new_request.priority = request.priority + priority_adjust

        if callable(reason):
            reason = reason()
        if isinstance(reason, Exception):
            reason = global_object_name(reason.__class__)

        stats.inc_value(f'{stats_base_key}/count')
        stats.inc_value(f'{stats_base_key}/reason_count/{reason}')
        return new_request
    else:
        stats.inc_value(f'{stats_base_key}/max_reached')
        logger.error(
            "Gave up retrying %(request)s (failed %(retry_times)d times): "
            "%(reason)s",
            {'request': request, 'retry_times': retry_times, 'reason': reason},
            extra={'spider': spider},
        )
        return None


class RetryMiddleware:

    # IOError is raised by the HttpCompression middleware when trying to
    # decompress an empty response
    EXCEPTIONS_TO_RETRY = (defer.TimeoutError, TimeoutError, DNSLookupError,
                           ConnectionRefusedError, ConnectionDone, ConnectError,
                           ConnectionLost, TCPTimedOutError, ResponseFailed,
                           IOError, TunnelError)

    def __init__(self, settings):
        if not settings.getbool('RETRY_ENABLED'):
            raise NotConfigured
        self.max_retry_times = settings.getint('RETRY_TIMES')
        self.retry_http_codes = set(int(x) for x in settings.getlist('RETRY_HTTP_CODES'))
        self.priority_adjust = settings.getint('RETRY_PRIORITY_ADJUST')

    @classmethod
    def from_crawler(cls, crawler):
        return cls(crawler.settings)

    def process_response(self, request, response, spider):
        if request.meta.get('dont_retry', False):
            return response
        if response.status in self.retry_http_codes:
            reason = response_status_message(response.status)
            return self._retry(request, reason, spider) or response
        return response

    def process_exception(self, request, exception, spider):
        if (
            isinstance(exception, self.EXCEPTIONS_TO_RETRY)
            and not request.meta.get('dont_retry', False)
        ):
            return self._retry(request, exception, spider)

    def _retry(self, request, reason, spider):
        max_retry_times = request.meta.get('max_retry_times', self.max_retry_times)
        priority_adjust = request.meta.get('priority_adjust', self.priority_adjust)
        return get_retry_request(
            request,
            reason=reason,
            spider=spider,
            max_retry_times=max_retry_times,
            priority_adjust=priority_adjust,
        )
```

Walkthrough: the module is only about 94 lines long, yet it handles several jobs. Let's go through it one function at a time.

The from_crawler method

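```python
# Class method: Scrapy calls it to create the middleware instance, passing in
# the crawler; the crawler's settings object is forwarded to __init__
@classmethod
def from_crawler(cls, crawler):
    return cls(crawler.settings)
```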

The __init__ method

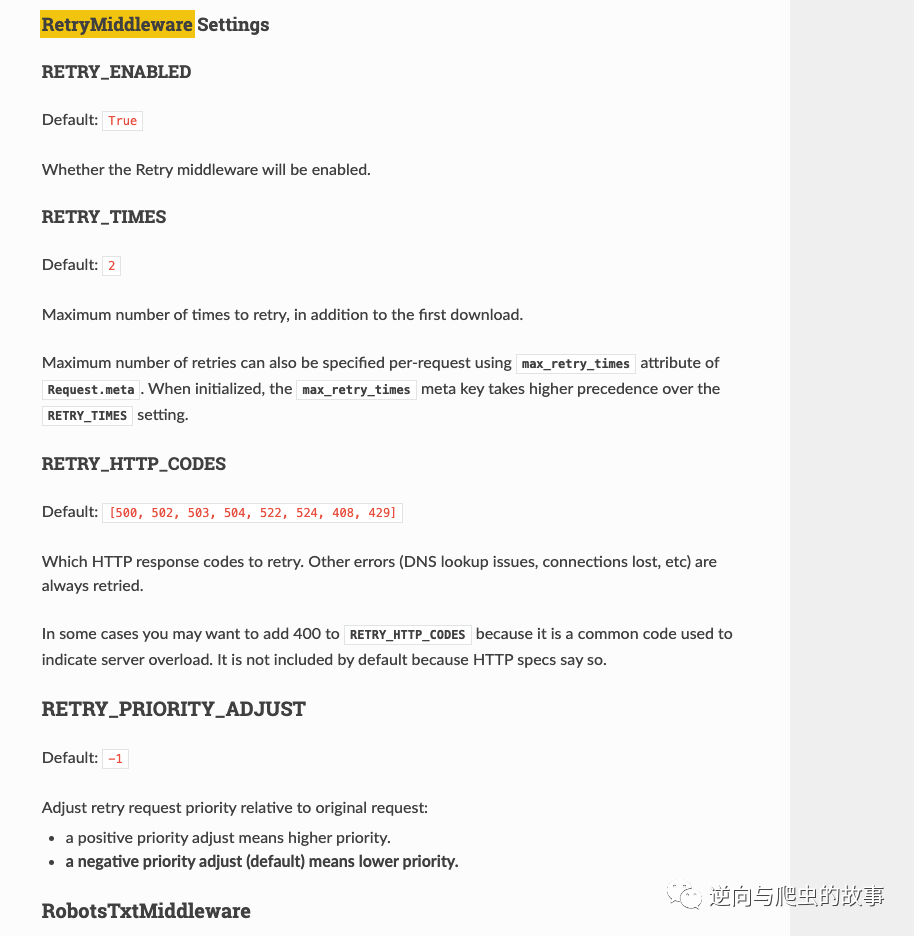

"""這里涉及到了settings.py配置文件中定義的一些參數(shù)。RETRY_ENABLED: 用于開啟中間件,默認為TrueRETRY_TIMES: 重試次數(shù), 默認為2RETRY_HTTP_CODES: 遇到哪些返回狀態(tài)碼需要重試, 一個列表,默認為[500, 503, 504, 400, 408]RETRY_PRIORITY_ADJUST:調整相對于原始請求的重試請求優(yōu)先級,默認為-1"""def __init__(self, settings):if not settings.getbool('RETRY_ENABLED'):raise NotConfiguredself.max_retry_times = settings.getint('RETRY_TIMES')self.retry_http_codes = set(int(x) for x in settings.getlist('RETRY_HTTP_CODES'))self.priority_adjust = settings.getint('RETRY_PRIORITY_ADJUST')

The process_response method

The process_exception method

```python
EXCEPTIONS_TO_RETRY = (defer.TimeoutError, TimeoutError, DNSLookupError,
                       ConnectionRefusedError, ConnectionDone, ConnectError,
                       ConnectionLost, TCPTimedOutError, ResponseFailed,
                       IOError, TunnelError)

def process_response(self, request, response, spider):
    # Decide whether this response triggers a retry (question ② above).
    # Requests with meta['dont_retry'] = True are never retried.
    if request.meta.get('dont_retry', False):
        return response
    # If the status code is in the retry list, call _retry. Once retries are
    # exhausted, _retry returns None and the original response is passed on.
    if response.status in self.retry_http_codes:
        reason = response_status_message(response.status)
        return self._retry(request, reason, spider) or response
    # Otherwise return the response unchanged. By default HttpErrorMiddleware
    # later drops non-2xx responses, so the spider callback normally sees only
    # successful ones.
    return response

def process_exception(self, request, exception, spider):
    # Retry if the exception is one of EXCEPTIONS_TO_RETRY and dont_retry is not
    # set; returning None lets other middlewares and errbacks handle it.
    if (
        isinstance(exception, self.EXCEPTIONS_TO_RETRY)
        and not request.meta.get('dont_retry', False)
    ):
        return self._retry(request, exception, spider)
```
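You can model the control flow of these two hooks without Scrapy. The sketch below is simplified. Plain dicts stand in for Request and Response, and the builtin TimeoutError and ConnectionError stand in for the Twisted exceptions. The limit of 2 mirrors the default RETRY_TIMES. None of these names are Scrapy APIs:

```python
from typing import Optional

RETRY_HTTP_CODES = {500, 502, 503, 504, 522, 524, 408, 429}
# Builtin stand-ins for the Twisted network errors in EXCEPTIONS_TO_RETRY
EXCEPTIONS_TO_RETRY = (TimeoutError, ConnectionError)
MAX_RETRY_TIMES = 2  # mirrors the default RETRY_TIMES


def _retry(request: dict) -> Optional[dict]:
    """Return a copy with retry_times + 1, or None once the limit is exceeded."""
    retry_times = request["meta"].get("retry_times", 0) + 1
    if retry_times > request["meta"].get("max_retry_times", MAX_RETRY_TIMES):
        return None
    return {**request, "meta": {**request["meta"], "retry_times": retry_times}}


def process_response(request: dict, response: dict) -> dict:
    if request["meta"].get("dont_retry", False):
        return response                    # retrying disabled for this request
    if response["status"] in RETRY_HTTP_CODES:
        # A new request replaces the response; once retries are exhausted,
        # _retry returns None and `or` falls back to the original response
        return _retry(request) or response
    return response                        # e.g. 200 or 404 passes straight through


def process_exception(request: dict, exception: Exception) -> Optional[dict]:
    if isinstance(exception, EXCEPTIONS_TO_RETRY) and not request["meta"].get("dont_retry", False):
        return _retry(request)
    return None                            # other exceptions go to later handlers
```

In real Scrapy, a downloader middleware that returns a Request from process_response or process_exception causes that request to be rescheduled. Returning the Response lets processing continue as normal.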

The _retry method

The get_retry_request function

```python
# Reads the maximum retry count and the priority adjustment (values in
# request.meta override the settings), then delegates to get_retry_request
def _retry(self, request, reason, spider):
    max_retry_times = request.meta.get('max_retry_times', self.max_retry_times)
    priority_adjust = request.meta.get('priority_adjust', self.priority_adjust)
    return get_retry_request(
        request,
        reason=reason,
        spider=spider,
        max_retry_times=max_retry_times,
        priority_adjust=priority_adjust,
    )


# get_retry_request increments the retry count and compares it with the maximum:
# - If it is less than or equal to the maximum: copy the request with copy(),
#   store the new retry_times in its meta, and set dont_filter = True so the
#   duplicate filter does not drop it for having an already-seen URL.
# - If it exceeds the maximum: increment the retry/max_reached stat, give up on
#   the request, and log it at ERROR level.
def get_retry_request(
    request: Request,
    *,
    spider: Spider,
    reason: Union[str, Exception] = 'unspecified',
    max_retry_times: Optional[int] = None,
    priority_adjust: Optional[int] = None,
    logger: Logger = retry_logger,
    stats_base_key: str = 'retry',
):
    settings = spider.crawler.settings
    stats = spider.crawler.stats
    retry_times = request.meta.get('retry_times', 0) + 1
    if max_retry_times is None:
        max_retry_times = request.meta.get('max_retry_times')
        if max_retry_times is None:
            max_retry_times = settings.getint('RETRY_TIMES')
    if retry_times <= max_retry_times:
        logger.debug(
            "Retrying %(request)s (failed %(retry_times)d times): %(reason)s",
            {'request': request, 'retry_times': retry_times, 'reason': reason},
            extra={'spider': spider}
        )
        new_request: Request = request.copy()
        new_request.meta['retry_times'] = retry_times
        new_request.dont_filter = True
        if priority_adjust is None:
            priority_adjust = settings.getint('RETRY_PRIORITY_ADJUST')
        new_request.priority = request.priority + priority_adjust

        if callable(reason):
            reason = reason()
        if isinstance(reason, Exception):
            reason = global_object_name(reason.__class__)

        stats.inc_value(f'{stats_base_key}/count')
        stats.inc_value(f'{stats_base_key}/reason_count/{reason}')
        return new_request
    else:
        stats.inc_value(f'{stats_base_key}/max_reached')
        logger.error(
            "Gave up retrying %(request)s (failed %(retry_times)d times): "
            "%(reason)s",
            {'request': request, 'retry_times': retry_times, 'reason': reason},
            extra={'spider': spider},
        )
        return None
```
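To make question ② concrete, here is a framework-free sketch of the counting rule in get_retry_request. FakeRequest is a hypothetical stand-in for scrapy.Request, and logging and stats are left out. The sketch shows two things. With the default RETRY_TIMES = 2, a URL that always fails is downloaded three times: one original attempt plus two retries. Each retry's priority is also one lower than the previous attempt's:

```python
from dataclasses import dataclass, field, replace
from typing import Optional


@dataclass
class FakeRequest:
    """Hypothetical stand-in holding only the fields get_retry_request touches."""
    url: str
    priority: int = 0
    dont_filter: bool = False
    meta: dict = field(default_factory=dict)


def get_retry_request(request: FakeRequest, max_retry_times: int = 2,
                      priority_adjust: int = -1) -> Optional[FakeRequest]:
    retry_times = request.meta.get("retry_times", 0) + 1
    if retry_times <= max_retry_times:
        # Copy the request (new meta dict, original untouched), record the
        # attempt count, bypass the duplicate filter and lower the priority
        return replace(request,
                       meta={**request.meta, "retry_times": retry_times},
                       dont_filter=True,
                       priority=request.priority + priority_adjust)
    return None  # where Scrapy logs "Gave up retrying ..." at ERROR level


def count_downloads(max_retry_times: int) -> int:
    """Simulate a URL whose every download fails; count the attempts."""
    request: Optional[FakeRequest] = FakeRequest("https://example.com")
    downloads = 0
    while request is not None:
        downloads += 1  # this attempt fails, so ask for a retry
        request = get_retry_request(request, max_retry_times)
    return downloads
```

For example, `count_downloads(2)` returns 3.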

Section summary: that concludes the source code analysis. Next, let's rewrite the middleware to solve Nana's problem.

IV. Rewriting the Source

The complete code of the rewritten RetryMiddleware is as follows:

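```python
from twisted.internet import defer
from twisted.internet.error import (
    ConnectError,
    ConnectionDone,
    ConnectionLost,
    ConnectionRefusedError,
    DNSLookupError,
    TCPTimedOutError,
    TimeoutError,
)
from twisted.web.client import ResponseFailed

from scrapy.core.downloader.handlers.http11 import TunnelError
from scrapy.downloadermiddlewares.retry import get_retry_request
from scrapy.exceptions import NotConfigured
from scrapy.utils.response import response_status_message


class RetryMiddleware:
    EXCEPTIONS_TO_RETRY = (defer.TimeoutError, TimeoutError, DNSLookupError,
                           ConnectionRefusedError, ConnectionDone, ConnectError,
                           ConnectionLost, TCPTimedOutError, ResponseFailed,
                           IOError, TunnelError)

    def __init__(self, settings):
        if not settings.getbool('RETRY_ENABLED'):
            raise NotConfigured
        self.max_retry_times = settings.getint('RETRY_TIMES')
        self.retry_http_codes = set(int(x) for x in settings.getlist('RETRY_HTTP_CODES'))
        self.priority_adjust = settings.getint('RETRY_PRIORITY_ADJUST')

    @classmethod
    def from_crawler(cls, crawler):
        return cls(crawler.settings)

    def process_response(self, request, response, spider):
        if request.meta.get('dont_retry', False):
            return response
        if response.status in self.retry_http_codes:  # codes come from RETRY_HTTP_CODES, customizable
            reason = response_status_message(response.status)
            # Stores the request's meta on the response
            # (response.meta already exposes request.meta)
            response.last_content = request.meta
            return self._retry(request, reason, spider) or response
        return response

    def process_exception(self, request, exception, spider):
        if (
            isinstance(exception, self.EXCEPTIONS_TO_RETRY)
            and not request.meta.get('dont_retry', False)
        ):
            return self._retry(request, exception, spider)

    def _retry(self, request, reason, spider):
        max_retry_times = request.meta.get('max_retry_times', self.max_retry_times)
        priority_adjust = request.meta.get('priority_adjust', self.priority_adjust)
        # Swap in a new proxy before get_retry_request copies the request.
        # "xxx:xxxx" and "proxyauth" are placeholders: Scrapy expects a proxy URL
        # such as "http://host:port", and Proxy-Authorization is normally
        # "Basic " + base64("user:password").
        request.meta['proxy'] = "xxx:xxxx"
        request.headers['Proxy-Authorization'] = "proxyauth"
        return get_retry_request(
            request,
            reason=reason,
            spider=spider,
            max_retry_times=max_retry_times,
            priority_adjust=priority_adjust,
        )
```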
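The rewritten class only takes effect once it is registered in DOWNLOADER_MIDDLEWARES and the built-in one is disabled. Otherwise both would run. Below is a sketch of the settings.py entry. `myproject.middlewares.RetryMiddleware` is a hypothetical import path; use the module where you actually saved the class:

```python
# settings.py (sketch): replace the built-in RetryMiddleware with the rewritten one
DOWNLOADER_MIDDLEWARES = {
    # None disables the built-in middleware so failures are not retried twice
    "scrapy.downloadermiddlewares.retry.RetryMiddleware": None,
    # 550 is the built-in RetryMiddleware's order, so the replacement runs at
    # the same point in the downloader middleware chain
    "myproject.middlewares.RetryMiddleware": 550,
}
```

Another option is to subclass `scrapy.downloadermiddlewares.retry.RetryMiddleware` and override only `_retry` instead of copying the whole class. Keep in mind that `_retry` is a private method, so it may change between Scrapy versions.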

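Rewrite summary: the only real change is swapping the proxy inside _retry on every retry. If you use a proxy pool and need to evict failing proxies, remove the failed proxy from the pool in _retry as well. You can also customize which status codes trigger a retry by adding your own codes to RETRY_HTTP_CODES.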
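The proxy-pool idea can be sketched as follows. ProxyPool and swap_proxy are hypothetical helpers, not Scrapy APIs. A real project might back the pool with Redis or a provider's API. A plain dict stands in for request.meta, and the proxy URLs are example values:

```python
import random
from typing import Dict, List, Optional


class ProxyPool:
    """Hypothetical in-memory proxy pool."""

    def __init__(self, proxies: List[str]):
        self._proxies = list(proxies)

    def get(self) -> Optional[str]:
        # Pick any remaining proxy, or None when the pool is empty
        return random.choice(self._proxies) if self._proxies else None

    def remove(self, proxy: Optional[str]) -> None:
        # Evict a failed proxy; ignore proxies that are not in the pool
        if proxy in self._proxies:
            self._proxies.remove(proxy)

    def __len__(self) -> int:
        return len(self._proxies)


def swap_proxy(meta: Dict, pool: ProxyPool) -> Dict:
    """Evict the proxy that just failed, then assign a fresh one."""
    pool.remove(meta.get("proxy"))
    new_proxy = pool.get()
    if new_proxy is not None:
        meta["proxy"] = new_proxy
    else:
        # Pool exhausted: this sketch simply drops the proxy key; a real
        # project might refill the pool or give up on the request instead
        meta.pop("proxy", None)
    return meta
```

In the rewritten _retry, this could be called as `swap_proxy(request.meta, self.pool)` just before get_retry_request, where `self.pool` is a hypothetical attribute created in `__init__`. Evicting a proxy on its first failure is deliberately simple. A 503 from the target site is not necessarily the proxy's fault, so a real pool might evict only on connection exceptions or after several failures.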

V. Summary

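This case study has answered the questions from the beginning. ① RetryMiddleware re-schedules failed requests. ② By default each request is retried up to 2 times (RETRY_TIMES); a retry is triggered either by a status code in RETRY_HTTP_CODES or by an exception in EXCEPTIONS_TO_RETRY. ③ The retry status codes come from the RETRY_HTTP_CODES setting, so we can define our own. ④ Proxies can be swapped, and dead ones evicted, inside _retry. That's all for today; see you in the next article.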