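[Deep Learning] A collection of hand-written deep learning code (worth bookmarking, for interviews)

Over the past few days, several readers ran into hand-written coding questions in their interviews. They had not prepared for them, so most of them felt a bit lost while writing the code.

Today I have put together some common questions. I collected them while preparing for my own interviews, and much of the code comes from existing code online. Many thanks to the authors for their work; I am only passing it along, organized together with material I collected earlier. I hope you will also visit their pages and give them a star or a like!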

https://github.com/heyxhh/nnet-numpy

https://gitee.com/bitosky/numpy_cnn

https://blog.csdn.net/csuyzt/article/details/82633051

https://github.com/yizt/numpy_neural_network

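The authors above mainly build neural networks with numpy, and I also used their code to prepare for interviews. I recommend their repositories, ideally read together with some of my earlier articles~

And of course there is my own computer vision interview-notes repository (百面計算機視覺): https://github.com/zonechen1994/CV_Interview. Stars are welcome!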

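Forward and backward pass of the fully connected layer

Let's start with the forward and backward pass of the fully connected layer. First, here is one of my earlier articles:

Must-ask in interviews | Backpropagation by hand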

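```python
import numpy as np

# Fully connected (linear) layer
class Linear():
    """
    Fully connected linear layer: out = X @ W + b
    """

    def __init__(self, dim_in, dim_out):
        """
        Parameters:
            dim_in: input dimension
            dim_out: output dimension
        """
        # Initialize parameters (He initialization: std = sqrt(2 / dim_in))
        scale = np.sqrt(dim_in / 2)
        self.weight = np.random.standard_normal((dim_in, dim_out)) / scale
        self.bias = np.random.standard_normal(dim_out) / scale
        # self.weight = np.random.randn(dim_in, dim_out)
        # self.bias = np.zeros(dim_out)
        self.params = [self.weight, self.bias]

    def __call__(self, X):
        """
        Parameters:
            X: input of this layer, shape=(batch_size, dim_in)
        Returns:
            X @ W + b, shape=(batch_size, dim_out)
        """
        self.X = X
        return self.forward()

    def forward(self):
        return np.dot(self.X, self.weight) + self.bias

    def backward(self, d_out):
        """
        Parameters:
            d_out: gradient of the loss w.r.t. this layer's output, shape=(batch_size, dim_out)
        Returns:
            gradient of the loss w.r.t. the input X (the activations of layer l-1),
            and the gradients w.r.t. the parameters [d_w, d_b]
        """
        # The input gradient keeps the batch dimension. The parameter gradients are
        # summed over the batch (d_w and d_b alike); the 1/batch_size averaging is
        # already applied once in CrossEntropyLoss.backward.
        d_x = np.dot(d_out, self.weight.T)  # gradient w.r.t. the input (previous layer's activations)
        d_w = np.dot(self.X.T, d_out)       # gradient w.r.t. weight
        d_b = np.sum(d_out, axis=0)         # gradient w.r.t. bias
        return d_x, [d_w, d_b]
```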

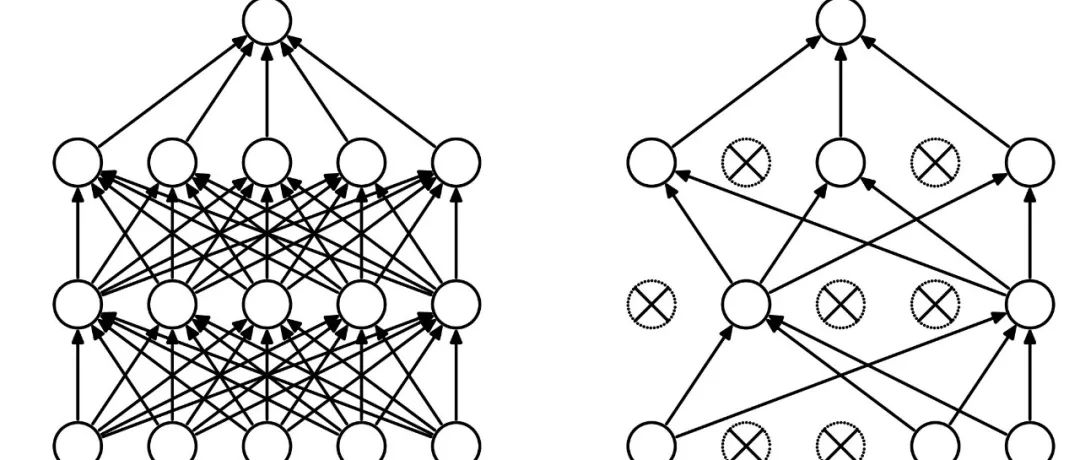
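A quick way to convince yourself (or an interviewer) that backward formulas like these are right is a finite-difference gradient check. The sketch below is standalone: it re-implements the affine map `X @ W + b` instead of importing the class, and uses a hypothetical scalar loss `sum(out * G)` for a fixed random `G`, so that `G` plays the role of `d_out`. The helper name `numerical_grad` is made up for illustration.

```python
import numpy as np

def numerical_grad(f, x, eps=1e-6):
    """Central-difference gradient of the scalar f() w.r.t. array x (x is perturbed in place, then restored)."""
    grad = np.zeros_like(x)
    for idx in np.ndindex(x.shape):
        old = x[idx]
        x[idx] = old + eps
        f_plus = f()
        x[idx] = old - eps
        f_minus = f()
        x[idx] = old  # restore the original value
        grad[idx] = (f_plus - f_minus) / (2 * eps)
    return grad

rng = np.random.default_rng(0)
X = rng.standard_normal((4, 3))   # batch_size=4, dim_in=3
W = rng.standard_normal((3, 2))   # dim_in=3, dim_out=2
b = rng.standard_normal(2)
G = rng.standard_normal((4, 2))   # plays the role of d_out = dL/d(out)

def loss():
    # Hypothetical scalar loss whose gradient w.r.t. the layer output is exactly G
    return np.sum((X @ W + b) * G)

# Analytical gradients of the affine map
d_x = G @ W.T
d_w = X.T @ G
d_b = G.sum(axis=0)

for name, analytic, param in [("X", d_x, X), ("W", d_w, W), ("b", d_b, b)]:
    print(name, np.max(np.abs(analytic - numerical_grad(loss, param))))
```

The check also makes the batch convention visible: both `d_w` and `d_b` are sums over the batch, and any averaging over the batch belongs to the loss.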

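Dropout forward and backward

Here are two of my earlier articles:

I Drop! A must-ask for algorithm roles, worth bookmarking!

I Drop Again! A must-ask for algorithm roles!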

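```python
class Dropout():
    """
    Randomly sets part of the features to 0 during training (inverted dropout)
    """

    def __init__(self, p):
        """
        Parameters:
            p: keep probability (fraction of units that are kept)
        """
        self.p = p

    def __call__(self, X, mode):
        """
        mode: training or test phase, 'train' or 'test'
        """
        return self.forward(X, mode)

    def forward(self, X, mode):
        if mode == 'train':
            # Keep each unit with probability p and scale kept units by 1/p,
            # so the expected activation is unchanged and test mode needs no rescaling
            self.mask = np.random.binomial(1, self.p, X.shape) / self.p
            out = self.mask * X
        else:
            out = X
        return out

    def backward(self, d_out):
        """
        d_out: gradient of the loss w.r.t. the dropout output
        """
        return d_out * self.mask
```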
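Because the mask is divided by `p`, the expected value of every activation is unchanged during training, which is why test mode can return `X` untouched. A small standalone check of that claim, using a hypothetical helper `dropout_mask` that builds the mask the same way as `Dropout.forward`:

```python
import numpy as np

def dropout_mask(shape, p, rng):
    """Inverted-dropout mask: each entry is 1/p with probability p (kept), else 0."""
    return rng.binomial(1, p, shape) / p

rng = np.random.default_rng(42)
p = 0.8
mask = dropout_mask((1000, 1000), p, rng)

# Every entry is either dropped (0) or kept and rescaled (1/p)
print(np.unique(mask))
# E[mask] = p * (1/p) = 1, so E[mask * X] = X
print(mask.mean())  # close to 1.0

# Backward reuses the same mask: gradients flow only through kept units, scaled by 1/p
d_out = np.ones((1000, 1000))
d_x = d_out * mask
```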

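Activation functions: ReLU / Sigmoid / Tanh

Here are some of my earlier notes on activation functions:

Non-zero-centered? Activation functions are seriously hardcore!

You didn't know Softmax and Sigmoid were related like this?

ReLU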

```python
import numpy as np

# ReLU layer
class Relu(object):
    def __init__(self):
        self.X = None

    def __call__(self, X):
        self.X = X
        return self.forward(self.X)

    def forward(self, X):
        return np.maximum(0, X)

    def backward(self, grad_output):
        """
        grad_output: gradient of the loss w.r.t. the ReLU output
        return: gradient of the loss w.r.t. the ReLU input
        """
        grad_relu = self.X > 0  # local gradient is 1 where the input is > 0, otherwise 0
        return grad_relu * grad_output  # * is element-wise multiplication in numpy
```

Tanh

```python
class Tanh():
    def __init__(self):
        self.X = None

    def __call__(self, X):
        self.X = X
        return self.forward(self.X)

    def forward(self, X):
        return np.tanh(X)

    def backward(self, grad_output):
        grad_tanh = 1 - (np.tanh(self.X)) ** 2
        return grad_output * grad_tanh
```

Sigmoid

```python
class Sigmoid():
    def __init__(self):
        self.X = None

    def __call__(self, X):
        self.X = X
        return self.forward(self.X)

    def forward(self, X):
        return self._sigmoid(X)

    def backward(self, grad_output):
        sigmoid_grad = self._sigmoid(self.X) * (1 - self._sigmoid(self.X))
        return grad_output * sigmoid_grad

    def _sigmoid(self, X):
        return 1.0 / (1 + np.exp(-X))
```

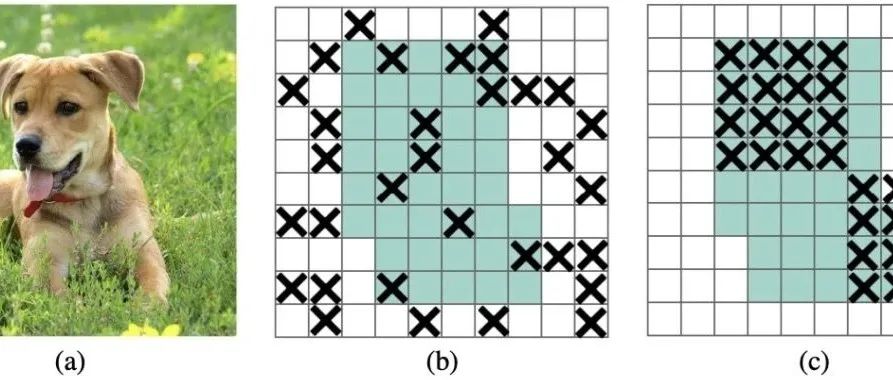
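The three backward methods rely on the closed-form derivatives ReLU'(x) = 1[x > 0], tanh'(x) = 1 - tanh(x)^2 and sigmoid'(x) = sigmoid(x)(1 - sigmoid(x)). A standalone finite-difference check of these formulas; sample points are kept away from 0, where ReLU is not differentiable:

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

# name -> (function, closed-form derivative used in backward)
activations = {
    "relu": (lambda x: np.maximum(0, x), lambda x: (x > 0).astype(float)),
    "tanh": (np.tanh, lambda x: 1 - np.tanh(x) ** 2),
    "sigmoid": (sigmoid, lambda x: sigmoid(x) * (1 - sigmoid(x))),
}

rng = np.random.default_rng(0)
x = rng.uniform(-4, 4, size=1000)
x = x[np.abs(x) > 1e-3]  # avoid the kink of ReLU at 0
eps = 1e-6

max_errors = {}
for name, (f, df) in activations.items():
    numeric = (f(x + eps) - f(x - eps)) / (2 * eps)  # central difference
    max_errors[name] = np.max(np.abs(numeric - df(x)))
    print(name, max_errors[name])
```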

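Forward and backward pass of the convolution layer

If you have used Caffe, you know that convolution there is implemented with im2col. Here are the two code snippets from the authors above: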

Im2Col

```python
class Img2colIndices():
    """
    A sliding-window convolution can be rewritten as a matrix multiplication.
    Before the conv forward pass, the feature map is converted to "cols" format,
    where each sliding window becomes one column.
    In the conv backward pass, the gradient in cols format is converted back
    to the shape of the input feature map.
    This helper implements feature map --> cols and cols --> feature map.
    Convolution, max pooling and average pooling can all use it.
    """

    def __init__(self, filter_size, padding, stride):
        """
        Parameters:
            filter_size: kernel size (h_filter, w_filter)
            padding: number of zeros padded on each border of the feature map
            stride: stride of the sliding window
        """
        self.h_filter, self.w_filter = filter_size
        self.padding = padding
        self.stride = stride

    def get_img2col_indices(self, h_out, w_out):
        """
        Computes the indices used to gather image elements into cols. The indices
        refer to the *padded* feature map: for every convolution position they give
        the coordinates of the elements the kernel is multiplied with.

        Row r of i (resp. j) holds, for kernel element r (row-major order), the row
        (resp. column) index of the input element it multiplies at every position.
        Column c of i and j corresponds to the c-th sliding position.
        Each column has length h_filter*w_filter*C: the pattern repeats once per
        input channel, and k says which channel each row reads from.

        Parameters:
            h_out: height of the output feature map
            w_out: width of the output feature map (both computed in img2col)

        Returns:
            k: shape=(h_filter*w_filter*C, 1); each run of h_filter*w_filter rows has
               the same value, the channel to read from
            i: shape=(h_filter*w_filter*C, h_out*w_out), row indices to gather
            j: shape=(h_filter*w_filter*C, h_out*w_out), column indices to gather
        """
        i0 = np.repeat(np.arange(self.h_filter), self.w_filter)
        i1 = np.repeat(np.arange(h_out), w_out) * self.stride
        i = i0.reshape(-1, 1) + i1
        i = np.tile(i, [self.c_x, 1])

        j0 = np.tile(np.arange(self.w_filter), self.h_filter)
        j1 = np.tile(np.arange(w_out), h_out) * self.stride
        j = j0.reshape(-1, 1) + j1
        j = np.tile(j, [self.c_x, 1])

        k = np.repeat(np.arange(self.c_x), self.h_filter * self.w_filter).reshape(-1, 1)
        return k, i, j

    def img2col(self, X):
        """
        img2col implemented by index-based gathering
        Parameters:
            X: input feature map, shape=(batch_size, channels, height, width)
        Returns:
            cols, shape=(h_filter*w_filter*channels, h_out*w_out*batch_size);
            along the second axis the batch index varies fastest
        """
        self.n_x, self.c_x, self.h_x, self.w_x = X.shape

        # Compute the output size first and make sure it is an integer
        h_out = (self.h_x + 2 * self.padding - self.h_filter) / self.stride + 1
        w_out = (self.w_x + 2 * self.padding - self.w_filter) / self.stride + 1
        if not h_out.is_integer() or not w_out.is_integer():
            raise Exception("Invalid dimension")
        else:
            h_out, w_out = int(h_out), int(w_out)  # the division above yields floats

        # Zero-pad the input feature map
        if self.padding > 0:
            x_padded = np.pad(X, ((0, 0), (0, 0), (self.padding, self.padding), (self.padding, self.padding)), mode='constant')
        else:
            x_padded = X

        # With the output size known and X padded, compute the indices.
        # They are cached on the instance so col2img can reuse them.
        self.img2col_indices = self.get_img2col_indices(h_out, w_out)
        k, i, j = self.img2col_indices

        # Gather the elements that take part in the convolution
        cols = x_padded[:, k, i, j]  # shape=(batch_size, h_filter*w_filter*n_channel, h_out*w_out)
        cols = cols.transpose(1, 2, 0).reshape(self.h_filter * self.w_filter * self.c_x, -1)
        return cols

    def col2img(self, cols):
        """
        Inverse of img2col.
        In the conv backward pass, dx comes out in col form,
        shape=(h_filter*w_filter*channels, batch_size*h_out*w_out).
        This converts it back to the shape of the original feature map, using the
        indices from get_img2col_indices and np.add.at, so that input elements
        covered by several overlapping windows accumulate all their gradients.
        Parameters:
            cols: dx in col form, shape=(h_filter*w_filter*channels, batch_size*h_out*w_out)
        """
        # Undo the transpose/reshape done in img2col
        cols = cols.reshape(self.h_filter * self.w_filter * self.c_x, -1, self.n_x)
        cols = cols.transpose(2, 0, 1)
        h_padded, w_padded = self.h_x + 2 * self.padding, self.w_x + 2 * self.padding
        x_padded = np.zeros((self.n_x, self.c_x, h_padded, w_padded))
        k, i, j = self.img2col_indices
        np.add.at(x_padded, (slice(None), k, i, j), cols)
        if self.padding == 0:
            return x_padded
        else:
            return x_padded[:, :, self.padding : -self.padding, self.padding : -self.padding]
```
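The index-based version is compact but hard to read. The sketch below is a plain-loop equivalent for a single image (no batch axis; the function names `im2col_loops` and `conv_direct` are hypothetical). It builds the same row layout (channel, kernel row, kernel column) with one column per sliding position, and shows that multiplying the flattened kernels by these columns matches sliding the kernels directly:

```python
import numpy as np

def im2col_loops(x, kh, kw, stride=1, padding=0):
    """x: (C, H, W) -> cols: (C*kh*kw, h_out*w_out), one column per window."""
    c, h, w = x.shape
    x = np.pad(x, ((0, 0), (padding, padding), (padding, padding)))
    h_out = (h + 2 * padding - kh) // stride + 1
    w_out = (w + 2 * padding - kw) // stride + 1
    cols = np.empty((c * kh * kw, h_out * w_out))
    col = 0
    for r in range(h_out):          # output row
        for s in range(w_out):      # output column
            r0, s0 = r * stride, s * stride
            cols[:, col] = x[:, r0:r0 + kh, s0:s0 + kw].ravel()  # order: (C, kh, kw)
            col += 1
    return cols, h_out, w_out

def conv_direct(x, weight, stride=1, padding=0):
    """Reference cross-correlation. x: (C, H, W), weight: (F, C, kh, kw)."""
    f, c, kh, kw = weight.shape
    x = np.pad(x, ((0, 0), (padding, padding), (padding, padding)))
    h_out = (x.shape[1] - kh) // stride + 1
    w_out = (x.shape[2] - kw) // stride + 1
    out = np.zeros((f, h_out, w_out))
    for k in range(f):
        for r in range(h_out):
            for s in range(w_out):
                window = x[:, r * stride:r * stride + kh, s * stride:s * stride + kw]
                out[k, r, s] = np.sum(window * weight[k])
    return out

rng = np.random.default_rng(0)
x = rng.standard_normal((2, 5, 5))          # C=2, H=W=5
weight = rng.standard_normal((3, 2, 3, 3))  # F=3 kernels of size 3x3

cols, h_out, w_out = im2col_loops(x, 3, 3, stride=2, padding=1)
out_matmul = (weight.reshape(3, -1) @ cols).reshape(3, h_out, w_out)
out_direct = conv_direct(x, weight, stride=2, padding=1)
print(np.allclose(out_matmul, out_direct))  # True
```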

Conv2d forward and backward

The convolution calls the im2col helper above.

```python
class Conv2d():
    def __init__(self, in_channels, n_filter, filter_size, padding, stride):
        """
        Parameters:
            in_channels: number of channels of the input feature map
            n_filter: number of kernels (output channels)
            filter_size: kernel size (h_filter, w_filter)
            padding: number of zeros padded on each border
            stride: stride of the kernel
        """
        self.in_channels = in_channels
        self.n_filter = n_filter
        self.h_filter, self.w_filter = filter_size
        self.padding = padding
        self.stride = stride

        # Initialize parameters; for a conv layer their size does not depend on the input size.
        # He initialization with fan_in = in_channels * h_filter * w_filter, the number of
        # inputs feeding each output unit (the counterpart of dim_in in Linear).
        fan_in = self.in_channels * self.h_filter * self.w_filter
        self.W = np.random.randn(n_filter, self.in_channels, self.h_filter, self.w_filter) / np.sqrt(fan_in / 2.)
        self.b = np.zeros((n_filter, 1))
        self.params = [self.W, self.b]

    def __call__(self, X):
        # Compute the output feature size
        self.n_x, _, self.h_x, self.w_x = X.shape
        self.h_out = (self.h_x + 2 * self.padding - self.h_filter) / self.stride + 1
        self.w_out = (self.w_x + 2 * self.padding - self.w_filter) / self.stride + 1
        if not self.h_out.is_integer() or not self.w_out.is_integer():
            raise Exception("Invalid dimensions!")
        self.h_out, self.w_out = int(self.h_out), int(self.w_out)

        # Create the Img2colIndices helper
        self.img2col_indices = Img2colIndices((self.h_filter, self.w_filter), self.padding, self.stride)
        return self.forward(X)

    def forward(self, X):
        # Convert X to cols, shape=(in_channels*h_filter*w_filter, h_out*w_out*batch_size)
        self.x_col = self.img2col_indices.img2col(X)
        # Reshape W into rows so that it can be multiplied with x in col form
        self.w_row = self.W.reshape(self.n_filter, -1)
        # Forward pass; in numpy @ is matrix multiplication, equivalent to numpy.matmul()
        out = self.w_row @ self.x_col + self.b
        out = out.reshape(self.n_filter, self.h_out, self.w_out, self.n_x)
        out = out.transpose(3, 0, 1, 2)  # -> (batch_size, n_filter, h_out, w_out)
        return out

    def backward(self, d_out):
        """
        Parameters:
            d_out: gradient of the loss w.r.t. the conv output, shape=(batch_size, n_filter, h_out, w_out)
        """
        # Bring d_out into the same column order as the forward output
        d_out_col = d_out.transpose(1, 2, 3, 0)
        d_out_col = d_out_col.reshape(self.n_filter, -1)

        d_w = d_out_col @ self.x_col.T
        d_w = d_w.reshape(self.W.shape)  # shape=(n_filter, in_channels, h_filter, w_filter)
        d_b = d_out_col.sum(axis=1).reshape(self.n_filter, 1)

        d_x = self.w_row.T @ d_out_col
        # Convert d_x from col form back to image form
        d_x = self.img2col_indices.col2img(d_x)
        return d_x, [d_w, d_b]
```
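Both `Img2colIndices.img2col` and `Conv2d.__call__` use the output-size formula h_out = (H + 2*padding - h_filter) / stride + 1 and reject configurations where it is not an integer. A tiny standalone helper (hypothetical name `conv_output_size`) with a few worked cases:

```python
def conv_output_size(size, kernel, padding, stride):
    """Output length along one spatial axis: (size + 2*padding - kernel) / stride + 1."""
    numerator = size + 2 * padding - kernel
    if numerator < 0 or numerator % stride != 0:
        raise ValueError(f"kernel={kernel}, stride={stride}, padding={padding} do not tile size={size}")
    return numerator // stride + 1

print(conv_output_size(32, 3, 1, 1))  # 32: a 3x3 kernel with padding 1 keeps the size
print(conv_output_size(28, 5, 0, 1))  # 24
print(conv_output_size(7, 3, 0, 2))   # 3
try:
    conv_output_size(8, 3, 0, 2)      # (8 - 3) / 2 + 1 = 3.5, not an integer
except ValueError as err:
    print("rejected:", err)
```

Frameworks such as PyTorch take the floor instead and silently drop the leftover rows or columns; the code in this article is stricter and raises.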

MaxPool2d

```python
class Maxpool():
    def __init__(self, size, stride):
        """
        Parameters:
            size: side length of the pooling window, int
            stride: stride of the pooling window, usually equal to size
        """
        self.size = size  # pooling window size
        self.stride = stride

    def __call__(self, X):
        """
        Parameters:
            X: input feature, shape=(batch_size, channels, height, width)
        """
        self.n_x, self.c_x, self.h_x, self.w_x = X.shape
        # Compute the max-pooling output size
        self.h_out = (self.h_x - self.size) / self.stride + 1
        self.w_out = (self.w_x - self.size) / self.stride + 1
        if not self.h_out.is_integer() or not self.w_out.is_integer():
            raise Exception("Invalid dimensions!")
        self.h_out, self.w_out = int(self.h_out), int(self.w_out)
        # Create the Img2colIndices helper (max pooling uses no padding)
        self.img2col_indices = Img2colIndices((self.size, self.size), padding=0, stride=self.stride)
        return self.forward(X)

    def forward(self, X):
        """
        Parameters:
            X: input feature, shape=(batch_size, channels, height, width)
        """
        # Treat every channel as a separate 1-channel image, so each column holds one window
        x_reshaped = X.reshape(self.n_x * self.c_x, 1, self.h_x, self.w_x)
        self.x_col = self.img2col_indices.img2col(x_reshaped)
        # Row of the maximum in every column (window), remembered for backward
        self.max_indices = np.argmax(self.x_col, axis=0)
        out = self.x_col[self.max_indices, range(self.max_indices.size)]
        out = out.reshape(self.h_out, self.w_out, self.n_x, self.c_x).transpose(2, 3, 0, 1)
        return out

    def backward(self, d_out):
        """
        Parameters:
            d_out: gradient of the loss w.r.t. the max-pooling output, shape=(batch_size, channels, h_out, w_out)
        """
        d_x_col = np.zeros_like(self.x_col)  # shape=(size*size, h_out*w_out*batch_size*channels)
        d_out_flat = d_out.transpose(2, 3, 0, 1).ravel()
        # Only the position that held the maximum receives the gradient
        d_x_col[self.max_indices, range(self.max_indices.size)] = d_out_flat
        # Convert d_x from col form back to image form
        d_x = self.img2col_indices.col2img(d_x_col)
        d_x = d_x.reshape(self.n_x, self.c_x, self.h_x, self.w_x)
        return d_x
```

Of course, without im2col the code is even easier to understand. For average pooling there is no need to record positions: each window is simply replaced by its mean.
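As a sketch of that idea, here is a standalone average-pooling layer without im2col. It assumes non-overlapping windows (stride equal to size) and H, W divisible by size; the class name `AvgPool` is hypothetical. Forward averages each window, and backward spreads each output gradient evenly over the size*size inputs of its window:

```python
import numpy as np

class AvgPool():
    def __init__(self, size):
        self.size = size  # window side length; stride is assumed equal to size

    def __call__(self, X):
        return self.forward(X)

    def forward(self, X):
        n, c, h, w = X.shape
        s = self.size
        assert h % s == 0 and w % s == 0, "H and W must be divisible by size"
        # Split H into (h//s, s) and W into (w//s, s), then average inside each window
        return X.reshape(n, c, h // s, s, w // s, s).mean(axis=(3, 5))

    def backward(self, d_out):
        s = self.size
        # Every input of a window contributed 1/(s*s) to that window's output
        return np.repeat(np.repeat(d_out, s, axis=2), s, axis=3) / (s * s)

X = np.arange(16, dtype=float).reshape(1, 1, 4, 4)
pool = AvgPool(2)
out = pool(X)
print(out[0, 0])  # means of the four 2x2 windows: 2.5, 4.5, 10.5, 12.5
d_x = pool.backward(np.ones_like(out))
print(d_x[0, 0])  # every entry is 0.25
```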

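BatchNorm2d forward and backward

I have to recommend this article here~

The most complete guide to Normalization! Worth bookmarking, a must-ask in interviews!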

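```python
class BatchNorm2d():
    """
    For conv layers, batch normalization is applied after the convolution and
    before the activation function.
    If the convolution outputs several channels, each channel is normalized
    separately and has its own scale and shift parameters, both scalars.
    Suppose the mini-batch has m samples and, on one channel, the conv output
    has height p and width q. Batch normalization is then applied jointly to
    the m×p×q elements of that channel, all standardized with the same mean
    and variance: those of these m×p×q elements.
    When the trained model is used for prediction, we want a deterministic
    output for any input, so the output for a single sample should not depend
    on the mean and variance of a random mini-batch.
    A common approach is to estimate the mean and variance of the whole
    training set with moving averages and use them at prediction time.
    """

    def __init__(self, n_channel, momentum):
        """
        Parameters:
            n_channel: number of channels of the input feature
            momentum: coefficient for updating moving_mean / moving_var
        """
        self.n_channel = n_channel
        self.momentum = momentum
        # Learnable scale and shift parameters, initialized to 1 and 0
        self.gamma = np.ones((1, n_channel, 1, 1))
        self.beta = np.zeros((1, n_channel, 1, 1))
        # Statistics used at test time, initialized to 0 and updated during training
        self.moving_mean = np.zeros((1, n_channel, 1, 1))
        self.moving_var = np.zeros((1, n_channel, 1, 1))
        self.params = [self.gamma, self.beta]

    def __call__(self, X, mode):
        """
        X: shape = (N, C, H, W)
        mode: 'train' or 'test', must be passed by the caller
        """
        self.X = X  # input kept on the instance
        return self.forward(X, mode)

    def forward(self, X, mode):
        """
        X: shape = (N, C, H, W)
        mode: 'train' or 'test', must be passed by the caller
        """
        if mode != 'train':
            # In prediction mode, use the moving-average mean and variance
            self.x_norm = (X - self.moving_mean) / np.sqrt(self.moving_var + 1e-5)
        else:
            # For 2D conv outputs, compute mean and variance per channel (axis=1),
            # i.e. over axes (0, 2, 3); keepdims so they broadcast against X
            mean = X.mean(axis=(0, 2, 3), keepdims=True)
            self.var = X.var(axis=(0, 2, 3), keepdims=True)  # kept on self for backward
            # In training mode, standardize with the current batch statistics (kept for backward)
            self.x_norm = (X - mean) / (np.sqrt(self.var + 1e-5))
            # Update the moving averages of mean and variance
            self.moving_mean = self.momentum * self.moving_mean + (1 - self.momentum) * mean
            self.moving_var = self.momentum * self.moving_var + (1 - self.momentum) * self.var
        # Scale and shift
        out = self.x_norm * self.gamma + self.beta
        return out

    def backward(self, d_out):
        """
        d_out has the same shape as the input; assumes the last forward call was in 'train' mode
        """
        axes = (0, 2, 3)
        d_gamma = (d_out * self.x_norm).sum(axis=axes, keepdims=True)
        d_beta = d_out.sum(axis=axes, keepdims=True)

        # In training mode the batch mean and variance are functions of X too,
        # so their contribution must be included, not only d_out * gamma / std
        m = d_out.shape[0] * d_out.shape[2] * d_out.shape[3]  # elements per channel
        inv_std = 1.0 / np.sqrt(self.var + 1e-5)
        d_x_norm = d_out * self.gamma
        d_x = inv_std / m * (m * d_x_norm
                             - d_x_norm.sum(axis=axes, keepdims=True)
                             - self.x_norm * (d_x_norm * self.x_norm).sum(axis=axes, keepdims=True))
        return d_x, [d_gamma, d_beta]
```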
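In training mode each output depends on every input of its channel through the batch mean and variance, which is why the input gradient has the extra sum terms. The standalone sketch below re-implements the training-mode path as hypothetical functions `bn_forward` / `bn_backward` and compares the input gradient with finite differences for a hypothetical loss `sum(out * G)`. It also shows that the shortcut `d_out * gamma / std` does not match:

```python
import numpy as np

EPS = 1e-5

def bn_forward(X, gamma, beta):
    """Training-mode BatchNorm2d forward; statistics per channel over (N, H, W)."""
    mean = X.mean(axis=(0, 2, 3), keepdims=True)
    var = X.var(axis=(0, 2, 3), keepdims=True)
    x_norm = (X - mean) / np.sqrt(var + EPS)
    return x_norm * gamma + beta, (x_norm, var)

def bn_backward(d_out, gamma, cache):
    x_norm, var = cache
    axes = (0, 2, 3)
    m = d_out.shape[0] * d_out.shape[2] * d_out.shape[3]  # elements per channel
    d_x_norm = d_out * gamma
    # d_x = (m*d_x_norm - sum(d_x_norm) - x_norm*sum(d_x_norm*x_norm)) / (m*std)
    d_x = (m * d_x_norm
           - d_x_norm.sum(axis=axes, keepdims=True)
           - x_norm * (d_x_norm * x_norm).sum(axis=axes, keepdims=True)) / (m * np.sqrt(var + EPS))
    d_gamma = (d_out * x_norm).sum(axis=axes, keepdims=True)
    d_beta = d_out.sum(axis=axes, keepdims=True)
    return d_x, d_gamma, d_beta

rng = np.random.default_rng(0)
X = rng.standard_normal((4, 3, 2, 2))  # N=4, C=3, H=W=2
gamma = rng.standard_normal((1, 3, 1, 1))
beta = rng.standard_normal((1, 3, 1, 1))
G = rng.standard_normal(X.shape)       # plays the role of d_out

out, cache = bn_forward(X, gamma, beta)
d_x, d_gamma, d_beta = bn_backward(G, gamma, cache)

# Central-difference gradient of sum(out * G) w.r.t. X
eps = 1e-6
d_x_numeric = np.zeros_like(X)
for idx in np.ndindex(X.shape):
    old = X[idx]
    X[idx] = old + eps
    f_plus = np.sum(bn_forward(X, gamma, beta)[0] * G)
    X[idx] = old - eps
    f_minus = np.sum(bn_forward(X, gamma, beta)[0] * G)
    X[idx] = old  # restore
    d_x_numeric[idx] = (f_plus - f_minus) / (2 * eps)

print(np.max(np.abs(d_x - d_x_numeric)))  # only finite-difference noise

# The shortcut that ignores the batch statistics
_, var = cache
d_x_naive = G * gamma / np.sqrt(var + EPS)
print(np.max(np.abs(d_x_naive - d_x_numeric)))
```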

Flatten layer

This layer flattens the tensor so that it can be fed into a fully connected layer.

```python
class Flatten():
    """
    The feature map output by the last conv layer must be flattened before it
    can feed a fully connected layer.
    It is a separate module so that the gradient can be reshaped back in backward.
    """

    def __init__(self):
        pass

    def __call__(self, X):
        self.x_shape = X.shape  # (batch_size, channels, height, width)
        return self.forward(X)

    def forward(self, X):
        out = X.ravel().reshape(self.x_shape[0], -1)
        return out

    def backward(self, d_out):
        d_x = d_out.reshape(self.x_shape)
        return d_x
```

Loss function

Here we take the cross-entropy loss as an example:

```python
import numpy as np

# Cross-entropy loss
class CrossEntropyLoss():
    """
    Computes the cross-entropy loss on the outputs of the last layer
    """

    def __init__(self):
        self.X = None
        self.labels = None

    def __call__(self, X, labels):
        """
        Parameters:
            X: output of the model's last fc layer (logits)
            labels: one-hot labels, shape=(batch_size, num_class)
        """
        self.X = X
        self.labels = labels
        return self.forward(self.X)

    def forward(self, X):
        """
        Computes the cross-entropy loss
        Parameters:
            X: output of the last layer, shape=(batch_size, C)
            (self.labels: one-hot labels, shape=(batch_size, C))
        Returns:
            cross-entropy loss averaged over the batch
        """
        self.softmax_x = self.softmax(X)
        log_softmax = self.log_softmax(self.softmax_x)
        cross_entropy_loss = np.sum(-(self.labels * log_softmax), axis=1).mean()
        return cross_entropy_loss

    def backward(self):
        # Gradient of softmax + cross-entropy w.r.t. the logits is softmax(X) - labels;
        # it is divided by batch_size because forward averages over the batch
        grad_x = (self.softmax_x - self.labels)
        return grad_x / self.X.shape[0]

    def log_softmax(self, softmax_x):
        """
        Parameters:
            softmax_x: X after softmax
        Returns:
            log-softmax, shape = (m, C); the small constant avoids log(0)
        """
        return np.log(softmax_x + 1e-5)

    def softmax(self, X):
        """
        Returns the softmax of the input, using the property softmax(x) = softmax(x + c)
        """
        batch_size = X.shape[0]
        # axis=1: take the maximum along each row of the 2D array
        max_value = X.max(axis=1)
        # Subtract each row's maximum to avoid inf after exponentiation, using softmax(x) = softmax(x + c).
        # Use a new variable instead of -=, which would modify the input X in place;
        # X is used again when computing the loss, so it must not change.
        tmp = X - max_value.reshape(batch_size, 1)
        # Exponentiate every element
        exp_input = np.exp(tmp)  # shape=(m, n)
        # Sum of each row
        exp_sum = exp_input.sum(axis=1, keepdims=True)  # shape=(m, 1)
        return exp_input / exp_sum
```
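The one-line backward relies on the fact that, for softmax followed by cross-entropy with one-hot labels y, the gradient of the loss with respect to the logits is softmax(x) - y (divided by batch_size for a batch-averaged loss). The standalone check below compares that against finite differences. As a swapped-in variant, it computes log-softmax with the log-sum-exp trick rather than `np.log(softmax + 1e-5)`, which avoids the small bias the constant introduces; the names `log_softmax` and `cross_entropy` are illustrative:

```python
import numpy as np

def log_softmax(X):
    """Row-wise log-softmax via log-sum-exp: (x - max) - log(sum(exp(x - max)))."""
    shifted = X - X.max(axis=1, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))

def cross_entropy(X, labels):
    """Mean cross-entropy over the batch; labels are one-hot, shape=(batch_size, C)."""
    return -np.sum(labels * log_softmax(X), axis=1).mean()

rng = np.random.default_rng(0)
X = rng.standard_normal((5, 4))                  # batch_size=5, C=4 logits
labels = np.eye(4)[rng.integers(0, 4, size=5)]   # random one-hot labels

# Analytical gradient: (softmax - labels) / batch_size
grad = (np.exp(log_softmax(X)) - labels) / X.shape[0]

# Central-difference gradient
eps = 1e-6
grad_numeric = np.zeros_like(X)
for idx in np.ndindex(X.shape):
    old = X[idx]
    X[idx] = old + eps
    f_plus = cross_entropy(X, labels)
    X[idx] = old - eps
    f_minus = cross_entropy(X, labels)
    X[idx] = old  # restore
    grad_numeric[idx] = (f_plus - f_minus) / (2 * eps)

print(np.max(np.abs(grad - grad_numeric)))  # only finite-difference noise
```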

Optimizers

Optimization algorithms for interviews, all in one article

SGD

```python
class SGD():
    """
    Stochastic gradient descent
    parameters: model parameters to train
    lr: float, learning rate
    momentum: float, momentum factor; default None means plain SGD without momentum
    """

    def __init__(self, parameters, lr, momentum=None):
        self.parameters = parameters
        self.lr = lr
        self.momentum = momentum
        if momentum is not None:
            self.velocity = self.velocity_initial()

    def update_parameters(self, grads):
        """
        grads: gradients returned by the network's backward method,
               in the same order as self.parameters
        """
        if self.momentum is None:
            for param, grad in zip(self.parameters, grads):
                param -= self.lr * grad  # in-place, so the layers see the update
        else:
            for i in range(len(self.parameters)):
                self.velocity[i] = self.momentum * self.velocity[i] - self.lr * grads[i]
                self.parameters[i] += self.velocity[i]  # in-place on the same array

    def velocity_initial(self):
        """
        Initializes velocity: one zero array per parameter, in the order of parameters
        """
        velocity = []
        for param in self.parameters:
            velocity.append(np.zeros_like(param))
        return velocity
```
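To see how the pieces fit together, here is a standalone toy training loop: softmax regression (one affine layer plus softmax cross-entropy) on synthetic, linearly separable data, updated in place with momentum SGD using the same rule as `SGD.update_parameters` (v = momentum*v - lr*grad, then param += v). The data, learning rate and number of steps are arbitrary choices for illustration, not values from the article:

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic 2-class data: two Gaussian blobs centered at (-2, -2) and (2, 2)
n = 100
X = np.vstack([rng.standard_normal((n, 2)) - 2, rng.standard_normal((n, 2)) + 2])
y = np.array([0] * n + [1] * n)
labels = np.eye(2)[y]                    # one-hot, shape=(2n, 2)

W = rng.standard_normal((2, 2)) * 0.01   # dim_in=2, dim_out=2
b = np.zeros(2)
params = [W, b]
velocity = [np.zeros_like(p) for p in params]
lr, momentum = 0.1, 0.9

def softmax(z):
    e = np.exp(z - z.max(axis=1, keepdims=True))
    return e / e.sum(axis=1, keepdims=True)

losses = []
for step in range(100):
    # Forward: affine layer + softmax cross-entropy (mean over the batch)
    probs = softmax(X @ W + b)
    losses.append(-np.sum(labels * np.log(probs + 1e-12), axis=1).mean())
    # Backward: d_logits = (softmax - labels) / batch_size, then the linear-layer formulas
    d_logits = (probs - labels) / X.shape[0]
    grads = [X.T @ d_logits, d_logits.sum(axis=0)]
    # Momentum SGD; += updates the arrays in place, so W and b see the change
    for i in range(len(params)):
        velocity[i] = momentum * velocity[i] - lr * grads[i]
        params[i] += velocity[i]

accuracy = np.mean(np.argmax(X @ W + b, axis=1) == y)
print(f"loss {losses[0]:.3f} -> {losses[-1]:.3f}, train accuracy {accuracy:.2f}")
```

Updating in place matters: the optimizer holds references to the same arrays the layers use, so rebinding (`param = param - lr * grad`) would leave the model unchanged.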

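About the author: PhD student at Fudan University, born in 1994, married with a child, former bt algorithm engineer. Did an undergraduate degree in materials science at a university outside the 985/211 lists, then switched fields with no prior background and was admitted to a CS graduate program at a 985 university.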
