A Data Scientist's Hands-On Guide to Resolving Data Quality Issues

Author: Arunn Thevapalan

Translator: Chen Chao

Proofreader: Wang Ziyue

This article shows how the Python library ydata-quality can be used to diagnose data quality, with a detailed step-by-step walkthrough on an example dataset.

If you work in the AI industry with real-world data, you will understand the pain. No matter how streamlined the data collection process is, the data we end up modeling is always a mess.

As IBM puts it, the 80/20 rule applies to data science too: data scientists spend 80% of their valuable time finding, cleaning, and organizing data, which leaves only 20% for actual analysis.

Wrangling data is not fun. I know how much "garbage in, garbage out" matters, but I really cannot enjoy cleaning up whitespace, fixing regular expressions, and resolving unforeseen problems in the data.

According to Google Research, "everyone wants to do the model work, not the data work", and I am guilty as charged. The same paper also introduces a phenomenon called data cascades: compounding events that originate in underlying data issues and cause adverse downstream effects. In practice, the problem is threefold:

- Most data scientists do not enjoy cleaning and wrangling data.
- Only 20% of the time is left for meaningful analysis.
- Data quality issues that are not handled early will cascade and affect downstream work.

The only way out of these problems is to make cleaning data easy, quick, and natural. We need tools and techniques that help us, the data scientists, identify and resolve data quality issues quickly, so that we can spend our valuable time on analytics and AI, the work we truly enjoy.

In this article, I present an open-source tool (https://github.com/ydataai/ydata-quality) that helps us identify data quality issues upfront, ranked by expected priority. I am glad this tool exists, and I can't wait to share it with you.

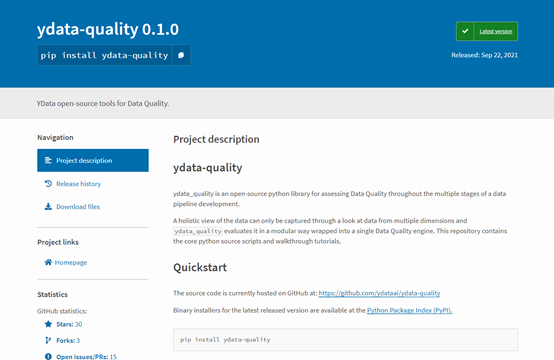

ydata-quality is an open-source Python library for assessing data quality throughout the multiple stages of data pipeline development. The library is intuitive and easy to use, and you can integrate it directly into your machine learning workflow.

For me personally, the best thing about the library is that it ranks data quality issues by priority (more on this below). That is very helpful when time is limited and we want to tackle the issues with the largest impact on data quality first.

Let me show you how to use it on a real-world example of messy data. In this example, we will:

1. Load a messy dataset.
2. Analyze the data quality issues.
3. Dig deeper into the warnings raised.
4. Apply strategies to mitigate them.
5. Check the final quality analysis report for the semi-cleaned data.

Before installing any library, it is best to create a virtual environment for the project with venv or conda. Once that is done, run the following command in your terminal to install the library:

    pip install ydata-quality

A real-world messy dataset

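Step 1: Load the dataset

In this first step, we load the dataset and the necessary libraries. Note that the library has multiple modules (Bias & Fairness, Data Expectations, Data Relations, Drift Analysis, Duplicates, Erroneous Data, Labelling, Missings), each targeting a specific kind of data quality issue, but we can start with the DataQuality engine, which wraps all the individual engines in a single class.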

    from ydata_quality import DataQuality
    import pandas as pd

    df = pd.read_csv('../datasets/transformed/census_10k.csv')

Step 2: Analyze the data quality issues

This would normally be a lengthy process, but the DataQuality engine does a good job of abstracting away the details. Simply create the main class and call its evaluate() method.

    # create the main class that holds all quality modules
    dq = DataQuality(df=df)

    # run the tests
    results = dq.evaluate()

    Warnings:
        TOTAL: 5 warning(s)
        Priority 1: 1 warning(s)
        Priority 2: 4 warning(s)

    Priority 1 - heavy impact expected:
        * [DUPLICATES - DUPLICATE COLUMNS] Found 1 columns with exactly the same feature values as other columns.
    Priority 2 - usage allowed, limited human intelligibility:
        * [DATA RELATIONS - HIGH COLLINEARITY - NUMERICAL] Found 3 numerical variables with high Variance Inflation Factor (VIF>5.0). The variables listed in results are highly collinear with other variables in the dataset. These will make model explainability harder and potentially give way to issues like overfitting. Depending on your end goal you might want to remove the highest VIF variables.
        * [ERRONEOUS DATA - PREDEFINED ERRONEOUS DATA] Found 1960 ED values in the dataset.
        * [DATA RELATIONS - HIGH COLLINEARITY - CATEGORICAL] Found 10 categorical variables with significant collinearity (p-value < 0.05). The variables listed in results are highly collinear with other variables in the dataset and sorted descending according to propensity. These will make model explainability harder and potentially give way to issues like overfitting. Depending on your end goal you might want to remove variables following the provided order.
        * [DUPLICATES - EXACT DUPLICATES] Found 3 instances with exact duplicate feature values.

Let's go through this report carefully:

Warning: contains the details of the issues detected during the data quality analysis.

Priority: each detected issue is assigned a priority based on its expected impact (the lower the value, the higher the priority).

Modules: each detected issue is tied to a data quality test run by one of the modules (for example Data Relations or Duplicates).
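To make the priority convention concrete, here is a minimal sketch of sorting issues so the most urgent come first. The `Priority` enum and the `Issue` dataclass are hypothetical stand-ins invented for illustration; they are not the library's own classes, and the three entries simply mirror warnings from the report above.

```python
from dataclasses import dataclass
from enum import IntEnum


class Priority(IntEnum):
    """Hypothetical priority scale: a lower value means a more urgent issue."""
    P1 = 1
    P2 = 2
    P3 = 3


@dataclass(frozen=True)
class Issue:
    """Hypothetical record for one detected issue (not the library's class)."""
    module: str
    test: str
    priority: Priority


def triage(issues):
    """Order issues from most to least urgent; ties keep their input order."""
    return sorted(issues, key=lambda issue: issue.priority)


issues = [
    Issue("Data Relations", "High Collinearity - Numerical", Priority.P2),
    Issue("Duplicates", "Duplicate Columns", Priority.P1),
    Issue("Duplicates", "Exact Duplicates", Priority.P2),
]

ordered = triage(issues)
for issue in ordered:
    print(f"P{issue.priority.value} [{issue.module}] {issue.test}")
```

Because Python's sort is stable, issues that share a priority stay in the order they were reported.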

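Putting it all together, we see that five warnings were raised, one of which is a high-priority issue. It was detected by the Duplicates module and means that an entire column is duplicated and needs fixing. To dig deeper into this issue, we use the get_warnings() method.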

    dq.get_warnings(test="Duplicate Columns")

    [QualityWarning(category='Duplicates', test='Duplicate Columns', description='Found 1 columns with exactly the same feature values as other columns.', priority=<Priority.P1: 1>, data={'workclass': ['workclass2']})]

Step 3: Analyze quality issues with a specific module

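A complete picture of data quality requires several perspectives, which is why there are eight separate modules. Although they are wrapped in the DataQuality class, some modules will not run unless we provide specific arguments. The Bias & Fairness module is one example: as the call below shows, it takes the sensitive features and the label column, and it can be run on its own.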

    from ydata_quality.bias_fairness import BiasFairness

    # create the main class that holds all quality modules
    bf = BiasFairness(df=df, sensitive_features=['race', 'sex'], label='income')

    # run the tests
    bf_results = bf.evaluate()

    Warnings:
        TOTAL: 2 warning(s)
        Priority 2: 2 warning(s)

    Priority 2 - usage allowed, limited human intelligibility:
        * [BIAS&FAIRNESS - PROXY IDENTIFICATION] Found 1 feature pairs of correlation to sensitive attributes with values higher than defined threshold (0.5).
        * [BIAS&FAIRNESS - SENSITIVE ATTRIBUTE REPRESENTATIVITY] Found 2 values of 'race' sensitive attribute with low representativity in the dataset (below 1.00%).

    bf.get_warnings(test='Proxy Identification')

    [QualityWarning(category='Bias&Fairness', test='Proxy Identification', description='Found 1 feature pairs of correlation to sensitive attributes with values higher than defined threshold (0.5).', priority=<Priority.P2: 2>, data=features
    relationship_sex    0.650656
    Name: association, dtype: float64)]
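The representativity warning above flags categories of a sensitive attribute that make up less than 1% of the rows. As a rough sketch of what such a check measures, the same idea can be written in plain pandas. The helper `low_representativity` and the synthetic column are assumptions made for illustration; this is not the library's implementation and not the census data.

```python
import pandas as pd


def low_representativity(series: pd.Series, threshold: float = 0.01) -> pd.Series:
    """Return the share of each category whose relative frequency is below `threshold`.

    Hypothetical helper; the 1% default mirrors the threshold quoted in the warning.
    """
    shares = series.value_counts(normalize=True)
    return shares[shares < threshold]


# Synthetic sensitive attribute with two very rare groups (invented data).
race = pd.Series(
    ['group_a'] * 950 + ['group_b'] * 45 + ['group_c'] * 4 + ['group_d'] * 1,
    name='race',
)

rare = low_representativity(race)
print(rare)
```

Groups flagged this way are not errors in themselves, but a model trained on so few examples may behave unreliably for them.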

Step 4: Resolve the identified issues

    def improve_quality(df: pd.DataFrame):
        """Clean the data based on the Data Quality issues found previously."""
        # Bias & Fairness
        df = df.replace({'relationship': {'Husband': 'Married', 'Wife': 'Married'}})  # Substitute gender-based 'Husband'/'Wife' for generic 'Married'

        # Duplicates
        df = df.drop(columns=['workclass2'])  # Remove the duplicated column
        df = df.drop_duplicates()  # Remove exact feature value duplicates

        return df

    clean_df = improve_quality(df.copy())
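Before re-running the full engine, a quick sanity check on a small frame can confirm that the cleaning steps do what we expect. The sketch below repeats the cleaning function so that it runs on its own, and adds a hypothetical helper, `duplicated_columns`, that looks for columns with identical values using plain pandas. The toy data is invented for illustration, and the helper is not how the library detects duplicates.

```python
import pandas as pd


def improve_quality(df: pd.DataFrame) -> pd.DataFrame:
    """Same cleaning steps as above, repeated so the sketch is self-contained."""
    df = df.replace({'relationship': {'Husband': 'Married', 'Wife': 'Married'}})
    df = df.drop(columns=['workclass2'])
    df = df.drop_duplicates()
    return df


def duplicated_columns(df: pd.DataFrame) -> dict:
    """Map each column to the later columns that hold exactly the same values.

    Hypothetical helper for illustration only.
    """
    found = {}
    already_matched = set()
    columns = list(df.columns)
    for i, left in enumerate(columns):
        if left in already_matched:
            continue
        twins = [right for right in columns[i + 1:] if df[left].equals(df[right])]
        if twins:
            found[left] = twins
            already_matched.update(twins)
    return found


# Toy data invented for illustration; it is not the census dataset.
toy = pd.DataFrame({
    'workclass': ['Private', 'State-gov', 'Private', 'Private'],
    'workclass2': ['Private', 'State-gov', 'Private', 'Private'],
    'relationship': ['Husband', 'Wife', 'Own-child', 'Husband'],
    'sex': ['Male', 'Female', 'Male', 'Male'],
})

clean_toy = improve_quality(toy.copy())

print(duplicated_columns(toy))        # duplicate column present before cleaning
print(duplicated_columns(clean_toy))  # none left after cleaning
print(len(toy), len(clean_toy))       # the repeated row is dropped
```

On the toy frame, the first and last rows are identical, so one of them is removed along with the duplicated column.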

Step 5: Run a final quality check

*DataQuality Engine Report:*

    Warnings:
        TOTAL: 3 warning(s)
        Priority 2: 3 warning(s)

    Priority 2 - usage allowed, limited human intelligibility:
        * [ERRONEOUS DATA - PREDEFINED ERRONEOUS DATA] Found 1360 ED values in the dataset.
        * [DATA RELATIONS - HIGH COLLINEARITY - NUMERICAL] Found 3 numerical variables with high Variance Inflation Factor (VIF>5.0). The variables listed in results are highly collinear with other variables in the dataset. These will make model explainability harder and potentially give way to issues like overfitting. Depending on your end goal you might want to remove the highest VIF variables.
        * [DATA RELATIONS - HIGH COLLINEARITY - CATEGORICAL] Found 9 categorical variables with significant collinearity (p-value < 0.05). The variables listed in results are highly collinear with other variables in the dataset and sorted descending according to propensity. These will make model explainability harder and potentially give way to issues like overfitting. Depending on your end goal you might want to remove variables following the provided order.

*Bias & Fairness Report:*

    Warnings:
        TOTAL: 1 warning(s)
        Priority 2: 1 warning(s)

    Priority 2 - usage allowed, limited human intelligibility:
        * [BIAS&FAIRNESS - SENSITIVE ATTRIBUTE REPRESENTATIVITY] Found 2 values of 'race' sensitive attribute with low representativity in the dataset (below 1.00%).
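The numerical collinearity warning that remains is based on the Variance Inflation Factor (VIF), with 5.0 as the cut-off quoted in the report. As a rough sketch of what that number measures, the textbook definition VIF_j = 1 / (1 - R_j^2) can be computed as the diagonal of the inverse correlation matrix. The function name and the synthetic data below are assumptions for illustration; the library's own computation is not shown in this article and may differ in detail.

```python
import numpy as np


def variance_inflation_factors(X: np.ndarray) -> np.ndarray:
    """Textbook VIF per column: the diagonal of the inverse correlation matrix."""
    corr = np.corrcoef(X, rowvar=False)
    return np.diag(np.linalg.inv(corr))


# Synthetic data: c is almost a + b, while d is unrelated noise.
rng = np.random.default_rng(0)
a = rng.normal(size=500)
b = rng.normal(size=500)
c = a + b + 0.1 * rng.normal(size=500)
d = rng.normal(size=500)

X = np.column_stack([a, b, c, d])
vifs = variance_inflation_factors(X)

for name, vif in zip(['a', 'b', 'c', 'd'], vifs):
    flag = 'high' if vif > 5.0 else 'ok'
    print(f"{name}: VIF={vif:.1f} ({flag})")
```

Here the three entangled columns end up far above the cut-off while the independent one stays close to 1, which is why dropping the highest-VIF variable is a common first remedy.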

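Closing thoughts

About the translator

Chen Chao is a master's student in applied psychology at Peking University. After an undergraduate stint in computer science, Chen went on to pursue psychology. Having come to see data analysis and programming as two essential survival skills, Chen makes every effort in daily life to learn more about them. In Chen's own words: the road ahead is long, and I am still on my way.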
