主要用到requests和bf4兩個(gè)庫(kù)將獲得的信息保存在d://hotsearch.txt下importrequests;importbs4mylist=[]r=requests.get(ur...

主要用到requests和bf4兩個(gè)庫(kù)

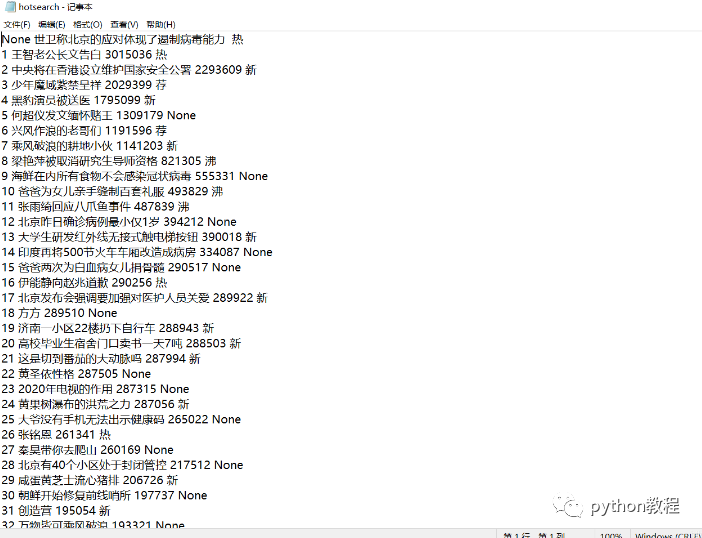

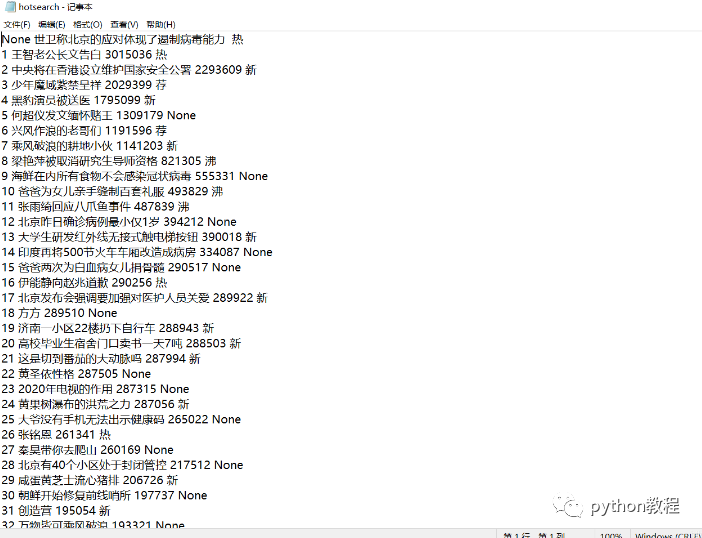

將獲得的信息保存在d://hotsearch.txt下

import?requests;

import?bs4

mylist=[]

r?=?requests.get(url='https://s.weibo.com/top/summary?Refer=top_hot&topnav=1&wvr=6',timeout=10)

print(r.status_code)?# 獲取返回狀態(tài)

r.encoding=r.apparent_encoding

demo?=?r.text

from?bs4?import?BeautifulSoup

soup?=?BeautifulSoup(demo,"html.parser")

for?link?in?soup.find('tbody')?:

hotnumber=''

if?isinstance(link,bs4.element.Tag):

# print(link('td'))

lis=link('td')

hotrank=lis[1]('a')[0].string#熱搜排名

hotname=lis[1].find('span')#熱搜名稱

if?isinstance(hotname,bs4.element.Tag):

hotnumber=hotname.string#熱搜指數(shù)

pass

mylist.append([lis[0].string,hotrank,hotnumber,lis[2].string])

f=open("d://hotsearch.txt","w+")

for?line?in?mylist:

f.write('%s %s %s %s\n'%(line[0],line[1],line[2],line[3]))

知識(shí)點(diǎn)擴(kuò)展:利用python爬取微博熱搜并進(jìn)行數(shù)據(jù)分析

爬取微博熱搜

import?schedule

import?pandas?as?pd

from?datetime?import?datetime

import?requests

from?bs4?import?BeautifulSoup

?

url?=?"https://s.weibo.com/top/summary?cate=realtimehot&sudaref=s.weibo.com&display=0&retcode=6102"

get_info_dict?=?{}

count?=?0

?

def?main():

global?url,?get_info_dict,?count

get_info_list?=?[]

print("正在爬取數(shù)據(jù)~~~")

html?=?requests.get(url).text

soup?=?BeautifulSoup(html,?'lxml')

for?tr?in?soup.find_all(name='tr',?class_=''):

get_info?=?get_info_dict.copy()

get_info['title']?=?tr.find(class_='td-02').find(name='a').text

try:

get_info['num']?=?eval(tr.find(class_='td-02').find(name='span').text)

except?AttributeError:

get_info['num']?=?None

get_info['time']?=?datetime.now().strftime("%Y/%m/%d %H:%M")

get_info_list.append(get_info)

get_info_list?=?get_info_list[1:16]

df?=?pd.DataFrame(get_info_list)

if?count?==?0:

df.to_csv('datas.csv',?mode='a+',?index=False,?encoding='gbk')

count?+=?1

else:

df.to_csv('datas.csv',?mode='a+',?index=False,?header=False,?encoding='gbk')

?

# 定時(shí)爬蟲(chóng)

schedule.every(1).minutes.do(main)

?

while?True:

schedule.run_pending()

pyecharts數(shù)據(jù)分析

import?pandas?as?pd

from?pyecharts?import?options?as?opts

from?pyecharts.charts?import?Bar,?Timeline,?Grid

from?pyecharts.globals?import?ThemeType,?CurrentConfig

?

df?=?pd.read_csv('datas.csv',?encoding='gbk')

print(df)

t?=?Timeline(init_opts=opts.InitOpts(theme=ThemeType.MACARONS))?# 定制主題

for?i?in?range(int(df.shape[0]/15)):

bar?=?(

Bar()

.add_xaxis(list(df['title'][i*15:?i*15+15][::-1]))?# x軸數(shù)據(jù)

.add_yaxis('num',?list(df['num'][i*15:?i*15+15][::-1]))?# y軸數(shù)據(jù)

.reversal_axis()?# 翻轉(zhuǎn)

.set_global_opts(?# 全局配置項(xiàng)

title_opts=opts.TitleOpts(?# 標(biāo)題配置項(xiàng)

title=f"{list(df['time'])[i * 15]}",

pos_right="5%",?pos_bottom="15%",

title_textstyle_opts=opts.TextStyleOpts(

font_family='KaiTi',?font_size=24,?color='#FF1493'

)

),

xaxis_opts=opts.AxisOpts(?# x軸配置項(xiàng)

splitline_opts=opts.SplitLineOpts(is_show=True),

),

yaxis_opts=opts.AxisOpts(?# y軸配置項(xiàng)

splitline_opts=opts.SplitLineOpts(is_show=True),

axislabel_opts=opts.LabelOpts(color='#DC143C')

)

)

.set_series_opts(?# 系列配置項(xiàng)

label_opts=opts.LabelOpts(?# 標(biāo)簽配置

position="right",?color='#9400D3')

)

)

grid?=?(

Grid()

.add(bar,?grid_opts=opts.GridOpts(pos_left="24%"))

)

t.add(grid,?"")

t.add_schema(

play_interval=1000,?# 輪播速度

is_timeline_show=False,?# 是否顯示 timeline 組件

is_auto_play=True,?# 是否自動(dòng)播放

)

?

t.render('時(shí)間輪播圖.html')

到此這篇關(guān)于如何用python爬取微博熱搜數(shù)據(jù)并保存的文章就介紹到這了!

掃下方二維碼加老師微信

或是搜索老師微信號(hào):XTUOL1988【切記備注:學(xué)習(xí)Python】

領(lǐng)取Python web開(kāi)發(fā),Python爬蟲(chóng),Python數(shù)據(jù)分析,人工智能等學(xué)習(xí)教程。帶你從零基礎(chǔ)系統(tǒng)性的學(xué)好Python!

也可以加老師建的Python技術(shù)學(xué)習(xí)教程qq裙:245345507,二者加一個(gè)就可以!

歡迎大家點(diǎn)贊,留言,轉(zhuǎn)發(fā),轉(zhuǎn)載,感謝大家的相伴與支持

萬(wàn)水千山總是情,點(diǎn)個(gè)【在看】行不行

*聲明:本文于網(wǎng)絡(luò)整理,版權(quán)歸原作者所有,如來(lái)源信息有誤或侵犯權(quán)益,請(qǐng)聯(lián)系我們刪除或授權(quán)事宜