Deploying the Mask-RCNN Instance Segmentation Network with OpenVINO

Jishi Editor's Note

OpenVINO is a comprehensive toolkit from Intel for quickly deploying applications and solutions, and it supports more than 150 CNN architectures for computer vision. This article walks through deploying the Mask-RCNN instance segmentation network with OpenVINO, step by step and with demo code.

Model Overview

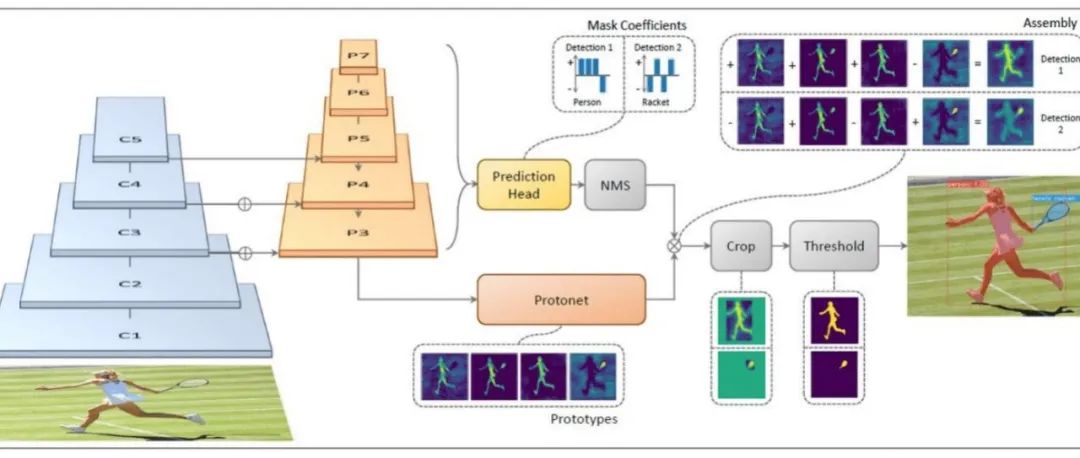

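OpenVINO supports deploying two instance segmentation models, Mask-RCNN and yolact. The Mask-RCNN family of instance segmentation networks ships with OpenVINO's official models and can be downloaded directly, while yolact comes from the third-party public model zoo.

This article uses the instance-segmentation-security-0050 model as an example. The model is trained on the COCO dataset and segments instances of 80 classes, or 81 classes when background is counted.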

When OpenVINO deploys Faster-RCNN and Mask-RCNN networks, the input is parsed through two input layers:

im_data: NCHW = [1x3x480x480]
im_info: 1x3, where the three values are H, W, and Scale = 1.0
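As a minimal sketch of how the second input might be populated, the helper below derives the three `im_info` values from the NCHW dimensions of `im_data`. The name `make_im_info` is hypothetical and not part of OpenVINO, and the scale is assumed to stay at 1.0 as described above.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper (not an OpenVINO API): builds the 1x3 im_info payload
// {H, W, Scale} from the NCHW dimensions of the im_data input.
std::array<float, 3> make_im_info(const std::vector<std::size_t>& nchw_dims,
                                  float scale = 1.0f) {
    assert(nchw_dims.size() == 4 && "expected NCHW dimensions");
    return {static_cast<float>(nchw_dims[2]),  // H
            static_cast<float>(nchw_dims[3]),  // W
            scale};                            // Scale
}
```

For the 1x3x480x480 input described above, this would yield the values 480, 480, and 1.0.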

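There are four outputs. Their names, shapes, and meanings are:

name: classes, shape: [100, ]
Predicted class ID for each of the 100 detections (the demo code casts each value to an integer label index).

name: scores, shape: [100, ]
Confidence of each of the 100 predicted boxes, in the range [0, 1].

name: boxes, shape: [100, 4]
Coordinates of the 100 predicted boxes (top-left and bottom-right corners), relative to the 480x480 input.

name: raw_masks, shape: [100, 81, 28, 28]
Instance segmentation output for each box ROI; 81 is the number of classes (including background) and 28x28 is the ROI size.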
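Because the boxes are expressed in the coordinates of the 480x480 network input, they have to be mapped back to the source image before drawing. The sketch below shows one way to do that. The `Box` struct and `scale_and_clamp` function are hypothetical stand-ins (for `cv::Rect` and the inline arithmetic in the demo code), and the scale factors assume the whole image was stretched to the network input size.

```cpp
#include <algorithm>
#include <cassert>

// Plain struct standing in for cv::Rect so the sketch needs no OpenCV.
struct Box { int x, y, width, height; };

// Hypothetical helper: maps a box predicted in network-input coordinates
// back to the source image and clamps it to the image bounds.
Box scale_and_clamp(float xmin, float ymin, float xmax, float ymax,
                    int net_w, int net_h, int im_w, int im_h) {
    assert(net_w > 0 && net_h > 0);
    // Assumed scale factors: source image size divided by network input size.
    const float w_rate = static_cast<float>(im_w) / net_w;
    const float h_rate = static_cast<float>(im_h) / net_h;
    const float x1 = std::min(std::max(0.0f, xmin * w_rate), static_cast<float>(im_w));
    const float y1 = std::min(std::max(0.0f, ymin * h_rate), static_cast<float>(im_h));
    const float x2 = std::min(std::max(0.0f, xmax * w_rate), static_cast<float>(im_w));
    const float y2 = std::min(std::max(0.0f, ymax * h_rate), static_cast<float>(im_h));
    return Box{static_cast<int>(x1), static_cast<int>(y1),
               static_cast<int>(x2 - x1), static_cast<int>(y2 - y1)};
}
```

Clamping keeps the resulting rectangle inside the image, which matters when the rectangle is later used to crop an ROI.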

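All of the above comes from the official documentation. In practice, however, I found that the raw_masks output produced at inference time has the shape 100x81x14x14. Has the documentation perhaps not been updated?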
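Given the mismatch between the documented and observed mask size, it seems safer to compute offsets from the dimensions reported at runtime rather than hard-coding 28 or 14. The sketch below assumes a contiguous [N, C, H, W] float buffer; `mask_offset` is a hypothetical helper name.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper: offset (in floats) of the HxW mask for detection n and
// class `label` inside a contiguous [N, C, H, W] raw_masks buffer.
std::size_t mask_offset(const std::vector<std::size_t>& dims,
                        std::size_t n, std::size_t label) {
    assert(dims.size() == 4);
    assert(n < dims[0] && label < dims[1]);
    const std::size_t plane = dims[2] * dims[3];     // one HxW mask
    const std::size_t box_stride = dims[1] * plane;  // all class masks of one box
    return n * box_stride + label * plane;
}
```

The same function works unchanged whether the model reports 14x14 or 28x28 masks, since both strides are derived from `dims`.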

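Code Demo

The network has more than one input layer and more than one output layer, so to keep things simple I use two for loops to set the precision of the inputs and outputs, and then fetch the data of each output layer after inference through hard-coded output names. The code first reads the class, then uses the class ID and the box index to pick the instance segmentation mask directly, generates a random color, and blends that color into the box ROI of the original image according to the mask, which produces the instance segmentation result. The complete demo is divided into the following steps.

IE engine initialization and model loading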

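    #include <inference_engine.hpp>
    #include <opencv2/opencv.hpp>
    #include <iostream>

    using namespace InferenceEngine;

    // The statements below run inside main(). xml and bin hold the paths to the
    // model's .xml and .bin files, and read_coco_labels() is the author's helper
    // that fills the label list; none of them is defined in this listing.
    InferenceEngine::Core ie;
    std::vector<std::string> coco_labels;
    read_coco_labels(coco_labels);
    cv::RNG rng(12345);  // fixed seed, so the mask colors are reproducible

    cv::Mat src = cv::imread("D:/images/sport-girls.png");
    cv::namedWindow("input", cv::WINDOW_AUTOSIZE);
    int im_h = src.rows;
    int im_w = src.cols;

    // Read the network and collect its input and output descriptions
    InferenceEngine::CNNNetwork network = ie.ReadNetwork(xml, bin);
    InferenceEngine::InputsDataMap inputs = network.getInputsInfo();
    InferenceEngine::OutputsDataMap outputs = network.getOutputsInfo();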

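Setting the input and output data formats

    // Unqualified names such as Precision and Layout assume that
    // `using namespace InferenceEngine;` is in effect.
    std::string image_input_name = "";
    std::string image_info_name = "";
    int in_index = 0;
    // InputsDataMap is a std::map keyed by name, so "im_data" is visited
    // before "im_info".
    for (auto item : inputs) {
        if (in_index == 0) {
            // First input: the image tensor
            image_input_name = item.first;
            auto input_data = item.second;
            input_data->setPrecision(Precision::U8);
            input_data->setLayout(Layout::NCHW);
        }
        else {
            // Second input: the 1x3 image info tensor
            image_info_name = item.first;
            auto input_data = item.second;
            input_data->setPrecision(Precision::FP32);
        }
        in_index++;
    }

    // Request FP32 for every output and print its name
    for (auto item : outputs) {
        std::string output_name = item.first;
        auto output_data = item.second;
        output_data->setPrecision(Precision::FP32);
        std::cout << "output name: " << output_name << std::endl;
    }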
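The loop above depends on the order in which the inputs are visited. A possible alternative, sketched here under the assumption that the image input is the only 4-D tensor and the info input the only 2-D one, is to pick the inputs by rank. `DimsByName` and `pick_inputs` are hypothetical names; the map stands in for the name and dimension information that the real input map carries.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <string>
#include <utility>
#include <vector>

// Stand-in for the name -> dimensions information of the network inputs.
using DimsByName = std::map<std::string, std::vector<std::size_t>>;

// Hypothetical helper: returns {image_input_name, image_info_name}, choosing
// the 4-D input as the image tensor and the 2-D input as the info tensor.
std::pair<std::string, std::string> pick_inputs(const DimsByName& inputs) {
    std::string image_name, info_name;
    for (const auto& item : inputs) {
        if (item.second.size() == 4) {
            image_name = item.first;
        } else if (item.second.size() == 2) {
            info_name = item.first;
        }
    }
    // Fail loudly if the network does not have the expected pair of inputs.
    assert(!image_name.empty() && !info_name.empty());
    return {image_name, info_name};
}
```

With this approach the result does not change if the inputs happen to be named so that the info tensor sorts first.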

Setting the blob input data and running inference

    auto executable_network = ie.LoadNetwork(network, "CPU");
    auto infer_request = executable_network.CreateInferRequest();

    // Fill the image input. matU8ToBlob is not defined in this listing; it
    // appears to be the helper shipped with the OpenVINO samples.
    auto input = infer_request.GetBlob(image_input_name);
    matU8ToBlob<uchar>(src, input);

    // Fill the image info input with {H, W, Scale}
    auto input2 = infer_request.GetBlob(image_info_name);
    auto imInfoDim = inputs.find(image_info_name)->second->getTensorDesc().getDims()[1];
    InferenceEngine::MemoryBlob::Ptr minput2 = InferenceEngine::as<InferenceEngine::MemoryBlob>(input2);
    auto minput2Holder = minput2->wmap();
    float *p = minput2Holder.as<InferenceEngine::PrecisionTrait<InferenceEngine::Precision::FP32>::value_type *>();
    p[0] = static_cast<float>(inputs[image_input_name]->getTensorDesc().getDims()[2]);  // H
    p[1] = static_cast<float>(inputs[image_input_name]->getTensorDesc().getDims()[3]);  // W
    p[2] = 1.0f;                                                                        // Scale

    // Run synchronous inference
    infer_request.Infer();
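The image input uses the NCHW layout, while an OpenCV image stores its channels interleaved (HWC). The sketch below illustrates only that layout conversion. It is an assumption that `matU8ToBlob` performs an equivalent copy after resizing the image to the blob size. `hwc_to_chw` is a hypothetical helper and leaves the resize out.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper: converts an interleaved HWC 8-bit image into planar
// CHW order, which is how a single-batch NCHW blob is laid out in memory.
std::vector<std::uint8_t> hwc_to_chw(const std::vector<std::uint8_t>& hwc,
                                     std::size_t h, std::size_t w, std::size_t c) {
    assert(hwc.size() == h * w * c);
    std::vector<std::uint8_t> chw(hwc.size());
    for (std::size_t ch = 0; ch < c; ++ch) {
        for (std::size_t y = 0; y < h; ++y) {
            for (std::size_t x = 0; x < w; ++x) {
                // Source index walks pixels first, destination walks planes first.
                chw[ch * h * w + y * w + x] = hwc[(y * w + x) * c + ch];
            }
        }
    }
    return chw;
}
```

For a 1x2 image with pixels (1, 2, 3) and (4, 5, 6), the planar result is 1, 4, 2, 5, 3, 6: all values of the first channel, then the second, then the third.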

Parsing the output results

    // Unqualified names (Precision, PrecisionTrait, SizeVector) assume that
    // `using namespace InferenceEngine;` is in effect.
    auto scores = infer_request.GetBlob("scores");
    auto boxes = infer_request.GetBlob("boxes");
    auto clazzes = infer_request.GetBlob("classes");
    auto raw_masks = infer_request.GetBlob("raw_masks");
    const float* score_data = static_cast<PrecisionTrait<Precision::FP32>::value_type*>(scores->buffer());
    const float* boxes_data = static_cast<PrecisionTrait<Precision::FP32>::value_type*>(boxes->buffer());
    const float* clazzes_data = static_cast<PrecisionTrait<Precision::FP32>::value_type*>(clazzes->buffer());
    const auto raw_masks_data = static_cast<PrecisionTrait<Precision::FP32>::value_type*>(raw_masks->buffer());
    const SizeVector scores_outputDims = scores->getTensorDesc().getDims();
    const SizeVector boxes_outputDims = boxes->getTensorDesc().getDims();
    const SizeVector mask_outputDims = raw_masks->getTensorDesc().getDims();
    const int max_count = static_cast<int>(scores_outputDims[0]);
    const int object_size = static_cast<int>(boxes_outputDims[1]);
    printf("mask NCHW=[%zu, %zu, %zu, %zu]\n", mask_outputDims[0], mask_outputDims[1], mask_outputDims[2], mask_outputDims[3]);
    int mask_h = static_cast<int>(mask_outputDims[2]);
    int mask_w = static_cast<int>(mask_outputDims[3]);
    size_t box_stride = mask_h * mask_w * mask_outputDims[1];

    // w_rate and h_rate are not defined in the original listing. They are
    // assumed here to be the ratio of source image size to network input size.
    const SizeVector input_dims = inputs[image_input_name]->getTensorDesc().getDims();
    float w_rate = static_cast<float>(im_w) / input_dims[3];
    float h_rate = static_cast<float>(im_h) / input_dims[2];

    for (int n = 0; n < max_count; n++) {
        float confidence = score_data[n];
        float xmin = boxes_data[n*object_size] * w_rate;
        float ymin = boxes_data[n*object_size + 1] * h_rate;
        float xmax = boxes_data[n*object_size + 2] * w_rate;
        float ymax = boxes_data[n*object_size + 3] * h_rate;
        if (confidence > 0.5) {
            cv::Scalar color(rng.uniform(0, 255), rng.uniform(0, 255), rng.uniform(0, 255));
            // Clamp the box to the image bounds
            cv::Rect box;
            float x1 = std::min(std::max(0.0f, xmin), static_cast<float>(im_w));
            float y1 = std::min(std::max(0.0f, ymin), static_cast<float>(im_h));
            float x2 = std::min(std::max(0.0f, xmax), static_cast<float>(im_w));
            float y2 = std::min(std::max(0.0f, ymax), static_cast<float>(im_h));
            box.x = static_cast<int>(x1);
            box.y = static_cast<int>(y1);
            box.width = static_cast<int>(x2 - x1);
            box.height = static_cast<int>(y2 - y1);
            // Added guard: cv::resize cannot produce an empty image
            if (box.width <= 0 || box.height <= 0) continue;
            int label = static_cast<int>(clazzes_data[n]);
            std::cout << "confidence: " << confidence << " class name: " << coco_labels[label] << std::endl;

            // Parse the mask for this box and class
            float* mask_arr = raw_masks_data + box_stride * n + mask_h * mask_w * label;
            cv::Mat mask_mat(mask_h, mask_w, CV_32FC1, mask_arr);
            cv::Mat roi_img = src(box);
            cv::Mat resized_mask_mat(box.height, box.width, CV_32FC1);
            cv::resize(mask_mat, resized_mask_mat, cv::Size(box.width, box.height));

            // Start from a solid color, restore the original pixels where the
            // mask is at most 0.5, then blend the result back into the ROI
            cv::Mat uchar_resized_mask(box.height, box.width, CV_8UC3, color);
            roi_img.copyTo(uchar_resized_mask, resized_mask_mat <= 0.5);
            cv::addWeighted(uchar_resized_mask, 0.7, roi_img, 0.3, 0.0f, roi_img);
            cv::putText(src, coco_labels[label].c_str(), box.tl()+(box.br()-box.tl())/2, cv::FONT_HERSHEY_PLAIN, 1.0, cv::Scalar(0, 0, 255), 1, 8);
        }
    }
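The overlay step in the parsing code can be read as a per-pixel rule: where the resized mask exceeds 0.5, the pixel becomes 0.7 times the color plus 0.3 times the original, and elsewhere it stays as it was. The sketch below restates that rule without OpenCV. `blend_mask` is a hypothetical helper, and the ROI is assumed to be an interleaved 3-channel 8-bit buffer with one mask value per pixel.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper: blends `color` into an interleaved 3-channel ROI
// wherever the mask value exceeds `threshold`, using 0.7 * color + 0.3 * pixel.
// Pixels at or below the threshold are left unchanged.
void blend_mask(std::vector<std::uint8_t>& roi, const std::vector<float>& mask,
                const std::uint8_t color[3], float threshold = 0.5f) {
    assert(roi.size() == mask.size() * 3);
    for (std::size_t i = 0; i < mask.size(); ++i) {
        if (mask[i] <= threshold) continue;  // background pixel of this ROI
        for (std::size_t ch = 0; ch < 3; ++ch) {
            const float v = 0.7f * color[ch] + 0.3f * roi[i * 3 + ch];
            roi[i * 3 + ch] = static_cast<std::uint8_t>(v + 0.5f);  // round to nearest
        }
    }
}
```

Writing the rule out this way makes the role of the 0.5 threshold and the 0.7/0.3 weights explicit, so they are easy to adjust when a stronger or weaker overlay is wanted.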

Final test result of the program:
