[Machine Learning] A Summary of Machine Learning Modeling and Tuning Methods

Overview

For data mining projects, this article looks at how to build and tune models: starting from a simple model, how to build it, how to run cross-validation, and how to tune its parameters.

Preface

Data and Background

Theory Overview

Knowledge Summary

Regression Analysis

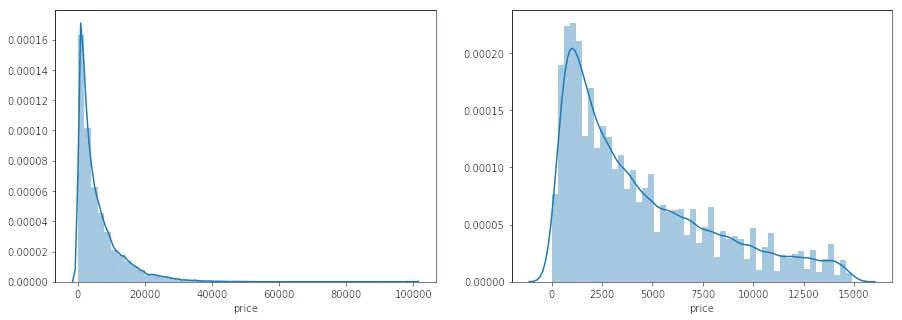

Long-Tailed Distribution

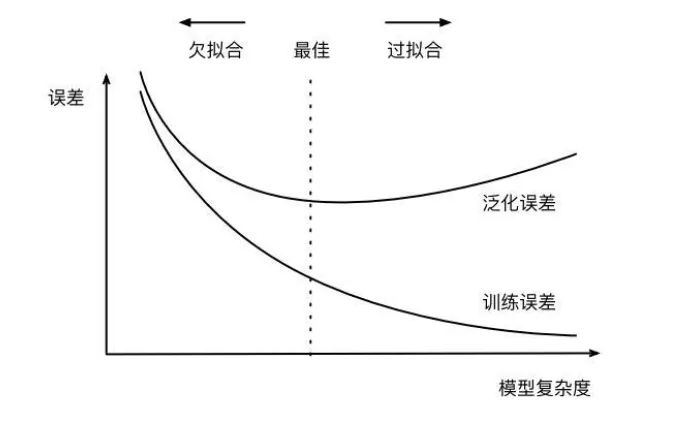

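Underfitting and Overfitting

Common causes of underfitting:

The training set is of poor quality or too small

The training features are too simple

Common causes of overfitting:

The training set is of poor quality or too small

The training data and the test data are distributed differently

The model is over-trained and too complex, so it fails to learn the main features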

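Regularization

L1 regularization refers to the sum of the absolute values of the elements of the weight vector $w$, usually written $\|w\|_1$.

L2 regularization refers to the square root of the sum of the squared elements of the weight vector $w$, usually written $\|w\|_2$ (note that the L2 penalty in Ridge regression carries a square, i.e. $\|w\|_2^2$).

L1 regularization can produce a sparse weight matrix, i.e. a sparse model, which makes it useful for feature selection.

L2 regularization helps prevent the model from overfitting.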
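As a small illustration of the two penalties described above, the following sketch computes the L1 norm, the L2 norm and the squared L2 term for a made-up weight vector. The helper names `l1_norm` and `l2_norm` and the vector values are hypothetical, chosen only to make the arithmetic easy to follow.

```python
import math

def l1_norm(weights):
    """Sum of the absolute values of the weights (the L1 penalty term)."""
    return sum(abs(w) for w in weights)

def l2_norm(weights):
    """Square root of the sum of the squared weights."""
    return math.sqrt(sum(w * w for w in weights))

# Hypothetical weight vector, chosen only for illustration
w = [3.0, -4.0, 0.0]

print(l1_norm(w))        # 7.0
print(l2_norm(w))        # 5.0
print(l2_norm(w) ** 2)   # 25.0, the squared form used in the Ridge penalty
```

The zero entry contributes nothing to either norm; the L1 penalty tends to push more weights to exactly zero, which is why it is associated with sparse models.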

Tuning Methods

Modeling and Tuning

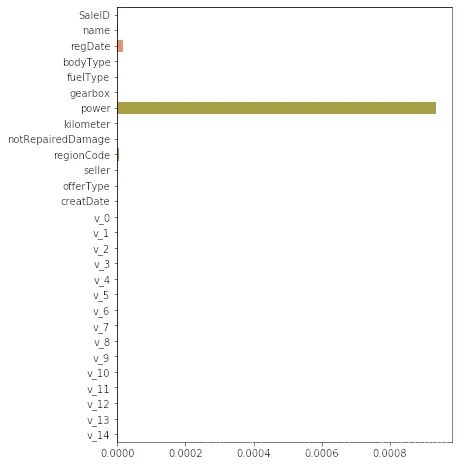

Linear Regression

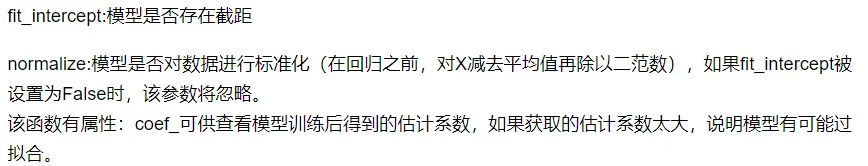

sklearn.linear_model.LinearRegression(fit_intercept=True, normalize=False, copy_X=True, n_jobs=1)

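# Note: `normalize` was removed in scikit-learn 1.2; on newer versions, scale the features beforehand instead
model = LinearRegression(normalize=True)
model.fit(data_x, data_y)

# Intercept and coefficients of the fitted model
model.intercept_, model.coef_
'intercept:' + str(model.intercept_)

# Features sorted by coefficient, largest first
sorted(dict(zip(continuous_feature_names, model.coef_)).items(), key=lambda x: x[1], reverse=True)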

## output

data_y = np.log(data_y + 1)
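Because the target is transformed with log(y + 1), predictions from a model trained on it are on the log scale and have to be mapped back before they can be read as prices. A minimal sketch with made-up prices (the array values are hypothetical), using `np.log1p` and `np.expm1`, which compute log(1 + x) and exp(x) - 1:

```python
import numpy as np

prices = np.array([0.0, 999.0, 15000.0])  # hypothetical price values

log_prices = np.log1p(prices)     # same transform as np.log(prices + 1)
restored = np.expm1(log_prices)   # inverse transform: exp(x) - 1

print(np.allclose(np.log(prices + 1), log_prices))
print(np.allclose(restored, prices))
```

Adding 1 before taking the log keeps a price of 0 well defined, since log(0) would be minus infinity.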

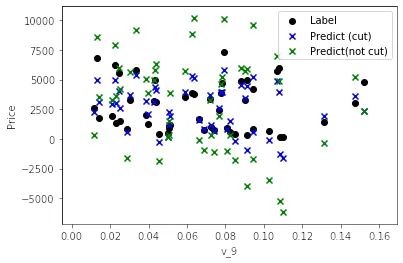

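# Cross-validation
from sklearn.model_selection import cross_val_score
from sklearn.metrics import mean_absolute_error, make_scorer

scores = cross_val_score(LinearRegression(normalize=True), X=data_x,
                         y=data_y, cv=5, scoring=make_scorer(mean_absolute_error))
np.mean(scores)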
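To show what 5-fold cross-validation with an MAE score does under the hood, here is a rough NumPy-only sketch. It is not scikit-learn's implementation: the function `kfold_mae`, the ordinary least-squares fit and the synthetic data are all assumptions made for illustration. Like an unshuffled K-fold split, it uses contiguous folds.

```python
import numpy as np

def kfold_mae(X, y, k=5):
    """Validation MAE of an ordinary least-squares fit on each of k contiguous folds."""
    indices = np.arange(len(X))
    folds = np.array_split(indices, k)   # contiguous folds, no shuffling
    scores = []
    for val_idx in folds:
        train_idx = np.setdiff1d(indices, val_idx)
        # Append a column of ones so the fit includes an intercept
        A_train = np.c_[X[train_idx], np.ones(len(train_idx))]
        A_val = np.c_[X[val_idx], np.ones(len(val_idx))]
        coef, *_ = np.linalg.lstsq(A_train, y[train_idx], rcond=None)
        scores.append(np.mean(np.abs(A_val @ coef - y[val_idx])))
    return np.array(scores)

# Synthetic data, for illustration only
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 3))
y = X @ np.array([1.5, -2.0, 0.5]) + 0.1 * rng.normal(size=200)

scores = kfold_mae(X, y, k=5)
print(scores.shape, scores.mean())
```

Each sample is used for validation exactly once, and the mean of the five scores is the number reported as the cross-validated MAE.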

import datetime

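sample_feature = sample_feature.reset_index(drop=True)
split_point = len(sample_feature) // 5 * 4

# Use positional slicing: .loc includes both endpoints, so .loc[:split_point]
# and .loc[split_point:] would both contain the row at split_point.
train = sample_feature.iloc[:split_point].dropna()
val = sample_feature.iloc[split_point:].dropna()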
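One detail worth checking in a split like this is how the slice endpoints are treated: label-based `.loc` slices include both endpoints, whereas position-based `.iloc` slices exclude the stop position. A small sketch with a hypothetical five-row frame shows the difference:

```python
import pandas as pd

df = pd.DataFrame({'price': [10, 20, 30, 40, 50]})  # hypothetical data
split_point = len(df) // 5 * 4                      # 4

# Label-based slicing: the row labelled 4 lands in both parts
loc_train, loc_val = df.loc[:split_point], df.loc[split_point:]
# Position-based slicing: the two parts are disjoint
iloc_train, iloc_val = df.iloc[:split_point], df.iloc[split_point:]

print(len(loc_train), len(loc_val))    # 5 1
print(len(iloc_train), len(iloc_val))  # 4 1
```

With `.loc`, one row ends up in both the training and the validation part, which leaks a validation sample into training; on a large dataset the effect is small, but it is easy to avoid.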

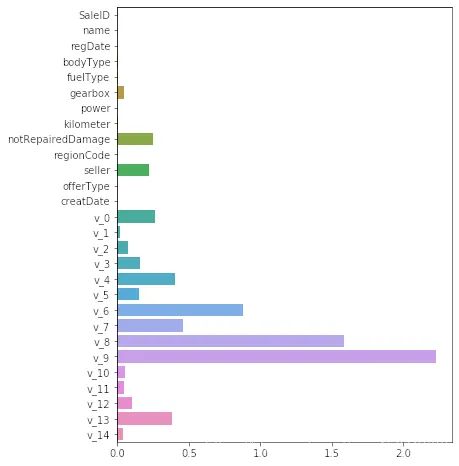

train_X = train[continuous_feature_names]

train_y_ln = np.log(train['price'] + 1)

val_X = val[continuous_feature_names]

val_y_ln = np.log(val['price'] + 1)

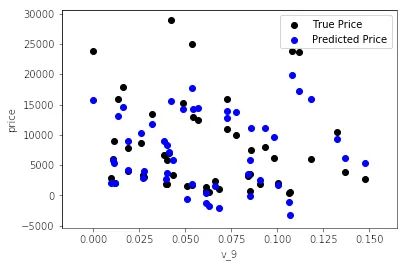

model = model.fit(train_X, train_y_ln)

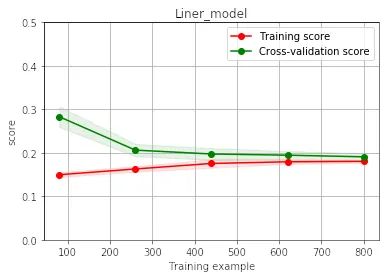

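fill_between()

train_sizes - the first argument: the x-coordinates over which the region is filled

train_scores_mean - train_scores_std - the second argument: the lower boundary of the filled region

train_scores_mean + train_scores_std - the third argument: the upper boundary of the filled region

color - the color of the filled region

alpha - the opacity of the filled region, in [0, 1]; larger values are more opaque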
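The arguments listed above can be put together as follows. This is a minimal sketch with made-up learning-curve statistics; the numbers, labels and the in-memory PNG buffer are assumptions for illustration, and in practice the means and standard deviations would come from a learning-curve computation on the real data.

```python
import io

import matplotlib
matplotlib.use("Agg")  # non-interactive backend so the sketch runs without a display
import matplotlib.pyplot as plt
import numpy as np

# Hypothetical learning-curve statistics, for illustration only
train_sizes = np.array([100, 200, 400, 800])
train_scores_mean = np.array([0.30, 0.25, 0.22, 0.20])
train_scores_std = np.array([0.04, 0.03, 0.02, 0.01])

fig, ax = plt.subplots()
band = ax.fill_between(
    train_sizes,                           # x-coordinates of the band
    train_scores_mean - train_scores_std,  # lower boundary
    train_scores_mean + train_scores_std,  # upper boundary
    color="r",
    alpha=0.1,                             # mostly transparent
)
ax.plot(train_sizes, train_scores_mean, "o-", color="r", label="Training score")
ax.set_xlabel("Training examples")
ax.set_ylabel("Score")
ax.legend(loc="best")

# Render to an in-memory buffer instead of a file on disk
buf = io.BytesIO()
fig.savefig(buf, format="png")
```

The shaded band is one standard deviation either side of the mean curve, so a narrowing band indicates that the score becomes more stable as the training set grows.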

mean_absolute_error(val_y_ln, model.predict(val_X))

0.19443858353490887

Linear Models

from sklearn.linear_model import LinearRegression, Ridge, Lasso

models = [LinearRegression(),
          Ridge(),
          Lasso()]

# Collect the 5-fold cross-validated MAE of each model
result = dict()
for model in models:
    model_name = str(model).split('(')[0]
    scores = cross_val_score(model, X=train_X, y=train_y_ln, verbose=0, cv=5, scoring=make_scorer(mean_absolute_error))
    result[model_name] = scores
    print(model_name + ' is finished')

result = pd.DataFrame(result)
result.index = ['cv' + str(x) for x in range(1, 6)]
result

Nonlinear Models

SVR: used for regression problems, where the label is continuous

SVC: used for classification problems, where the label is categorical

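Main parameters of GradientBoostingRegressor:

loss - the loss function used to fit the model; the default is ls (least squares), named squared_error in newer scikit-learn releases

learning_rate - the learning rate

n_estimators - the number of weak learners; default 100

max_depth - the maximum depth of each learner, which limits the number of nodes in each regression tree; default 3

min_samples_split - the minimum number of samples required to split an internal node; default 2

min_samples_leaf - the minimum number of samples required at a leaf node; default 1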

Main parameters of MLPRegressor:

hidden_layer_sizes - for example, hidden_layer_sizes=(50, 50) means two hidden layers, with 50 neurons in the first and 50 in the second

activation - the activation function, one of {'identity', 'logistic', 'tanh', 'relu'}; default relu

identity - f(x) = x

logistic - the sigmoid function, f(x) = 1 / (1 + exp(-x))

tanh - f(x) = tanh(x)

relu - f(x) = max(0, x)

solver - the optimizer for the weights, one of {'lbfgs', 'sgd', 'adam'}; default adam

lbfgs - a quasi-Newton optimizer; on small datasets lbfgs converges faster and performs better

sgd - stochastic gradient descent

adam - a stochastic gradient-based optimizer

alpha - the regularization term parameter, optional; default 0.0001

learning_rate - the learning rate schedule for weight updates; only used when solver='sgd'

max_iter - the maximum number of iterations; default 200

shuffle - whether to shuffle the samples in each iteration; default True; only used when solver='sgd' or 'adam'
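The four activation formulas listed above are short enough to write out directly. The sketch below is only meant to show what each one does to a few sample inputs; the function names and input values are chosen for illustration and are not taken from scikit-learn's internals.

```python
import numpy as np

def identity(x):
    """f(x) = x"""
    return x

def logistic(x):
    """Sigmoid: f(x) = 1 / (1 + exp(-x))"""
    return 1.0 / (1.0 + np.exp(-x))

def relu(x):
    """f(x) = max(0, x), applied element-wise"""
    return np.maximum(0, x)

x = np.array([-2.0, 0.0, 2.0])  # sample inputs, for illustration only
for name, fn in [("identity", identity), ("logistic", logistic),
                 ("tanh", np.tanh), ("relu", relu)]:
    print(name, fn(x))
```

Only identity is linear; logistic squashes its input into (0, 1), tanh into (-1, 1), and relu zeroes out negative inputs.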

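Gradient boosting builds trees sequentially, with each tree trying to correct the errors of the previous one.

Unlike random forests, gradient boosted regression trees do not use randomization by default; they rely on strong pre-pruning instead.

As a result, the trees are usually very shallow, so the model uses little memory and predicts quickly.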
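The idea of each tree correcting the errors of the previous ones can be sketched in a few lines of NumPy. This is a simplified illustration with depth-1 trees (stumps) and squared-error residuals, not the scikit-learn implementation; the helper names, the learning rate and the synthetic data are assumptions.

```python
import numpy as np

def fit_stump(x, residual):
    """Find the single threshold split on x that minimises squared error."""
    best = None
    for threshold in np.unique(x)[:-1]:   # skip the max so the right side is never empty
        left = residual[x <= threshold]
        right = residual[x > threshold]
        error = ((left - left.mean()) ** 2).sum() + ((right - right.mean()) ** 2).sum()
        if best is None or error < best[0]:
            best = (error, threshold, left.mean(), right.mean())
    return best[1:]

def predict_stump(stump, x):
    threshold, left_value, right_value = stump
    return np.where(x <= threshold, left_value, right_value)

# Synthetic 1-D regression problem, for illustration only
rng = np.random.default_rng(0)
x = np.sort(rng.uniform(0, 6, size=200))
y = np.sin(x) + 0.1 * rng.normal(size=200)

learning_rate = 0.1
prediction = np.full_like(y, y.mean())    # start from the mean of the target
errors = []
for _ in range(100):
    residual = y - prediction             # what the ensemble still gets wrong
    stump = fit_stump(x, residual)
    prediction += learning_rate * predict_stump(stump, x)
    errors.append(np.mean(np.abs(y - prediction)))

print(errors[0], errors[-1])
```

Each stump is a very weak model on its own; the ensemble becomes accurate because every new stump is fitted to the residuals left by the ones before it.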

from sklearn.linear_model import LinearRegression

from sklearn.svm import SVC

from sklearn.tree import DecisionTreeRegressor

from sklearn.ensemble import RandomForestRegressor

from sklearn.ensemble import GradientBoostingRegressor

from sklearn.neural_network import MLPRegressor

from xgboost.sklearn import XGBRegressor

from lightgbm.sklearn import LGBMRegressor

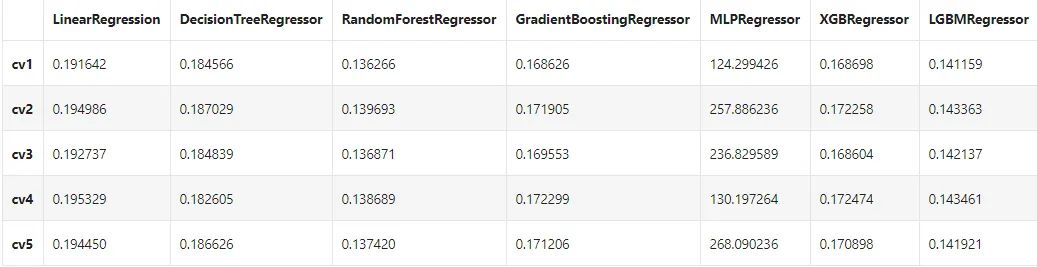

models = [LinearRegression(),
          DecisionTreeRegressor(),
          RandomForestRegressor(),
          GradientBoostingRegressor(),
          MLPRegressor(solver='lbfgs', max_iter=100),
          XGBRegressor(n_estimators=100, objective='reg:squarederror'),
          LGBMRegressor(n_estimators=100)]

# Collect the 5-fold cross-validated MAE of each model
result = dict()
for model in models:
    model_name = str(model).split('(')[0]
    scores = cross_val_score(model, X=train_X, y=train_y_ln, verbose=0, cv=5, scoring=make_scorer(mean_absolute_error))
    result[model_name] = scores
    print(model_name + ' is finished')

result = pd.DataFrame(result)
result.index = ['cv' + str(x) for x in range(1, 6)]
result

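Main LightGBM parameters:

num_leaves - the number of leaves per tree, the main parameter controlling the complexity of the tree model; it should be <= 2^(max_depth)

bagging_fraction - the fraction of the data used in each iteration; it speeds up training and reduces overfitting

feature_fraction - the fraction of features used in each iteration; for example, 0.8 means 80% of the features are randomly selected to build each tree; also used when boosting is set to random forest

min_data_in_leaf - the minimum number of samples per leaf. It is an important parameter for controlling overfitting in leaf-wise trees: a larger value avoids growing an overly deep tree, but may also cause underfitting

max_depth - the maximum tree depth; it places an explicit limit on how deep each tree can grow

n_estimators - the number of decision trees (the total number of boosting iterations)

objective - the problem type

regression - regression task with L2 loss

regression_l1 - regression task with L1 loss

huber - regression task with Huber loss

fair - regression task with Fair loss

mape (mean_absolute_percentage_error) - regression task with MAPE loss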
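The constraint between num_leaves and max_depth follows from the fact that a binary tree of depth d has at most 2^d leaves. A tiny sketch of that check (the helper names are hypothetical, not part of LightGBM):

```python
def max_leaves_for_depth(max_depth):
    """A binary tree of depth max_depth has at most 2 ** max_depth leaves."""
    return 2 ** max_depth

def is_consistent(num_leaves, max_depth):
    """Check the rule of thumb num_leaves <= 2 ** max_depth."""
    return num_leaves <= max_leaves_for_depth(max_depth)

print(is_consistent(31, 5))   # True:  31 <= 32
print(is_consistent(55, 5))   # False: 55 > 32
print(is_consistent(55, 15))  # True:  55 <= 32768
```

Setting num_leaves above the bound does not buy any extra capacity, because the depth limit already caps how many leaves a tree can have.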

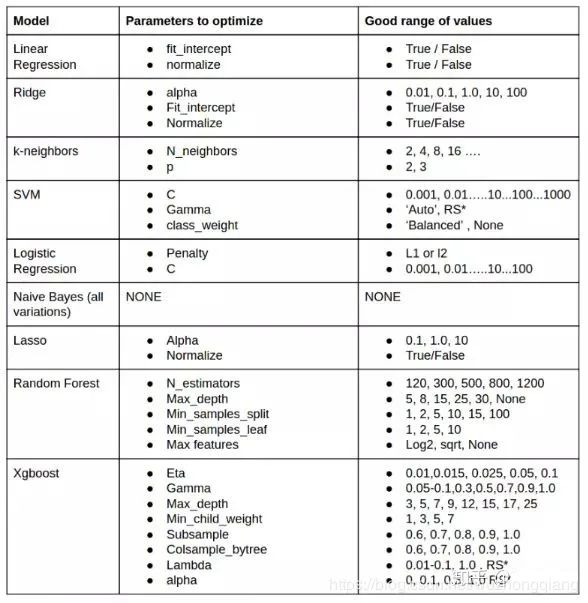

Model Tuning

Greedy tuning

GridSearchCV tuning

Bayesian tuning

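# Candidate values for the search. The objective names are LightGBM regression
# objectives; the num_leaves candidates are illustrative values.
objective = ['regression', 'regression_l1', 'mape', 'huber', 'fair']
num_leaves = [3, 5, 10, 15, 20, 40, 55]
max_depth = [1, 3, 5, 10, 15]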

# Greedy tuning: tune one parameter at a time, keeping the best value found so far
best_obj = dict()
for obj in objective:
    model = LGBMRegressor(objective=obj)
    score = np.mean(cross_val_score(model, X=train_X, y=train_y_ln, verbose=0, cv=5, scoring=make_scorer(mean_absolute_error)))
    best_obj[obj] = score

best_leaves = dict()
for leaves in num_leaves:
    model = LGBMRegressor(objective=min(best_obj.items(), key=lambda x: x[1])[0], num_leaves=leaves)
    score = np.mean(cross_val_score(model, X=train_X, y=train_y_ln, verbose=0, cv=5, scoring=make_scorer(mean_absolute_error)))
    best_leaves[leaves] = score

best_depth = dict()
for depth in max_depth:
    model = LGBMRegressor(objective=min(best_obj.items(), key=lambda x: x[1])[0],
                          num_leaves=min(best_leaves.items(), key=lambda x: x[1])[0],
                          max_depth=depth)
    score = np.mean(cross_val_score(model, X=train_X, y=train_y_ln, verbose=0, cv=5, scoring=make_scorer(mean_absolute_error)))
    best_depth[depth] = score
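The three loops above follow one pattern, which can be written generically. The sketch below is an illustration rather than a drop-in replacement: `greedy_search` and `toy_score` are hypothetical names, and `toy_score` is a made-up stand-in for a cross-validated MAE so that the example runs without training any model.

```python
def greedy_search(score_fn, param_grid):
    """Tune one parameter at a time, fixing each at its best value before moving on."""
    best_params = {}
    for name, candidates in param_grid.items():
        scores = {value: score_fn({**best_params, name: value}) for value in candidates}
        best_params[name] = min(scores, key=scores.get)  # lower score is better
    return best_params

# Hypothetical stand-in for a cross-validated MAE: smallest at num_leaves=40, max_depth=10
def toy_score(params):
    num_leaves = params.get('num_leaves', 31)
    max_depth = params.get('max_depth', 5)
    return abs(num_leaves - 40) / 100 + abs(max_depth - 10) / 100

grid = {'num_leaves': [3, 5, 10, 15, 20, 40, 55], 'max_depth': [1, 3, 5, 10, 15]}
print(greedy_search(toy_score, grid))  # {'num_leaves': 40, 'max_depth': 10}
```

Greedy tuning evaluates 7 + 5 = 12 settings here instead of all 35 combinations. The price is that it can miss the best combination when parameters interact, because earlier choices are never revisited.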

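from sklearn.model_selection import GridSearchCV

parameters = {'objective': objective, 'num_leaves': num_leaves, 'max_depth': max_depth}
model = LGBMRegressor()
clf = GridSearchCV(model, parameters, cv=5)
# Fit on the log-transformed target, consistent with the other experiments
clf = clf.fit(train_X, train_y_ln)
clf.best_params_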
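Conceptually, a grid search scores every combination of the candidate values and keeps the best one. The following pure-Python sketch shows that exhaustive loop; it is not how GridSearchCV is implemented (which also handles cross-validation and refitting), and `grid_search` and `toy_score` are hypothetical names with a made-up score where lower is better.

```python
from itertools import product

def grid_search(score_fn, param_grid):
    """Score every combination in the grid; return the best one, its score and the count."""
    names = list(param_grid)
    best_params, best_score = None, float('inf')
    n_evaluated = 0
    for values in product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        score = score_fn(params)
        n_evaluated += 1
        if score < best_score:           # keep the first combination with the lowest score
            best_params, best_score = params, score
    return best_params, best_score, n_evaluated

# Hypothetical score with an interaction between the two parameters
def toy_score(params):
    return (params['num_leaves'] - 4 * params['max_depth']) ** 2

grid = {'num_leaves': [10, 20, 40], 'max_depth': [3, 5, 10]}
print(grid_search(toy_score, grid))  # ({'num_leaves': 20, 'max_depth': 5}, 0, 9)
```

The number of evaluations is the product of the list lengths (3 x 3 = 9 here), which is why the cost grows quickly as more parameters or candidate values are added.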

model = LGBMRegressor(objective='regression',
                      num_leaves=55,
                      max_depth=15)
np.mean(cross_val_score(model, X=train_X, y=train_y_ln, verbose=0, cv=5, scoring=make_scorer(mean_absolute_error)))

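0.13626164479243302

Bayesian tuning uses a Gaussian process and takes previous parameter evaluations into account, continually updating the prior; grid search does not use information from previous evaluations.

Bayesian tuning needs few iterations and is fast; grid search is slow, and with many parameters the number of combinations explodes.

Bayesian tuning remains robust on non-convex problems; grid search can easily end up in a local optimum on non-convex problems.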

Define the objective function (rf_cv): pass in the parameters to optimize, build the model, and return the score to be optimized

Define the parameter ranges

Run the optimization (maximizing or minimizing the score)

Retrieve the optimization result

from bayes_opt import BayesianOptimization

def rf_cv(num_leaves, max_depth, subsample, min_child_samples):
    # The optimizer proposes floats, so integer-valued parameters are cast with int()
    val = cross_val_score(
        LGBMRegressor(objective='regression_l1',
                      num_leaves=int(num_leaves),
                      max_depth=int(max_depth),
                      subsample=subsample,
                      min_child_samples=int(min_child_samples)
                      ),
        X=train_X, y=train_y_ln, verbose=0, cv=5, scoring=make_scorer(mean_absolute_error)
    ).mean()
    # The optimizer maximizes its target, so return 1 - MAE
    return 1 - val

rf_bo = BayesianOptimization(
    rf_cv,
    {
        'num_leaves': (2, 100),
        'max_depth': (2, 100),
        'subsample': (0.1, 1),
        'min_child_samples': (2, 100)
    }
)

rf_bo.maximize()
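To make the Gaussian-process idea behind Bayesian tuning more concrete, here is a toy one-dimensional sketch written with NumPy only. It is not how the `bayes_opt` package is implemented: the RBF kernel, the upper-confidence-bound acquisition rule, the candidate grid and the `objective` function are all assumptions chosen for illustration.

```python
import numpy as np

def rbf_kernel(a, b, length_scale=1.0):
    """Squared-exponential kernel between two 1-D arrays of points."""
    diff = a[:, None] - b[None, :]
    return np.exp(-0.5 * (diff / length_scale) ** 2)

def gp_posterior(x_obs, y_obs, x_new, noise=1e-6):
    """Posterior mean and standard deviation of a zero-mean GP at x_new."""
    K = rbf_kernel(x_obs, x_obs) + noise * np.eye(len(x_obs))
    K_s = rbf_kernel(x_obs, x_new)
    solved = np.linalg.solve(K, K_s)            # K^{-1} K_s
    mean = solved.T @ y_obs
    var = 1.0 - np.sum(K_s * solved, axis=0)    # prior variance is 1 for this kernel
    return mean, np.sqrt(np.clip(var, 0.0, None))

def objective(x):
    """Hypothetical score to maximise; stands in for a cross-validated metric."""
    return -(x - 2.0) ** 2

candidates = np.linspace(0.0, 5.0, 201)
x_obs = np.array([0.0, 5.0])                    # two initial probes
y_obs = objective(x_obs)

for _ in range(10):
    # Fit the GP to the centred observations, then pick the most promising candidate
    mean, std = gp_posterior(x_obs, y_obs - y_obs.mean(), candidates)
    ucb = mean + y_obs.mean() + 2.0 * std       # upper confidence bound acquisition
    x_next = candidates[np.argmax(ucb)]
    x_obs = np.append(x_obs, x_next)
    y_obs = np.append(y_obs, objective(x_next))

best_x = x_obs[np.argmax(y_obs)]
print(best_x, y_obs.max())
```

Each new probe is chosen using everything observed so far: the posterior mean favours regions that have scored well, while the standard deviation term favours regions that have not been explored yet. A grid search, by contrast, fixes all of its probes in advance.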
