A Summary of Feature Selection Methods in Machine Learning

The collected data is in the wrong format (e.g. SQL database, JSON, CSV)

Missing values and outliers

Standardization

Reducing the inherent noise in the dataset (some stored data may be corrupted)

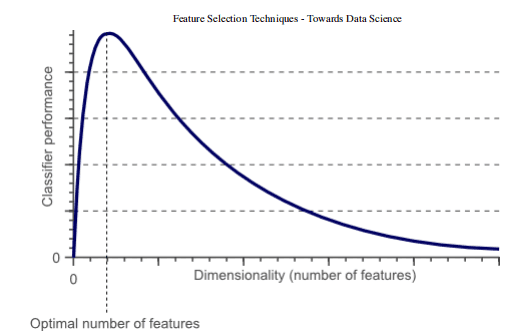

Some features in the dataset may not carry any information useful for the analysis

Improved accuracy

Lower risk of overfitting

Faster training

Better data visualization

Greater interpretability of the model

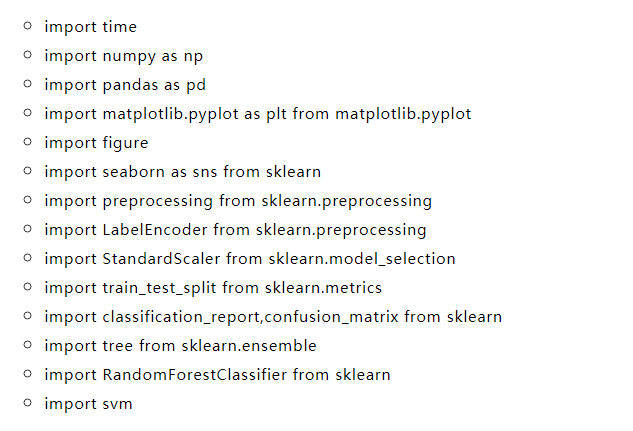

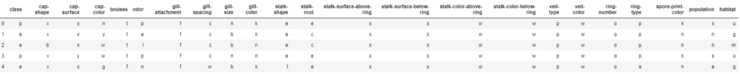

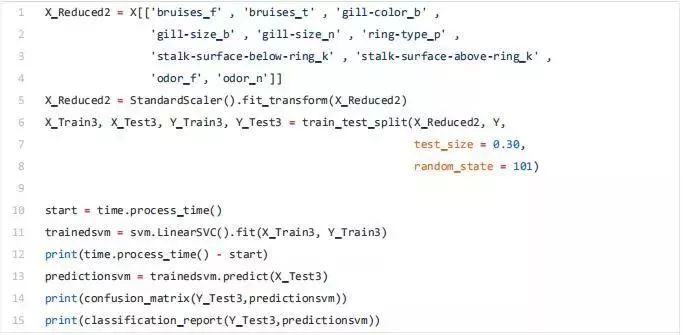

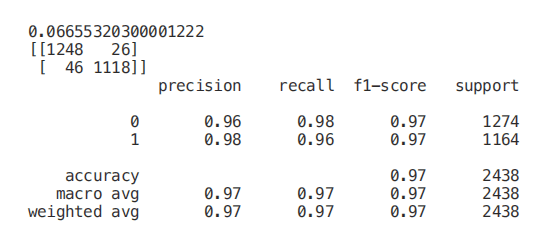

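    import pandas as pd
    from sklearn.model_selection import train_test_split
    from sklearn.preprocessing import LabelEncoder, StandardScaler

    # df is assumed to be loaded already, with the target in the 'class' column
    X = df.drop(['class'], axis=1)
    Y = df['class']
    X = pd.get_dummies(X, prefix_sep='_')   # one-hot encode categorical features
    Y = LabelEncoder().fit_transform(Y)     # encode labels as integers
    X2 = StandardScaler().fit_transform(X)  # standardize the features
    X_Train, X_Test, Y_Train, Y_Test = train_test_split(
        X2, Y, test_size=0.30, random_state=101)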

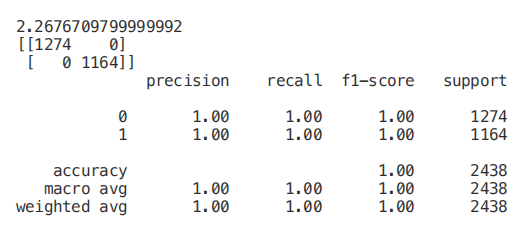

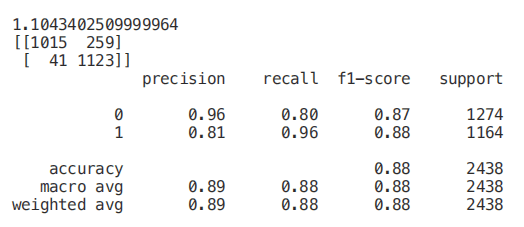

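    import time
    from sklearn.ensemble import RandomForestClassifier
    from sklearn.metrics import classification_report, confusion_matrix

    start = time.process_time()
    trainedforest = RandomForestClassifier(n_estimators=700).fit(X_Train, Y_Train)
    print(time.process_time() - start)  # CPU time spent on training
    predictionforest = trainedforest.predict(X_Test)
    print(confusion_matrix(Y_Test, predictionforest))
    print(classification_report(Y_Test, predictionforest))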

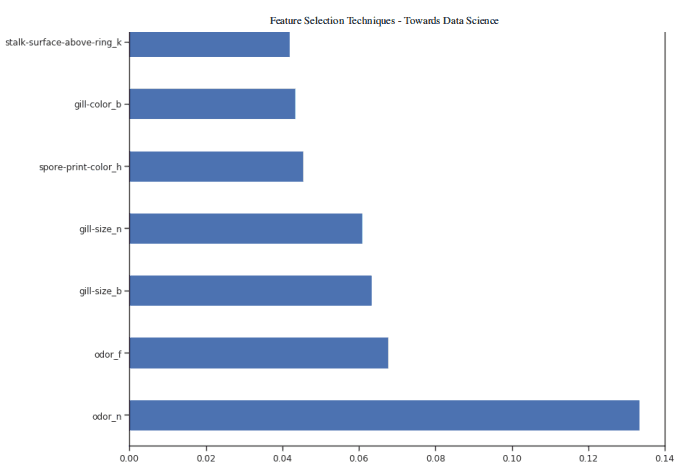

    from matplotlib.pyplot import figure

    figure(num=None, figsize=(20, 22), dpi=80, facecolor='w', edgecolor='k')
    feat_importances = pd.Series(trainedforest.feature_importances_, index=X.columns)
    feat_importances.nlargest(7).plot(kind='barh')  # seven most important features

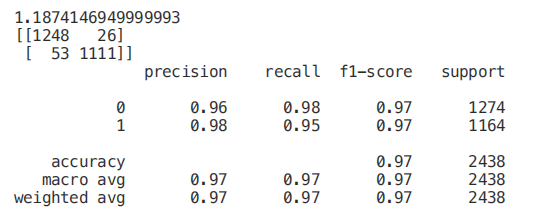

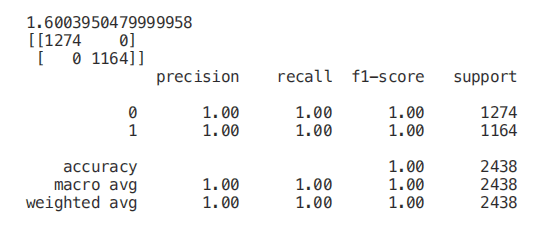

    X_Reduced = X[['odor_n', 'odor_f', 'gill-size_n', 'gill-size_b']]
    X_Reduced = StandardScaler().fit_transform(X_Reduced)
    X_Train2, X_Test2, Y_Train2, Y_Test2 = train_test_split(
        X_Reduced, Y, test_size=0.30, random_state=101)

    start = time.process_time()
    trainedforest = RandomForestClassifier(n_estimators=700).fit(X_Train2, Y_Train2)
    print(time.process_time() - start)
    predictionforest = trainedforest.predict(X_Test2)
    print(confusion_matrix(Y_Test2, predictionforest))
    print(classification_report(Y_Test2, predictionforest))

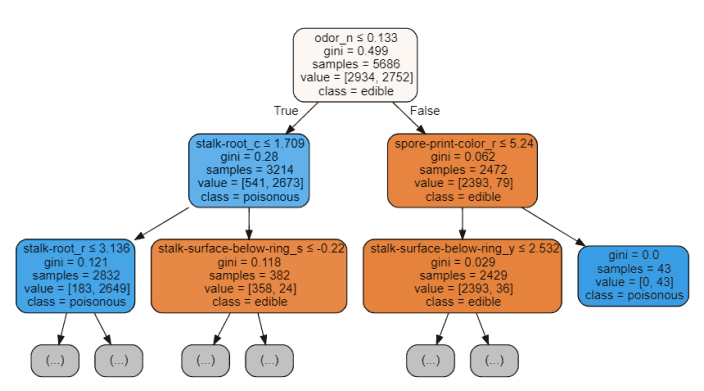

    from sklearn import tree

    start = time.process_time()
    trainedtree = tree.DecisionTreeClassifier().fit(X_Train, Y_Train)
    print(time.process_time() - start)
    predictionstree = trainedtree.predict(X_Test)
    print(confusion_matrix(Y_Test, predictionstree))
    print(classification_report(Y_Test, predictionstree))

    import graphviz
    from sklearn.tree import DecisionTreeClassifier, export_graphviz

    # Export the top two levels of the trained tree in DOT format
    data = export_graphviz(trainedtree, out_file=None,
                           feature_names=list(X.columns),
                           class_names=['edible', 'poisonous'],
                           filled=True, rounded=True,
                           max_depth=2, special_characters=True)
    graph = graphviz.Source(data)
    graph  # renders inline when run in a notebook

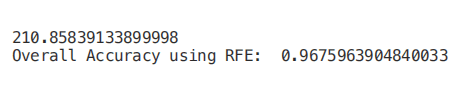

    from sklearn.feature_selection import RFE

    model = RandomForestClassifier(n_estimators=700)
    # n_features_to_select must be passed by keyword in current scikit-learn
    rfe = RFE(model, n_features_to_select=4)
    start = time.process_time()
    RFE_X_Train = rfe.fit_transform(X_Train, Y_Train)
    RFE_X_Test = rfe.transform(X_Test)
    rfe = rfe.fit(RFE_X_Train, Y_Train)  # refit on the four selected features
    print(time.process_time() - start)
    print("Overall Accuracy using RFE: ", rfe.score(RFE_X_Test, Y_Test))
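To check which columns RFE actually kept, a fitted selector exposes `support_` (a boolean mask) and `ranking_` (where 1 marks a kept feature). The sketch below does not use the article's dataset: it assumes a small synthetic matrix in which, by construction, only columns 0 and 1 carry information about the label, and it uses a smaller forest than the article so that it runs quickly.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.feature_selection import RFE

# Synthetic data (an assumption for illustration): 300 samples, 6 features.
rng = np.random.default_rng(0)
n_samples = 300
y = rng.integers(0, 2, size=n_samples)
X = rng.normal(size=(n_samples, 6))              # columns 2..5 stay pure noise
X[:, 0] = y + 0.1 * rng.normal(size=n_samples)   # informative
X[:, 1] = -y + 0.1 * rng.normal(size=n_samples)  # informative

rfe = RFE(RandomForestClassifier(n_estimators=50, random_state=0),
          n_features_to_select=2)
X_reduced = rfe.fit_transform(X, y)

selected = np.flatnonzero(rfe.support_).tolist()  # indices of the kept columns
print("Selected columns:", selected)
print("Ranking (1 = kept):", rfe.ranking_.tolist())
```

With a DataFrame, the same mask can be applied to the column names (for example `X.columns[rfe.support_]`) to read off the selected feature names.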

SelectFromModel

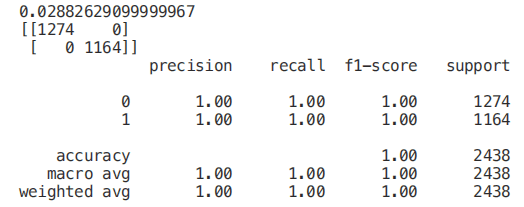

    from sklearn.ensemble import ExtraTreesClassifier
    from sklearn.feature_selection import SelectFromModel

    model = ExtraTreesClassifier()
    start = time.process_time()
    model = model.fit(X_Train, Y_Train)
    model = SelectFromModel(model, prefit=True)
    print(time.process_time() - start)
    Selected_X = model.transform(X_Train)

    start = time.process_time()
    trainedforest = RandomForestClassifier(n_estimators=700).fit(Selected_X, Y_Train)
    print(time.process_time() - start)
    Selected_X_Test = model.transform(X_Test)
    predictionforest = trainedforest.predict(Selected_X_Test)
    print(confusion_matrix(Y_Test, predictionforest))
    print(classification_report(Y_Test, predictionforest))

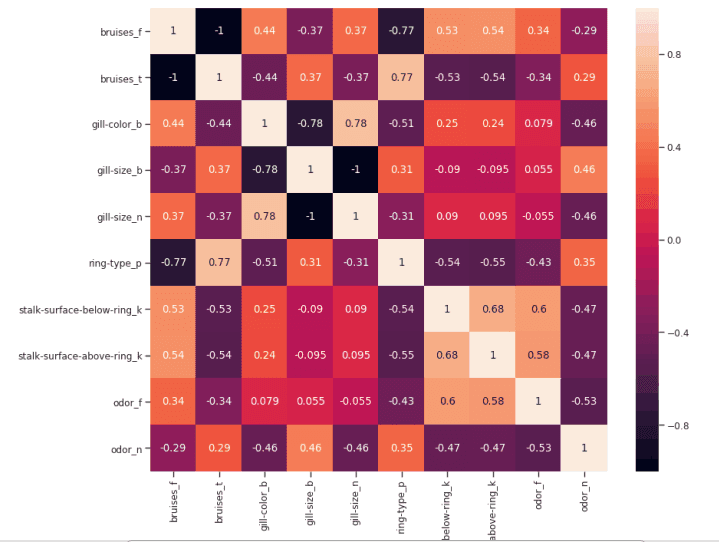

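If the correlation between two features is 0, the two are not linearly related: changing one is not associated with any consistent increase or decrease in the other.

If the correlation between two features is greater than 0, an increase in one tends to go with an increase in the other (the closer the coefficient is to 1, the stronger this relationship).

If the correlation between two features is less than 0, an increase in one tends to go with a decrease in the other (the closer the coefficient is to -1, the stronger this relationship).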
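The three cases can be reproduced with a few lines of NumPy. This is a minimal sketch on made-up, noise-free data (the arrays and the `pearson` helper are assumptions for illustration, not part of the article's dataset).

```python
import numpy as np

x = np.linspace(-1.0, 1.0, 201)  # evenly spaced and symmetric around 0


def pearson(a, b):
    """Pearson correlation coefficient of two 1-D arrays."""
    return float(np.corrcoef(a, b)[0, 1])


r_pos = pearson(x, 2.0 * x + 1.0)  # rises with x
r_neg = pearson(x, -3.0 * x)       # falls as x rises
r_sq = pearson(x, x ** 2)          # depends on x, but not linearly

print(f"positive: {r_pos:.3f}, negative: {r_neg:.3f}, squared: {r_sq:.3f}")
```

The last case is a reminder that a coefficient near 0 only rules out a linear relationship: `x ** 2` is fully determined by `x`, yet its correlation with `x` is essentially zero here.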

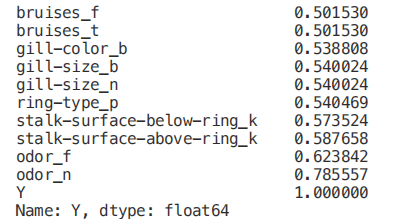

    Numeric_df = pd.DataFrame(X)
    Numeric_df['Y'] = Y
    corr = Numeric_df.corr()
    corr_y = abs(corr["Y"])              # absolute correlation with the target
    highest_corr = corr_y[corr_y > 0.5]  # keep features with |correlation| > 0.5
    highest_corr.sort_values(ascending=True)

    import seaborn as sns

    figure(num=None, figsize=(12, 10), dpi=80, facecolor='w', edgecolor='k')
    corr2 = Numeric_df[['bruises_f', 'bruises_t', 'gill-color_b', 'gill-size_b',
                        'gill-size_n', 'ring-type_p', 'stalk-surface-below-ring_k',
                        'stalk-surface-above-ring_k', 'odor_f', 'odor_n']].corr()
    sns.heatmap(corr2, annot=True, fmt=".2g")

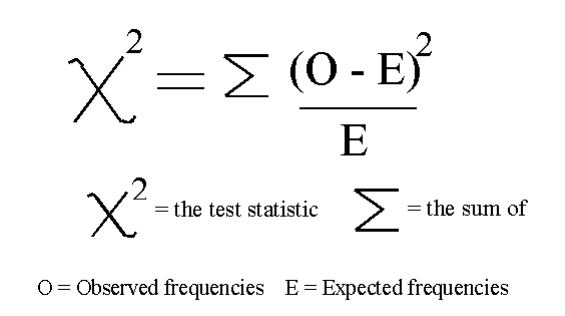

Univariate Selection

Classification = chi2, f_classif, mutual_info_classif

Regression = f_regression, mutual_info_regression
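As a rough illustration of how the classification score functions listed above plug into `SelectKBest`, the sketch below runs all three on the same synthetic data. The data is an assumption made for the example: five non-negative features, of which only column 0 is tied to the label. Wrapping `mutual_info_classif` in `functools.partial` is just one way to fix its random seed.

```python
from functools import partial

import numpy as np
from sklearn.feature_selection import (SelectKBest, chi2, f_classif,
                                       mutual_info_classif)

# Synthetic data (assumption): all values lie in [0, 1), as chi2 needs
# non-negative inputs. Column 0 separates the classes; the rest is noise.
rng = np.random.default_rng(0)
n_samples = 300
y = rng.integers(0, 2, size=n_samples)
X = rng.random((n_samples, 5))
X[:, 0] = 0.8 * y + 0.2 * rng.random(n_samples)

score_funcs = {
    "chi2": chi2,
    "f_classif": f_classif,
    "mutual_info_classif": partial(mutual_info_classif, random_state=0),
}

selected = {}
for name, func in score_funcs.items():
    selector = SelectKBest(score_func=func, k=1).fit(X, y)
    selected[name] = selector.get_support(indices=True).tolist()
    print(name, "->", selected[name])
```

On real data the three score functions can disagree, since they measure different kinds of dependence, so it may be worth comparing their selections before settling on one.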

    from sklearn import preprocessing
    from sklearn.feature_selection import SelectKBest
    from sklearn.feature_selection import chi2

    # chi2 requires non-negative inputs, so rescale the features to [0, 1] first
    min_max_scaler = preprocessing.MinMaxScaler()
    Scaled_X = min_max_scaler.fit_transform(X2)
    X_new = SelectKBest(chi2, k=2).fit_transform(Scaled_X, Y)
    X_Train3, X_Test3, Y_Train3, Y_Test3 = train_test_split(
        X_new, Y, test_size=0.30, random_state=101)

    start = time.process_time()
    trainedforest = RandomForestClassifier(n_estimators=700).fit(X_Train3, Y_Train3)
    print(time.process_time() - start)
    predictionforest = trainedforest.predict(X_Test3)
    print(confusion_matrix(Y_Test3, predictionforest))
    print(classification_report(Y_Test3, predictionforest))

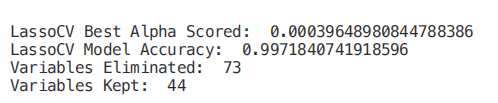

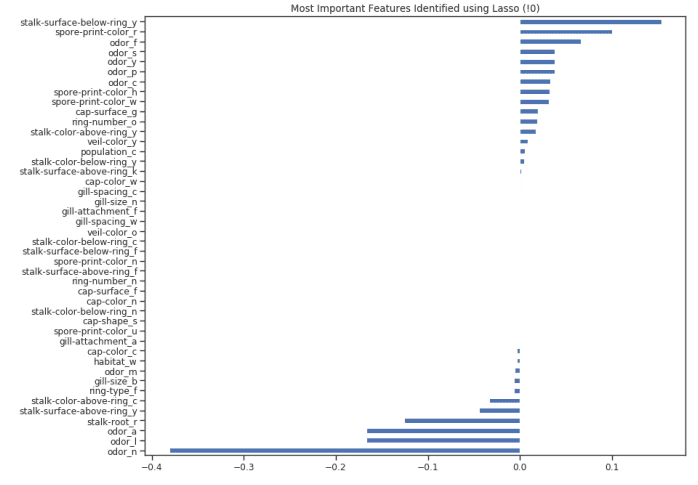

    from sklearn.linear_model import LassoCV

    regr = LassoCV(cv=5, random_state=101)
    regr.fit(X_Train, Y_Train)
    print("LassoCV Best Alpha Scored: ", regr.alpha_)
    # Note: score() returns R^2 for a regressor, not classification accuracy
    print("LassoCV Model Accuracy: ", regr.score(X_Test, Y_Test))
    # Skip the 'Y' column in case it was added to X in an earlier step
    feature_names = [c for c in X.columns if c != 'Y']
    model_coef = pd.Series(regr.coef_, index=feature_names)
    print("Variables Eliminated: ", str(sum(model_coef == 0)))
    print("Variables Kept: ", str(sum(model_coef != 0)))

    import matplotlib.pyplot as plt

    figure(num=None, figsize=(12, 10), dpi=80, facecolor='w', edgecolor='k')
    top_coef = model_coef.sort_values()
    top_coef[top_coef != 0].plot(kind="barh")  # plot only the non-zero coefficients
    plt.title("Most Important Features Identified using Lasso (!0)")
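The Lasso code above counts coefficients that are exactly zero, which is what lets L1 regularization act as a feature selector. The sketch below shows the effect in isolation. It rests on assumptions that are not in the article: a synthetic regression target that depends only on columns 0 and 1, and a fixed `alpha=0.1` instead of the cross-validated value chosen by `LassoCV`.

```python
import numpy as np
from sklearn.linear_model import Lasso

# Synthetic data (assumption): only columns 0 and 1 influence the target.
rng = np.random.default_rng(0)
n_samples = 200
X = rng.normal(size=(n_samples, 5))
y = 3.0 * X[:, 0] - 2.0 * X[:, 1] + 0.1 * rng.normal(size=n_samples)

lasso = Lasso(alpha=0.1).fit(X, y)

kept = np.flatnonzero(lasso.coef_ != 0).tolist()
eliminated = np.flatnonzero(lasso.coef_ == 0).tolist()
print("Coefficients:", np.round(lasso.coef_, 3))
print("Kept:", kept, "Eliminated:", eliminated)
```

A larger `alpha` shrinks coefficients harder and eliminates more features; the surviving coefficients are also pulled toward zero, so they underestimate the true effects somewhat.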

9.9元秒殺【特征工程與模型優(yōu)化特訓(xùn)】,兩大實(shí)戰(zhàn)項(xiàng)目,學(xué)習(xí)多種優(yōu)化方法 掌握比賽上分利器。優(yōu)秀學(xué)員還可獲得1V1簡(jiǎn)歷優(yōu)化及內(nèi)推名額!在售價(jià)199.9元,限時(shí)9.9元秒!

課程詳情如下???

參與方式:

掃描上方海報(bào)二維碼

回復(fù)“7”

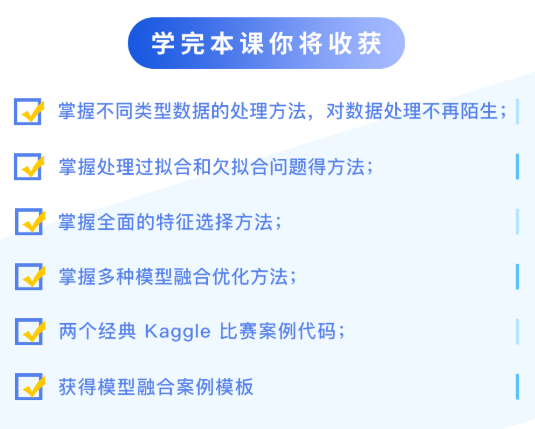

課程從數(shù)據(jù)采集到數(shù)據(jù)處理、到特征選擇、再到模型調(diào)優(yōu),帶你掌握一套完整的機(jī)器學(xué)習(xí)流程,對(duì)于不同類(lèi)型的數(shù)據(jù),不同場(chǎng)景下的問(wèn)題,選擇合適的特征工程方法和模型優(yōu)化方法進(jìn)行處理尤為重要。

本次課程還會(huì)提供兩個(gè)經(jīng)典的 Kaggle 比賽案例和詳細(xì)的模型融合模板,帶你更容易地理解機(jī)器學(xué)習(xí),掌握比賽上分利器。

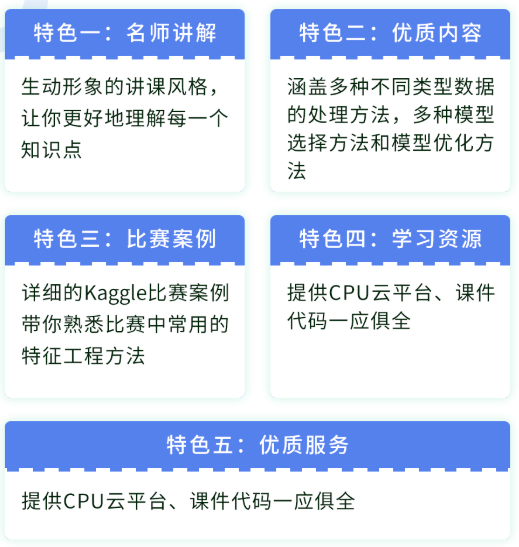

五大課程特色

參與方式:

掃描上方海報(bào)二維碼

回復(fù)“7”

戳↓↓“閱讀原文”查看課程詳情!(機(jī)器學(xué)習(xí)集訓(xùn)營(yíng)預(yù)習(xí)課之一)