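[Academic] CVPR 2021 Accepted Papers: 100+ Papers Across 22 Topics | Continuously Updated

Overview

CVPR 2021 decisions have been released. This post collects the latest accepted CVPR papers, with links to the papers, code, and related write-ups.

https://github.com/extreme-assistant/CVPR2021-Paper-Code-Interpretation/blob/master/CVPR2021.md

Official website: http://cvpr2021.thecvf.com

Conference dates: June 19-25, 2021

Acceptance announcement: February 28, 2021

1. CVPR 2021 Accepted Papers and Code by Topic

Categories: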

Detection

Image Object Detection

[7] Semantic Relation Reasoning for Shot-Stable Few-Shot Object Detection

paper:https://arxiv.org/abs/2103.01903

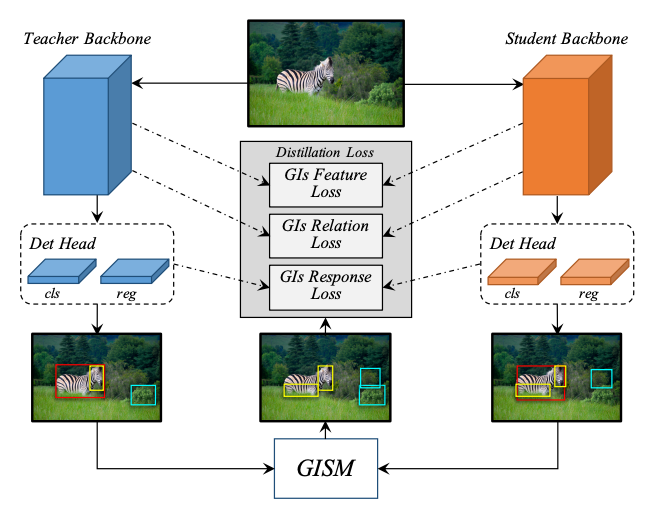

[6] General Instance Distillation for Object Detection

paper:https://arxiv.org/abs/2103.02340

[5] Instance Localization for Self-supervised Detection Pretraining

paper:https://arxiv.org/pdf/2102.08318.pdf

code:https://github.com/limbo0000/InstanceLoc

[4] Multiple Instance Active Learning for Object Detection

paper:https://github.com/yuantn/MIAL/raw/master/paper.pdf

code:https://github.com/yuantn/MIAL

[3] Towards Open World Object Detection

paper:Towards Open World Object Detection

code:https://github.com/JosephKJ/OWOD

[2] Positive-Unlabeled Data Purification in the Wild for Object Detection

[1] UP-DETR: Unsupervised Pre-training for Object Detection with Transformers

paper:https://arxiv.org/pdf/2011.09094.pdf

Write-up:

Unsupervised pre-training for detectors: https://www.zhihu.com/question/432321109/answer/1606004872

Video Object Detection

[3] Depth from Camera Motion and Object Detection

paper:https://arxiv.org/abs/2103.01468

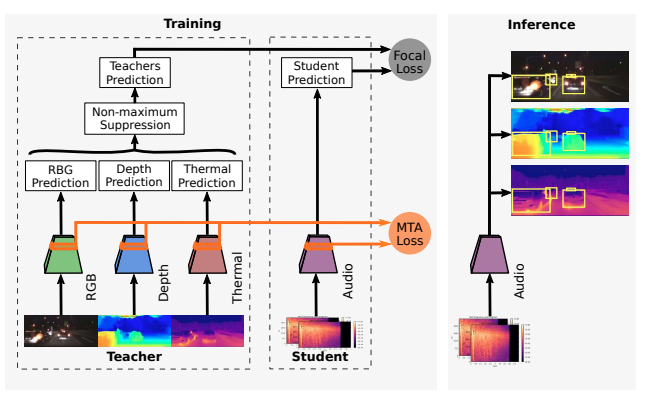

[2] There is More than Meets the Eye: Self-Supervised Multi-Object Detection and Tracking with Sound by Distilling Multimodal Knowledge

paper:https://arxiv.org/abs/2103.01353

project:http://rl.uni-freiburg.de/research/multimodal-distill

[1] Dogfight: Detecting Drones from Drone Videos

3D Object Detection

[2] 3DIoUMatch: Leveraging IoU Prediction for Semi-Supervised 3D Object Detection

paper:https://arxiv.org/pdf/2012.04355.pdf

code:https://github.com/THU17cyz/3DIoUMatch

project:https://thu17cyz.github.io/3DIoUMatch/

video:https://youtu.be/nuARjhkQN2U

[1] Categorical Depth Distribution Network for Monocular 3D Object Detection

paper:https://arxiv.org/abs/2103.01100
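The 3DIoUMatch entry above builds on 3D IoU prediction. For readers unfamiliar with the metric itself, here is a minimal Python sketch of IoU between two axis-aligned 3D boxes. It is not the paper's code: the `(x_min, y_min, z_min, x_max, y_max, z_max)` box format and the function name `iou_3d` are assumptions, and LiDAR detectors usually predict boxes rotated about the vertical axis, which this sketch ignores.

```python
def iou_3d(box_a, box_b):
    """IoU of two axis-aligned 3D boxes.

    Boxes are assumed to be (x_min, y_min, z_min, x_max, y_max, z_max).
    Rotated boxes, as used by most LiDAR detectors, are NOT handled here.
    """
    # Intersection volume: product of per-axis overlaps (0 if any axis is disjoint).
    overlap = 1.0
    for axis in range(3):
        lo = max(box_a[axis], box_b[axis])
        hi = min(box_a[axis + 3], box_b[axis + 3])
        overlap *= max(0.0, hi - lo)

    def volume(box):
        return (box[3] - box[0]) * (box[4] - box[1]) * (box[5] - box[2])

    union = volume(box_a) + volume(box_b) - overlap
    return overlap / union if union > 0 else 0.0


if __name__ == "__main__":
    a = (0, 0, 0, 2, 2, 2)  # volume 8
    b = (1, 1, 1, 3, 3, 3)  # volume 8, shares a 1x1x1 cube with a
    print(iou_3d(a, b))     # 1 / (8 + 8 - 1), about 0.0667
```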

Activity Detection

[1] Coarse-Fine Networks for Temporal Activity Detection in Videos

paper:https://arxiv.org/abs/2103.01302

Anomaly Detection

[1] Multiresolution Knowledge Distillation for Anomaly Detection

paper:https://arxiv.org/abs/2011.11108

Image Segmentation

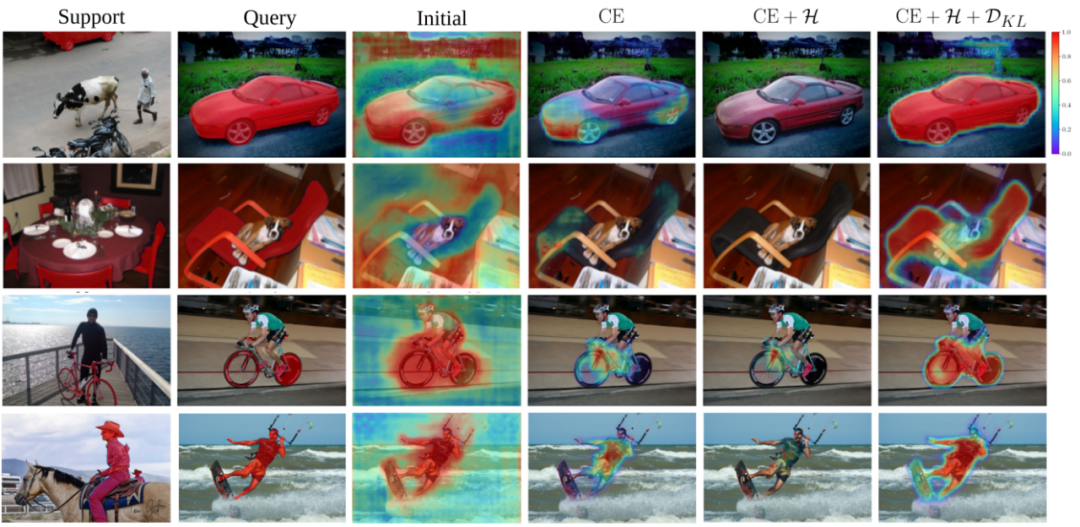

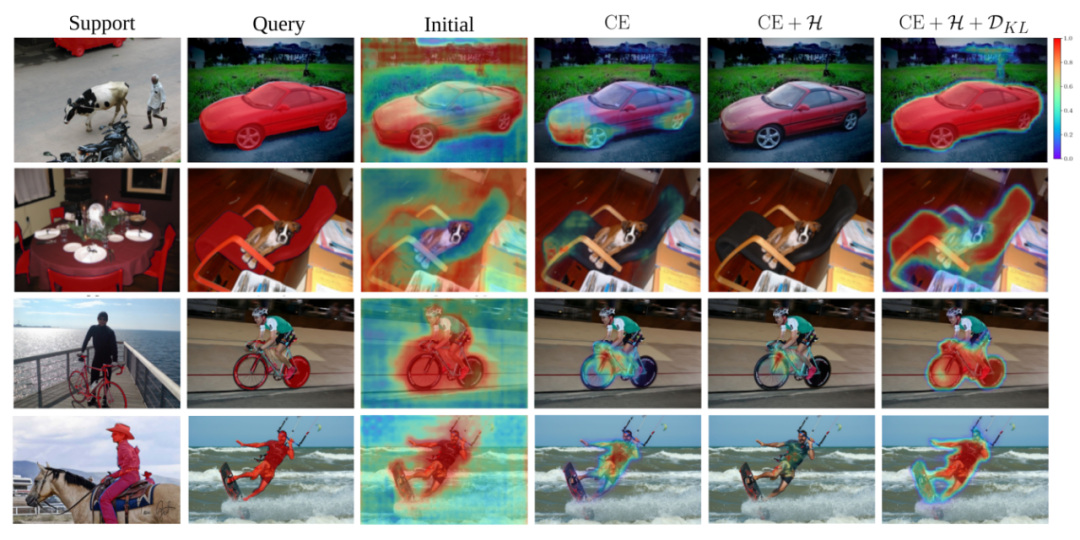

[2] Few-Shot Segmentation Without Meta-Learning: A Good Transductive Inference Is All You Need?

paper:https://arxiv.org/abs/2012.06166

code:https://github.com/mboudiaf/RePRI-for-Few-Shot-Segmentation

[1] PointFlow: Flowing Semantics Through Points for Aerial Image Segmentation

Panoptic Segmentation

[2] Cross-View Regularization for Domain Adaptive Panoptic Segmentation

paper:https://arxiv.org/abs/2103.02584

[1] 4D Panoptic LiDAR Segmentation

paper:https://arxiv.org/abs/2102.12472

Semantic Segmentation

[2] Towards Semantic Segmentation of Urban-Scale 3D Point Clouds: A Dataset, Benchmarks and Challenges

paper:https://arxiv.org/abs/2009.03137

code:https://github.com/QingyongHu/SensatUrban

[1] PLOP: Learning without Forgetting for Continual Semantic Segmentation

paper:https://arxiv.org/abs/2011.11390

Instance Segmentation

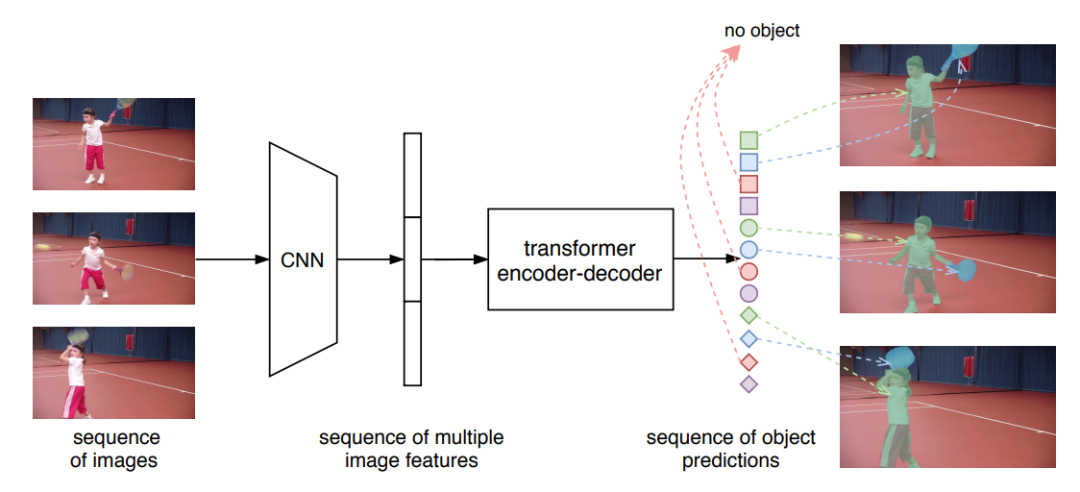

[1] End-to-End Video Instance Segmentation with Transformers

paper:https://arxiv.org/abs/2011.14503

Matting

[1] Real-Time High Resolution Background Matting

paper:https://arxiv.org/abs/2012.07810

code:https://github.com/PeterL1n/BackgroundMattingV2

project:https://grail.cs.washington.edu/projects/background-matting-v2/

video:https://youtu.be/oMfPTeYDF9g

Estimation

Human Pose Estimation

[2] CanonPose: Self-supervised Monocular 3D Human Pose Estimation in the Wild

[1] PCLs: Geometry-aware Neural Reconstruction of 3D Pose with Perspective Crop Layers

paper:https://arxiv.org/abs/2011.13607

Flow/Pose/Motion Estimation

[3] GDR-Net: Geometry-Guided Direct Regression Network for Monocular 6D Object Pose Estimation

paper:http://arxiv.org/abs/2102.12145

code:https://github.com/THU-DA-6D-Pose-Group/GDR-Net

[2] Robust Neural Routing Through Space Partitions for Camera Relocalization in Dynamic Indoor Environments

paper:https://arxiv.org/abs/2012.04746

project:https://ai.stanford.edu/~hewang/

[1] MultiBodySync: Multi-Body Segmentation and Motion Estimation via 3D Scan Synchronization

paper:https://arxiv.org/pdf/2101.06605.pdf

code:https://github.com/huangjh-pub/multibody-sync

Depth Estimation

Image Processing

Image Restoration/Super-Resolution

[3] Multi-Stage Progressive Image Restoration

paper:https://arxiv.org/abs/2102.02808

code:https://github.com/swz30/MPRNet

[2] Data-Free Knowledge Distillation For Image Super-Resolution (the super-resolution version of the DAFL algorithm)

[1] AdderSR: Towards Energy Efficient Image Super-Resolution (applies adder networks to image super-resolution)

paper:https://arxiv.org/pdf/2009.08891.pdf

code:https://github.com/huawei-noah/AdderNet

Write-up: Huawei open-sources adder neural networks

Image Shadow Removal

[1] Auto-Exposure Fusion for Single-Image Shadow Removal

paper:https://arxiv.org/abs/2103.01255

code:https://github.com/tsingqguo/exposure-fusion-shadow-removal

Image Denoising/Deblurring/Deraining/Dehazing

[1] DeFMO: Deblurring and Shape Recovery of Fast Moving Objects

paper:https://arxiv.org/abs/2012.00595

code:https://github.com/rozumden/DeFMO

video:https://www.youtube.com/watch?v=pmAynZvaaQ4

Image Editing

[1] Exploiting Spatial Dimensions of Latent in GAN for Real-time Image Editing

Image Translation

[2] Image-to-image Translation via Hierarchical Style Disentanglement

paper:https://arxiv.org/abs/2103.01456

code:https://github.com/imlixinyang/HiSD

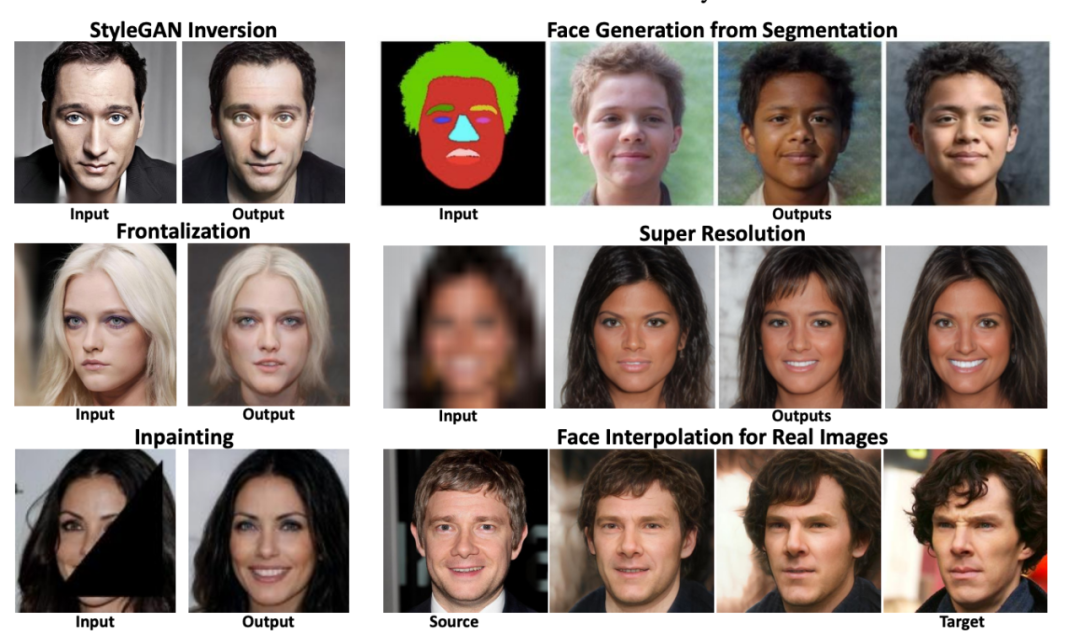

[1] Encoding in Style: a StyleGAN Encoder for Image-to-Image Translation

paper:https://arxiv.org/abs/2008.00951

code:https://github.com/eladrich/pixel2style2pixel

project:https://eladrich.github.io/pixel2style2pixel/

Face

[5] Cross Modal Focal Loss for RGBD Face Anti-Spoofing

paper:https://arxiv.org/abs/2103.00948

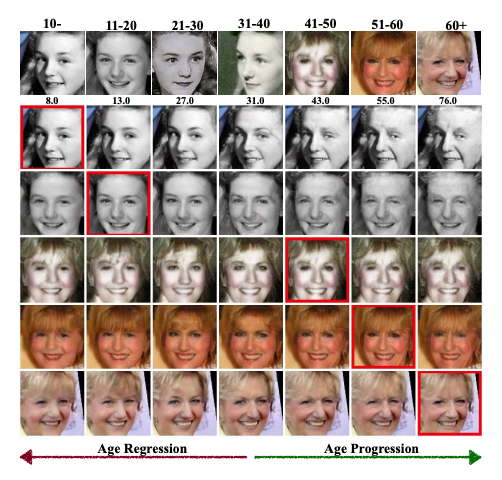

[4] When Age-Invariant Face Recognition Meets Face Age Synthesis: A Multi-Task Learning Framework

paper:https://arxiv.org/abs/2103.01520

code:https://github.com/Hzzone/MTLFace

[3] Multi-attentional Deepfake Detection

paper:https://arxiv.org/abs/2103.02406

[2] Image-to-image Translation via Hierarchical Style Disentanglement

paper:https://arxiv.org/abs/2103.01456

code:https://github.com/imlixinyang/HiSD

[1] A 3D GAN for Improved Large-pose Facial Recognition

paper:https://arxiv.org/pdf/2012.10545.pdf

Object Tracking

[4] HPS: localizing and tracking people in large 3D scenes from wearable sensors

[3] Track to Detect and Segment: An Online Multi-Object Tracker

project:https://jialianwu.com/projects/TraDeS.html

video:https://www.youtube.com/watch?v=oGNtSFHRZJA

[2] Probabilistic Tracklet Scoring and Inpainting for Multiple Object Tracking

paper:https://arxiv.org/abs/2012.02337

[1] Rotation Equivariant Siamese Networks for Tracking

paper:https://arxiv.org/abs/2012.13078

Re-Identification

[1] Meta Batch-Instance Normalization for Generalizable Person Re-Identification

paper:https://arxiv.org/abs/2011.14670

Medical Imaging

[4] Multi-institutional Collaborations for Improving Deep Learning-based Magnetic Resonance Image Reconstruction Using Federated Learning

paper:https://arxiv.org/abs/2103.02148

code:https://github.com/guopengf/FLMRCM

[3] 3D Graph Anatomy Geometry-Integrated Network for Pancreatic Mass Segmentation, Diagnosis, and Quantitative Patient Management

[2] Deep Lesion Tracker: Monitoring Lesions in 4D Longitudinal Imaging Studies

paper:https://arxiv.org/abs/2012.04872

[1] Automatic Vertebra Localization and Identification in CT by Spine Rectification and Anatomically-constrained Optimization

paper:https://arxiv.org/abs/2012.07947

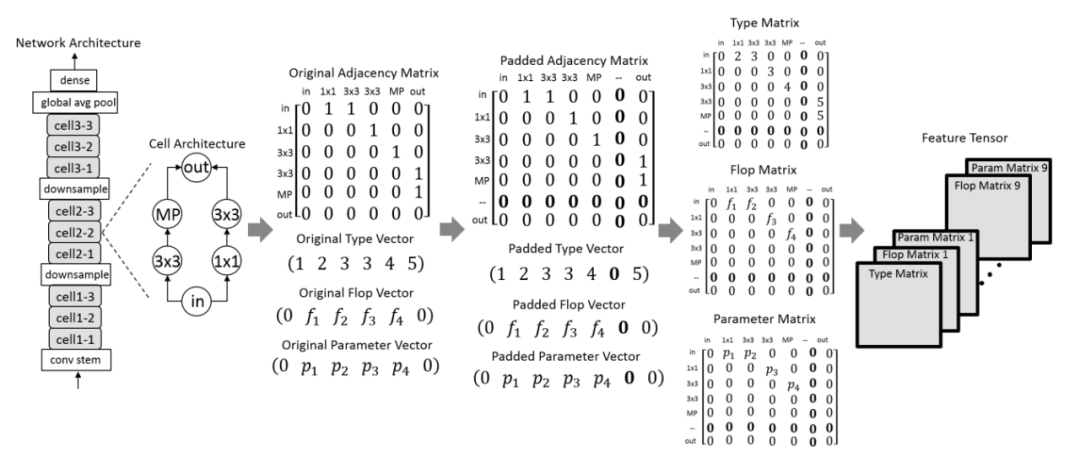

Neural Architecture Search (NAS)

[3] AttentiveNAS: Improving Neural Architecture Search via Attentive

paper:https://arxiv.org/pdf/2011.09011.pdf

[2] ReNAS: Relativistic Evaluation of Neural Architecture Search (on the importance of ranking loss in NAS predictors)

paper:https://arxiv.org/pdf/1910.01523.pdf

[1] HourNAS: Extremely Fast Neural Architecture Search Through an Hourglass Lens (reduces the cost of NAS)

paper:https://arxiv.org/pdf/2005.14446.pdf
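The ReNAS note above stresses ranking loss for NAS accuracy predictors: for search, what matters is ordering candidate architectures correctly, not predicting their exact accuracy. A generic pairwise hinge ranking loss illustrating that idea is sketched below. It is not necessarily the formulation used in ReNAS, and the function name `pairwise_ranking_loss` and the `margin` default are assumptions.

```python
from itertools import combinations


def pairwise_ranking_loss(predicted, actual, margin=0.05):
    """Mean hinge loss over all architecture pairs with distinct true accuracy.

    A pair costs nothing when the predictor orders it like the ground truth
    with a gap of at least `margin`; otherwise the shortfall is penalized.
    (Generic illustration, not ReNAS's exact loss.)
    """
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must have the same length")
    losses = []
    for i, j in combinations(range(len(actual)), 2):
        if actual[i] == actual[j]:
            continue  # ties carry no ordering information
        sign = 1.0 if actual[i] > actual[j] else -1.0
        losses.append(max(0.0, margin - sign * (predicted[i] - predicted[j])))
    return sum(losses) / len(losses) if losses else 0.0


if __name__ == "__main__":
    true_acc = [0.70, 0.60, 0.50]
    # Scores far from the true accuracies, but in the right order.
    print(pairwise_ranking_loss([9.0, 5.0, 1.0], true_acc))  # prints 0.0
    # Same magnitudes, reversed order: positive loss.
    print(pairwise_ranking_loss([1.0, 5.0, 9.0], true_acc))
```

The demo shows the design point: predicted scores nowhere near the true accuracies still incur zero loss as long as their order is right.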

GAN/Generative/Adversarial

[5] Efficient Conditional GAN Transfer with Knowledge Propagation across Classes

paper:https://arxiv.org/abs/2102.06696

code:http://github.com/mshahbazi72/cGANTransfer

[4] Exploiting Spatial Dimensions of Latent in GAN for Real-time Image Editing

[3] Hijack-GAN: Unintended-Use of Pretrained, Black-Box GANs

paper:https://arxiv.org/pdf/2011.14107.pdf

[2] Encoding in Style: a StyleGAN Encoder for Image-to-Image Translation

paper:https://arxiv.org/abs/2008.00951

code:https://github.com/eladrich/pixel2style2pixel

project:https://eladrich.github.io/pixel2style2pixel/

[1] A 3D GAN for Improved Large-pose Facial Recognition

paper:https://arxiv.org/pdf/2012.10545.pdf

3D Vision

[2] A Deep Emulator for Secondary Motion of 3D Characters

paper:https://arxiv.org/abs/2103.01261

[1] 3D CNNs with Adaptive Temporal Feature Resolutions

paper:https://arxiv.org/abs/2011.08652

3D Point Cloud

[6] Towards Semantic Segmentation of Urban-Scale 3D Point Clouds: A Dataset, Benchmarks and Challenges

paper:https://arxiv.org/abs/2009.03137

code:https://github.com/QingyongHu/SensatUrban

[5] SpinNet: Learning a General Surface Descriptor for 3D Point Cloud Registration

paper:https://t.co/xIAWVGQeB2?amp=1

code:https://github.com/QingyongHu/SpinNet

[4] MultiBodySync: Multi-Body Segmentation and Motion Estimation via 3D Scan Synchronization

paper:https://arxiv.org/pdf/2101.06605.pdf

code:https://github.com/huangjh-pub/multibody-sync

[3] Diffusion Probabilistic Models for 3D Point Cloud Generation

paper:https://arxiv.org/abs/2103.01458

code:https://github.com/luost26/diffusion-point-cloud

[2] Style-based Point Generator with Adversarial Rendering for Point Cloud Completion

paper:https://arxiv.org/abs/2103.02535

[1] PREDATOR: Registration of 3D Point Clouds with Low Overlap

paper:https://arxiv.org/pdf/2011.13005.pdf

code:https://github.com/ShengyuH/OverlapPredator

project:https://overlappredator.github.io/

3D Reconstruction

[1] PCLs: Geometry-aware Neural Reconstruction of 3D Pose with Perspective Crop Layers

paper:https://arxiv.org/abs/2011.13607

Model Compression

[2] Manifold Regularized Dynamic Network Pruning (dynamic pruning that accounts for both sample complexity and network complexity)

[1] Learning Student Networks in the Wild (a model compression and acceleration technique that does not need the original training data)

paper:https://arxiv.org/pdf/1904.01186.pdf

code:https://github.com/huawei-noah/DAFL

Write-up:

Huawei Noah's Ark Lab proposes a data-free network compression technique: https://zhuanlan.zhihu.com/p/81277796

Knowledge Distillation

[3] General Instance Distillation for Object Detection

paper:https://arxiv.org/abs/2103.02340

[2] Multiresolution Knowledge Distillation for Anomaly Detection

paper:https://arxiv.org/abs/2011.11108

[1] Distilling Object Detectors via Decoupled Features (distillation that separates foreground and background)
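Several entries in this section build on knowledge distillation. As background only, the classic soft-target loss of Hinton et al. (2015) is sketched below in plain Python: the student matches the teacher's temperature-softened class distribution via KL divergence, scaled by T^2. This is the generic baseline, not the method of any paper listed here; the function names and the default temperature of 4 are assumptions.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; subtracting the max keeps exp() from overflowing."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=4.0):
    """T^2 * KL(teacher || student) on temperature-softened distributions.

    The T^2 factor keeps gradient magnitudes comparable when T changes.
    """
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(pt * math.log(pt / ps)
             for pt, ps in zip(p_teacher, p_student) if pt > 0)
    return temperature ** 2 * kl


if __name__ == "__main__":
    teacher = [2.0, 1.0, 0.1]
    print(distillation_loss([2.0, 1.0, 0.1], teacher))  # identical logits -> 0.0
    print(distillation_loss([0.1, 1.0, 2.0], teacher))  # mismatched -> positive
```

In practice this term is usually combined with an ordinary cross-entropy loss on the ground-truth labels.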

Neural Network Architecture

[3] Rethinking Channel Dimensions for Efficient Model Design

paper:https://arxiv.org/abs/2007.00992

code:https://github.com/clovaai/rexnet

[2] Inverting the Inherence of Convolution for Visual Recognition

[1] RepVGG: Making VGG-style ConvNets Great Again

paper:https://arxiv.org/abs/2101.03697

code:https://github.com/megvii-model/RepVGG

Write-up:

RepVGG: minimalist architecture, SOTA performance, making VGG-style models great again: https://zhuanlan.zhihu.com/p/344324470
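The RepVGG entry relies on structural re-parameterization: a training-time block with 3x3, 1x1 and identity branches is collapsed into a single 3x3 convolution for inference. The pure-Python sketch below checks that equivalence numerically. Simplifying assumptions: one input and output channel, stride 1, "same" zero padding, and no BatchNorm (RepVGG first folds BN into each branch's weights and bias, which is omitted here). The function names are hypothetical.

```python
# Single-channel sketch of RepVGG-style structural re-parameterization.
# Assumptions: 1 channel, stride 1, zero "same" padding, BatchNorm omitted.

IDENTITY_3X3 = [[0.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 0.0]]


def conv2d_same(image, kernel, bias=0.0):
    """Cross-correlation (the 'convolution' of deep-learning libraries) with
    zero padding so the output matches the input size; kernel size must be odd."""
    h, w, k = len(image), len(image[0]), len(kernel)
    pad = k // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = bias
            for di in range(k):
                for dj in range(k):
                    y, x = i + di - pad, j + dj - pad
                    if 0 <= y < h and 0 <= x < w:
                        acc += kernel[di][dj] * image[y][x]
            out[i][j] = acc
    return out


def multi_branch(image, k3, b3, w1, b1):
    """Training-time block: 3x3 branch + 1x1 branch + identity branch, summed."""
    y3 = conv2d_same(image, k3, b3)
    y1 = conv2d_same(image, [[w1]], b1)
    return [[y3[i][j] + y1[i][j] + image[i][j] for j in range(len(image[0]))]
            for i in range(len(image))]


def fuse_branches(k3, b3, w1, b1):
    """Inference-time kernel: put the 1x1 weight and the identity at the centre
    of the 3x3 kernel and add everything; the biases simply add."""
    fused = [[k3[r][c] + IDENTITY_3X3[r][c] for c in range(3)] for r in range(3)]
    fused[1][1] += w1
    return fused, b3 + b1


if __name__ == "__main__":
    img = [[1.0, 2.0, 0.0], [0.0, 1.0, 3.0], [2.0, 1.0, 1.0]]
    k3 = [[0.5, -1.0, 0.0], [1.0, 2.0, -0.5], [0.0, 0.25, 1.0]]
    kernel, bias = fuse_branches(k3, b3=0.1, w1=0.3, b1=-0.2)
    a = multi_branch(img, k3, b3=0.1, w1=0.3, b1=-0.2)
    b = conv2d_same(img, kernel, bias)
    # Largest difference between the two forms; only float rounding remains.
    print(max(abs(a[i][j] - b[i][j]) for i in range(3) for j in range(3)))
```

The fusion works because the 1x1 and identity branches only ever read the centre pixel, which is always in bounds, so they are exactly a 3x3 kernel that is zero everywhere except the centre.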

Transformer

[3] Transformer Interpretability Beyond Attention Visualization

paper:https://arxiv.org/pdf/2012.09838.pdf

code:https://github.com/hila-chefer/Transformer-Explainability

[2] UP-DETR: Unsupervised Pre-training for Object Detection with Transformers

paper:https://arxiv.org/pdf/2011.09094.pdf

Write-up: Unsupervised pre-training for detectors: https://www.zhihu.com/question/432321109/answer/1606004872

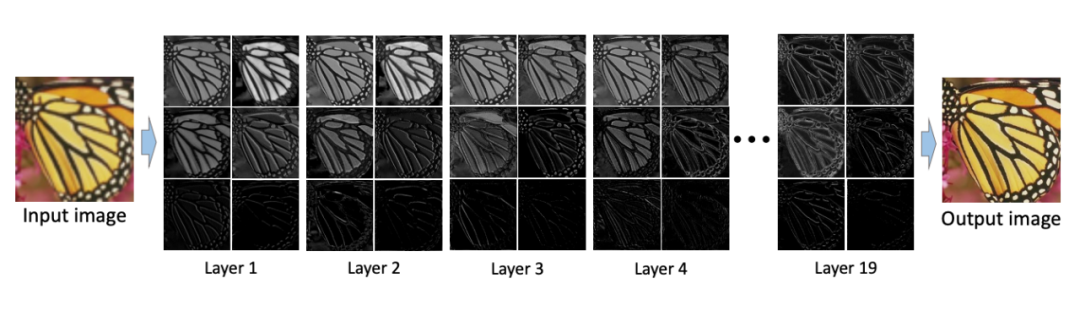

[1] Pre-Trained Image Processing Transformer (a pre-trained model for low-level vision)

paper:https://arxiv.org/pdf/2012.00364.pdf

Graph Neural Networks (GNN)

[2] Quantifying Explainers of Graph Neural Networks in Computational Pathology

paper:https://arxiv.org/pdf/2011.12646.pdf

[1] Sequential Graph Convolutional Network for Active Learning

paper:https://arxiv.org/pdf/2006.10219.pdf

Data Processing

Data Augmentation

[1] KeepAugment: A Simple Information-Preserving Data Augmentation

paper:https://arxiv.org/pdf/2011.11778.pdf

Normalization/Regularization

[3] Adaptive Consistency Regularization for Semi-Supervised Transfer Learning

paper:https://arxiv.org/abs/2103.02193

code:https://github.com/SHI-Labs/Semi-Supervised-Transfer-Learning

[2] Meta Batch-Instance Normalization for Generalizable Person Re-Identification

paper:https://arxiv.org/abs/2011.14670

[1] Representative Batch Normalization with Feature Calibration

Image Clustering

[2] Improving Unsupervised Image Clustering With Robust Learning

paper:https://arxiv.org/abs/2012.11150

code:https://github.com/deu30303/RUC

[1] Reconsidering Representation Alignment for Multi-view Clustering

Model Evaluation

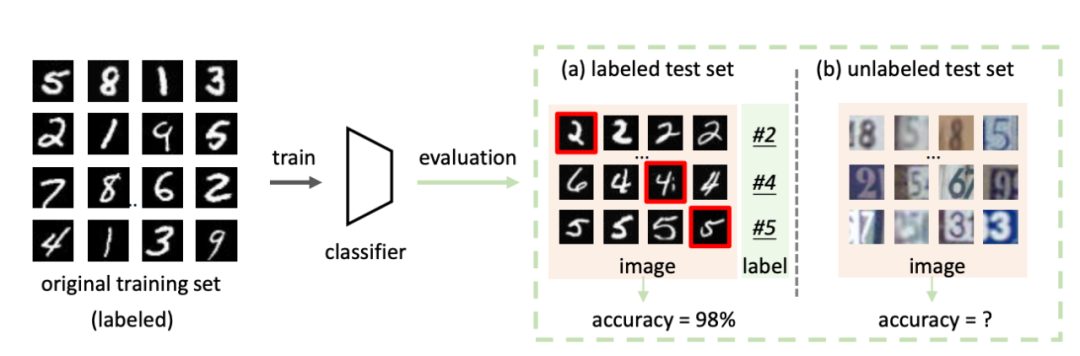

[1] Are Labels Necessary for Classifier Accuracy Evaluation? (Can we evaluate a model on a test set that has no labels?)

paper:https://arxiv.org/abs/2007.02915

Write-up: https://zhuanlan.zhihu.com/p/328686799

Datasets

[2] Towards Semantic Segmentation of Urban-Scale 3D Point Clouds: A Dataset, Benchmarks and Challenges

paper:https://arxiv.org/abs/2009.03137

code:https://github.com/QingyongHu/SensatUrban

[1] Re-labeling ImageNet: from Single to Multi-Labels, from Global to Localized Labels

paper:https://arxiv.org/abs/2101.05022

code:https://github.com/naver-ai/relabel_imagenet

Active Learning

[3] Vab-AL: Incorporating Class Imbalance and Difficulty with Variational Bayes for Active Learning

paper:https://github.com/yuantn/MIAL/raw/master/paper.pdf

code:https://github.com/yuantn/MIAL

[2] Multiple Instance Active Learning for Object Detection

paper:https://github.com/yuantn/MIAL/raw/master/paper.pdf

code:https://github.com/yuantn/MIAL

[1] Sequential Graph Convolutional Network for Active Learning

paper:https://arxiv.org/pdf/2006.10219.pdf

Few-shot Learning/Zero-shot Learning

[5] Few-Shot Segmentation Without Meta-Learning: A Good Transductive Inference Is All You Need?

paper:https://arxiv.org/abs/2012.06166

code:https://github.com/mboudiaf/RePRI-for-Few-Shot-Segmentation

[4] Counterfactual Zero-Shot and Open-Set Visual Recognition

paper:https://arxiv.org/abs/2103.00887

code:https://github.com/yue-zhongqi/gcm-cf

[3] Semantic Relation Reasoning for Shot-Stable Few-Shot Object Detection

paper:https://arxiv.org/abs/2103.01903

[2] Few-shot Open-set Recognition by Transformation Consistency

[1] Exploring Complementary Strengths of Invariant and Equivariant Representations for Few-Shot Learning

paper:https://arxiv.org/abs/2103.01315

Continual Learning/Life-long Learning

[2] Rainbow Memory: Continual Learning with a Memory of Diverse Samples

[1] Learning the Superpixel in a Non-iterative and Lifelong Manner

Visual Reasoning

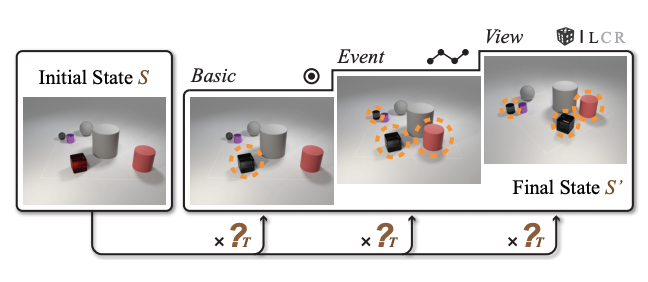

[1] Transformation Driven Visual Reasoning

paper:https://arxiv.org/pdf/2011.13160.pdf

code:https://github.com/hughplay/TVR

project:https://hongxin2019.github.io/TVR/

Transfer Learning/Domain Adaptation

[4] Continual Adaptation of Visual Representations via Domain Randomization and Meta-learning

paper:https://arxiv.org/abs/2012.04324

[3] Domain Generalization via Inference-time Label-Preserving Target Projections

paper:https://arxiv.org/abs/2103.01134

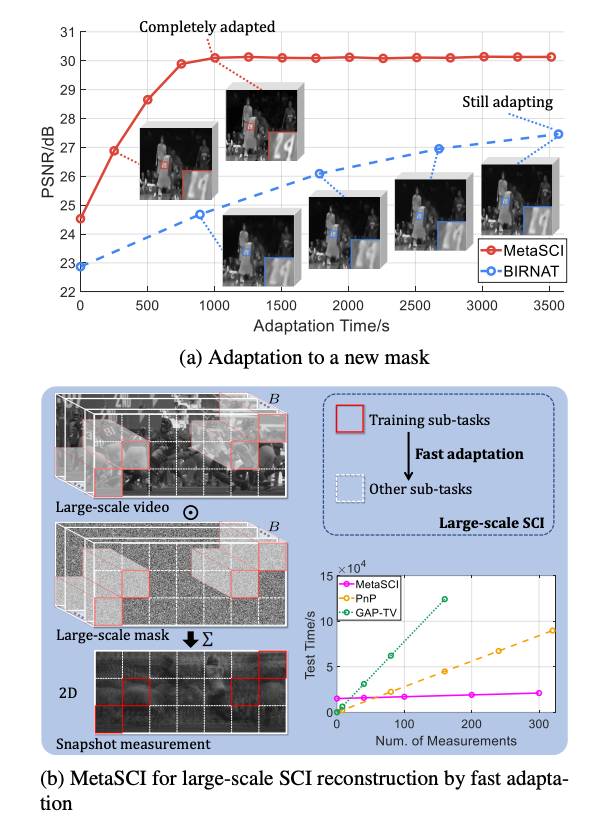

[2] MetaSCI: Scalable and Adaptive Reconstruction for Video Compressive Sensing

paper:https://arxiv.org/abs/2103.01786

code:https://github.com/xyvirtualgroup/MetaSCI-CVPR2021

[1] FSDR: Frequency Space Domain Randomization for Domain Generalization

paper:https://arxiv.org/abs/2103.02370

Contrastive Learning

[1] Fine-grained Angular Contrastive Learning with Coarse Labels

paper:https://arxiv.org/abs/2012.03515

Uncategorized

Quantifying Explainers of Graph Neural Networks in Computational Pathology

paper:https://arxiv.org/pdf/2011.12646.pdf

Exploring Data-Efficient 3D Scene Understanding with Contrastive Scene Contexts

paper:http://arxiv.org/abs/2012.09165

project:http://sekunde.github.io/project_efficient

video:http://youtu.be/E70xToZLgs4

Data-Free Model Extraction

paper:https://arxiv.org/abs/2011.14779

Patch-NetVLAD: Multi-Scale Fusion of Locally-Global Descriptors for Place Recognition ([Place Recognition] [Multi-Scale Fusion])

paper:https://arxiv.org/pdf/2103.01486.pdf

code:https://github.com/QVPR/Patch-NetVLAD

Right for the Right Concept: Revising Neuro-Symbolic Concepts by Interacting with their Explanations

paper:https://arxiv.org/abs/2011.12854

Multi-Objective Interpolation Training for Robustness to Label Noise

paper:https://arxiv.org/abs/2012.04462

code:https://git.io/JI40X

VX2TEXT: End-to-End Learning of Video-Based Text Generation From Multimodal Inputs ([Text Generation])

paper:https://arxiv.org/pdf/2101.12059.pdf

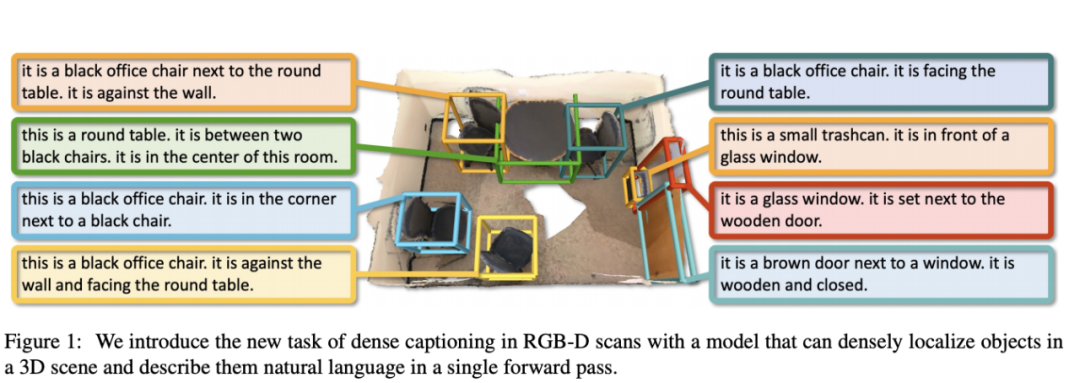

Scan2Cap: Context-aware Dense Captioning in RGB-D Scans ([Image Captioning])

paper:https://arxiv.org/abs/2012.02206

code:https://github.com/daveredrum/Scan2Cap

project:https://daveredrum.github.io/Scan2Cap/

video:https://youtu.be/AgmIpDbwTCY

Hierarchical and Partially Observable Goal-driven Policy Learning with Goals Relational Graph

paper:https://arxiv.org/abs/2103.01350

ID-Unet: Iterative Soft and Hard Deformation for View Synthesis

paper:https://arxiv.org/abs/2103.02264

PML: Progressive Margin Loss for Long-tailed Age Classification ([Long-Tailed Distribution] [Image Classification])

paper:https://arxiv.org/abs/2103.02140

Diversifying Sample Generation for Data-Free Quantization ([Image Generation])

paper:https://arxiv.org/abs/2103.01049

Domain Generalization via Inference-time Label-Preserving Target Projections

paper:https://arxiv.org/pdf/2103.01134.pdf

DeRF: Decomposed Radiance Fields

project:https://ubc-vision.github.io/derf/

Densely connected multidilated convolutional networks for dense prediction tasks ([Dense Prediction])

paper:https://arxiv.org/abs/2011.11844

VirTex: Learning Visual Representations from Textual Annotations ([Representation Learning])

paper:https://arxiv.org/abs/2006.06666

code:https://github.com/kdexd/virtex

Weakly-supervised Grounded Visual Question Answering using Capsules

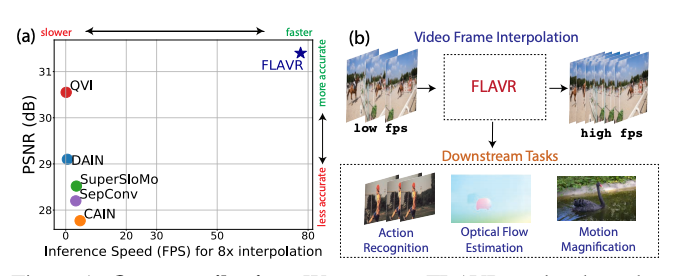

FLAVR: Flow-Agnostic Video Representations for Fast Frame Interpolation ([Video Frame Interpolation])

paper:https://arxiv.org/pdf/2012.08512.pdf

code:https://tarun005.github.io/FLAVR/Code

project:https://tarun005.github.io/FLAVR/

Probabilistic Embeddings for Cross-Modal Retrieval

paper:https://arxiv.org/abs/2101.05068

Self-supervised Simultaneous Multi-Step Prediction of Road Dynamics and Cost Map

IIRC: Incremental Implicitly-Refined Classification

paper:https://arxiv.org/abs/2012.12477

project:https://chandar-lab.github.io/IIRC/

Fair Attribute Classification through Latent Space De-biasing

paper:https://arxiv.org/abs/2012.01469

code:https://github.com/princetonvisualai/gan-debiasing

project:https://princetonvisualai.github.io/gan-debiasing/

Information-Theoretic Segmentation by Inpainting Error Maximization

paper:https://arxiv.org/abs/2012.07287

UC2: Universal Cross-lingual Cross-modal Vision-and-Language Pretraining ([Video-Language Learning])

Less is More: CLIPBERT for Video-and-Language Learning via Sparse Sampling

paper:https://arxiv.org/pdf/2102.06183.pdf

code:https://github.com/jayleicn/ClipBERT

D-NeRF: Neural Radiance Fields for Dynamic Scenes

paper:https://arxiv.org/abs/2011.13961

project:https://www.albertpumarola.com/research/D-NeRF/index.html

Weakly Supervised Learning of Rigid 3D Scene Flow

paper:https://arxiv.org/pdf/2102.08945.pdf

code:https://arxiv.org/pdf/2102.08945.pdf

project:https://3dsceneflow.github.io/
