In Depth | Dissecting PyTorch's Dynamic Graph


Reposted from: 深度學習這件小事

void THPAutograd_initFunctions() {
  THPObjectPtr module(PyModule_New("torch._C._functions"));
  ......
  generated::initialize_autogenerated_functions();
  auto c_module = THPObjectPtr(PyImport_ImportModule("torch._C"));
}

static std::unordered_map<std::type_index, THPObjectPtr> cpp_function_types;

>>> gemfield = torch.empty([2, 2], requires_grad=True)
>>> syszux = gemfield * gemfield
>>> syszux.grad_fn
<ThMulBackward object at 0x7f111621c350>

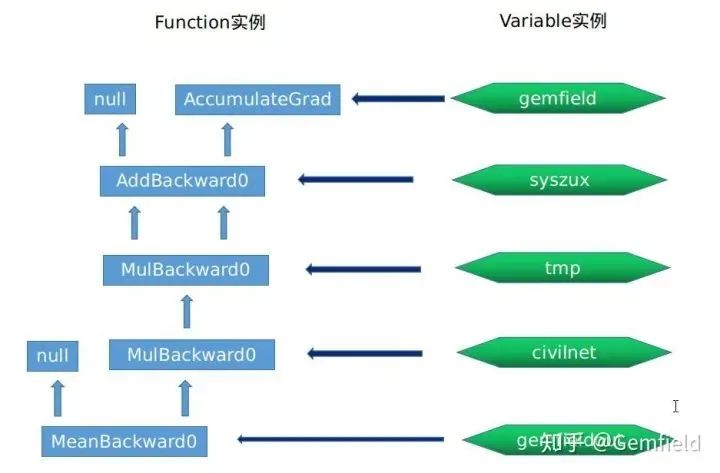

import torch

gemfield = torch.ones(2, 2, requires_grad=True)
syszux = gemfield + 2
civilnet = syszux * syszux * 3
gemfieldout = civilnet.mean()
gemfieldout.backward()

#Variable instance
gemfield --> grad_fn_ (Function instance) = None
         --> grad_accumulator_ (Function instance) = AccumulateGrad instance 0x55ca7f304500
         --> output_nr_ = 0

#Function instance, 0x55ca7f872e90
AddBackward0 --> sequence_nr_ (uint64_t) = 0
             --> next_edges_ (edge_list) --> std::vector<Edge> = [(AccumulateGrad instance, 0), (0, 0)]
             --> input_metadata_ --> [(type, shape, device)...] = [(CPUFloatType, [2, 2], cpu)]
             --> alpha (Scalar) = 1
             --> apply() --> uses AddBackward0's apply

#Variable instance
syszux --> grad_fn_ (Function instance) = AddBackward0 instance 0x55ca7f872e90
       --> output_nr_ = 0

#Function instance, 0x55ca7ebba2a0
MulBackward0 --> sequence_nr_ (uint64_t) = 1
             --> next_edges_ (edge_list) = [(AddBackward0 instance 0x55ca7f872e90, 0), (AddBackward0 instance 0x55ca7f872e90, 0)]
             --> input_metadata_ --> [(type, shape, device)...] = [(CPUFloatType, [2, 2], cpu)]
             --> self_ (SavedVariable) = shallow copy of syszux
             --> other_ (SavedVariable) = shallow copy of syszux
             --> apply() --> uses MulBackward0's apply

#Variable instance, the tmp produced by syszux * syszux
tmp --> grad_fn_ (Function instance) = MulBackward0 instance 0x55ca7ebba2a0
    --> output_nr_ = 0

#Function instance, 0x55ca7fada2f0
MulBackward0 --> sequence_nr_ (uint64_t) = 2 (incremented per thread)
             --> next_edges_ (edge_list) = [(MulBackward0 instance 0x55ca7ebba2a0, 0), (0, 0)]
             --> input_metadata_ --> [(type, shape, device)...] = [(CPUFloatType, [2, 2], cpu)]
             --> self_ (SavedVariable) = shallow copy of tmp
             --> other_ (SavedVariable) = shallow copy of 3
             --> apply() --> uses MulBackward0's apply

#Variable instance
civilnet --> grad_fn_ (Function instance) = MulBackward0 instance 0x55ca7fada2f0
         --> output_nr_ = 0

#Function instance, 0x55ca7eb358b0
MeanBackward0 --> sequence_nr_ (uint64_t) = 3 (incremented per thread)
              --> next_edges_ (edge_list) = [(MulBackward0 instance 0x55ca7fada2f0, 0)]
              --> input_metadata_ --> [(type, shape, device)...] = [(CPUFloatType, [], cpu)]
              --> self_sizes (std::vector<int64_t>) = (2, 2)
              --> self_numel = 4
              --> apply() --> uses MeanBackward0's apply

#Variable instance
gemfieldout --> grad_fn_ (Function instance) = MeanBackward0 instance 0x55ca7eb358b0
            --> output_nr_ = 0
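To make the dump easier to follow, here is a minimal pure-Python toy model of the same graph. The `Node` class, the `describe` helper, and the use of `None` for the `(0, 0)` entries are assumptions made for illustration; none of this is PyTorch's API. AccumulateGrad is given the largest `uint64_t` value as its sequence number on the assumption that it sits outside the per-thread counter; the dump itself does not show that value.

```python
import itertools
from dataclasses import dataclass, field

# Stands in for the per-thread sequence_nr_ counter.
_seq = itertools.count()

@dataclass(eq=False)
class Node:
    name: str
    next_edges: list = field(default_factory=list)  # (Node or None, input_nr) pairs
    sequence_nr: int = field(default_factory=lambda: next(_seq))

# Built in forward order, so the sequence numbers come out as 0, 1, 2, 3 as in the dump.
accumulate_grad = Node("AccumulateGrad", sequence_nr=2**64 - 1)  # assumed: outside the counter
add_backward = Node("AddBackward0", [(accumulate_grad, 0), (None, 0)])         # gemfield + 2
mul_backward_a = Node("MulBackward0", [(add_backward, 0), (add_backward, 0)])  # syszux * syszux
mul_backward_b = Node("MulBackward0", [(mul_backward_a, 0), (None, 0)])        # tmp * 3
mean_backward = Node("MeanBackward0", [(mul_backward_b, 0)])                   # civilnet.mean()

def describe(node, depth=0):
    """Depth-first walk over next_edges, one line per node reached."""
    lines = [f"{'  ' * depth}{node.name} (seq={node.sequence_nr})"]
    for fn, _input_nr in node.next_edges:
        if fn is not None:  # skip edges that lead nowhere
            lines.extend(describe(fn, depth + 1))
    return lines

print("\n".join(describe(mean_backward)))
```

Walking the edges from MeanBackward0 reaches AddBackward0 twice, once per operand of `syszux * syszux`. This is why a backward engine has to count how many edges point at a function before running it, rather than running it on first arrival.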

using edge_list = std::vector<Edge>;
using variable_list = std::vector<Variable>;

struct TORCH_API Function {
  ...
  virtual variable_list apply(variable_list&& inputs) = 0;
  ...
  const uint64_t sequence_nr_;
  edge_list next_edges_;
  PyObject* pyobj_ = nullptr; // weak reference
  std::unique_ptr<AnomalyMetadata> anomaly_metadata_ = nullptr;
  std::vector<std::unique_ptr<FunctionPreHook>> pre_hooks_;
  std::vector<std::unique_ptr<FunctionPostHook>> post_hooks_;
  at::SmallVector<InputMetadata, 2> input_metadata_;
};

variable_list operator()(variable_list&& inputs) {
  return apply(std::move(inputs));
}
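The split between the public `operator()` and the pure virtual `apply()` can be mimicked in Python with an abstract base class whose `__call__` forwards to `apply`. In this sketch a thread-local counter models the per-thread `sequence_nr_` noted in the dump; `Identity` is a hypothetical subclass used only to exercise the base class, and hooks, metadata, and anomaly tracking are left out.

```python
import threading
from abc import ABC, abstractmethod

_tls = threading.local()

def next_sequence_nr():
    """Per-thread counter: every thread starts counting from 0."""
    nr = getattr(_tls, "next_nr", 0)
    _tls.next_nr = nr + 1
    return nr

class Function(ABC):
    def __init__(self, next_edges=None):
        self.sequence_nr = next_sequence_nr()
        self.next_edges = list(next_edges or [])

    @abstractmethod
    def apply(self, inputs):
        """Turn gradients w.r.t. this node's outputs into gradients w.r.t. its inputs."""

    def __call__(self, inputs):
        # Like operator(): a thin wrapper that forwards to apply().
        return self.apply(inputs)

class Identity(Function):
    """Hypothetical subclass used only to exercise the base class."""
    def apply(self, inputs):
        return list(inputs)

f = Identity()
print(f([1.0, 2.0]), f.sequence_nr)
```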

struct InputMetadata {
  ...
  const at::Type* type_ = nullptr;
  at::DimVector shape_;
  at::Device device_ = at::kCPU;
};
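`input_metadata_` records, for each input of a backward function, the type, shape, and device that the incoming gradient is expected to have. A minimal sketch of how such metadata could be used to reject a mismatched gradient; `validate_grad` is a hypothetical helper, and the real checks in PyTorch's engine are more involved than this plain equality test.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class InputMetadata:
    type_name: str        # stands in for the at::Type* pointer, e.g. "CPUFloatType"
    shape: tuple          # stands in for at::DimVector
    device: str = "cpu"   # defaults to CPU, like at::kCPU

def validate_grad(meta, grad_shape, grad_device="cpu"):
    """Hypothetical check: raise if a gradient does not match the recorded metadata."""
    if tuple(grad_shape) != meta.shape:
        raise ValueError(f"expected gradient of shape {meta.shape}, got {tuple(grad_shape)}")
    if grad_device != meta.device:
        raise ValueError(f"expected gradient on {meta.device}, got {grad_device}")

# Metadata of the MulBackward0 nodes in the dump: (CPUFloatType, [2, 2], cpu).
meta = InputMetadata("CPUFloatType", (2, 2))
validate_grad(meta, [2, 2])  # passes silently
```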

struct Edge {
  ...
  std::shared_ptr<Function> function;
  uint32_t input_nr;
};
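An `Edge` is just a `(function, input_nr)` pair. The `(0, 0)` entries in the dump are edges whose `function` is null, i.e. inputs such as the constant `2` that need no gradient. The sketch models this with a `NamedTuple`: `is_valid` mirrors the null check, and `route` is a hypothetical helper showing how gradients travelling along invalid edges would simply be dropped.

```python
from typing import Any, NamedTuple, Optional

class Edge(NamedTuple):
    function: Optional[Any]  # next backward function; None plays the role of a null pointer
    input_nr: int            # which input of `function` the gradient is delivered to

    def is_valid(self) -> bool:
        return self.function is not None

def route(grads, next_edges):
    """Hypothetical helper: pair each gradient with its edge, dropping invalid edges."""
    return [(e.function, e.input_nr, g) for g, e in zip(grads, next_edges) if e.is_valid()]

# AddBackward0's next_edges_ from the dump: [(AccumulateGrad, 0), (0, 0)].
add_edges = [Edge("AccumulateGrad", 0), Edge(None, 0)]
print(route([1.0, 1.0], add_edges))  # only the gradient for gemfield is kept
```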

CopySlices : public Function
DelayedError : public Function
Error : public Function
Gather : public Function
GraphRoot : public Function
Scatter : public Function
AccumulateGrad : public Function
AliasBackward : public Function
AsStridedBackward : public Function
CopyBackwards : public Function
DiagonalBackward : public Function
ExpandBackward : public Function
IndicesBackward0 : public Function
IndicesBackward1 : public Function
PermuteBackward : public Function
SelectBackward : public Function
SliceBackward : public Function
SqueezeBackward0 : public Function
SqueezeBackward1 : public Function
TBackward : public Function
TransposeBackward0 : public Function
UnbindBackward : public Function
UnfoldBackward : public Function
UnsqueezeBackward0 : public Function
ValuesBackward0 : public Function
ValuesBackward1 : public Function
ViewBackward : public Function
PyFunction : public Function

struct AccumulateGrad : public Function {
  explicit AccumulateGrad(Variable variable_);
  variable_list apply(variable_list&& grads) override;
  Variable variable;
};
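AccumulateGrad is the sink of the graph: it has no next edges and, instead of passing gradients on, adds them into the leaf variable's `.grad`. A toy version over plain Python lists, assuming the simple rule "store on first arrival, add element-wise afterwards"; `Variable` here is a hypothetical stand-in, not a tensor.

```python
class Variable:
    """Minimal stand-in for a leaf tensor: a flat list of values plus .grad."""
    def __init__(self, data):
        self.data = list(data)
        self.grad = None

class AccumulateGrad:
    def __init__(self, variable):
        self.variable = variable
        self.next_edges = []  # sink node: gradients stop here

    def apply(self, grads):
        (new_grad,) = grads
        if self.variable.grad is None:
            self.variable.grad = list(new_grad)  # first gradient: store a copy
        else:                                    # later ones: add element-wise
            self.variable.grad = [a + b for a, b in zip(self.variable.grad, new_grad)]
        return []  # nothing to propagate further

gemfield = Variable([1.0, 1.0, 1.0, 1.0])
acc = AccumulateGrad(gemfield)
acc.apply([[4.5, 4.5, 4.5, 4.5]])
acc.apply([[4.5, 4.5, 4.5, 4.5]])  # as if backward() ran twice
print(gemfield.grad)  # [9.0, 9.0, 9.0, 9.0]
```

Because gradients are added rather than overwritten, running backward twice without clearing `.grad` sums both results, which is why training loops typically call `optimizer.zero_grad()`.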

struct GraphRoot : public Function {
  GraphRoot(edge_list functions, variable_list inputs)
      : Function(std::move(functions)),
        outputs(std::move(inputs)) {}
  variable_list apply(variable_list&& inputs) override {
    return outputs;
  }
  variable_list outputs;
};
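GraphRoot ignores whatever it is called with and emits the gradients it was constructed with, along edges that point at the `grad_fn` of each tensor passed to `backward()`. For `gemfieldout.backward()` the seed is `tensor(1.)`. A toy version, with plain floats standing in for tensors and a string standing in for the MeanBackward0 node:

```python
class GraphRoot:
    def __init__(self, roots, inputs):
        self.next_edges = list(roots)  # edges to the grad_fn of each tensor passed to backward()
        self.outputs = list(inputs)    # the seed gradients

    def apply(self, inputs):
        # The incoming list is ignored; the root just emits its seeds.
        return self.outputs

# For gemfieldout.backward(): one root edge to MeanBackward0, seeded with 1.0.
root = GraphRoot([("MeanBackward0", 0)], [1.0])
print(root.apply([]))  # [1.0]
```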

struct TraceableFunction : public Function {
  using Function::Function;
  bool is_traceable() final {
    return true;
  }
};

AbsBackward : public TraceableFunction
AcosBackward : public TraceableFunction
AdaptiveAvgPool2DBackwardBackward : public TraceableFunction
AdaptiveAvgPool2DBackward : public TraceableFunction
AdaptiveAvgPool3DBackwardBackward : public TraceableFunction
AdaptiveAvgPool3DBackward : public TraceableFunction
AdaptiveMaxPool2DBackwardBackward : public TraceableFunction
AdaptiveMaxPool2DBackward : public TraceableFunction
AdaptiveMaxPool3DBackwardBackward : public TraceableFunction
AdaptiveMaxPool3DBackward : public TraceableFunction
AddBackward0 : public TraceableFunction
AddBackward1 : public TraceableFunction
AddbmmBackward : public TraceableFunction
AddcdivBackward : public TraceableFunction
AddcmulBackward : public TraceableFunction
AddmmBackward : public TraceableFunction
AddmvBackward : public TraceableFunction
AddrBackward : public TraceableFunction
......
SoftmaxBackwardDataBackward : public TraceableFunction
SoftmaxBackward : public TraceableFunction
......
UpsampleBicubic2DBackwardBackward : public TraceableFunction
UpsampleBicubic2DBackward : public TraceableFunction
UpsampleBilinear2DBackwardBackward : public TraceableFunction
UpsampleBilinear2DBackward : public TraceableFunction
UpsampleLinear1DBackwardBackward : public TraceableFunction
UpsampleLinear1DBackward : public TraceableFunction
UpsampleNearest1DBackwardBackward : public TraceableFunction
UpsampleNearest1DBackward : public TraceableFunction
UpsampleNearest2DBackwardBackward : public TraceableFunction
UpsampleNearest2DBackward : public TraceableFunction
UpsampleNearest3DBackwardBackward : public TraceableFunction
UpsampleNearest3DBackward : public TraceableFunction
UpsampleTrilinear3DBackwardBackward : public TraceableFunction
UpsampleTrilinear3DBackward : public TraceableFunction
......

struct AddBackward0 : public TraceableFunction {
  using TraceableFunction::TraceableFunction;
  variable_list apply(variable_list&& grads) override;
  Scalar alpha;
};
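AddBackward0 stores only `alpha` because the gradient of `self + alpha * other` does not depend on the operand values, while MulBackward0 has to save both operands (the `self_` and `other_` SavedVariables in the dump). A sketch of both `apply` methods over flat lists, following those derivative formulas; these classes are simplified stand-ins, not the generated C++ code.

```python
class AddBackward0:
    """out = self + alpha * other  =>  grad_self = g, grad_other = alpha * g"""
    def __init__(self, alpha=1.0):
        self.alpha = alpha

    def apply(self, grads):
        (g,) = grads
        return [list(g), [self.alpha * x for x in g]]

class MulBackward0:
    """out = self * other  =>  grad_self = g * other, grad_other = g * self"""
    def __init__(self, self_, other):
        self.self_ = list(self_)  # saved operands, like the SavedVariable fields
        self.other = list(other)

    def apply(self, grads):
        (g,) = grads
        return [[gi * o for gi, o in zip(g, self.other)],
                [gi * s for gi, s in zip(g, self.self_)]]

# syszux * syszux with syszux = 3 everywhere: both operands receive g * 3.
print(MulBackward0([3.0] * 4, [3.0] * 4).apply([[1.0] * 4]))
```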

gemfield = torch.ones(2, 2, requires_grad=True)
syszux = gemfield + 2
civilnet = syszux * syszux * 3
gemfieldout = civilnet.mean()
gemfieldout.backward()

struct Engine {
  using ready_queue_type = std::deque<std::pair<std::shared_ptr<Function>, InputBuffer>>;
  using dependencies_type = std::unordered_map<Function*, int>;
  virtual variable_list execute(const edge_list& roots,
                                const variable_list& inputs,
                                ...
                                const edge_list& outputs = {});
  void queue_callback(std::function<void()> callback);
protected:
  void compute_dependencies(Function* root, GraphTask& task);
  void evaluate_function(FunctionTask& task);
  void start_threads();
  virtual void thread_init(int device);
  virtual void thread_main(GraphTask *graph_task);
  std::vector<std::shared_ptr<ReadyQueue>> ready_queues;
};
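The core of `execute` can be sketched as: count how many edges point at each function (`compute_dependencies`), then pop functions from a ready queue, accumulate their outputs into per-function input buffers, and enqueue a function once every edge pointing at it has delivered its gradient. The pure-Python sketch below runs this on the example graph, with one float standing in for each (identical) element of the 2×2 tensors. It is single-threaded and uses a plain FIFO queue, which is a simplification; `Node`, `Leaf`, and the lambda-based backward formulas are hypothetical.

```python
from collections import defaultdict, deque

class Node:
    """Hypothetical backward node: backward_fn maps incoming grads to outgoing grads."""
    def __init__(self, name, backward_fn, next_edges):
        self.name = name
        self.backward_fn = backward_fn
        self.next_edges = next_edges  # list of (Node or None, input_nr)

    def apply(self, grads):
        return self.backward_fn(grads)

class Leaf:
    """Stands in for AccumulateGrad: sums whatever reaches it into .grad."""
    def __init__(self, name):
        self.name = name
        self.next_edges = []
        self.grad = 0.0

    def apply(self, grads):
        self.grad += sum(grads)
        return []

def compute_dependencies(root):
    """Count, for every reachable function, how many edges point at it."""
    deps, seen, stack = defaultdict(int), {root}, [root]
    while stack:
        fn = stack.pop()
        for nxt, _ in fn.next_edges:
            if nxt is None:
                continue
            deps[nxt] += 1
            if nxt not in seen:
                seen.add(nxt)
                stack.append(nxt)
    return deps

def execute(root_edges, seed_grads):
    root = Node("GraphRoot", lambda _: list(seed_grads), list(root_edges))
    deps = compute_dependencies(root)
    buffers = defaultdict(lambda: defaultdict(float))  # per-function input buffer
    ready = deque([(root, [])])
    while ready:
        fn, inputs = ready.popleft()
        for grad, (nxt, input_nr) in zip(fn.apply(inputs), fn.next_edges):
            if nxt is None:
                continue
            buffers[nxt][input_nr] += grad  # accumulate, like InputBuffer
            deps[nxt] -= 1
            if deps[nxt] == 0:              # all producers have reported
                buf = buffers.pop(nxt)
                ready.append((nxt, [buf[i] for i in sorted(buf)]))

# The example graph, one float per element (all elements are identical).
gemfield = Leaf("gemfield")
syszux = 3.0  # gemfield + 2
add0 = Node("AddBackward0", lambda g: [g[0], g[0]], [(gemfield, 0), (None, 0)])
mul_a = Node("MulBackward0", lambda g: [g[0] * syszux, g[0] * syszux], [(add0, 0), (add0, 0)])
mul_b = Node("MulBackward0", lambda g: [g[0] * 3.0, g[0] * syszux * syszux], [(mul_a, 0), (None, 0)])
mean0 = Node("MeanBackward0", lambda g: [g[0] / 4], [(mul_b, 0)])  # self_numel = 4

execute([(mean0, 0)], [1.0])
print(gemfield.grad)  # 4.5
```

The result is d(gemfieldout)/d(gemfield) for each element: (1/4) × 3 × 2 × syszux = (1/4) × 3 × 2 × 3 = 4.5. AddBackward0 only runs after both gradients from `syszux * syszux` have been summed in its buffer.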

struct PythonEngine : public Engine

#torch/tensor.py, self is gemfieldout
def backward(self, gradient=None, retain_graph=None, create_graph=False)
|
V
#torch.autograd.backward(self, gradient, retain_graph, create_graph)
#torch/autograd/__init__.py
def backward(tensors, grad_tensors=None, retain_graph=None, create_graph=False, grad_variables=None)
|
V
Variable._execution_engine.run_backward(tensors, grad_tensors, retain_graph, create_graph, allow_unreachable=True)
#which, for this example, becomes
Variable._execution_engine.run_backward((gemfieldout,), (tensor(1.),), False, False, True)
|
V
#torch/csrc/autograd/python_engine.cpp
PyObject *THPEngine_run_backward(THPEngine *self, PyObject *args, PyObject *kwargs)
|
V
#torch/csrc/autograd/python_engine.cpp
variable_list PythonEngine::execute(const edge_list& roots, const variable_list& inputs, bool keep_graph, bool create_graph, const edge_list& outputs)
|
V
#torch/csrc/autograd/engine.cpp
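The Python side of this chain mainly normalizes arguments before handing off to C++: when no gradient is given for a scalar output it creates `tensor(1.)`, and `retain_graph` falls back to `create_graph`, which is how the call ends up as `run_backward((gemfieldout,), (tensor(1.),), False, False, True)`. A rough sketch of that normalization; `normalize_backward_args` is a hypothetical name, and tensors are modelled as `(shape, value)` tuples.

```python
def normalize_backward_args(outputs, grad_tensors=None, retain_graph=None, create_graph=False):
    """Hypothetical sketch; each tensor is modelled as a (shape, value) tuple."""
    if grad_tensors is None:
        grad_tensors = [None] * len(outputs)
    grads = []
    for (shape, _value), grad in zip(outputs, grad_tensors):
        if grad is None:
            numel = 1
            for dim in shape:
                numel *= dim
            if numel != 1:
                raise RuntimeError("grad can be implicitly created only for scalar outputs")
            grad = 1.0  # plays the role of tensor(1.)
        grads.append(grad)
    if retain_graph is None:
        retain_graph = create_graph  # default: retain the graph only when create_graph is set
    return tuple(outputs), tuple(grads), retain_graph, create_graph

gemfieldout = ((), 27.0)  # 0-dim result of civilnet.mean()
print(normalize_backward_args([gemfieldout]))
```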

Summary
