A Step-by-Step Guide to Scraping Tmall for Durex Review Data

I've heard a lot of you are interested in web scraping lately, so today I'll walk you through scraping Tmall step by step.

So what should we scrape?

Durex has become a household name thanks to its viral ad posters, so let's go with that.

1. Log in to Tmall

Some websites only serve their data after you log in, and Tmall is one of them.

2. Search for the product page

I want to scrape Durex, so we simply search for "杜蕾斯" (Durex). Since many sellers carry Durex, we only take the first item on the results page and scrape its review data.


Click the first image to open the page we ultimately want to scrape. It holds plenty of reviews, and those reviews are exactly what we want to collect.

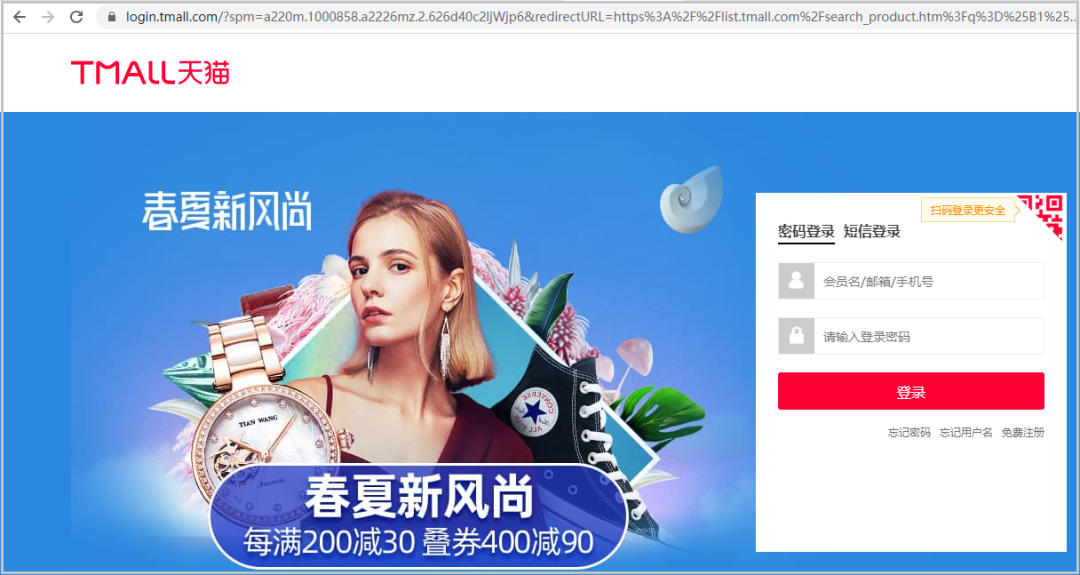

3. First request test

```python
import pandas as pd
import requests
import re
import time

# Product page URL, copied from the browser's address bar
url = "https://detail.tmall.com/item.htm?spm=a220m.1000858.1000725.1.626d40c2tp5mYQ&id=43751299764&skuId=4493124079453&areaId=421300&user_id=2380958892&cat_id=2&is_b=1&rn=cc519a17bf9cefb59ac94f0351791648"

headers = {
    # Present the request as coming from a desktop Chrome browser
    'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.149 Safari/537.36'
}

data = requests.get(url, headers=headers).text
data  # in Jupyter, the last expression of a cell is displayed
```

The result:

Analysis: the reviews are clearly shown on this page, so why doesn't this request return them? Could it be because we didn't send any cookies? Next, let's try sending the request with cookies.
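Browsers show cookies as a single `name=value; name2=value2` string. requests accepts that string verbatim in a `cookie` header, or as a dict through the `cookies=` argument of `requests.get`. Below is a minimal sketch of the conversion; `cookie_string_to_dict` is a hypothetical helper and the sample cookie values are made up.

```python
def cookie_string_to_dict(raw: str) -> dict:
    """Turn a 'k1=v1; k2=v2' cookie header into a dict (hypothetical helper)."""
    cookies = {}
    for part in raw.split(";"):
        part = part.strip()
        if not part or "=" not in part:
            continue  # skip empty fragments and entries without a value
        # Split on the first '=' only: values may themselves contain '='
        name, value = part.split("=", 1)
        cookies[name] = value
    return cookies


raw = "login=true; uc1=cookie16=abc&pas=0; tk_trace=1"
print(cookie_string_to_dict(raw))
# {'login': 'true', 'uc1': 'cookie16=abc&pas=0', 'tk_trace': '1'}
```

The dict form is handy with `requests.get(url, headers=headers, cookies=...)`; pasting the raw string into a `cookie` header, as this tutorial does, is equivalent for a single request.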

4. Second request test

```python
import pandas as pd
import requests
import re
import time

url = "https://detail.tmall.com/item.htm?spm=a220m.1000858.1000725.1.626d40c2tp5mYQ&id=43751299764&skuId=4493124079453&areaId=421300&user_id=2380958892&cat_id=2&is_b=1&rn=cc519a17bf9cefb59ac94f0351791648"

headers = {
    'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.149 Safari/537.36',
    # Cookie copied from the Request Headers of a logged-in browser session
    # (placeholder: paste your own cookie string here)
    'cookie': '<your cookie string>'
}

data = requests.get(url, headers=headers).text
data
```

The result:

Analysis: that makes no sense! This time the request did carry cookies, so why are we still not getting the data we want? Could it be that the review data isn't in this URL at all? Then where is the real URL, true_url? Let's work it out step by step.

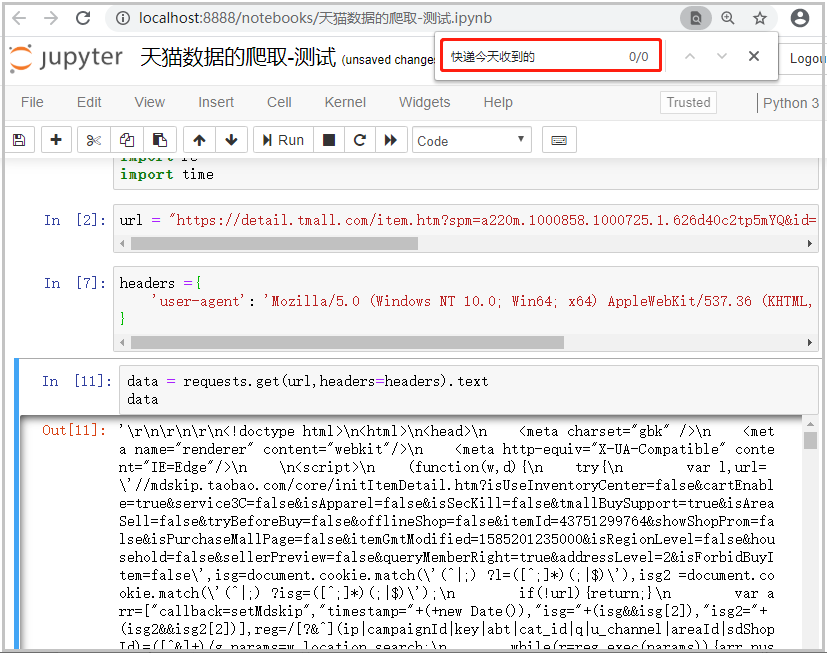

5. How do we find the real true_url?

1) Right-click on the page → choose [Inspect]

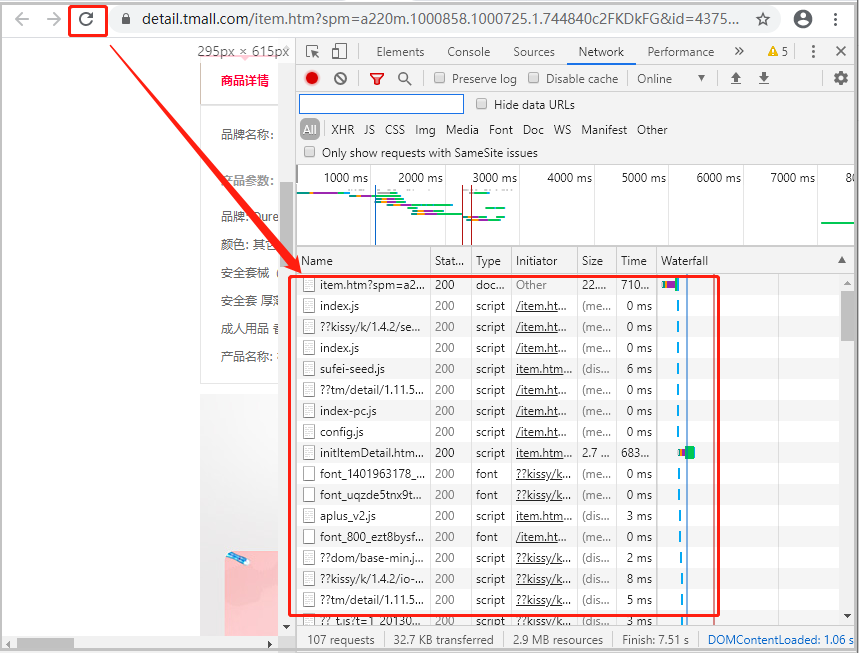

2) Click [Network]

3) Refresh the page

After the refresh, many request URLs appear in the area marked by the red box.

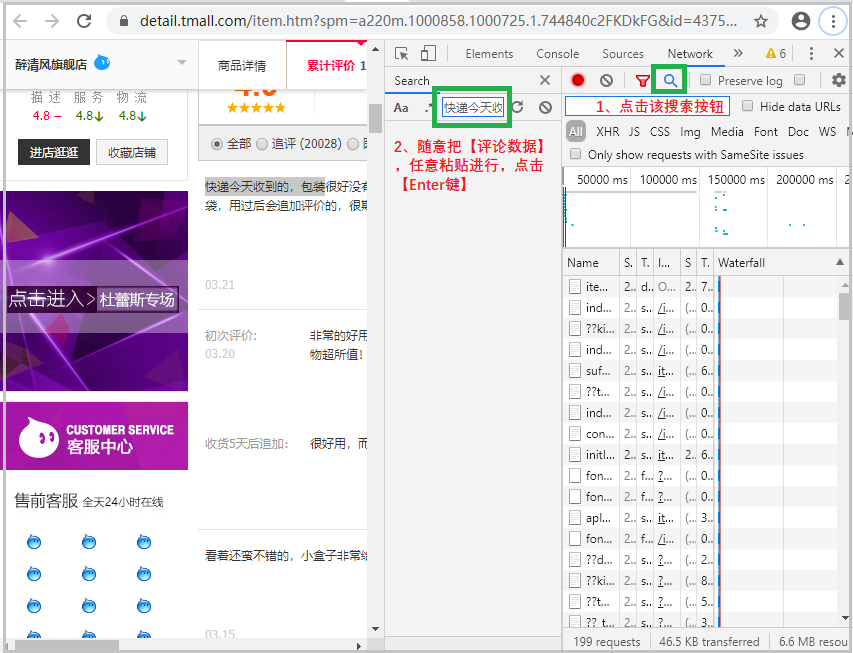

4) Click the [Search] button and search for some review text to locate true_url

When the panel below appears, follow the steps shown in the screenshot.

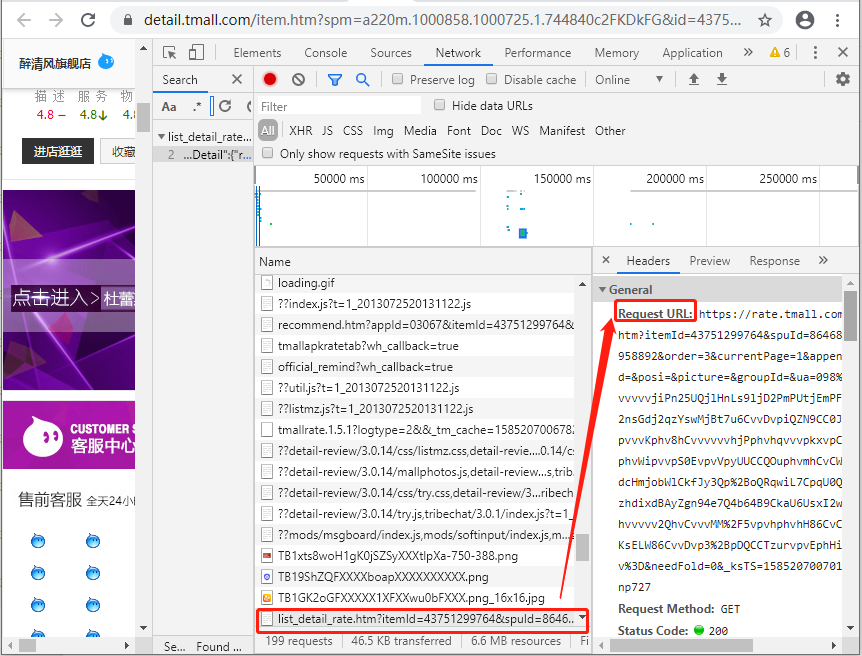

Next, look at the Request URL of that request: it is the true_url we are looking for. Don't believe it? Let's try it.

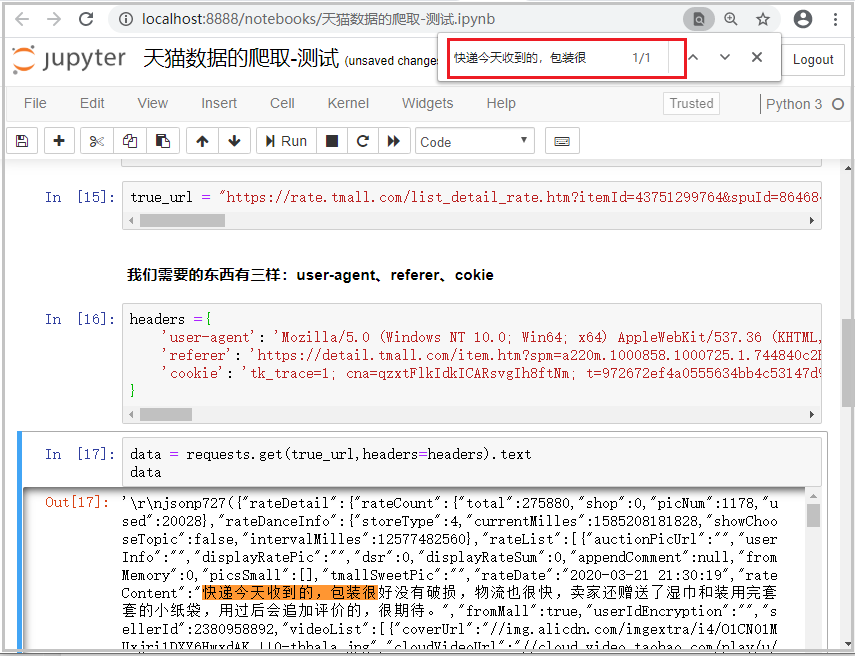
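To see what the review request actually carries, you can split its URL with the standard library's `urllib.parse`. The sketch below works on a shortened copy of true_url (the long `ua` and `_ksTS` values are left out for readability). It shows that the reviews come from a separate endpoint, rate.tmall.com, rather than from the product page, and that `currentPage` and `callback` are ordinary query parameters.

```python
from urllib.parse import urlsplit, parse_qs

# Shortened copy of true_url: the long ua and _ksTS values are omitted
url = ("https://rate.tmall.com/list_detail_rate.htm?itemId=43751299764"
       "&spuId=864684242&sellerId=2380958892&order=3&currentPage=1"
       "&append=0&content=1&tagId=&needFold=0&callback=jsonp727")

parts = urlsplit(url)
# keep_blank_values=True keeps empty parameters such as tagId=
params = parse_qs(parts.query, keep_blank_values=True)

print(parts.netloc, parts.path)  # rate.tmall.com /list_detail_rate.htm
for name in ("itemId", "sellerId", "currentPage", "callback"):
    print(name, params[name][0])
```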

6. Third request test

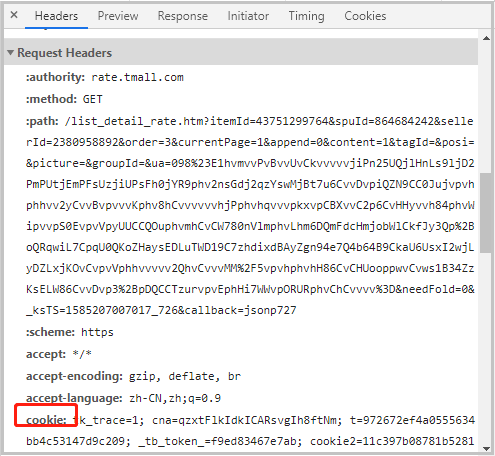

Starting from the screenshot above, scroll down past Request URL and find three entries under Request Headers: user-agent, referer, and cookie.

Paste each of these three values into headers as key-value pairs of a dictionary, then send the third request.
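Copying the three values one by one works fine. If you instead copy several raw `Name: value` header lines out of the Headers pane, a small helper can turn them into the same dictionary. This is a minimal sketch: `headers_from_raw` is a hypothetical helper, and the sample header text is abbreviated with made-up values.

```python
def headers_from_raw(raw: str, keep=("user-agent", "referer", "cookie")) -> dict:
    """Build a requests-style headers dict from 'Name: value' lines (hypothetical helper)."""
    headers = {}
    for line in raw.strip().splitlines():
        if ":" not in line:
            continue
        # Partition on the first ':' only, because values such as URLs contain ':'
        name, _, value = line.partition(":")
        name = name.strip().lower()
        if name in keep:  # HTTP/2 pseudo-headers like ':authority' end up with an empty name
            headers[name] = value.strip()
    return headers


raw = """
accept: */*
referer: https://detail.tmall.com/item.htm?id=43751299764
user-agent: Mozilla/5.0 (Windows NT 10.0; Win64; x64)
cookie: login=true; tk_trace=1
"""
print(headers_from_raw(raw))
```

Restricting the result to the three headers the tutorial uses keeps the dictionary short and avoids copying headers that requests sets on its own.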

```python
import requests

# The review API URL found in the Network panel (note the &currentPage=1 parameter)
true_url = "https://rate.tmall.com/list_detail_rate.htm?itemId=43751299764&spuId=864684242&sellerId=2380958892&order=3&currentPage=1&append=0&content=1&tagId=&posi=&picture=&groupId=&ua=098%23E1hvmvvPvBvvUvCkvvvvvjiPn25UQjlHnLs9ljD2PmPUtjEmPFsUzjiUPsFh0jYR9phv2nsGdj2qzYswMjBt7u6CvvDvpiQZN9CC0Jujvpvhphhvv2yCvvBvpvvvKphv8hCvvvvvvhjPphvhqvvvpkxvpCBXvvC2p6CvHHyvvh84phvWipvvpS0EvpvVpyUUCCQOuphvmhCvCW780nVlmphvLhm6DQmFdcHmjobWlCkfJy3Qp%2BoQRqwiL7CpqU0QKoZHaysEDLuTWD19C7zhdixdBAyZgn94e7Q4b64B9CkaU6UsxI2wjLyDZLxjKOvCvpvVphhvvvvv2QhvCvvvMM%2F5vpvhphvhH86CvCHUooppwvCvws1B34ZzKsELW86CvvDvp3%2BpDQCCTzurvpvEphHi7WWvpORURphvChCvvvv%3D&needFold=0&_ksTS=1585207007017_726&callback=jsonp727"

headers = {
    # Which browser is making the request
    'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.149 Safari/537.36',
    # Which page the data request is sent from; this varies slightly from site to site
    'referer': 'https://detail.tmall.com/item.htm?spm=a220m.1000858.1000725.1.744840c2FKDkFG&id=43751299764&skuId=4493124079453&areaId=421300&user_id=2380958892&cat_id=2&is_b=1&rn=388ceadeefb8d85e5bae2d83bd0b732a',
    # Which user wants the data (guest or registered user); a cookie from a logged-in session is recommended
    'cookie': 'tk_trace=1; cna=qzxtFlkIdkICARsvgIh8ftNm; t=972672ef4a0555634bb4c53147d9c209; _tb_token_=f9ed83467e7ab; cookie2=11c397b08781b52815002215ea5d1ad4; dnk=huang%5Cu81F3%5Cu5C0A; tracknick=huang%5Cu81F3%5Cu5C0A; lid=huang%E8%87%B3%E5%B0%8A; lgc=huang%5Cu81F3%5Cu5C0A; uc1=cookie16=UIHiLt3xCS3yM2h4eKHS9lpEOw%3D%3D&pas=0&existShop=false&cookie15=UtASsssmOIJ0bQ%3D%3D&cookie14=UoTUP2D4F2IHjA%3D%3D&cookie21=VFC%2FuZ9aiKCaj7AzMHh1; uc3=id2=UU8BrRJJcs7Z0Q%3D%3D&lg2=VT5L2FSpMGV7TQ%3D%3D&vt3=F8dBxd9hhEzOWS%2BU9Dk%3D&nk2=CzhMCY1UcRnL; _l_g_=Ug%3D%3D; uc4=id4=0%40U22GV4QHIgHvC14BqrCleMrzYb3K&nk4=0%40CX8JzNJ900MInLAoQ2Z33x1zsSo%3D; unb=2791663324; cookie1=BxeNCqlvVZOUgnKrsmThRXrLiXfQF7m%2FKvrURubODpk%3D; login=true; cookie17=UU8BrRJJcs7Z0Q%3D%3D; _nk_=huang%5Cu81F3%5Cu5C0A; sgcookie=E53NoUsJWtrYT7Pyx14Px; sg=%E5%B0%8A41; csg=8d6d2aae; enc=VZMEO%2BOI3U59DBFwyF9LE3kQNM84gfIKeZFLokEQSzC5TubpmVCJlS8olhYmgHiBe15Rvd8rsOeqeC1Em9GfWA%3D%3D; l=dBLKMV6rQcVJihfaBOfgSVrsTkQ9UIRb8sPrQGutMICP9ZCwNsyFWZ4Kb-8eCnGVHsMvR3oGfmN0BDTHXyIVokb4d_BkdlkmndC..; isg=BK2tcrfNj3CNMWubo5GaxlajvEknCuHcPbxLgO-yO8QhZswYt1ujrPVwUDqAZvmU'
}

data = requests.get(true_url, headers=headers).text
data
```

The result:

Analysis: after some back and forth, we finally have the data we want. The next job is to parse it.

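In real-world scraping you may run into many more anti-scraping measures. The hard part is usually not parsing the page but analyzing how the page loads its data and getting past those measures.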

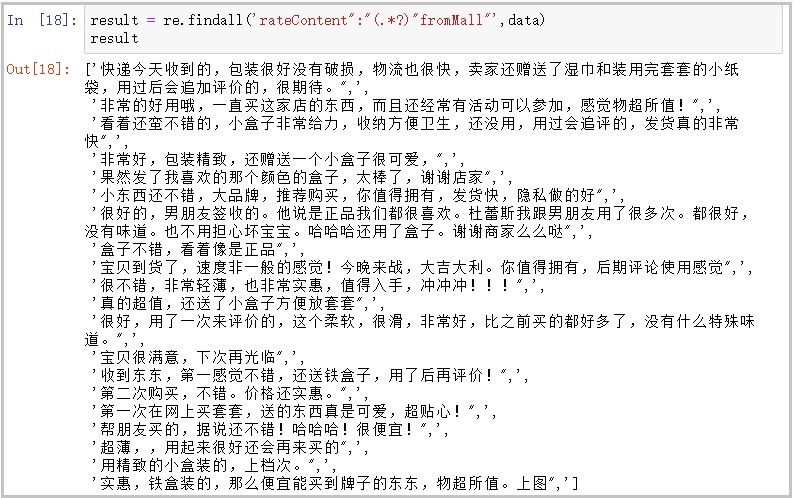
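One client-side habit that helps when a site throttles or occasionally fails is to retry transient errors with a growing delay instead of re-sending immediately. Below is a sketch using requests' `HTTPAdapter` with urllib3's `Retry`; the retry count, backoff factor, and status codes are illustrative choices, not values Tmall documents, and `make_session` is a hypothetical helper.

```python
import requests
from requests.adapters import HTTPAdapter
from urllib3.util.retry import Retry


def make_session(total_retries: int = 3, backoff: float = 1.0) -> requests.Session:
    """Session that retries on connection errors and on 429/5xx responses."""
    retry = Retry(
        total=total_retries,
        backoff_factor=backoff,  # the wait grows exponentially between retries
        status_forcelist=(429, 500, 502, 503, 504),
    )
    session = requests.Session()
    adapter = HTTPAdapter(max_retries=retry)
    # Apply the retry policy to every http:// and https:// URL
    session.mount("https://", adapter)
    session.mount("http://", adapter)
    return session
```

Usage would look like `session = make_session()`, `session.headers.update(headers)`, then `session.get(true_url, timeout=10).text`. A `Session` also keeps any cookies the server sets between requests.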

7. Extract the review text from the response

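```python
import re

# data is the response text from step 6. Each review's text is the value of the
# "rateContent" field, which is immediately followed by the "fromMall" field
result = re.findall('"rateContent":"(.*?)","fromMall"', data)
result
```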

The result:

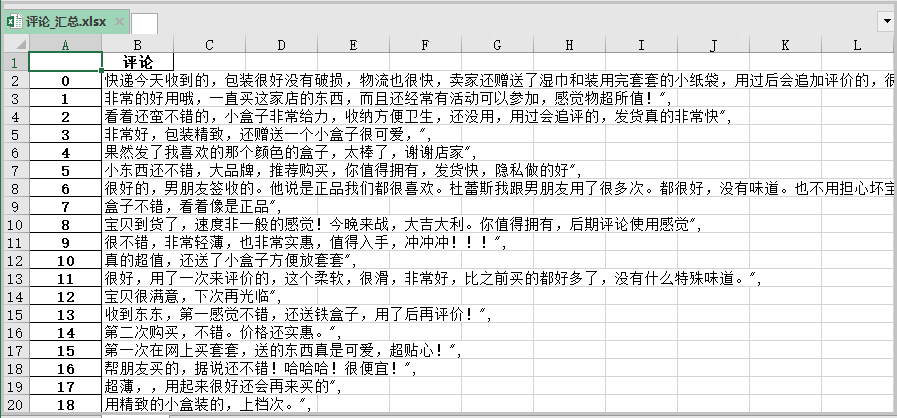
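Because true_url ends in `callback=jsonp727`, the response is JSONP: a JSON object wrapped in a function call such as `jsonp727(...)`. Instead of matching field names with a regex, you can strip the wrapper and parse the JSON, which also decodes any `\uXXXX` escapes for you. This is a minimal sketch: the `rateContent` field name comes from the regex above, but the nesting under `rateDetail` → `rateList` is an assumption to verify against your own response in the Network panel, and the sample response is made up.

```python
import json
import re


def parse_jsonp(text: str):
    """Strip a JSONP wrapper such as 'jsonp727({...})' and parse the JSON inside."""
    match = re.search(r"^\s*[\w$.]+\s*\((.*)\)\s*;?\s*$", text, re.S)
    if match is None:
        raise ValueError("response does not look like JSONP")
    return json.loads(match.group(1))


def extract_comments(text: str) -> list:
    payload = parse_jsonp(text)
    # Assumed layout: {"rateDetail": {"rateList": [{"rateContent": ...}, ...]}}
    rate_list = payload.get("rateDetail", {}).get("rateList", [])
    return [item.get("rateContent", "") for item in rate_list]


sample = ('\r\njsonp727({"rateDetail":{"rateList":['
          '{"rateContent":"好用","fromMall":true},'
          '{"rateContent":"物流很快","fromMall":true}]}})')
print(extract_comments(sample))
# ['好用', '物流很快']
```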

8. Scraping page by page (final code)

Of course we want more than a single page of reviews; we want to page through all of them. Look closely at true_url: it contains a currentPage=1 parameter, and changing that number changes which page of reviews comes back. Based on this, here is the complete scraper.

```python
import pandas as pd
import requests
import re
import time

# true_url from step 6, split just before and just after the page number
first = "https://rate.tmall.com/list_detail_rate.htm?itemId=43751299764&spuId=864684242&sellerId=2380958892&order=3&currentPage="
last = "&append=0&content=1&tagId=&posi=&picture=&groupId=&ua=098%23E1hvmvvPvBvvUvCkvvvvvjiPn25UQjlHnLs9ljD2PmPUtjEmPFsUzjiUPsFh0jYR9phv2nsGdj2qzYswMjBt7u6CvvDvpiQZN9CC0Jujvpvhphhvv2yCvvBvpvvvKphv8hCvvvvvvhjPphvhqvvvpkxvpCBXvvC2p6CvHHyvvh84phvWipvvpS0EvpvVpyUUCCQOuphvmhCvCW780nVlmphvLhm6DQmFdcHmjobWlCkfJy3Qp%2BoQRqwiL7CpqU0QKoZHaysEDLuTWD19C7zhdixdBAyZgn94e7Q4b64B9CkaU6UsxI2wjLyDZLxjKOvCvpvVphhvvvvv2QhvCvvvMM%2F5vpvhphvhH86CvCHUooppwvCvws1B34ZzKsELW86CvvDvp3%2BpDQCCTzurvpvEphHi7WWvpORURphvChCvvvv%3D&needFold=0&_ksTS=1585207007017_726&callback=jsonp727"

headers = {
    # Which browser is making the request
    'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.149 Safari/537.36',
    # Which page the data request is sent from; this varies slightly from site to site
    'referer': 'https://detail.tmall.com/item.htm?spm=a220m.1000858.1000725.1.744840c2FKDkFG&id=43751299764&skuId=4493124079453&areaId=421300&user_id=2380958892&cat_id=2&is_b=1&rn=388ceadeefb8d85e5bae2d83bd0b732a',
    # Which user wants the data (guest or registered user); a cookie from a logged-in session is recommended
    'cookie': 'tk_trace=1; cna=qzxtFlkIdkICARsvgIh8ftNm; t=972672ef4a0555634bb4c53147d9c209; _tb_token_=f9ed83467e7ab; cookie2=11c397b08781b52815002215ea5d1ad4; dnk=huang%5Cu81F3%5Cu5C0A; tracknick=huang%5Cu81F3%5Cu5C0A; lid=huang%E8%87%B3%E5%B0%8A; lgc=huang%5Cu81F3%5Cu5C0A; uc1=cookie16=UIHiLt3xCS3yM2h4eKHS9lpEOw%3D%3D&pas=0&existShop=false&cookie15=UtASsssmOIJ0bQ%3D%3D&cookie14=UoTUP2D4F2IHjA%3D%3D&cookie21=VFC%2FuZ9aiKCaj7AzMHh1; uc3=id2=UU8BrRJJcs7Z0Q%3D%3D&lg2=VT5L2FSpMGV7TQ%3D%3D&vt3=F8dBxd9hhEzOWS%2BU9Dk%3D&nk2=CzhMCY1UcRnL; _l_g_=Ug%3D%3D; uc4=id4=0%40U22GV4QHIgHvC14BqrCleMrzYb3K&nk4=0%40CX8JzNJ900MInLAoQ2Z33x1zsSo%3D; unb=2791663324; cookie1=BxeNCqlvVZOUgnKrsmThRXrLiXfQF7m%2FKvrURubODpk%3D; login=true; cookie17=UU8BrRJJcs7Z0Q%3D%3D; _nk_=huang%5Cu81F3%5Cu5C0A; sgcookie=E53NoUsJWtrYT7Pyx14Px; sg=%E5%B0%8A41; csg=8d6d2aae; enc=VZMEO%2BOI3U59DBFwyF9LE3kQNM84gfIKeZFLokEQSzC5TubpmVCJlS8olhYmgHiBe15Rvd8rsOeqeC1Em9GfWA%3D%3D; l=dBLKMV6rQcVJihfaBOfgSVrsTkQ9UIRb8sPrQGutMICP9ZCwNsyFWZ4Kb-8eCnGVHsMvR3oGfmN0BDTHXyIVokb4d_BkdlkmndC..; isg=BK2tcrfNj3CNMWubo5GaxlajvEknCuHcPbxLgO-yO8QhZswYt1ujrPVwUDqAZvmU'
}

data_list = []

for i in range(1, 300):
    print("Scraping page " + str(i))
    url = first + str(i) + last
    try:
        data = requests.get(url, headers=headers, timeout=10).text
        # Pause between requests so we don't hammer the server
        time.sleep(10)
        result = re.findall('"rateContent":"(.*?)","fromMall"', data)
        data_list.extend(result)
    except Exception as e:
        print("Failed to scrape this page:", e)

df = pd.DataFrame({"評論": data_list})
# Writing .xlsx files requires the openpyxl package
df.to_excel("評論_匯總.xlsx")
```

The result:

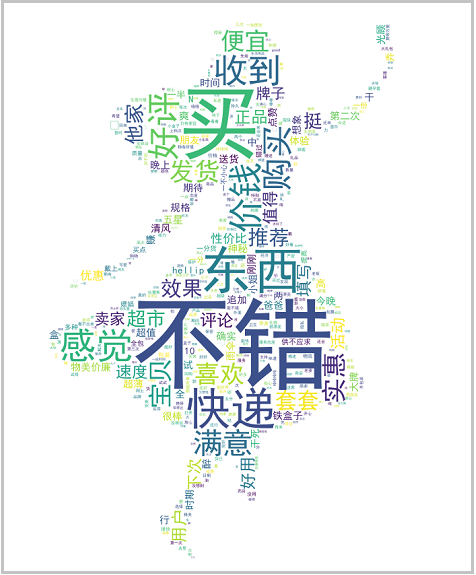
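The final code builds each page URL by gluing `first + page number + last`. A variant that rewrites only the `currentPage` parameter, leaving the rest of the query string alone, can be sketched with `urllib.parse`. Here `with_page` is a hypothetical helper and the URL is a shortened copy of true_url. Note that `urlencode` re-escapes every value, so the result is equivalent to the original but not guaranteed to be byte-for-byte identical for values with unusual characters.

```python
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode


def with_page(url: str, page: int) -> str:
    """Return url with its currentPage query parameter set to `page`."""
    parts = urlsplit(url)
    # keep_blank_values=True preserves empty parameters such as tagId=
    query = [(k, str(page) if k == "currentPage" else v)
             for k, v in parse_qsl(parts.query, keep_blank_values=True)]
    return urlunsplit(parts._replace(query=urlencode(query)))


base = ("https://rate.tmall.com/list_detail_rate.htm?itemId=43751299764"
        "&order=3&currentPage=1&append=0&tagId=&callback=jsonp727")
for page in range(1, 4):
    print(with_page(base, page))
```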

9. Building a word cloud
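The word-cloud code follows a common pipeline: segment each review into words, drop stop words, count frequencies, then render. The counting core can be sketched with `collections.Counter`; the token lists below are made-up stand-ins for jieba's output and the stop set is a tiny sample. `WordCloud.fit_words` takes a mapping of word to frequency, and a `Counter` is a `dict` subclass, so it could be passed in directly.

```python
from collections import Counter

# Stand-ins for the "評論" column after jieba.lcut: one token list per review (made-up data)
token_lists = [
    ["質量", "很", "好", "的"],
    ["物流", "很", "快", "\n"],
    ["質量", "不錯", "的"],
]
stop = {"的", "很", " ", "\n", "\t"}  # tiny sample stop-word set

# Count every token that is not a stop word, across all reviews
word_count = Counter(
    token for tokens in token_lists for token in tokens if token not in stop
)
print(word_count.most_common(2))
# [('質量', 2), ('好', 1)]
```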

```python
import numpy as np
import pandas as pd
import jieba
from wordcloud import WordCloud
import matplotlib.pyplot as plt
from imageio import imread
import warnings

warnings.filterwarnings("ignore")

# Load the scraped reviews
df = pd.read_excel("評論_匯總.xlsx")
df.head()

# Segment each review into words with jieba (empty rows are dropped first)
df = df.dropna(subset=["評論"])
df["評論"] = df["評論"].astype(str).apply(jieba.lcut)
df.head()

# Remove stop words
with open("stopword.txt", "r", encoding="gbk") as f:
    stop = f.read()  # the whole file as one string
# split() with no argument splits on spaces, \n and \t, so those characters never
# make it into the list; add them as stop words explicitly (a set makes lookups fast)
stop = set(stop.split()) | {" ", "\n", "\t"}
df_after = df["評論"].apply(lambda x: [i for i in x if i not in stop])
df_after.head()

# Word frequencies
all_words = []
for words in df_after:
    all_words.extend(words)
word_count = pd.Series(all_words).value_counts()
word_count.head(10)

# Draw the word cloud
# 1. Load the background (mask) image; adjust the paths to your own files
back_picture = imread(r"G:\6Tipdm\wordcloud\alice_color.png")
# 2. Configure the word cloud; a Chinese font such as SimHei is needed to render Chinese words
wc = WordCloud(font_path="G:\\6Tipdm\\wordcloud\\simhei.ttf",
               background_color="white",
               max_words=2000,
               mask=back_picture,
               max_font_size=200,
               random_state=42)
wc2 = wc.fit_words(word_count.to_dict())
# 3. Render and save the word cloud
plt.figure(figsize=(16, 8))
plt.imshow(wc2)
plt.axis("off")
plt.show()
wc.to_file("ciyun.png")
```

The result: