A Step-by-Step Guide to Deploying the NanoDet Model with OpenVINO

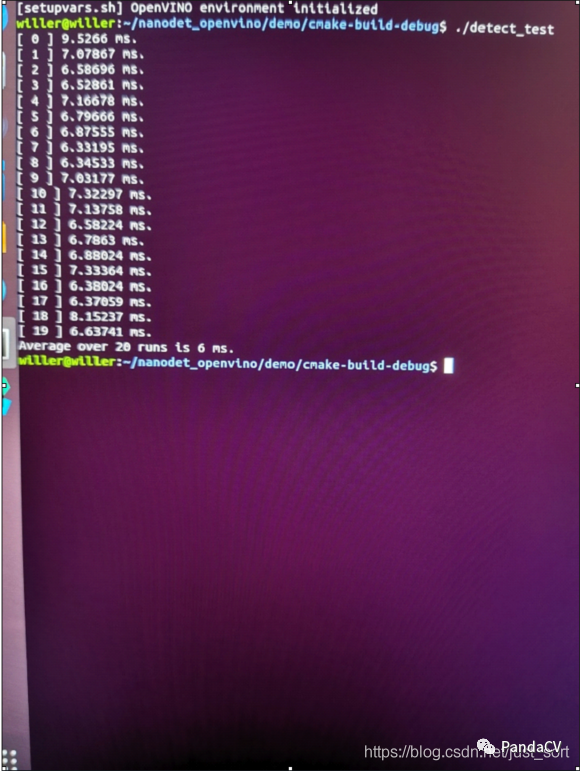

[GiantPandaCV] This article walks through deploying NanoDet with OpenVINO step by step and open-sources all of the deployment code. On an Intel i7-7700HQ CPU it reaches about 6 ms per frame.

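0x0. Introduction to NanoDet

NanoDet (https://github.com/RangiLyu/nanodet) is a very fast, lightweight, anchor-free object detection model. If you want to understand the algorithm itself, search for the earlier introduction published by Synced (Jiqizhixin).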

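0x1. Environment Setup

Ubuntu: 18.04

OpenVINO: 2020.4

OpenCV: 3.4.2

Prebuilt OpenVINO and OpenCV packages (you can also download the sources from the official sites and build them yourself) are available at https://pan.baidu.com/s/1zxtPKm-Q48Is5mzKbjGHeg (password: gw5c).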

Installing OpenVINO

tar -xvzf l_openvino_toolkit_p_2020.4.287.tgz

cd l_openvino_toolkit_p_2020.4.287

sudo ./install_GUI.sh  # click Next through every step of the installer

cd /opt/intel/openvino/install_dependencies

sudo ./install_openvino_dependencies.sh

vi ~/.bashrc

Append the following two lines to the end of ~/.bashrc:

source /opt/intel/openvino/bin/setupvars.sh

source /opt/intel/openvino/opencv/setupvars.sh

source ~/.bashrc  # activate the environment

Configuring the Model Optimizer

cd /opt/intel/openvino/deployment_tools/model_optimizer/install_prerequisites

sudo ./install_prerequisites_onnx.sh  # the model is converted from ONNX to IR, so only the ONNX dependencies are needed

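OpenCV Setup

tar -xvzf opencv-3.4.2.zip

Extract OpenCV into your home directory so that it can be referenced later. (This is a build I compiled myself; compile your own if you prefer.) If the archive turns out to be a genuine zip file rather than a gzipped tarball, use unzip opencv-3.4.2.zip instead.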

0x2. Training NanoDet and Converting to ONNX

git clone https://github.com/Wulingtian/nanodet.git

cd nanodet

cd config  # edit the model config file, then train the model

From the nanodet directory, go into the tools directory, open export.py, and set the three parameters cfg_path, model_path, and out_path.

From the nanodet directory, run python tools/export.py to get the converted ONNX model.

python /opt/intel/openvino/deployment_tools/model_optimizer/mo_onnx.py --input_model <your ONNX model> --output_dir <directory for the converted model>

This produces the IR files.

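0x3. Deploying the NanoDet Model

sudo apt install cmake  # install cmake

git clone https://github.com/Wulingtian/nanodet_openvino.git (stars welcome!)

cd nanodet_openvino, then open CMakeLists.txt and update OpenCV_INCLUDE_DIRS and OpenCV_LIBS_DIR. OpenCV was extracted to your home directory earlier, so set both to match your own paths.

From nanodet_openvino, cd models and copy the IR model generated earlier (both the .bin and .xml files) into this directory.

From nanodet_openvino, cd test_imgs and copy the images you want to test into this directory.

From nanodet_openvino, edit main.cpp and change the xml_path parameter to "../models/<your model name>.xml".

Edit num_class to set the number of classes. For example, my model detects safety helmets and has only one class, so I set it to 1.

Edit src to set the test image path: change the src parameter to "../test_imgs/<your test image>".

Back in nanodet_openvino, build the project:

mkdir build; cd build; cmake .. ; make

./detect_test prints the average inference time and saves the prediction image to the current directory. That completes the deployment!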
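The article does not show how detect_test computes the average inference time. As a minimal sketch only (the helper name `average_ms` and the callable it takes are hypothetical, not the repository's code), one common approach is to time repeated calls with std::chrono and divide by the number of runs:

```cpp
#include <cassert>
#include <chrono>
#include <functional>

// Hypothetical helper (not from nanodet_openvino): calls `fn` `runs` times
// and returns the mean wall-clock time per call in milliseconds.
double average_ms(const std::function<void()> &fn, int runs) {
    assert(runs > 0);
    using Clock = std::chrono::steady_clock;
    const auto start = Clock::now();
    for (int i = 0; i < runs; ++i) {
        fn();
    }
    // Convert the elapsed time to fractional milliseconds.
    const std::chrono::duration<double, std::milli> elapsed = Clock::now() - start;
    return elapsed.count() / runs;
}
```

In main.cpp the callable could wrap a single inference call. Running a few untimed warm-up iterations first usually gives a steadier average.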

0x4. Core Code at a Glance

// Preprocess the image: aspect-preserving resize, padding, and normalization
std::vector<float> Detector::prepareImage(cv::Mat &src_img){
    std::vector<float> result(INPUT_W * INPUT_H * 3);
    float *data = result.data();
    // Use the smaller of the two scale factors so the whole image fits in the input
    float ratio = float(INPUT_W) / float(src_img.cols) < float(INPUT_H) / float(src_img.rows) ? float(INPUT_W) / float(src_img.cols) : float(INPUT_H) / float(src_img.rows);
    cv::Mat flt_img = cv::Mat::zeros(cv::Size(INPUT_W, INPUT_H), CV_8UC3);
    cv::Mat rsz_img = cv::Mat::zeros(cv::Size(src_img.cols*ratio, src_img.rows*ratio), CV_8UC3);
    cv::resize(src_img, rsz_img, cv::Size(), ratio, ratio);
    // Paste the resized image into the top-left corner; the rest stays zero-padded
    rsz_img.copyTo(flt_img(cv::Rect(0, 0, rsz_img.cols, rsz_img.rows)));
    flt_img.convertTo(flt_img, CV_32FC3);
    int channelLength = INPUT_W * INPUT_H;
    // Planar views into `result`, in reversed channel order.
    // cv::Mat takes (rows, cols), i.e. (height, width).
    std::vector<cv::Mat> split_img = {
            cv::Mat(INPUT_H, INPUT_W, CV_32FC1, data + channelLength * 2),
            cv::Mat(INPUT_H, INPUT_W, CV_32FC1, data + channelLength),
            cv::Mat(INPUT_H, INPUT_W, CV_32FC1, data)
    };
    cv::split(flt_img, split_img);
    for (int i = 0; i < 3; i++) {
        split_img[i] = (split_img[i] - img_mean[i]) / img_std[i];
    }
    return result;
}
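To make the two ideas in prepareImage easier to follow without OpenCV, here is a minimal standalone sketch. It assumes a tightly packed 8-bit BGR buffer; `LetterboxInfo`, `computeLetterbox`, and `toPlanar` are hypothetical names introduced for illustration, not part of the repository. The first function computes the aspect-preserving scale; the second converts interleaved pixels into normalized planes with the channel order reversed, as the split_img views above do.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical description of the aspect-preserving resize.
struct LetterboxInfo {
    float ratio;  // scale applied to the source image
    int new_w;    // width of the resized image inside the network input
    int new_h;    // height of the resized image inside the network input
};

// Pick the smaller scale factor so the whole image fits into the input.
LetterboxInfo computeLetterbox(int src_w, int src_h, int input_w, int input_h) {
    assert(src_w > 0 && src_h > 0 && input_w > 0 && input_h > 0);
    const float ratio = std::min(float(input_w) / float(src_w),
                                 float(input_h) / float(src_h));
    // Rounded to the nearest pixel, as cv::resize does when given scale factors.
    return {ratio, int(std::lround(src_w * ratio)), int(std::lround(src_h * ratio))};
}

// Convert interleaved BGR bytes into planar floats. Source channel c is
// normalized with mean[c] / stdev[c] and written to plane (2 - c).
std::vector<float> toPlanar(const std::vector<unsigned char> &bgr, int w, int h,
                            const std::array<float, 3> &mean,
                            const std::array<float, 3> &stdev) {
    assert(w > 0 && h > 0);
    assert(bgr.size() == static_cast<std::size_t>(w) * h * 3);
    const int plane = w * h;
    std::vector<float> out(bgr.size());
    for (int p = 0; p < plane; ++p) {
        for (int c = 0; c < 3; ++c) {
            out[(2 - c) * plane + p] = (bgr[p * 3 + c] - mean[c]) / stdev[c];
        }
    }
    return out;
}
```

Because the resized image is pasted at the top-left corner, mapping a network-space coordinate back to the source image only requires dividing by `ratio`; no padding offset is involved.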

// Load the IR model and initialize the network
bool Detector::init(string xml_path, double cof_threshold, double nms_area_threshold, int input_w, int input_h, int num_class, int r_rows, int r_cols, std::vector<int> s, std::vector<float> i_mean, std::vector<float> i_std){
    _xml_path = xml_path;
    _cof_threshold = cof_threshold;
    _nms_area_threshold = nms_area_threshold;
    INPUT_W = input_w;
    INPUT_H = input_h;
    NUM_CLASS = num_class;
    refer_rows = r_rows;
    refer_cols = r_cols;
    strides = s;
    img_mean = i_mean;
    img_std = i_std;
    Core ie;
    // Read the network from the .xml file produced by the Model Optimizer
    auto cnnNetwork = ie.ReadNetwork(_xml_path);
    InputsDataMap inputInfo(cnnNetwork.getInputsInfo());
    InputInfo::Ptr& input = inputInfo.begin()->second;
    _input_name = inputInfo.begin()->first;
    input->setPrecision(Precision::FP32);
    input->getInputData()->setLayout(Layout::NCHW);
    ICNNNetwork::InputShapes inputShapes = cnnNetwork.getInputShapes();
    SizeVector& inSizeVector = inputShapes.begin()->second;
    cnnNetwork.reshape(inputShapes);
    _outputinfo = OutputsDataMap(cnnNetwork.getOutputsInfo());
    for (auto &output : _outputinfo) {
        output.second->setPrecision(Precision::FP32);
    }
    // Compile the network for the CPU plugin
    _network = ie.LoadNetwork(cnnNetwork, "CPU");
    return true;
}
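init receives r_rows, r_cols, and the strides, but the article does not show GenerateReferMatrix, which uses them. The sketch below is an assumption about what such a function could look like, not the repository's code: it emits one reference point per feature-map cell for every stride, stored as (center x, center y, stride), which matches how postProcess reads anchor[0], anchor[1], and anchor[2]. The exact cell-center formula and the names `ReferPoint` and `generateReferPoints` are hypothetical.

```cpp
#include <array>
#include <cassert>
#include <vector>

// One reference point: {center_x, center_y, stride}.
using ReferPoint = std::array<float, 3>;

// Hypothetical stand-in for GenerateReferMatrix(): one point per feature-map
// cell for every stride. The cell-center formula is an assumption.
std::vector<ReferPoint> generateReferPoints(int input_w, int input_h,
                                            const std::vector<int> &strides) {
    std::vector<ReferPoint> points;
    for (int stride : strides) {
        assert(stride > 0);
        for (int h = 0; h < input_h / stride; ++h) {
            for (int w = 0; w < input_w / stride; ++w) {
                points.push_back({(w + 0.5f) * stride, (h + 0.5f) * stride,
                                  float(stride)});
            }
        }
    }
    return points;
}
```

Under these assumptions, a 320x320 input with strides 8, 16, and 32 gives 40*40 + 20*20 + 10*10 = 2100 points, which is the value r_rows would need to take, with r_cols equal to 3.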

// Run inference and fetch the output
vector<Detector::Bbox> Detector::process_frame(Mat& inframe) {
    cv::Mat showImage = inframe.clone();
    std::vector<float> pr_img = prepareImage(inframe);
    InferRequest::Ptr infer_request = _network.CreateInferRequestPtr();
    Blob::Ptr frameBlob = infer_request->GetBlob(_input_name);
    // Map the input blob for writing and copy the preprocessed image into it
    InferenceEngine::LockedMemory<void> blobMapped = InferenceEngine::as<InferenceEngine::MemoryBlob>(frameBlob)->wmap();
    float* blob_data = blobMapped.as<float*>();
    memcpy(blob_data, pr_img.data(), 3 * INPUT_H * INPUT_W * sizeof(float));
    infer_request->Infer();
    vector<Rect> origin_rect;
    vector<float> origin_rect_cof;
    int i=0;
    vector<Bbox> bboxes;
    // Each output overwrites bboxes, so this effectively assumes a single output blob
    for (auto &output : _outputinfo) {
        auto output_name = output.first;
        Blob::Ptr blob = infer_request->GetBlob(output_name);
        LockedMemory<const void> blobMapped = as<MemoryBlob>(blob)->rmap();
        float *output_blob = blobMapped.as<float *>();
        bboxes = postProcess(showImage,output_blob);
        ++i;
    }
    return bboxes;
}

// Decode the model output and apply NMS
std::vector<Detector::Bbox> Detector::postProcess(const cv::Mat &src_img,
                              float *output) {
    GenerateReferMatrix();
    std::vector<Detector::Bbox> result;
    float *out = output;
    // Inverse of the preprocessing scale: maps network coordinates back to the source image
    float ratio = std::max(float(src_img.cols) / float(INPUT_W), float(src_img.rows) / float(INPUT_H));
    // Each row holds 4 box distances followed by NUM_CLASS class scores
    cv::Mat result_matrix = cv::Mat(refer_rows, NUM_CLASS + 4, CV_32FC1, out);
    for (int row_num = 0; row_num < refer_rows; row_num++) {
        Detector::Bbox box;
        auto *row = result_matrix.ptr<float>(row_num);
        auto max_pos = std::max_element(row + 4, row + NUM_CLASS + 4);
        box.prob = row[max_pos - row];
        if (box.prob < _cof_threshold)
            continue;
        box.classes = max_pos - row - 4;
        auto *anchor = refer_matrix.ptr<float>(row_num);
        // anchor = (center x, center y, stride); row[0..3] are scaled by the stride
        box.x = (anchor[0] - row[0] * anchor[2] + anchor[0] + row[2] * anchor[2]) / 2 * ratio;
        box.y = (anchor[1] - row[1] * anchor[2] + anchor[1] + row[3] * anchor[2]) / 2 * ratio;
        box.w = (row[2] + row[0]) * anchor[2] * ratio;
        box.h = (row[3] + row[1]) * anchor[2] * ratio;
        result.push_back(box);
    }
    NmsDetect(result);
    return result;
}
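The decoding arithmetic in postProcess and the NMS step it calls can be illustrated without OpenCV. The sketch below is a standalone illustration, not the repository's code. `decode` repeats the box arithmetic from postProcess, treating the four row values as left, top, right, and bottom distances in units of the stride. NmsDetect is not shown in the article, so `nms` is an assumed greedy IoU-based implementation; `Box`, `iou`, and both function names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical box type: center (x, y), size (w, h), and score.
struct Box {
    float x, y, w, h, prob;
};

// Same arithmetic as postProcess: dist = {left, top, right, bottom} in
// stride units around the reference point (cx, cy); ratio maps the result
// back to source-image pixels.
Box decode(float cx, float cy, float stride, const float dist[4],
           float ratio, float prob) {
    const float x1 = cx - dist[0] * stride;
    const float y1 = cy - dist[1] * stride;
    const float x2 = cx + dist[2] * stride;
    const float y2 = cy + dist[3] * stride;
    return {(x1 + x2) / 2 * ratio, (y1 + y2) / 2 * ratio,
            (dist[0] + dist[2]) * stride * ratio,
            (dist[1] + dist[3]) * stride * ratio, prob};
}

// Intersection-over-union of two center-format boxes.
float iou(const Box &a, const Box &b) {
    const float left = std::max(a.x - a.w / 2, b.x - b.w / 2);
    const float right = std::min(a.x + a.w / 2, b.x + b.w / 2);
    const float top = std::max(a.y - a.h / 2, b.y - b.h / 2);
    const float bottom = std::min(a.y + a.h / 2, b.y + b.h / 2);
    const float inter = std::max(0.0f, right - left) * std::max(0.0f, bottom - top);
    const float uni = a.w * a.h + b.w * b.h - inter;
    return uni > 0.0f ? inter / uni : 0.0f;
}

// Greedy NMS: visit boxes by descending score and keep a box only if it
// does not overlap an already kept box by more than iou_threshold.
std::vector<Box> nms(std::vector<Box> boxes, float iou_threshold) {
    assert(iou_threshold >= 0.0f && iou_threshold <= 1.0f);
    std::sort(boxes.begin(), boxes.end(),
              [](const Box &a, const Box &b) { return a.prob > b.prob; });
    std::vector<Box> kept;
    for (const Box &candidate : boxes) {
        bool suppressed = false;
        for (const Box &k : kept) {
            if (iou(candidate, k) > iou_threshold) {
                suppressed = true;
                break;
            }
        }
        if (!suppressed) {
            kept.push_back(candidate);
        }
    }
    return kept;
}
```

This version ignores class labels, so overlapping boxes of different classes suppress each other. A per-class variant would compare only boxes that share the same class index.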

0x5. Inference Time and Prediction Results

That completes the deployment of NanoDet on an x86 CPU. I hope you find it helpful.
