Tuning LightGBM in R: An Imbalanced Binary Classification Problem (with Code)

Reposted from | Big Data Digest (大數據文摘)

Author | 蘇高生 (Su Gaosheng)

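The data used in this case study come from the Kaggle competition "Santander Customer Satisfaction". It is an imbalanced binary classification problem, and the goal is to maximize AUC (the area under the ROC curve). The competition has already ended.

Competition link:

https://www.kaggle.com/c/santander-customer-satisfaction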

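2. Modeling approach

This document uses Microsoft's open-source LightGBM algorithm for classification; it runs very fast. The steps are:

Read the data;

Parallel computation: the lightgbm package can run in parallel through its own parameters, so the doParallel and foreach packages are not used for this;

Feature selection: use the mlr package to keep the features that account for 99% of the cumulative chi.squared score;

Parameter tuning: tune the parameters passed to lgb.cv one at a time, and repeat the process until the result is satisfactory;

Prediction: build a LightGBM model with the tuned parameter values and output the predictions. The AUC of the output produced by this program is 0.833386, which is higher than the first-place result on the Private Leaderboard (0.829072).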

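3. The LightGBM algorithm

Because no explicit mathematical formulas are given for the LightGBM algorithm, it is not described here; if needed, see the project's GitHub page.

Detailed description of LightGBM: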

https://github.com/Microsoft/LightGBM

Reading the data

options(java.parameters = "-Xmx8g") ## used during feature selection, but must be set before the packages are loaded

library(readr)

lgb_tr1 <- read_csv("C:/Users/Administrator/Documents/kaggle/scs_lgb/train.csv")

lgb_te1 <- read_csv("C:/Users/Administrator/Documents/kaggle/scs_lgb/test.csv")

Data exploration

1. Set up parallel computation

library(dplyr)

library(mlr)

library(parallelMap)

parallelStartSocket(2)

2. First look at each column

summarizeColumns(lgb_tr1) %>% View()

3. Handle missing values

## impute missing values with the column mean
imp_tr1 <- impute(
    as.data.frame(lgb_tr1),
    classes = list(
        integer = imputeMean(),
        numeric = imputeMean()
    )
)
imp_te1 <- impute(
    as.data.frame(lgb_te1),
    classes = list(
        integer = imputeMean(),
        numeric = imputeMean()
    )
)

## after imputation
summarizeColumns(imp_tr1$data) %>% View()

4. Check the class proportions in the training data: the classes are imbalanced

table(lgb_tr1$TARGET)
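As a small illustration of this check, the sketch below computes class counts, class proportions, and the negative-to-positive ratio on a made-up label vector. `y_toy` is hypothetical and is not the competition data; only the ratio expression mirrors the one the article later applies to TARGET.

```r
# Toy 0/1 label vector; hypothetical values, not the Santander data
y_toy <- c(rep(0, 96), rep(1, 4))

class_counts <- table(y_toy)              # counts per class
class_share  <- prop.table(class_counts)  # proportions per class

# Negatives per positive
imbalance_ratio <- as.numeric(class_counts["0"] / class_counts["1"])

print(class_counts)
print(round(class_share, 3))
print(imbalance_ratio)
```

A ratio well above 1 is what motivates the observation weights searched over in the tuning steps.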

5. Remove constant columns from the data sets

lgb_tr2 <- removeConstantFeatures(imp_tr1$data)

lgb_te2 <- removeConstantFeatures(imp_te1$data)

6. Keep only the columns shared by the training and test sets

## columns present in both data sets (TARGET exists only in the training set)
common_cols <- intersect(colnames(lgb_tr2), colnames(lgb_te2))
## drop the first shared column, as the original code does (the row ID in this data set)
feature_cols <- common_cols[-1]
TARGET <- data.frame(TARGET = lgb_tr2$TARGET)
lgb_tr2 <- lgb_tr2[, feature_cols]
lgb_te2 <- lgb_te2[, feature_cols]
lgb_tr2 <- cbind(lgb_tr2, TARGET)

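Notes:

1) Because LightGBM is used here, the data are not standardized;

2) LightGBM is very efficient: for data under 1 GB it runs very fast even without feature selection, but feature selection is applied here to speed it up further;

3) This case study goes straight to feature selection without creating derived variables, because the real meaning of the features is unknown and it is hard to generate them arbitrarily.

Feature selection: chi-squared test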

library(lightgbm)

1. Trial run to find the best weight; this is refined further below

grid_search <- expand.grid(
    weight = seq(1, 30, 2)
    ## table(lgb_tr1$TARGET)[1] / table(lgb_tr1$TARGET)[2] = 24.27261
    ## so weight is searched within [1, 30]
)
lgb_rate_1 <- numeric(length = nrow(grid_search))
set.seed(0)
for(i in 1:nrow(grid_search)){
    ## use the grid value itself, not the loop index, so the plot's x axis matches
    w <- grid_search[i, 'weight']
    lgb_weight <- (lgb_tr2$TARGET * w + 1) / sum(lgb_tr2$TARGET * w + 1)

    lgb_train <- lgb.Dataset(
        ## assumes the first 300 columns are features and TARGET is the last column
        data = data.matrix(lgb_tr2[, 1:300]),
        label = lgb_tr2$TARGET,
        free_raw_data = FALSE,
        weight = lgb_weight
    )

    # parameters (learning_rate and num_threads are passed through params)
    params <- list(
        objective = 'binary',
        metric = 'auc',
        learning_rate = .1,
        num_threads = 2
    )
    # cross-validation
    lgb_tr2_mod <- lgb.cv(
        params,
        data = lgb_train,
        nrounds = 300,
        stratified = TRUE,
        nfold = 10,
        early_stopping_rounds = 10
    )
    ## AUC of the last recorded round
    auc_path <- unlist(lgb_tr2_mod$record_evals$valid$auc$eval)
    lgb_rate_1[i] <- auc_path[length(auc_path)]
}
library(ggplot2)
grid_search$perf <- lgb_rate_1
ggplot(grid_search, aes(x = weight, y = perf)) +
    geom_point()

The plot shows that AUC is not very sensitive to the weight; it peaks at weight = 5.
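To make the weighting formula used in the loop concrete, here is a minimal worked example on a made-up label vector (`y_toy` and `w` are hypothetical). It shows that negatives receive a raw weight of 1 and positives a raw weight of w + 1, and that the normalized weights sum to 1.

```r
# Toy labels: hypothetical, 8 negatives and 2 positives
y_toy <- c(0, 0, 0, 0, 1, 0, 0, 1, 0, 0)
w <- 4  # candidate weight multiplier for the positive class

# Same formula as in the tuning loop: negatives get 1, positives get w + 1,
# then everything is normalized to sum to 1
raw_weight <- y_toy * w + 1
obs_weight <- raw_weight / sum(raw_weight)

# Ratio of a positive row's weight to a negative row's weight
pos_to_neg <- obs_weight[y_toy == 1][1] / obs_weight[y_toy == 0][1]

print(obs_weight)
print(pos_to_neg)
```

With this formula a multiplier of w makes each positive row count w + 1 times as much as a negative row, so w = 0 corresponds to no reweighting.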

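3. Feature selection

1) Compute the filter values

lgb_tr2$TARGET <- factor(lgb_tr2$TARGET)
lgb.task <- makeClassifTask(data = lgb_tr2, target = 'TARGET')
## note: oversample() duplicates minority-class rows; despite the variable name, this is not SMOTE
lgb.task.smote <- oversample(lgb.task, rate = 5)
fv_time <- system.time(
    fv <- generateFilterValuesData(
        lgb.task.smote,
        method = c('chi.squared')
        ## information gain or the chi-squared test can be used here; the random forest method is not recommended because it is very slow
        ## if interested, filtering by IV (information value) can also be tried
        ## feature engineering sets the upper bound on the target metric (AUC here), so the filter method can be treated as a hyperparameter
    )
)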

2) Plot the filter values

# plotFilterValues(fv)

plotFilterValuesGGVIS(fv)

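3) Keep the features that account for 99% of the cumulative chi.squared score (LightGBM is very efficient, so more variables can be retained)

Note: the X in "keep X% of chi.squared" can be treated as a hyperparameter.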

fv_data2 <- fv$data %>%
    arrange(desc(chi.squared)) %>%
    mutate(chi_gain_cul = cumsum(chi.squared) / sum(chi.squared))
fv_data2_filter <- fv_data2 %>% filter(chi_gain_cul <= 0.99)
dim(fv_data2_filter) ## about half of the predictors are removed
## as.character() guards against the name column being a factor
fv_feature <- as.character(fv_data2_filter$name)
lgb_tr3 <- lgb_tr2[, c(fv_feature, 'TARGET')]
lgb_te3 <- lgb_te2[, fv_feature]
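The cumulative-share rule can be seen on a tiny example. The sketch below uses base R only and a made-up score table (`fv_toy`, its feature names, and the 0.96 threshold are all hypothetical); it sorts by score, computes the running share of the total, and keeps the features up to the threshold.

```r
# Hypothetical filter scores; names and values are made up for illustration
fv_toy <- data.frame(
    name = c("f1", "f2", "f3", "f4", "f5"),
    chi.squared = c(50, 30, 15, 4, 1),
    stringsAsFactors = FALSE
)

# Sort by score (descending) and compute the cumulative share of the total
fv_toy <- fv_toy[order(-fv_toy$chi.squared), ]
fv_toy$cum_share <- cumsum(fv_toy$chi.squared) / sum(fv_toy$chi.squared)

# Keep features whose cumulative share does not exceed the threshold
threshold <- 0.96
kept <- fv_toy$name[fv_toy$cum_share <= threshold]

print(fv_toy)
print(kept)
```

Because the comparison is `<=`, the feature whose score pushes the running share past the threshold is itself excluded; that is also how the filter in the article behaves.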

4) Write out the data

write_csv(lgb_tr3, 'C:/users/Administrator/Documents/kaggle/scs_lgb/lgb_tr3_chi.csv')

write_csv(lgb_te3, 'C:/users/Administrator/Documents/kaggle/scs_lgb/lgb_te3_chi.csv')

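Algorithm

## rxImport comes from the RevoScaleR package (Microsoft R); readr::read_csv() is an alternative
lgb_tr <- rxImport('C:/Users/Administrator/Documents/kaggle/scs_lgb/lgb_tr3_chi.csv')
lgb_te <- rxImport('C:/Users/Administrator/Documents/kaggle/scs_lgb/lgb_te3_chi.csv')
## it is better to read lgb_te only at prediction time, to save memory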

library(lightgbm)

1. Tune the weight parameter

grid_search <- expand.grid(
    weight = 1:30
)
perf_weight_1 <- numeric(length = nrow(grid_search))
for(i in 1:nrow(grid_search)){
    lgb_weight <- (lgb_tr$TARGET * i + 1) / sum(lgb_tr$TARGET * i + 1)

    lgb_train <- lgb.Dataset(
        data = data.matrix(lgb_tr[, 1:148]),
        label = lgb_tr$TARGET,
        free_raw_data = FALSE,
        weight = lgb_weight
    )

    # parameters
    params <- list(
        objective = 'binary',
        metric = 'auc',
        learning_rate = .1,
        num_threads = 2
    )
    # cross-validation
    lgb_tr_mod <- lgb.cv(
        params,
        data = lgb_train,
        nrounds = 300,
        stratified = TRUE,
        nfold = 10,
        early_stopping_rounds = 10
    )
    auc_path <- unlist(lgb_tr_mod$record_evals$valid$auc$eval)
    perf_weight_1[i] <- auc_path[length(auc_path)]
}
library(ggplot2)
grid_search$perf <- perf_weight_1
ggplot(grid_search, aes(x = weight, y = perf)) +
    geom_point() +
    geom_smooth()

The plot shows that AUC peaks at weight = 4 and then trends downward.
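The tuning loops record the last element of the evaluation history. With early stopping, the last round is generally not the best one, so it may be worth comparing the two. The sketch below does this on a hypothetical list shaped like the one the article unlists from record_evals$valid$auc$eval; the values are made up, and which quantity to record is a judgment call rather than something the article prescribes.

```r
# Hypothetical per-round validation AUC history (values are made up)
eval_toy <- list(0.801, 0.815, 0.822, 0.826, 0.825, 0.824, 0.823)

auc_path <- unlist(eval_toy)

last_auc  <- auc_path[length(auc_path)]  # what the tuning loops store
best_auc  <- max(auc_path)               # best round seen during training
best_iter <- which.max(auc_path)         # round at which the best AUC occurred

print(c(last = last_auc, best = best_auc, best_iter = best_iter))
```

If the two numbers differ noticeably across grid points, ranking parameter values by the best-round AUC may give a different winner than ranking by the last-round AUC.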

2. Tune the learning_rate parameter

grid_search <- expand.grid(
    learning_rate = 2 ^ (-(8:1))
)
perf_learning_rate_1 <- numeric(length = nrow(grid_search))
for(i in 1:nrow(grid_search)){
    lgb_weight <- (lgb_tr$TARGET * 4 + 1) / sum(lgb_tr$TARGET * 4 + 1)

    lgb_train <- lgb.Dataset(
        data = data.matrix(lgb_tr[, 1:148]),
        label = lgb_tr$TARGET,
        free_raw_data = FALSE,
        weight = lgb_weight
    )

    # parameters
    params <- list(
        objective = 'binary',
        metric = 'auc',
        learning_rate = grid_search[i, 'learning_rate'],
        num_threads = 2
    )
    # cross-validation
    lgb_tr_mod <- lgb.cv(
        params,
        data = lgb_train,
        nrounds = 300,
        stratified = TRUE,
        nfold = 10,
        early_stopping_rounds = 10
    )
    auc_path <- unlist(lgb_tr_mod$record_evals$valid$auc$eval)
    perf_learning_rate_1[i] <- auc_path[length(auc_path)]
}
grid_search$perf <- perf_learning_rate_1
ggplot(grid_search, aes(x = learning_rate, y = perf)) +
    geom_point() +
    geom_smooth()

The plot shows that AUC peaks at learning_rate = 2^(-5), but the values for 2^(-(6:3)) differ very little, so learning_rate = .125 is used to speed up training.

3. Tune the num_leaves parameter

grid_search <- expand.grid(
    learning_rate = .125,
    num_leaves = seq(50, 800, 50)
)
perf_num_leaves_1 <- numeric(length = nrow(grid_search))
for(i in 1:nrow(grid_search)){
    lgb_weight <- (lgb_tr$TARGET * 4 + 1) / sum(lgb_tr$TARGET * 4 + 1)

    lgb_train <- lgb.Dataset(
        data = data.matrix(lgb_tr[, 1:148]),
        label = lgb_tr$TARGET,
        free_raw_data = FALSE,
        weight = lgb_weight
    )

    # parameters
    params <- list(
        objective = 'binary',
        metric = 'auc',
        learning_rate = grid_search[i, 'learning_rate'],
        num_leaves = grid_search[i, 'num_leaves'],
        num_threads = 2
    )
    # cross-validation
    lgb_tr_mod <- lgb.cv(
        params,
        data = lgb_train,
        nrounds = 300,
        stratified = TRUE,
        nfold = 10,
        early_stopping_rounds = 10
    )
    auc_path <- unlist(lgb_tr_mod$record_evals$valid$auc$eval)
    perf_num_leaves_1[i] <- auc_path[length(auc_path)]
}
grid_search$perf <- perf_num_leaves_1
ggplot(grid_search, aes(x = num_leaves, y = perf)) +
    geom_point() +
    geom_smooth()

The plot shows that AUC peaks at num_leaves = 650.
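Every tuning step repeats the same pattern: build a grid with expand.grid, score each row, attach the scores, and pick the best row. A minimal sketch of that pattern as a reusable helper is shown below. `run_grid` and `toy_score` are hypothetical names introduced for illustration; `toy_score` is a made-up stand-in for "run lgb.cv and return the AUC", so the block runs without lightgbm or the competition data.

```r
# Generic helper: evaluate score_fn on every row of a parameter grid.
# score_fn receives one row of the grid as a named list.
run_grid <- function(grid, score_fn) {
    perf <- numeric(nrow(grid))
    for (i in seq_len(nrow(grid))) {
        perf[i] <- score_fn(as.list(grid[i, , drop = FALSE]))
    }
    grid$perf <- perf
    grid
}

# Toy scoring function with a known maximum at num_leaves = 200 (made up)
toy_score <- function(p) 0.83 - ((p$num_leaves - 200) / 1000)^2

grid_toy <- expand.grid(
    learning_rate = .125,
    num_leaves = seq(50, 800, 50)
)
res <- run_grid(grid_toy, toy_score)

# Row with the highest score
best_row <- res[which.max(res$perf), ]
print(best_row)
```

In the article's setting, the scoring function would wrap the lgb.Dataset and lgb.cv calls; keeping that in one place avoids copying the loop for each parameter.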

4. Tune the min_data_in_leaf parameter

grid_search <- expand.grid(
    learning_rate = .125,
    num_leaves = 650,
    min_data_in_leaf = 2 ^ (1:7)
)
perf_min_data_in_leaf_1 <- numeric(length = nrow(grid_search))
for(i in 1:nrow(grid_search)){
    lgb_weight <- (lgb_tr$TARGET * 4 + 1) / sum(lgb_tr$TARGET * 4 + 1)

    lgb_train <- lgb.Dataset(
        data = data.matrix(lgb_tr[, 1:148]),
        label = lgb_tr$TARGET,
        free_raw_data = FALSE,
        weight = lgb_weight
    )

    # parameters
    params <- list(
        objective = 'binary',
        metric = 'auc',
        learning_rate = grid_search[i, 'learning_rate'],
        num_leaves = grid_search[i, 'num_leaves'],
        min_data_in_leaf = grid_search[i, 'min_data_in_leaf'],
        num_threads = 2
    )
    # cross-validation
    lgb_tr_mod <- lgb.cv(
        params,
        data = lgb_train,
        nrounds = 300,
        stratified = TRUE,
        nfold = 10,
        early_stopping_rounds = 10
    )
    auc_path <- unlist(lgb_tr_mod$record_evals$valid$auc$eval)
    perf_min_data_in_leaf_1[i] <- auc_path[length(auc_path)]
}
grid_search$perf <- perf_min_data_in_leaf_1
ggplot(grid_search, aes(x = min_data_in_leaf, y = perf)) +
    geom_point() +
    geom_smooth()

The plot shows that AUC is not sensitive to min_data_in_leaf, so this parameter is left unchanged.

5. Tune the max_bin parameter

grid_search <- expand.grid(
    learning_rate = .125,
    num_leaves = 650,
    max_bin = 2 ^ (5:10)
)
perf_max_bin_1 <- numeric(length = nrow(grid_search))
for(i in 1:nrow(grid_search)){
    lgb_weight <- (lgb_tr$TARGET * 4 + 1) / sum(lgb_tr$TARGET * 4 + 1)

    lgb_train <- lgb.Dataset(
        data = data.matrix(lgb_tr[, 1:148]),
        label = lgb_tr$TARGET,
        free_raw_data = FALSE,
        weight = lgb_weight
    )

    # parameters
    params <- list(
        objective = 'binary',
        metric = 'auc',
        learning_rate = grid_search[i, 'learning_rate'],
        num_leaves = grid_search[i, 'num_leaves'],
        max_bin = grid_search[i, 'max_bin'],
        num_threads = 2
    )
    # cross-validation
    lgb_tr_mod <- lgb.cv(
        params,
        data = lgb_train,
        nrounds = 300,
        stratified = TRUE,
        nfold = 10,
        early_stopping_rounds = 10
    )
    auc_path <- unlist(lgb_tr_mod$record_evals$valid$auc$eval)
    perf_max_bin_1[i] <- auc_path[length(auc_path)]
}
grid_search$perf <- perf_max_bin_1
ggplot(grid_search, aes(x = max_bin, y = perf)) +
    geom_point() +
    geom_smooth()

The plot shows that AUC peaks at max_bin = 2^10, so max_bin needs to be fine-tuned further.

6. Fine-tune the max_bin parameter

grid_search <- expand.grid(
    learning_rate = .125,
    num_leaves = 650,
    max_bin = 100 * (6:15)
)
perf_max_bin_2 <- numeric(length = nrow(grid_search))
for(i in 1:nrow(grid_search)){
    lgb_weight <- (lgb_tr$TARGET * 4 + 1) / sum(lgb_tr$TARGET * 4 + 1)

    lgb_train <- lgb.Dataset(
        data = data.matrix(lgb_tr[, 1:148]),
        label = lgb_tr$TARGET,
        free_raw_data = FALSE,
        weight = lgb_weight
    )

    # parameters
    params <- list(
        objective = 'binary',
        metric = 'auc',
        learning_rate = grid_search[i, 'learning_rate'],
        num_leaves = grid_search[i, 'num_leaves'],
        max_bin = grid_search[i, 'max_bin'],
        num_threads = 2
    )
    # cross-validation
    lgb_tr_mod <- lgb.cv(
        params,
        data = lgb_train,
        nrounds = 300,
        stratified = TRUE,
        nfold = 10,
        early_stopping_rounds = 10
    )
    auc_path <- unlist(lgb_tr_mod$record_evals$valid$auc$eval)
    perf_max_bin_2[i] <- auc_path[length(auc_path)]
}
grid_search$perf <- perf_max_bin_2
ggplot(grid_search, aes(x = max_bin, y = perf)) +
    geom_point() +
    geom_smooth()

The plot shows that AUC peaks at max_bin = 1000.

7. Tune the min_data_in_bin parameter

grid_search <- expand.grid(
    learning_rate = .125,
    num_leaves = 650,
    max_bin = 1000,
    min_data_in_bin = 2 ^ (1:9)
)
perf_min_data_in_bin_1 <- numeric(length = nrow(grid_search))
for(i in 1:nrow(grid_search)){
    lgb_weight <- (lgb_tr$TARGET * 4 + 1) / sum(lgb_tr$TARGET * 4 + 1)

    lgb_train <- lgb.Dataset(
        data = data.matrix(lgb_tr[, 1:148]),
        label = lgb_tr$TARGET,
        free_raw_data = FALSE,
        weight = lgb_weight
    )

    # parameters
    params <- list(
        objective = 'binary',
        metric = 'auc',
        learning_rate = grid_search[i, 'learning_rate'],
        num_leaves = grid_search[i, 'num_leaves'],
        max_bin = grid_search[i, 'max_bin'],
        min_data_in_bin = grid_search[i, 'min_data_in_bin'],
        num_threads = 2
    )
    # cross-validation
    lgb_tr_mod <- lgb.cv(
        params,
        data = lgb_train,
        nrounds = 300,
        stratified = TRUE,
        nfold = 10,
        early_stopping_rounds = 10
    )
    auc_path <- unlist(lgb_tr_mod$record_evals$valid$auc$eval)
    perf_min_data_in_bin_1[i] <- auc_path[length(auc_path)]
}
grid_search$perf <- perf_min_data_in_bin_1
ggplot(grid_search, aes(x = min_data_in_bin, y = perf)) +
    geom_point() +
    geom_smooth()

The plot shows that AUC peaks at min_data_in_bin = 8, but the differences are extremely small, so no further adjustment is made.

8. Tune the feature_fraction parameter

grid_search <- expand.grid(
    learning_rate = .125,
    num_leaves = 650,
    max_bin = 1000,
    min_data_in_bin = 8,
    feature_fraction = seq(.5, 1, .02)
)
perf_feature_fraction_1 <- numeric(length = nrow(grid_search))
for(i in 1:nrow(grid_search)){
    lgb_weight <- (lgb_tr$TARGET * 4 + 1) / sum(lgb_tr$TARGET * 4 + 1)

    lgb_train <- lgb.Dataset(
        data = data.matrix(lgb_tr[, 1:148]),
        label = lgb_tr$TARGET,
        free_raw_data = FALSE,
        weight = lgb_weight
    )

    # parameters
    params <- list(
        objective = 'binary',
        metric = 'auc',
        learning_rate = grid_search[i, 'learning_rate'],
        num_leaves = grid_search[i, 'num_leaves'],
        max_bin = grid_search[i, 'max_bin'],
        min_data_in_bin = grid_search[i, 'min_data_in_bin'],
        feature_fraction = grid_search[i, 'feature_fraction'],
        num_threads = 2
    )
    # cross-validation
    lgb_tr_mod <- lgb.cv(
        params,
        data = lgb_train,
        nrounds = 300,
        stratified = TRUE,
        nfold = 10,
        early_stopping_rounds = 10
    )
    auc_path <- unlist(lgb_tr_mod$record_evals$valid$auc$eval)
    perf_feature_fraction_1[i] <- auc_path[length(auc_path)]
}
grid_search$perf <- perf_feature_fraction_1
ggplot(grid_search, aes(x = feature_fraction, y = perf)) +
    geom_point() +
    geom_smooth()

The plot shows that AUC peaks at feature_fraction = .62. For feature_fraction in [.60, .62] AUC is stable and performs well; from .64 onward it trends downward.

9. Tune the min_sum_hessian parameter

grid_search <- expand.grid(
    learning_rate = .125,
    num_leaves = 650,
    max_bin = 1000,
    min_data_in_bin = 8,
    feature_fraction = .62,
    min_sum_hessian = seq(0, .02, .001)
)
perf_min_sum_hessian_1 <- numeric(length = nrow(grid_search))
for(i in 1:nrow(grid_search)){
    lgb_weight <- (lgb_tr$TARGET * 4 + 1) / sum(lgb_tr$TARGET * 4 + 1)

    lgb_train <- lgb.Dataset(
        data = data.matrix(lgb_tr[, 1:148]),
        label = lgb_tr$TARGET,
        free_raw_data = FALSE,
        weight = lgb_weight
    )

    # parameters
    params <- list(
        objective = 'binary',
        metric = 'auc',
        learning_rate = grid_search[i, 'learning_rate'],
        num_leaves = grid_search[i, 'num_leaves'],
        max_bin = grid_search[i, 'max_bin'],
        min_data_in_bin = grid_search[i, 'min_data_in_bin'],
        feature_fraction = grid_search[i, 'feature_fraction'],
        min_sum_hessian = grid_search[i, 'min_sum_hessian'],
        num_threads = 2
    )
    # cross-validation
    lgb_tr_mod <- lgb.cv(
        params,
        data = lgb_train,
        nrounds = 300,
        stratified = TRUE,
        nfold = 10,
        early_stopping_rounds = 10
    )
    auc_path <- unlist(lgb_tr_mod$record_evals$valid$auc$eval)
    perf_min_sum_hessian_1[i] <- auc_path[length(auc_path)]
}
grid_search$perf <- perf_min_sum_hessian_1
ggplot(grid_search, aes(x = min_sum_hessian, y = perf)) +
    geom_point() +
    geom_smooth()

The plot shows that AUC peaks at min_sum_hessian = 0.005. A value in the range [0.002, 0.005] is recommended; beyond 0.005 AUC trends downward.

10. Tune the lambda parameters

grid_search <- expand.grid(
    learning_rate = .125,
    num_leaves = 650,
    max_bin = 1000,
    min_data_in_bin = 8,
    feature_fraction = .62,
    min_sum_hessian = .005,
    lambda_l1 = seq(0, .01, .002),
    lambda_l2 = seq(0, .01, .002)
)
perf_lamda_1 <- numeric(length = nrow(grid_search))
for(i in 1:nrow(grid_search)){
    lgb_weight <- (lgb_tr$TARGET * 4 + 1) / sum(lgb_tr$TARGET * 4 + 1)

    lgb_train <- lgb.Dataset(
        data = data.matrix(lgb_tr[, 1:148]),
        label = lgb_tr$TARGET,
        free_raw_data = FALSE,
        weight = lgb_weight
    )

    # parameters
    params <- list(
        objective = 'binary',
        metric = 'auc',
        learning_rate = grid_search[i, 'learning_rate'],
        num_leaves = grid_search[i, 'num_leaves'],
        max_bin = grid_search[i, 'max_bin'],
        min_data_in_bin = grid_search[i, 'min_data_in_bin'],
        feature_fraction = grid_search[i, 'feature_fraction'],
        min_sum_hessian = grid_search[i, 'min_sum_hessian'],
        lambda_l1 = grid_search[i, 'lambda_l1'],
        lambda_l2 = grid_search[i, 'lambda_l2'],
        num_threads = 2
    )
    # cross-validation
    lgb_tr_mod <- lgb.cv(
        params,
        data = lgb_train,
        nrounds = 300,
        stratified = TRUE,
        nfold = 10,
        early_stopping_rounds = 10
    )
    auc_path <- unlist(lgb_tr_mod$record_evals$valid$auc$eval)
    perf_lamda_1[i] <- auc_path[length(auc_path)]
}
grid_search$perf <- perf_lamda_1
ggplot(data = grid_search, aes(x = lambda_l1, y = perf)) +
    geom_point() +
    facet_wrap(~ lambda_l2, nrow = 5)

The plot suggests lambda_l1 = 0 and lambda_l2 = 0.

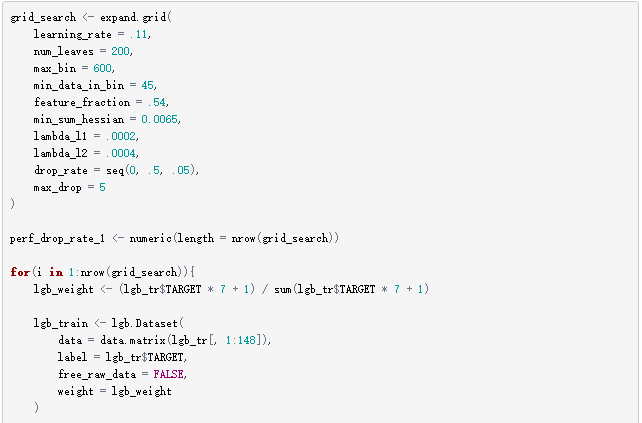
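Unlike the other steps, the lambda step searches two parameters jointly: expand.grid crosses the two sequences, so every combination is scored. The sketch below shows the size of such a grid and how the best pair can be read off. The `perf` column here is a made-up formula, not cross-validated AUC, and `grid_toy` is a hypothetical name.

```r
# Cross two regularization sequences, as in the lambda step
grid_toy <- expand.grid(
    lambda_l1 = seq(0, .01, .002),
    lambda_l2 = seq(0, .01, .002)
)

# Made-up score that decreases with both penalties (stand-in for CV AUC)
grid_toy$perf <- 0.83 - grid_toy$lambda_l1 - 0.5 * grid_toy$lambda_l2

# Number of combinations and the best-scoring pair
n_combos <- nrow(grid_toy)
best <- grid_toy[which.max(grid_toy$perf), c("lambda_l1", "lambda_l2")]

print(n_combos)
print(best)
```

The cost of a joint search grows multiplicatively with the number of values per parameter, which is one reason the other parameters are tuned one at a time.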

11. Tune the drop_rate parameter

grid_search <- expand.grid(
    learning_rate = .125,
    num_leaves = 650,
    max_bin = 1000,
    min_data_in_bin = 8,
    feature_fraction = .62,
    min_sum_hessian = .005,
    lambda_l1 = 0,
    lambda_l2 = 0,
    drop_rate = seq(0, 1, .1)
)
perf_drop_rate_1 <- numeric(length = nrow(grid_search))
for(i in 1:nrow(grid_search)){
    lgb_weight <- (lgb_tr$TARGET * 4 + 1) / sum(lgb_tr$TARGET * 4 + 1)

    lgb_train <- lgb.Dataset(
        data = data.matrix(lgb_tr[, 1:148]),
        label = lgb_tr$TARGET,
        free_raw_data = FALSE,
        weight = lgb_weight
    )

    # parameters
    ## note: drop_rate is only used when boosting = 'dart'; under the default gbdt it has no effect
    params <- list(
        objective = 'binary',
        metric = 'auc',
        learning_rate = grid_search[i, 'learning_rate'],
        num_leaves = grid_search[i, 'num_leaves'],
        max_bin = grid_search[i, 'max_bin'],
        min_data_in_bin = grid_search[i, 'min_data_in_bin'],
        feature_fraction = grid_search[i, 'feature_fraction'],
        min_sum_hessian = grid_search[i, 'min_sum_hessian'],
        lambda_l1 = grid_search[i, 'lambda_l1'],
        lambda_l2 = grid_search[i, 'lambda_l2'],
        drop_rate = grid_search[i, 'drop_rate'],
        num_threads = 2
    )
    # cross-validation
    lgb_tr_mod <- lgb.cv(
        params,
        data = lgb_train,
        nrounds = 300,
        stratified = TRUE,
        nfold = 10,
        early_stopping_rounds = 10
    )
    auc_path <- unlist(lgb_tr_mod$record_evals$valid$auc$eval)
    perf_drop_rate_1[i] <- auc_path[length(auc_path)]
}
grid_search$perf <- perf_drop_rate_1
ggplot(data = grid_search, aes(x = drop_rate, y = perf)) +
    geom_point() +
    geom_smooth()

The plot shows that AUC peaks at drop_rate = 0.2 and is also good at 0, .2, and .5; over [0, 1] it changes little.

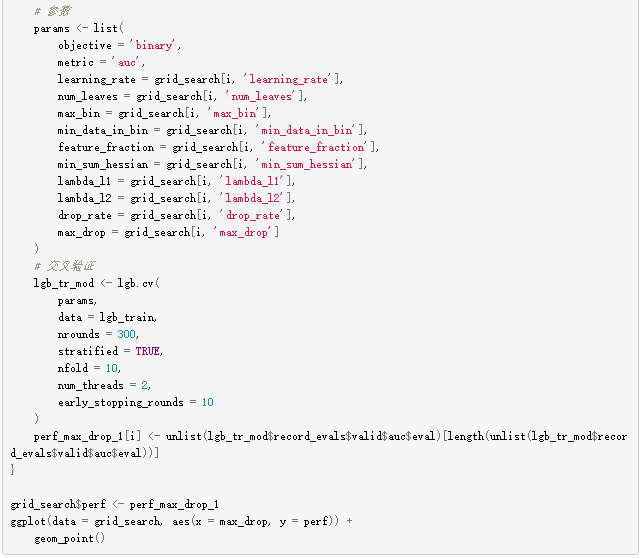

12. Tune the max_drop parameter

grid_search <- expand.grid(
    learning_rate = .125,
    num_leaves = 650,
    max_bin = 1000,
    min_data_in_bin = 8,
    feature_fraction = .62,
    min_sum_hessian = .005,
    lambda_l1 = 0,
    lambda_l2 = 0,
    drop_rate = .2,
    max_drop = seq(1, 10, 2)
)
perf_max_drop_1 <- numeric(length = nrow(grid_search))
for(i in 1:nrow(grid_search)){
    lgb_weight <- (lgb_tr$TARGET * 4 + 1) / sum(lgb_tr$TARGET * 4 + 1)

    lgb_train <- lgb.Dataset(
        data = data.matrix(lgb_tr[, 1:148]),
        label = lgb_tr$TARGET,
        free_raw_data = FALSE,
        weight = lgb_weight
    )

    # parameters
    ## note: max_drop, like drop_rate, is only used when boosting = 'dart'
    params <- list(
        objective = 'binary',
        metric = 'auc',
        learning_rate = grid_search[i, 'learning_rate'],
        num_leaves = grid_search[i, 'num_leaves'],
        max_bin = grid_search[i, 'max_bin'],
        min_data_in_bin = grid_search[i, 'min_data_in_bin'],
        feature_fraction = grid_search[i, 'feature_fraction'],
        min_sum_hessian = grid_search[i, 'min_sum_hessian'],
        lambda_l1 = grid_search[i, 'lambda_l1'],
        lambda_l2 = grid_search[i, 'lambda_l2'],
        drop_rate = grid_search[i, 'drop_rate'],
        max_drop = grid_search[i, 'max_drop'],
        num_threads = 2
    )
    # cross-validation
    lgb_tr_mod <- lgb.cv(
        params,
        data = lgb_train,
        nrounds = 300,
        stratified = TRUE,
        nfold = 10,
        early_stopping_rounds = 10
    )
    auc_path <- unlist(lgb_tr_mod$record_evals$valid$auc$eval)
    perf_max_drop_1[i] <- auc_path[length(auc_path)]
}
grid_search$perf <- perf_max_drop_1
ggplot(data = grid_search, aes(x = max_drop, y = perf)) +
    geom_point() +
    geom_smooth()

The plot shows that AUC peaks at max_drop = 5 and varies little over the range [1, 10].
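Tuning one parameter at a time while holding the others fixed is a coordinate-wise search, and a value chosen early can stop being the best once later parameters have moved. The toy sketch below illustrates why a second pass can help. Everything in it is hypothetical: `score_toy` is a made-up objective with an interaction between two parameters `a` and `b`, standing in for cross-validated AUC.

```r
# Made-up objective with an interaction between a and b (higher is better)
score_toy <- function(a, b) -(a - b)^2 - (b - 4)^2

a_grid <- 0:6
b_grid <- 0:6

# One pass: tune a with b fixed, then tune b with the new a fixed
tune_pass <- function(a, b) {
    a <- a_grid[which.max(sapply(a_grid, function(x) score_toy(x, b)))]
    b <- b_grid[which.max(sapply(b_grid, function(x) score_toy(a, x)))]
    list(a = a, b = b, score = score_toy(a, b))
}

pass1 <- tune_pass(a = 0, b = 0)        # first pass from the starting values
pass2 <- tune_pass(pass1$a, pass1$b)    # second pass from the first-pass result

print(unlist(pass1))
print(unlist(pass2))
```

In this toy case the second pass moves both parameters and improves the score, because the best `a` depends on the current `b`. Whether real parameters interact this strongly is an empirical question for each data set.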

Second tuning pass

1. Tune the weight parameter

grid_search <- expand.grid(
    learning_rate = .125,
    num_leaves = 650,
    max_bin = 1000,
    min_data_in_bin = 8,
    feature_fraction = .62,
    min_sum_hessian = .005,
    lambda_l1 = 0,
    lambda_l2 = 0,
    drop_rate = .2,
    max_drop = 5
)
## one slot per weight tried in the loop below
perf_weight_2 <- numeric(length = 20)
for(i in 1:20){
    lgb_weight <- (lgb_tr$TARGET * i + 1) / sum(lgb_tr$TARGET * i + 1)

    lgb_train <- lgb.Dataset(
        data = data.matrix(lgb_tr[, 1:148]),
        label = lgb_tr$TARGET,
        free_raw_data = FALSE,
        weight = lgb_weight
    )

    # parameters
    ## learning_rate comes from grid_search only; the extra learning_rate = .1
    ## that was also passed to lgb.cv conflicted with it and has been dropped
    params <- list(
        objective = 'binary',
        metric = 'auc',
        learning_rate = grid_search[1, 'learning_rate'],
        num_leaves = grid_search[1, 'num_leaves'],
        max_bin = grid_search[1, 'max_bin'],
        min_data_in_bin = grid_search[1, 'min_data_in_bin'],
        feature_fraction = grid_search[1, 'feature_fraction'],
        min_sum_hessian = grid_search[1, 'min_sum_hessian'],
        lambda_l1 = grid_search[1, 'lambda_l1'],
        lambda_l2 = grid_search[1, 'lambda_l2'],
        drop_rate = grid_search[1, 'drop_rate'],
        max_drop = grid_search[1, 'max_drop'],
        num_threads = 2
    )
    # cross-validation
    lgb_tr_mod <- lgb.cv(
        params,
        data = lgb_train,
        nrounds = 300,
        stratified = TRUE,
        nfold = 10,
        early_stopping_rounds = 10
    )
    auc_path <- unlist(lgb_tr_mod$record_evals$valid$auc$eval)
    perf_weight_2[i] <- auc_path[length(auc_path)]
}
library(ggplot2)
ggplot(data.frame(num = 1:length(perf_weight_2), perf = perf_weight_2), aes(x = num, y = perf)) +
    geom_point() +
    geom_smooth()

The plot shows that AUC levels off for weight >= 3 and peaks at weight = 7.

2. Tune the learning_rate parameter

grid_search <- expand.grid(
    learning_rate = seq(.05, .5, .03),
    num_leaves = 650,
    max_bin = 1000,
    min_data_in_bin = 8,
    feature_fraction = .62,
    min_sum_hessian = .005,
    lambda_l1 = 0,
    lambda_l2 = 0,
    drop_rate = .2,
    max_drop = 5
)
perf_learning_rate_1 <- numeric(length = nrow(grid_search))
for(i in 1:nrow(grid_search)){
    lgb_weight <- (lgb_tr$TARGET * 7 + 1) / sum(lgb_tr$TARGET * 7 + 1)

    lgb_train <- lgb.Dataset(
        data = data.matrix(lgb_tr[, 1:148]),
        label = lgb_tr$TARGET,
        free_raw_data = FALSE,
        weight = lgb_weight
    )

    # parameters
    params <- list(
        objective = 'binary',
        metric = 'auc',
        learning_rate = grid_search[i, 'learning_rate'],
        num_leaves = grid_search[i, 'num_leaves'],
        max_bin = grid_search[i, 'max_bin'],
        min_data_in_bin = grid_search[i, 'min_data_in_bin'],
        feature_fraction = grid_search[i, 'feature_fraction'],
        min_sum_hessian = grid_search[i, 'min_sum_hessian'],
        lambda_l1 = grid_search[i, 'lambda_l1'],
        lambda_l2 = grid_search[i, 'lambda_l2'],
        drop_rate = grid_search[i, 'drop_rate'],
        max_drop = grid_search[i, 'max_drop'],
        num_threads = 2
    )
    # cross-validation
    lgb_tr_mod <- lgb.cv(
        params,
        data = lgb_train,
        nrounds = 300,
        stratified = TRUE,
        nfold = 10,
        early_stopping_rounds = 10
    )
    auc_path <- unlist(lgb_tr_mod$record_evals$valid$auc$eval)
    perf_learning_rate_1[i] <- auc_path[length(auc_path)]
}
grid_search$perf <- perf_learning_rate_1
ggplot(data = grid_search, aes(x = learning_rate, y = perf)) +
    geom_point() +
    geom_smooth()

Conclusion: AUC is highest at learning_rate = .11.

3. Tune the num_leaves parameter

grid_search <- expand.grid(
    learning_rate = .11,
    num_leaves = seq(100, 800, 50),
    max_bin = 1000,
    min_data_in_bin = 8,
    feature_fraction = .62,
    min_sum_hessian = .005,
    lambda_l1 = 0,
    lambda_l2 = 0,
    drop_rate = .2,
    max_drop = 5
)
perf_num_leaves_1 <- numeric(length = nrow(grid_search))
for(i in 1:nrow(grid_search)){
    lgb_weight <- (lgb_tr$TARGET * 7 + 1) / sum(lgb_tr$TARGET * 7 + 1)

    lgb_train <- lgb.Dataset(
        data = data.matrix(lgb_tr[, 1:148]),
        label = lgb_tr$TARGET,
        free_raw_data = FALSE,
        weight = lgb_weight
    )

    # parameters
    params <- list(
        objective = 'binary',
        metric = 'auc',
        learning_rate = grid_search[i, 'learning_rate'],
        num_leaves = grid_search[i, 'num_leaves'],
        max_bin = grid_search[i, 'max_bin'],
        min_data_in_bin = grid_search[i, 'min_data_in_bin'],
        feature_fraction = grid_search[i, 'feature_fraction'],
        min_sum_hessian = grid_search[i, 'min_sum_hessian'],
        lambda_l1 = grid_search[i, 'lambda_l1'],
        lambda_l2 = grid_search[i, 'lambda_l2'],
        drop_rate = grid_search[i, 'drop_rate'],
        max_drop = grid_search[i, 'max_drop'],
        num_threads = 2
    )
    # cross-validation
    lgb_tr_mod <- lgb.cv(
        params,
        data = lgb_train,
        nrounds = 300,
        stratified = TRUE,
        nfold = 10,
        early_stopping_rounds = 10
    )
    auc_path <- unlist(lgb_tr_mod$record_evals$valid$auc$eval)
    perf_num_leaves_1[i] <- auc_path[length(auc_path)]
}
grid_search$perf <- perf_num_leaves_1
ggplot(data = grid_search, aes(x = num_leaves, y = perf)) +
    geom_point() +
    geom_smooth()

Conclusion: AUC is highest at num_leaves = 200.

4. Tune the max_bin parameter

grid_search <- expand.grid(
    learning_rate = .11,
    num_leaves = 200,
    max_bin = seq(100, 1500, 100),
    min_data_in_bin = 8,
    feature_fraction = .62,
    min_sum_hessian = .005,
    lambda_l1 = 0,
    lambda_l2 = 0,
    drop_rate = .2,
    max_drop = 5
)
perf_max_bin_1 <- numeric(length = nrow(grid_search))
for(i in 1:nrow(grid_search)){
    lgb_weight <- (lgb_tr$TARGET * 7 + 1) / sum(lgb_tr$TARGET * 7 + 1)

    lgb_train <- lgb.Dataset(
        data = data.matrix(lgb_tr[, 1:148]),
        label = lgb_tr$TARGET,
        free_raw_data = FALSE,
        weight = lgb_weight
    )

    # parameters
    params <- list(
        objective = 'binary',
        metric = 'auc',
        learning_rate = grid_search[i, 'learning_rate'],
        num_leaves = grid_search[i, 'num_leaves'],
        max_bin = grid_search[i, 'max_bin'],
        min_data_in_bin = grid_search[i, 'min_data_in_bin'],
        feature_fraction = grid_search[i, 'feature_fraction'],
        min_sum_hessian = grid_search[i, 'min_sum_hessian'],
        lambda_l1 = grid_search[i, 'lambda_l1'],
        lambda_l2 = grid_search[i, 'lambda_l2'],
        drop_rate = grid_search[i, 'drop_rate'],
        max_drop = grid_search[i, 'max_drop'],
        num_threads = 2
    )
    # cross-validation
    lgb_tr_mod <- lgb.cv(
        params,
        data = lgb_train,
        nrounds = 300,
        stratified = TRUE,
        nfold = 10,
        early_stopping_rounds = 10
    )
    auc_path <- unlist(lgb_tr_mod$record_evals$valid$auc$eval)
    perf_max_bin_1[i] <- auc_path[length(auc_path)]
}
grid_search$perf <- perf_max_bin_1
ggplot(data = grid_search, aes(x = max_bin, y = perf)) +
    geom_point() +
    geom_smooth()

Conclusion: AUC is highest at max_bin = 600; 400 and 800 are also acceptable values.

5. Tune the min_data_in_bin parameter

grid_search <- expand.grid(
    learning_rate = .11,
    num_leaves = 200,
    max_bin = 600,
    min_data_in_bin = seq(5, 50, 5),
    feature_fraction = .62,
    min_sum_hessian = .005,
    lambda_l1 = 0,
    lambda_l2 = 0,
    drop_rate = .2,
    max_drop = 5
)
perf_min_data_in_bin_1 <- numeric(length = nrow(grid_search))
for(i in 1:nrow(grid_search)){
    lgb_weight <- (lgb_tr$TARGET * 7 + 1) / sum(lgb_tr$TARGET * 7 + 1)

    lgb_train <- lgb.Dataset(
        data = data.matrix(lgb_tr[, 1:148]),
        label = lgb_tr$TARGET,
        free_raw_data = FALSE,
        weight = lgb_weight
    )

    # parameters
    params <- list(
        objective = 'binary',
        metric = 'auc',
        learning_rate = grid_search[i, 'learning_rate'],
        num_leaves = grid_search[i, 'num_leaves'],
        max_bin = grid_search[i, 'max_bin'],
        min_data_in_bin = grid_search[i, 'min_data_in_bin'],
        feature_fraction = grid_search[i, 'feature_fraction'],
        min_sum_hessian = grid_search[i, 'min_sum_hessian'],
        lambda_l1 = grid_search[i, 'lambda_l1'],
        lambda_l2 = grid_search[i, 'lambda_l2'],
        drop_rate = grid_search[i, 'drop_rate'],
        max_drop = grid_search[i, 'max_drop'],
        num_threads = 2
    )
    # cross-validation
    lgb_tr_mod <- lgb.cv(
        params,
        data = lgb_train,
        nrounds = 300,
        stratified = TRUE,
        nfold = 10,
        early_stopping_rounds = 10
    )
    auc_path <- unlist(lgb_tr_mod$record_evals$valid$auc$eval)
    perf_min_data_in_bin_1[i] <- auc_path[length(auc_path)]
}
grid_search$perf <- perf_min_data_in_bin_1
ggplot(data = grid_search, aes(x = min_data_in_bin, y = perf)) +
    geom_point() +
    geom_smooth()

Conclusion: AUC is highest at min_data_in_bin = 45; 25 is also an acceptable value.

6. Tuning the feature_fraction parameter

grid_search <- expand.grid(
    learning_rate = .11,
    num_leaves = 200,
    max_bin = 600,
    min_data_in_bin = 45,
    feature_fraction = seq(.5, .9, .02),
    min_sum_hessian = .005,
    lambda_l1 = 0,
    lambda_l2 = 0,
    drop_rate = .2,
    max_drop = 5
)

perf_feature_fraction_1 <- numeric(length = nrow(grid_search))

for(i in 1:nrow(grid_search)){
    # Weight positives 8:1 against negatives, normalised to sum to 1
    lgb_weight <- (lgb_tr$TARGET * 7 + 1) / sum(lgb_tr$TARGET * 7 + 1)

    lgb_train <- lgb.Dataset(
        data = data.matrix(lgb_tr[, 1:148]),
        label = lgb_tr$TARGET,
        free_raw_data = FALSE,
        weight = lgb_weight
    )

    # Parameters
    params <- list(
        objective = 'binary',
        metric = 'auc',
        learning_rate = grid_search[i, 'learning_rate'],
        num_leaves = grid_search[i, 'num_leaves'],
        max_bin = grid_search[i, 'max_bin'],
        min_data_in_bin = grid_search[i, 'min_data_in_bin'],
        feature_fraction = grid_search[i, 'feature_fraction'],
        min_sum_hessian = grid_search[i, 'min_sum_hessian'],
        lambda_l1 = grid_search[i, 'lambda_l1'],
        lambda_l2 = grid_search[i, 'lambda_l2'],
        drop_rate = grid_search[i, 'drop_rate'],
        max_drop = grid_search[i, 'max_drop']
    )

    # Cross-validation
    lgb_tr_mod <- lgb.cv(
        params,
        data = lgb_train,
        nrounds = 300,
        stratified = TRUE,
        nfold = 10,
        num_threads = 2,
        early_stopping_rounds = 10
    )

    # Store the validation AUC of the last evaluated round
    auc_history <- unlist(lgb_tr_mod$record_evals$valid$auc$eval)
    perf_feature_fraction_1[i] <- auc_history[length(auc_history)]
}

grid_search$perf <- perf_feature_fraction_1

ggplot(data = grid_search, aes(x = feature_fraction, y = perf)) +
    geom_point() +
    geom_smooth()

Conclusion: AUC is highest at feature_fraction = .54; .56 and .58 also perform well.
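Conclusions like this one are read off the plot, but the same choice can be made directly from the grid. A minimal sketch, assuming a small made-up result table (the perf values and the tolerance are hypothetical):

```r
# Hypothetical grid results; in the article these come from grid_search$perf
grid_demo <- data.frame(
    feature_fraction = c(0.50, 0.52, 0.54, 0.56, 0.58, 0.60),
    perf = c(0.8300, 0.8310, 0.8340, 0.8335, 0.8332, 0.8305)
)

# Row with the highest cross-validated AUC
best_row <- grid_demo[which.max(grid_demo$perf), ]

# Values whose AUC lies within an assumed tolerance of the best one
tolerance <- 0.001
acceptable <- grid_demo$feature_fraction[grid_demo$perf >= best_row$perf - tolerance]

best_row
acceptable
```

The tolerance makes "acceptable" explicit instead of leaving it to a visual judgement of the smoothed curve.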

7. Tuning the min_sum_hessian parameter

grid_search <- expand.grid(
    learning_rate = .11,
    num_leaves = 200,
    max_bin = 600,
    min_data_in_bin = 45,
    feature_fraction = .54,
    min_sum_hessian = seq(.001, .008, .0005),
    lambda_l1 = 0,
    lambda_l2 = 0,
    drop_rate = .2,
    max_drop = 5
)

perf_min_sum_hessian_1 <- numeric(length = nrow(grid_search))

for(i in 1:nrow(grid_search)){
    # Weight positives 8:1 against negatives, normalised to sum to 1
    lgb_weight <- (lgb_tr$TARGET * 7 + 1) / sum(lgb_tr$TARGET * 7 + 1)

    lgb_train <- lgb.Dataset(
        data = data.matrix(lgb_tr[, 1:148]),
        label = lgb_tr$TARGET,
        free_raw_data = FALSE,
        weight = lgb_weight
    )

    # Parameters
    params <- list(
        objective = 'binary',
        metric = 'auc',
        learning_rate = grid_search[i, 'learning_rate'],
        num_leaves = grid_search[i, 'num_leaves'],
        max_bin = grid_search[i, 'max_bin'],
        min_data_in_bin = grid_search[i, 'min_data_in_bin'],
        feature_fraction = grid_search[i, 'feature_fraction'],
        min_sum_hessian = grid_search[i, 'min_sum_hessian'],
        lambda_l1 = grid_search[i, 'lambda_l1'],
        lambda_l2 = grid_search[i, 'lambda_l2'],
        drop_rate = grid_search[i, 'drop_rate'],
        max_drop = grid_search[i, 'max_drop']
    )

    # Cross-validation
    lgb_tr_mod <- lgb.cv(
        params,
        data = lgb_train,
        nrounds = 300,
        stratified = TRUE,
        nfold = 10,
        num_threads = 2,
        early_stopping_rounds = 10
    )

    # Store the validation AUC of the last evaluated round
    auc_history <- unlist(lgb_tr_mod$record_evals$valid$auc$eval)
    perf_min_sum_hessian_1[i] <- auc_history[length(auc_history)]
}

grid_search$perf <- perf_min_sum_hessian_1

ggplot(data = grid_search, aes(x = min_sum_hessian, y = perf)) +
    geom_point() +
    geom_smooth()

Conclusion: AUC peaks at min_sum_hessian = 0.0065; 0.003 and 0.0055 are also acceptable.
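Every tuning step in this article repeats the same loop with a different grid. One way to avoid the repetition is a small helper that scores each grid row with a supplied function. The sketch below is only an illustration: tune_grid and toy_score are hypothetical names, and toy_score is a stand-in so the example runs without lightgbm.

```r
# Evaluate every row of a parameter grid with a user-supplied scoring function.
# `score_fun` is a hypothetical callback: it receives one grid row as a named
# list and returns a single number (for example a cross-validated AUC).
tune_grid <- function(grid, score_fun) {
    perf <- numeric(nrow(grid))
    for (i in seq_len(nrow(grid))) {
        perf[i] <- score_fun(as.list(grid[i, , drop = FALSE]))
    }
    grid$perf <- perf
    grid
}

# Stand-in scorer so the sketch runs on its own; in practice this function
# would build `params` from `p`, call lgb.cv(), and return the AUC.
toy_score <- function(p) 1 - (p$min_sum_hessian - 0.0065)^2

grid_demo <- expand.grid(
    feature_fraction = 0.54,
    min_sum_hessian = seq(0.001, 0.008, 0.0005)
)

result <- tune_grid(grid_demo, toy_score)
result[which.max(result$perf), ]
```

With such a helper, each tuning step reduces to defining a grid and calling the function once.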

8. Tuning the lambda parameters

grid_search <- expand.grid(
    learning_rate = .11,
    num_leaves = 200,
    max_bin = 600,
    min_data_in_bin = 45,
    feature_fraction = .54,
    min_sum_hessian = 0.0065,
    lambda_l1 = seq(0, .001, .0002),
    lambda_l2 = seq(0, .001, .0002),
    drop_rate = .2,
    max_drop = 5
)

perf_lambda_1 <- numeric(length = nrow(grid_search))

for(i in 1:nrow(grid_search)){
    # Weight positives 8:1 against negatives, normalised to sum to 1
    lgb_weight <- (lgb_tr$TARGET * 7 + 1) / sum(lgb_tr$TARGET * 7 + 1)

    lgb_train <- lgb.Dataset(
        data = data.matrix(lgb_tr[, 1:148]),
        label = lgb_tr$TARGET,
        free_raw_data = FALSE,
        weight = lgb_weight
    )

    # Parameters
    params <- list(
        objective = 'binary',
        metric = 'auc',
        learning_rate = grid_search[i, 'learning_rate'],
        num_leaves = grid_search[i, 'num_leaves'],
        max_bin = grid_search[i, 'max_bin'],
        min_data_in_bin = grid_search[i, 'min_data_in_bin'],
        feature_fraction = grid_search[i, 'feature_fraction'],
        min_sum_hessian = grid_search[i, 'min_sum_hessian'],
        lambda_l1 = grid_search[i, 'lambda_l1'],
        lambda_l2 = grid_search[i, 'lambda_l2'],
        drop_rate = grid_search[i, 'drop_rate'],
        max_drop = grid_search[i, 'max_drop']
    )

    # Cross-validation
    lgb_tr_mod <- lgb.cv(
        params,
        data = lgb_train,
        nrounds = 300,
        stratified = TRUE,
        nfold = 10,
        num_threads = 2,
        early_stopping_rounds = 10
    )

    # Store the validation AUC of the last evaluated round
    auc_history <- unlist(lgb_tr_mod$record_evals$valid$auc$eval)
    perf_lambda_1[i] <- auc_history[length(auc_history)]
}

grid_search$perf <- perf_lambda_1

# One panel per lambda_l2 value, lambda_l1 on the x axis
ggplot(data = grid_search, aes(x = lambda_l1, y = perf)) +
    geom_point() +
    facet_wrap(~ lambda_l2, nrow = 5)

Conclusion: AUC is negatively correlated with lambda overall; we take lambda_l1 = .0002 and lambda_l2 = .0004.

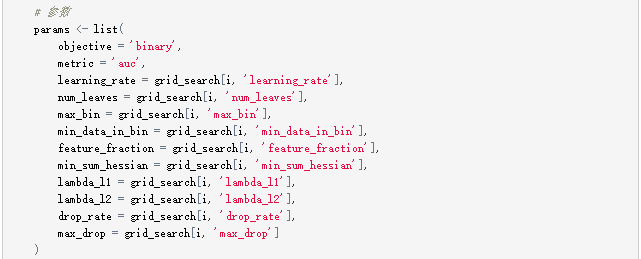

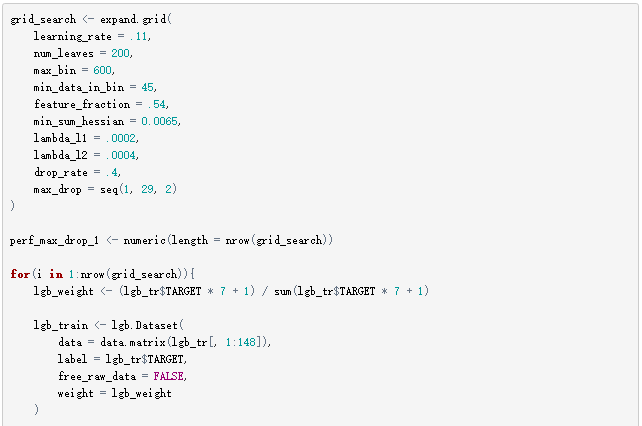
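Unlike the earlier steps, the lambda search varies two parameters at once, so expand.grid() crosses the two sequences. With six values each, that gives 36 combinations, and each one is evaluated with 10-fold cross-validation. A minimal sketch of the crossing and of a tabular view of the results (the perf values are made up to stand in for perf_lambda_1):

```r
lambda_grid <- expand.grid(
    lambda_l1 = seq(0, .001, .0002),
    lambda_l2 = seq(0, .001, .0002)
)
nrow(lambda_grid)  # number of (lambda_l1, lambda_l2) combinations

# Hypothetical scores standing in for perf_lambda_1
lambda_grid$perf <- 0.83 - lambda_grid$lambda_l1 - lambda_grid$lambda_l2

# Rows are lambda_l1 values, columns are lambda_l2 values; each cell holds
# exactly one grid row, so the "sum" in xtabs() is just that row's score
perf_matrix <- xtabs(perf ~ lambda_l1 + lambda_l2, data = lambda_grid)
perf_matrix
```

A table like this complements the faceted plot when the differences between panels are small.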

9. Tuning the drop_rate parameter

Conclusion: AUC is highest at drop_rate = .4; .15 and .25 are acceptable.

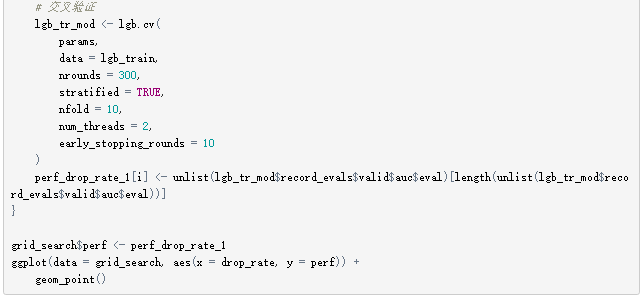
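In LightGBM, drop_rate and max_drop are options of the DART booster and are not used by the default gbdt boosting. The parameter lists in this article do not set a boosting type, so a minimal sketch of a list in which these two settings would take effect might look like the following. Adding boosting = 'dart' is an assumption about the intent, not something the original code does.

```r
params_dart <- list(
    objective = 'binary',
    metric = 'auc',
    boosting = 'dart',  # assumption: enables the DART-specific options below
    drop_rate = .4,     # fraction of existing trees dropped in a dropout step
    max_drop = 14       # upper bound on trees dropped per iteration
)

str(params_dart)
```

Whether DART improves AUC on this dataset would need to be checked with the same cross-validation procedure used for the other parameters.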

10. Tuning the max_drop parameter

Conclusion: max_drop = 14 is the value carried into the final model.

Prediction

1. Weights

lgb_weight <- (lgb_tr$TARGET * 7 + 1) / sum(lgb_tr$TARGET * 7 + 1)
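The weight formula gives every positive case (TARGET = 1, the minority class) a raw weight of 8 and every negative case a raw weight of 1, then divides by the total so the weights sum to 1. A small worked example on made-up labels:

```r
# Hypothetical labels: 1 = minority class, 0 = majority class
target <- c(0, 0, 0, 1, 0, 1)

raw_weight <- target * 7 + 1            # 8 for positives, 1 for negatives
weight <- raw_weight / sum(raw_weight)  # normalise so the weights sum to 1

# Relative weight of a positive case compared with a negative case
weight[target == 1][1] / weight[target == 0][1]
```

The factor 7 is the author's choice; it sets how strongly the minority class is emphasised during training.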

2. Training dataset

lgb_train <- lgb.Dataset(
    data = data.matrix(lgb_tr[, 1:148]),
    label = lgb_tr$TARGET,
    free_raw_data = FALSE,
    weight = lgb_weight
)

3. Training

# Parameters (objective and metric set as in the tuning runs)
params <- list(
    objective = 'binary',
    metric = 'auc',
    learning_rate = .11,
    num_leaves = 200,
    max_bin = 600,
    min_data_in_bin = 45,
    feature_fraction = .54,
    min_sum_hessian = 0.0065,
    lambda_l1 = .0002,
    lambda_l2 = .0004,
    drop_rate = .4,
    max_drop = 14
)

# Model
lgb_mod <- lightgbm(
    params = params,
    data = lgb_train,
    nrounds = 300,
    early_stopping_rounds = 10,
    num_threads = 2
)

# Prediction
lgb.pred <- predict(lgb_mod, data.matrix(lgb_te))
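The test set has no labels, so these predictions cannot be scored locally. If a labelled hold-out split is set aside (an assumption; the original workflow does not do this), AUC can be computed from predicted scores with the rank-sum identity. A minimal base-R sketch, where auc_rank is a hypothetical helper and the data are made up:

```r
# AUC via the rank-sum (Mann-Whitney) identity; ties receive average ranks
auc_rank <- function(scores, labels) {
    n_pos <- sum(labels == 1)
    n_neg <- sum(labels == 0)
    r <- rank(scores)
    (sum(r[labels == 1]) - n_pos * (n_pos + 1) / 2) / (n_pos * n_neg)
}

# Hypothetical hold-out labels and predicted probabilities
labels <- c(0, 0, 1, 0, 1, 1)
scores <- c(0.10, 0.40, 0.35, 0.20, 0.80, 0.70)

# 8 of the 9 positive-negative pairs are ordered correctly
auc_rank(scores, labels)
```

AUC depends only on the ordering of the scores, which is why the predicted probabilities do not need to be calibrated for this competition metric.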

4. Results

lgb.pred2 <- matrix(unlist(lgb.pred), ncol = 1)
lgb.pred3 <- data.frame(lgb.pred2)

5. Output

write.csv(lgb.pred3, "C:/Users/Administrator/Documents/kaggle/scs_lgb/lgb.pred1_tr.csv")
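By default write.csv() also writes the row names as an extra first column, and the file above contains only the predictions. Assuming the competition expects a two-column file with ID and TARGET (an assumption based on the usual Kaggle layout; check the sample submission file), a sketch of building such a file could look like this. The IDs, predictions and output path are hypothetical.

```r
# Hypothetical IDs and predictions standing in for the test-set ID column
# and lgb.pred
test_id <- c(2L, 5L, 6L)
pred <- c(0.03, 0.41, 0.07)

submission <- data.frame(ID = test_id, TARGET = pred)

out_file <- file.path(tempdir(), "submission.csv")  # illustrative path
write.csv(submission, out_file, row.names = FALSE)  # no row-name column

read.csv(out_file)
```

Note that the ID column must be kept aside before the test data are reduced to the model's feature columns.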

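Note: some advice for readers who are still students:

1. When you study machine learning algorithms at school, the test datasets are usually small, so you can try most algorithms and most R functions. To test random forests, for example, you can use the randomForest package, and if the data grows somewhat you can set up parallel computation. Once the data reaches the GB scale, however, the randomForest package cannot cope even when run in parallel, and memory overflows; in that case, functions from the professional edition of R are recommended.

2. Study at school focuses mainly on theory, and the test data is usually fairly clean; real-world data tends to be structurally more complex.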
