How to Compute a Deep Learning Model's Parameter Count and Inference Speed

Hi everyone, I'm DASOU.

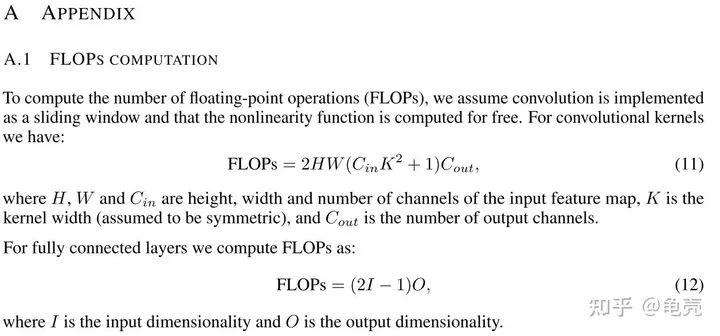

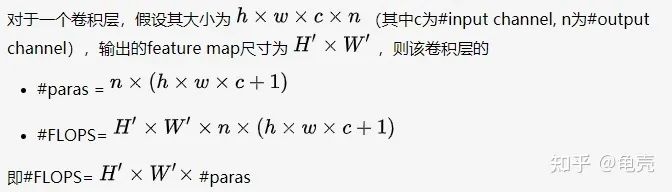

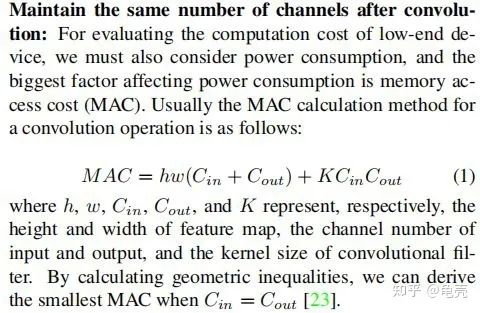

1. Computing FLOPs and Params

1.1 Concepts

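FLOPs formulas (K_h, K_w: kernel height and width; C_in, C_out: number of input and output channels; H_out, W_out: height and width of the output feature map):

Convolutional layer: (K_h * K_w * C_in * C_out) * (H_out * W_out)

Fully connected layer: C_in * C_out

Strictly speaking, these formulas count multiply-accumulate operations (MACs) and ignore the bias. If multiplications and additions are counted separately, the FLOPs come out roughly twice as large.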
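Below is a small pure-Python sketch that checks the two FLOPs formulas with concrete numbers. It counts MACs and ignores the bias, as the formulas do. It also assumes a standard (non-grouped, dilation 1) convolution and derives H_out/W_out with the usual output-size rule. The helper names are illustrative and not taken from any library.

```python
def conv_out_size(size_in: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Spatial output size of a convolution with dilation 1."""
    return (size_in + 2 * padding - kernel) // stride + 1


def conv2d_macs(k_h: int, k_w: int, c_in: int, c_out: int, h_out: int, w_out: int) -> int:
    """(K_h * K_w * C_in * C_out) * (H_out * W_out), bias ignored."""
    return (k_h * k_w * c_in * c_out) * (h_out * w_out)


def linear_macs(c_in: int, c_out: int) -> int:
    """C_in * C_out for a single input sample."""
    return c_in * c_out


# 3x3 conv, 3 -> 64 channels, stride 1, padding 1, on a 224x224 input
h_out = conv_out_size(224, kernel=3, stride=1, padding=1)  # 224
w_out = conv_out_size(224, kernel=3, stride=1, padding=1)  # 224
print(conv2d_macs(3, 3, 3, 64, h_out, w_out))  # 86704128, about 0.087 G
print(linear_macs(2048, 1000))                 # 2048000
```

The cost grows with the output resolution (H_out * W_out). This is why the same network costs more FLOPs on larger input images.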

Parameter count formulas:

Convolutional layer: (K_h * K_w * C_in) * C_out

Fully connected layer: C_in * C_out

If a layer has a bias, add C_out more parameters.
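The parameter formulas can be sketched the same way. The optional `bias` flag adds the C_out bias terms; in PyTorch, `nn.Conv2d` and `nn.Linear` have a bias by default. The function names are illustrative.

```python
def conv2d_params(k_h: int, k_w: int, c_in: int, c_out: int, bias: bool = False) -> int:
    """(K_h * K_w * C_in) * C_out weights, plus C_out if the layer has a bias."""
    return (k_h * k_w * c_in) * c_out + (c_out if bias else 0)


def linear_params(c_in: int, c_out: int, bias: bool = False) -> int:
    """C_in * C_out weights, plus C_out if the layer has a bias."""
    return c_in * c_out + (c_out if bias else 0)


print(conv2d_params(3, 3, 3, 64))             # 1728
print(conv2d_params(3, 3, 3, 64, bias=True))  # 1792
print(linear_params(2048, 1000, bias=True))   # 2049000
```

Unlike the FLOPs formula, the input resolution does not appear here. A conv layer has the same number of parameters whatever the size of the image it processes.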

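Notes:

1. Params depend only on the network structure you define and have nothing to do with the operations in forward. Once the structure is defined, the parameter count is fixed. FLOPs, in contrast, depend on the computation each layer performs. If forward calls the same layer (a module with the same name) several times, no parameters are added, but the layer's computation runs on every call. Whether a profiling tool counts it once or once per call depends on the tool.

2. Model_size ≈ 4 * params: with float32 weights (4 bytes per parameter), the model size in bytes is roughly 4 times the parameter count.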
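A minimal sketch of the size estimate. The factor 4 corresponds to float32. The float16 (2 bytes) and int8 (1 byte) entries are standard widths added for comparison. The ResNet-50 figure of about 25.6M parameters is an approximation. Real checkpoint files are somewhat larger because they also store buffers (such as BatchNorm running statistics) and metadata.

```python
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "int8": 1}


def model_size_mb(num_params: int, dtype: str = "float32") -> float:
    """Approximate weight storage in megabytes (1 MB = 10**6 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e6


# ResNet-50 has roughly 25.6M parameters
print(model_size_mb(25_600_000))             # 102.4
print(model_size_mb(25_600_000, "float16"))  # 51.2
```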


1.2 How to compute them

```python
# code by zzg-2020-05-19
# pip install thop
import torch
from thop import profile

from models.yolo_nano import YOLONano  # the project's own model definition

# the defined network model
net = YOLONano(num_classes=20, image_size=416)
# input_shape of the model, batch_size = 1
dummy_input = torch.randn(1, 3, 416, 416)

# thop counts multiply-accumulates (MACs), matching the formulas in 1.1
flops, params = profile(net, inputs=(dummy_input,))

print("FLOPs =", str(flops / 1e9) + "G")
print("params =", str(params / 1e6) + "M")
```

In PyTorch, the torchstat library (pip install torchstat) can also report model information, including the total parameter count (params), MAdd, estimated memory usage and FLOPs:

```python
from torchstat import stat
from torchvision.models import resnet50

model = resnet50()
# input size is (channels, height, width), without the batch dimension
stat(model, (3, 224, 224))
```

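Another option is ptflops (pip install ptflops), which can also print per-layer statistics:

```python
from ptflops import get_model_complexity_info
from torchvision.models import resnet50

model = resnet50()
# as_strings=True returns human-readable strings; print_per_layer_stat=True prints a per-layer breakdown.
# Like thop, ptflops reports multiply-accumulates (MACs).
flops, params = get_model_complexity_info(model, (3, 224, 224), as_strings=True, print_per_layer_stat=True)

print('Flops:  ' + flops)
print('Params: ' + params)
```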

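2. Measuring model inference speed

2.1 Measuring inference latency correctly

GPU calls in PyTorch are asynchronous, so a CPU clock alone can stop timing before the GPU has finished its work. The code below therefore does three things. It warms up the GPU first, times each forward pass with CUDA events, and calls torch.cuda.synchronize() before reading the elapsed time.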

```python
import numpy as np
import torch
from efficientnet_pytorch import EfficientNet  # pip install efficientnet_pytorch

model = EfficientNet.from_pretrained('efficientnet-b0')
device = torch.device("cuda")
model.to(device)
model.eval()  # inference behaviour for dropout / batch norm
dummy_input = torch.randn(1, 3, 224, 224, dtype=torch.float).to(device)

# CUDA events record timestamps on the GPU stream
starter, ender = torch.cuda.Event(enable_timing=True), torch.cuda.Event(enable_timing=True)
repetitions = 300
timings = np.zeros((repetitions, 1))

with torch.no_grad():
    # GPU WARM-UP: the first calls pay one-off initialization costs
    for _ in range(10):
        _ = model(dummy_input)
    # MEASURE PERFORMANCE
    for rep in range(repetitions):
        starter.record()
        _ = model(dummy_input)
        ender.record()
        # WAIT FOR GPU SYNC before reading the timer
        torch.cuda.synchronize()
        curr_time = starter.elapsed_time(ender)  # milliseconds
        timings[rep] = curr_time

mean_syn = np.sum(timings) / repetitions
std_syn = np.std(timings)
mean_fps = 1000. / mean_syn  # batch size 1, so inputs per second
print(' * Mean@1 {mean_syn:.3f}ms Std@1 {std_syn:.3f}ms FPS@1 {mean_fps:.2f}'.format(
    mean_syn=mean_syn, std_syn=std_syn, mean_fps=mean_fps))
```
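CUDA events only apply to the GPU. On the CPU, calls are synchronous, so the same warm-up / repeat / average pattern can be sketched with the standard library's time.perf_counter. The `benchmark` helper and the stand-in workload are hypothetical and only show the pattern. For a real model you would pass something like `lambda: model(dummy_input)` and call it inside `torch.no_grad()`.

```python
import statistics
import time
from typing import Callable, Dict


def benchmark(fn: Callable[[], object], warmup: int = 10, repetitions: int = 300) -> Dict[str, float]:
    """Time fn() after a warm-up phase; returns latency statistics in milliseconds."""
    for _ in range(warmup):  # warm-up calls are not recorded
        fn()
    timings_ms = []
    for _ in range(repetitions):
        start = time.perf_counter()
        fn()
        timings_ms.append((time.perf_counter() - start) * 1000.0)
    mean_ms = statistics.mean(timings_ms)
    return {
        "mean_ms": mean_ms,
        "std_ms": statistics.pstdev(timings_ms),  # population std, like np.std
        "fps": 1000.0 / mean_ms,  # one input per call
    }


# Hypothetical stand-in workload in place of a model call
stats = benchmark(lambda: sum(i * i for i in range(10_000)), warmup=5, repetitions=50)
print(f"mean {stats['mean_ms']:.3f} ms, std {stats['std_ms']:.3f} ms, FPS {stats['fps']:.1f}")
```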

2.2 Measuring model throughput

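Throughput = (number of batches × batch size) / (total time in seconds)

To make full use of the GPU's parallelism, the batch size is typically set to the largest one that fits in GPU memory (optimal_batch_size in the code).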
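The throughput formula as a small function. The timing numbers in the example are made up for illustration and are not a measurement.

```python
from typing import Sequence


def throughput(batch_times_s: Sequence[float], batch_size: int) -> float:
    """(number of batches * batch size) / (total time in seconds)."""
    total_time = sum(batch_times_s)
    return len(batch_times_s) * batch_size / total_time


# 100 batches of 64 samples, each taking 0.05 s: 6400 samples in 5 s
print(throughput([0.05] * 100, 64))  # about 1280 samples per second
```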

```python
import torch
from efficientnet_pytorch import EfficientNet  # pip install efficientnet_pytorch

model = EfficientNet.from_pretrained('efficientnet-b0')
device = torch.device("cuda")
model.to(device)
model.eval()
# assumption: set this to the largest batch size that fits in your GPU memory
optimal_batch_size = 64
dummy_input = torch.randn(optimal_batch_size, 3, 224, 224, dtype=torch.float).to(device)

repetitions = 100
total_time = 0
with torch.no_grad():
    # warm-up runs are not timed
    for _ in range(10):
        _ = model(dummy_input)
    for rep in range(repetitions):
        starter, ender = torch.cuda.Event(enable_timing=True), torch.cuda.Event(enable_timing=True)
        starter.record()
        _ = model(dummy_input)
        ender.record()
        torch.cuda.synchronize()
        curr_time = starter.elapsed_time(ender) / 1000  # milliseconds -> seconds
        total_time += curr_time

Throughput = (repetitions * optimal_batch_size) / total_time
print('Final Throughput:', Throughput)
```
