NLP (53): Text Classification with the English RoBERTa Model in Keras


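The English RoBERTa model is a pretrained model proposed by Facebook in 2019 in the paper RoBERTa: A Robustly Optimized BERT Pretraining Approach. It was designed to fix some shortcomings of BERT, and at the time it topped the leaderboards of many NLP tasks with SOTA results. The model and code are open source and live in the fairseq project on GitHub. The English RoBERTa model was trained with the Torch framework, so its torch version is the one most commonly seen.

Of course, a torch model can also be converted to a tensorflow model. This article describes how to convert the original torch version of the English RoBERTa model to a tensorflow version, and how to use the tensorflow version in Keras for English text classification.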

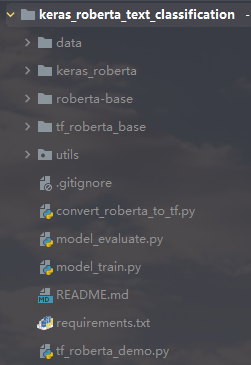

The project structure is shown in the figure below:

Project structure diagram

Model Conversion

The project first converts the original torch version of the English RoBERTa model to a tensorflow version. This part of the code is mainly based on the GitHub project keras_roberta.

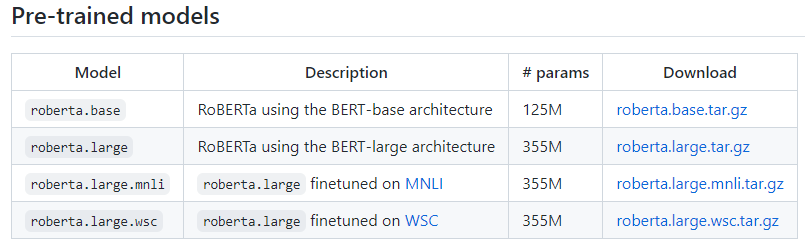

First, download the roberta base model that Facebook released in the fairseq project, available at: https://github.com/pytorch/fairseq/blob/main/examples/roberta/README.md.

Roberta model

Run the convert_roberta_to_tf.py script to convert the torch model to a tensorflow model. The code is not reproduced here; see the GitHub project address given later in this article.

On the tokenizer side, RobertaTokenizer is replaced with GPT2Tokenizer, because RobertaTokenizer inherits from GPT2Tokenizer and the two are very similar. The following code (tf_roberta_demo.py) tests the original torch model against the tensorflow model:

    import os
    import tensorflow as tf
    from keras_roberta.roberta import build_bert_model
    from keras_roberta.tokenizer import RobertaTokenizer
    from fairseq.models.roberta import RobertaModel as FairseqRobertaModel
    import numpy as np
    import argparse

    if __name__ == '__main__':
        roberta_path = 'roberta-base'
        tf_roberta_path = 'tf_roberta_base'
        tf_ckpt_name = 'tf_roberta_base.ckpt'
        vocab_path = 'keras_roberta'

        config_path = os.path.join(tf_roberta_path, 'bert_config.json')
        checkpoint_path = os.path.join(tf_roberta_path, tf_ckpt_name)
        if os.path.splitext(checkpoint_path)[-1] != '.ckpt':
            checkpoint_path += '.ckpt'

        gpt_bpe_vocab = os.path.join(vocab_path, 'encoder.json')
        gpt_bpe_merge = os.path.join(vocab_path, 'vocab.bpe')
        roberta_dict = os.path.join(roberta_path, 'dict.txt')

        tokenizer = RobertaTokenizer(gpt_bpe_vocab, gpt_bpe_merge, roberta_dict)
        model = build_bert_model(config_path, checkpoint_path, roberta=True)  # build the model and load the weights

        # encoding test
        text1 = "hello, world!"
        text2 = "This is Roberta!"
        sep = [tokenizer.sep_token]
        cls = [tokenizer.cls_token]
        # 1. first convert the text to BPE tokens with 'bpe_tokenize'
        tokens1 = cls + tokenizer.bpe_tokenize(text1) + sep
        tokens2 = sep + tokenizer.bpe_tokenize(text2) + sep
        # 2. then convert the tokens to ids
        token_ids1 = tokenizer.convert_tokens_to_ids(tokens1)
        token_ids2 = tokenizer.convert_tokens_to_ids(tokens2)
        token_ids = token_ids1 + token_ids2
        segment_ids = [0] * len(token_ids1) + [1] * len(token_ids2)
        print(token_ids)
        print(segment_ids)

        print('\n ===== tf model predicting =====\n')
        our_output = model.predict([np.array([token_ids]), np.array([segment_ids])])
        print(our_output)

        print('\n ===== torch model predicting =====\n')
        roberta = FairseqRobertaModel.from_pretrained(roberta_path)
        roberta.eval()  # disable dropout
        input_ids = roberta.encode(text1, text2).unsqueeze(0)  # batch of size 1
        print(input_ids)
        their_output = roberta.model(input_ids, features_only=True)[0]
        print(their_output)

The output is as follows:

    [0, 42891, 6, 232, 328, 2, 2, 713, 16, 1738, 102, 328, 2]
    [0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1, 1]

     ===== tf model predicting =====

    [[[-0.01123665  0.05132651 -0.02170264 ... -0.03562857 -0.02836962
       -0.00519008]
      [ 0.04382067  0.07045364 -0.00431021 ... -0.04662359 -0.10770167
        0.1121687 ]
      [ 0.06198474  0.05240346  0.11088232 ... -0.08883709 -0.02932207
       -0.12898633]
      ...
      [-0.00229368  0.045834    0.00811818 ... -0.11751424 -0.06718166
        0.04085271]
      [-0.08509324 -0.27506304 -0.02425355 ... -0.24215901 -0.15481825
        0.17167582]
      [-0.05180666  0.06384835 -0.05997407 ... -0.09398533 -0.05159672
       -0.03988626]]]

     ===== torch model predicting =====

    tensor([[    0, 42891,     6,   232,   328,     2,     2,   713,    16,  1738,
               102,   328,     2]])
    tensor([[[-0.0525,  0.0818, -0.0170,  ..., -0.0546, -0.0569, -0.0099],
             [-0.0765, -0.0568, -0.1400,  ..., -0.2612, -0.0455,  0.2975],
             [-0.0142,  0.1184,  0.0530,  ..., -0.0844,  0.0199,  0.1340],
             ...,
             [-0.0019,  0.1263, -0.0787,  ..., -0.3986, -0.0626,  0.1870],
             [ 0.0127, -0.2116,  0.0696,  ..., -0.1622, -0.1265,  0.0986],
             [-0.0473,  0.0748, -0.0419,  ..., -0.0892, -0.0595, -0.0281]]],
           grad_fn=)

As can be seen, the two produce identical token_ids when tokenizing.
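The id sequence in the output follows the pair layout `<s> A </s></s> B </s>`, with segment id 0 for the first half and 1 for the second. A minimal sketch of that assembly, assuming the two sentences have already been converted to ids (the id lists are copied from the output shown in this article; `build_pair_inputs` is a hypothetical helper, not part of keras_roberta):

```python
CLS_ID = 0  # id of the cls token, as seen in the printed token_ids
SEP_ID = 2  # id of the sep token, as seen in the printed token_ids


def build_pair_inputs(ids_a, ids_b):
    """Assemble token ids and segment ids for a sentence pair."""
    first = [CLS_ID] + ids_a + [SEP_ID]
    second = [SEP_ID] + ids_b + [SEP_ID]
    token_ids = first + second
    segment_ids = [0] * len(first) + [1] * len(second)
    return token_ids, segment_ids


# BPE ids for "hello, world!" and "This is Roberta!", taken from the article's output
token_ids, segment_ids = build_pair_inputs(
    [42891, 6, 232, 328], [713, 16, 1738, 102, 328]
)
print(token_ids)
print(segment_ids)
```

The printed lists should match the first two lines of the output shown in this article.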

English Text Classification

Next, we look at how the converted tensorflow version of the RoBERTa model performs on an English text classification dataset.

Here we use SST-2 from the GLUE benchmark. SST-2 (The Stanford Sentiment Treebank) is a single-sentence classification task made up of sentences from movie reviews together with human annotations of their sentiment. The task is to predict the sentiment of a given sentence, with two classes: positive (sample label 1) and negative (sample label 0), using only sentence-level labels. In other words, this is a sentence-level binary classification task. For details on the dataset, see https://nlp.stanford.edu/sentiment/index.html.

The SST-2 dataset has 67349 training samples, 872 validation samples, and 1820 test samples, stored in tsv format. The code for reading the data is as follows (utils/load_data.py):

    def read_model_data(file_path):
        data = []
        with open(file_path, 'r', encoding='utf-8') as f:
            lines = [_.strip() for _ in f.readlines()]
        for i, line in enumerate(lines):
            if i:  # skip the header row (i == 0)
                items = line.split('\t')
                # one-hot label: [0, 1] for positive, [1, 0] for negative
                label = [0, 1] if int(items[1]) else [1, 0]
                data.append([label, items[0]])
        return data
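To see what this loader returns, the sketch below runs the same function on a small temporary tsv file. The two sample rows and the `sentence`/`label` header are made up for illustration; they are not taken from SST-2.

```python
import os
import tempfile


def read_model_data(file_path):
    """Read a tsv file with a header row and 'text<TAB>label' lines."""
    data = []
    with open(file_path, 'r', encoding='utf-8') as f:
        lines = [_.strip() for _ in f.readlines()]
    for i, line in enumerate(lines):
        if i:  # skip the header row
            items = line.split('\t')
            label = [0, 1] if int(items[1]) else [1, 0]
            data.append([label, items[0]])
    return data


# Illustrative file content, not real SST-2 data
sample = "sentence\tlabel\na charming film\t1\ntoo dull to sit through\t0\n"
with tempfile.NamedTemporaryFile('w', suffix='.tsv', delete=False,
                                 encoding='utf-8') as f:
    f.write(sample)
    path = f.name

data = read_model_data(path)
os.remove(path)
print(data)
```

Each entry is a `[one_hot_label, sentence]` pair, which matches the two-unit softmax output used by the classifier.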

For the tokenizer we use GPT2Tokenizer. The code is as follows (utils/roberta_tokenizer.py):

    # roberta tokenizer function for a single text
    def tokenizer_encode(tokenizer, text, max_seq_length):
        sep = [tokenizer.sep_token]
        cls = [tokenizer.cls_token]
        # 1. first convert the text to BPE tokens with 'bpe_tokenize'
        tokens1 = cls + tokenizer.bpe_tokenize(text) + sep
        # 2. then convert the tokens to ids
        token_ids = tokenizer.convert_tokens_to_ids(tokens1)
        segment_ids = [0] * len(token_ids)
        pad_length = max_seq_length - len(token_ids)
        if pad_length >= 0:
            # pad both lists with 0 up to max_seq_length
            token_ids += [0] * pad_length
            segment_ids += [0] * pad_length
        else:
            # truncate to max_seq_length; this also drops the trailing sep token
            token_ids = token_ids[:max_seq_length]
            segment_ids = segment_ids[:max_seq_length]

        return token_ids, segment_ids
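The padding and truncation branches can be exercised without the real vocabulary files. The sketch below assumes a hypothetical `StubTokenizer` that splits on spaces and assigns arbitrary ids; it only mimics the three attributes and methods that `tokenizer_encode` uses and says nothing about the real BPE behaviour.

```python
class StubTokenizer:
    """Hypothetical stand-in for the real tokenizer (whitespace split, toy ids)."""
    cls_token = '<s>'
    sep_token = '</s>'

    def __init__(self):
        self.vocab = {'<s>': 0, '</s>': 2}

    def bpe_tokenize(self, text):
        return text.split()

    def convert_tokens_to_ids(self, tokens):
        # unseen tokens get a fresh id starting from 102
        return [self.vocab.setdefault(t, 100 + len(self.vocab)) for t in tokens]


def tokenizer_encode(tokenizer, text, max_seq_length):
    tokens = [tokenizer.cls_token] + tokenizer.bpe_tokenize(text) + [tokenizer.sep_token]
    token_ids = tokenizer.convert_tokens_to_ids(tokens)
    segment_ids = [0] * len(token_ids)
    pad_length = max_seq_length - len(token_ids)
    if pad_length >= 0:
        token_ids += [0] * pad_length
        segment_ids += [0] * pad_length
    else:
        token_ids = token_ids[:max_seq_length]
        segment_ids = segment_ids[:max_seq_length]
    return token_ids, segment_ids


tok = StubTokenizer()
short_ids, short_seg = tokenizer_encode(tok, "good movie", 6)
long_ids, long_seg = tokenizer_encode(tok, "a b c d e f", 4)
print(short_ids, short_seg)  # padded to length 6
print(long_ids, long_seg)    # truncated to length 4
```

In the truncated case the last id is no longer the sep id, so a `MAX_SEQ_LENGTH` that covers most sentences keeps the input format intact.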

The model is created as follows (model_train.py):

    # Build the model.
    # Excerpt: Lambda, Dense, Model, Adam, build_bert_model and the *_FILE_PATH
    # constants are imported or defined elsewhere in model_train.py.
    def create_cls_model():
        # Roberta model
        roberta_model = build_bert_model(CONFIG_FILE_PATH, CHECKPOINT_FILE_PATH, roberta=True)  # build the model and load the weights

        for layer in roberta_model.layers:
            layer.trainable = True

        cls_layer = Lambda(lambda x: x[:, 0])(roberta_model.output)    # take the vector at the [CLS] position for classification
        p = Dense(2, activation='softmax')(cls_layer)     # 2-way softmax classifier

        model = Model(roberta_model.input, p)
        model.compile(
            loss='categorical_crossentropy',
            optimizer=Adam(1e-5),   # use a sufficiently small learning rate
            metrics=['accuracy']
        )

        return model
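In equation form, the classification head and loss can be written as below. The symbols are our own notation rather than the source's: $h_{\mathrm{CLS}}$ is the encoder output at position 0, $W$ and $b$ are the weights and bias of the Dense layer, and $y$ is the one-hot label.

```latex
z = W h_{\mathrm{CLS}} + b, \qquad
p_k = \frac{\exp(z_k)}{\exp(z_0) + \exp(z_1)}, \quad k \in \{0, 1\}

\mathcal{L} = -\sum_{k \in \{0, 1\}} y_k \log p_k
```

With one-hot labels, the categorical cross-entropy reduces to $-\log p_k$ for the true class $k$.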

The model parameters are as follows:

    # model parameter configuration
    EPOCH = 10              # number of training epochs
    BATCH_SIZE = 64         # batch size
    MAX_SEQ_LENGTH = 80     # maximum sequence length

After training, the model reaches an accuracy of 0.9415 and an F1 score of 0.9415 on the validation set, which is a good result.
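For reference, accuracy and F1 can be computed from predicted and true labels as in the sketch below. The article does not say how its F1 was averaged, so this version, which treats label 1 as the positive class, is only an assumption, and the label lists are toy values rather than SST-2 predictions.

```python
def accuracy_and_f1(y_true, y_pred, positive=1):
    """Return (accuracy, binary F1 for the given positive class)."""
    pairs = list(zip(y_true, y_pred))
    correct = sum(t == p for t, p in pairs)
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return correct / len(pairs), f1


# Toy labels for illustration only
y_true = [1, 0, 1, 1, 0, 0]
y_pred = [1, 0, 0, 1, 0, 1]
acc, f1 = accuracy_and_f1(y_true, y_pred)
print(f"accuracy: {acc:.4f}, f1: {f1:.4f}")
```

On a roughly balanced validation set, accuracy and F1 tend to be close, which is consistent with the two identical values reported above.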

Model Prediction

We run the model on new samples (model_predict.py). The predictions are as follows:

Awesome movie for everyone to watch. Animation was flawless.

label: 1, prob: 0.9999607

I almost balled my eyes out 5 times. Almost. Beautiful movie, very inspiring.

label: 1, prob: 0.9999519

Not even worth it. It's a movie that's too stupid for adults, and too crappy for everyone. Skip if you're not 13, or even if you are.

label: 0, prob: 0.9999864
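The label and probability printed for each sample can be read off the two-unit softmax output. A minimal sketch, assuming the prediction script takes the index of the largest probability (model_predict.py is not shown here, so `decode_prediction` is a hypothetical helper and the first probability below is made up):

```python
def decode_prediction(probs):
    """Return (label, prob) for the most probable class."""
    label = max(range(len(probs)), key=lambda i: probs[i])
    return label, probs[label]


# Illustrative softmax output: [P(negative), P(positive)]
label, prob = decode_prediction([3.93e-05, 0.9999607])
print(f"label: {label}, prob: {prob}")
```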

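Summary

This article described how to convert the original torch version of the English RoBERTa model to a tensorflow version, and how to use the tensorflow version in Keras for English text classification.

The project code is available on GitHub at: https://github.com/percent4/keras_roberta_text_classificaiton.

Thanks for reading. If you have any questions, feel free to get in touch.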

References

fairseq: https://github.com/pytorch/fairseq

GLUE tasks: https://gluebenchmark.com/tasks

SST-2: https://nlp.stanford.edu/sentiment/index.html

keras_roberta: https://github.com/midori1/keras_roberta

Roberta paper: https://arxiv.org/pdf/1907.11692.pdf